Wenjun Huang

Cauchy-Schwarz Fairness Regularizer

Dec 10, 2025

Abstract:Group fairness in machine learning is often enforced by adding a regularizer that reduces the dependence between model predictions and sensitive attributes. However, existing regularizers are built on heterogeneous distance measures and design choices, which makes their behavior hard to reason about and their performance inconsistent across tasks. This raises a basic question: what properties make a good fairness regularizer? We address this question by first organizing existing in-process methods into three families: (i) matching prediction statistics across sensitive groups, (ii) aligning latent representations, and (iii) directly minimizing dependence between predictions and sensitive attributes. Through this lens, we identify desirable properties of the underlying distance measure, including tight generalization bounds, robustness to scale differences, and the ability to handle arbitrary prediction distributions. Motivated by these properties, we propose a Cauchy-Schwarz (CS) fairness regularizer that penalizes the empirical CS divergence between prediction distributions conditioned on sensitive groups. Under a Gaussian comparison, we show that CS divergence yields a tighter bound than Kullback-Leibler divergence, Maximum Mean Discrepancy, and the mean disparity used in Demographic Parity, and we discuss how these advantages translate to a distribution-free, kernel-based estimator that naturally extends to multiple sensitive attributes. Extensive experiments on four tabular benchmarks and one image dataset demonstrate that the proposed CS regularizer consistently improves Demographic Parity and Equal Opportunity metrics while maintaining competitive accuracy, and achieves a more stable utility-fairness trade-off across hyperparameter settings compared to prior regularizers.
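As a rough illustration of the quantity being penalized, the sketch below computes a plug-in kernel estimate of the Cauchy-Schwarz divergence, D_CS(p, q) = -log((∫pq)² / (∫p² ∫q²)), between two sets of scalar predictions, one per sensitive group. The function names, the Gaussian kernel, and the bandwidth `sigma` are assumptions made for this example; the abstract does not specify the authors' estimator or implementation.

```python
import math

def gaussian_kernel(a, b, sigma):
    # Gaussian kernel on scalars; sigma is an assumed bandwidth.
    return math.exp(-((a - b) ** 2) / (2.0 * sigma ** 2))

def mean_kernel(xs, ys, sigma):
    # Average kernel value over all pairs (x, y).
    total = sum(gaussian_kernel(x, y, sigma) for x in xs for y in ys)
    return total / (len(xs) * len(ys))

def cs_divergence(xs, ys, sigma=0.5):
    """Plug-in kernel estimate of the CS divergence between two 1-D samples."""
    within_x = mean_kernel(xs, xs, sigma)
    within_y = mean_kernel(ys, ys, sigma)
    cross = mean_kernel(xs, ys, sigma)
    return math.log(within_x) + math.log(within_y) - 2.0 * math.log(cross)

# Hypothetical predicted scores for two sensitive groups.
group0 = [0.10, 0.20, 0.30, 0.40]
group1 = [0.60, 0.70, 0.80, 0.90]

same = cs_divergence(group0, group0)      # identical samples
shifted = cs_divergence(group0, group1)   # shifted samples
```

In a training loop, a term like `cs_divergence(preds_group0, preds_group1)` would be added to the task loss with a weighting coefficient; an automatic-differentiation framework would be needed for that, which this stdlib-only sketch does not cover.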

LUNE: Efficient LLM Unlearning via LoRA Fine-Tuning with Negative Examples

Dec 08, 2025

Abstract:Large language models (LLMs) possess vast knowledge acquired from extensive training corpora, but they often cannot remove specific pieces of information when needed, which makes it hard to handle privacy, bias mitigation, and knowledge correction. Traditional model unlearning approaches require computationally expensive fine-tuning or direct weight editing, making them impractical for real-world deployment. In this work, we introduce LoRA-based Unlearning with Negative Examples (LUNE), a lightweight framework that performs negative-only unlearning by updating only low-rank adapters while freezing the backbone, thereby localizing edits and avoiding disruptive global changes. Leveraging Low-Rank Adaptation (LoRA), LUNE targets intermediate representations to suppress (or replace) requested knowledge with an order-of-magnitude lower compute and memory than full fine-tuning or direct weight editing. Extensive experiments on multiple factual unlearning tasks show that LUNE: (I) achieves effectiveness comparable to full fine-tuning and memory-editing methods, and (II) reduces computational cost by about an order of magnitude.
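To make the "low-rank adapters on a frozen backbone" idea concrete, here is a minimal sketch of a generic LoRA forward pass, y = W x + scale · B (A x), together with a parameter count. It illustrates standard LoRA only, not LUNE's unlearning objective; the matrix sizes, the `scale` value, and all function names are assumptions chosen for the example.

```python
def matvec(matrix, vector):
    # Plain matrix-vector product on nested lists.
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def lora_forward(w, a, b, x, scale):
    """Frozen weight w plus a low-rank update b @ a, scaled by `scale`."""
    base = matvec(w, x)               # frozen backbone path
    delta = matvec(b, matvec(a, x))   # trainable low-rank path
    return [y + scale * d for y, d in zip(base, delta)]

d_out, d_in, rank = 4, 6, 2
w = [[0.1 * (i + j) for j in range(d_in)] for i in range(d_out)]  # frozen
a = [[0.01 * (j + 1) for j in range(d_in)] for _ in range(rank)]  # trainable, rank x d_in
b = [[0.0] * rank for _ in range(d_out)]                          # trainable, zero-initialized
x = [1.0] * d_in

frozen_out = matvec(w, x)
adapted_out = lora_forward(w, a, b, x, scale=2.0)

# Trainable-parameter comparison for an assumed 4096 x 4096 layer at rank 8.
full_params = 4096 * 4096
lora_params = 8 * (4096 + 4096)
```

Because `b` starts at zero, the adapted layer initially reproduces the frozen layer exactly, and only `a` and `b` would be updated during fine-tuning.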

Recover-to-Forget: Gradient Reconstruction from LoRA for Efficient LLM Unlearning

Dec 08, 2025

Abstract:Unlearning in large foundation models (e.g., LLMs) is essential for enabling dynamic knowledge updates, enforcing data deletion rights, and correcting model behavior. However, existing unlearning methods often require full-model fine-tuning or access to the original training data, which limits their scalability and practicality. In this work, we introduce Recover-to-Forget (R2F), a novel framework for efficient unlearning in LLMs based on reconstructing full-model gradient directions from low-rank LoRA adapter updates. Rather than performing backpropagation through the full model, we compute gradients with respect to LoRA parameters using multiple paraphrased prompts and train a gradient decoder to approximate the corresponding full-model gradients. To ensure applicability to larger or black-box models, the decoder is trained on a proxy model and transferred to target models. We provide a theoretical analysis of cross-model generalization and demonstrate that our method achieves effective unlearning while preserving general model performance. Experimental results demonstrate that R2F offers a scalable and lightweight alternative for unlearning in pretrained LLMs without requiring full retraining or access to internal parameters.

QUILL: An Algorithm-Architecture Co-Design for Cache-Local Deformable Attention

Nov 17, 2025

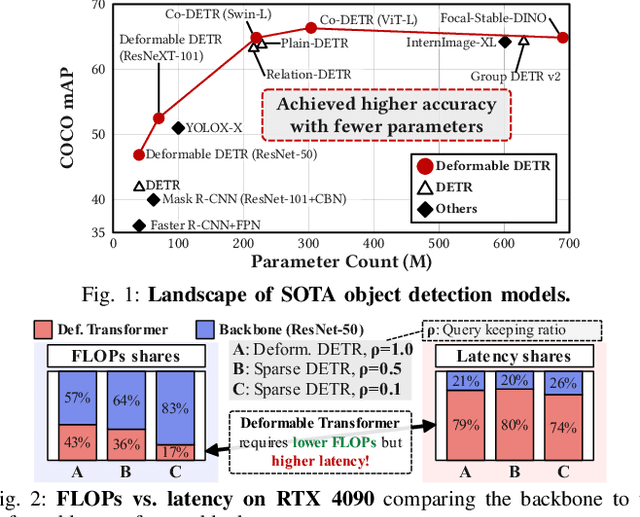

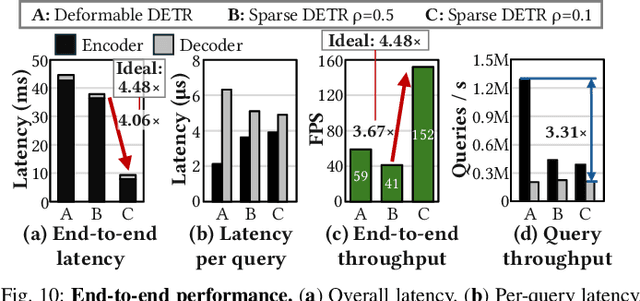

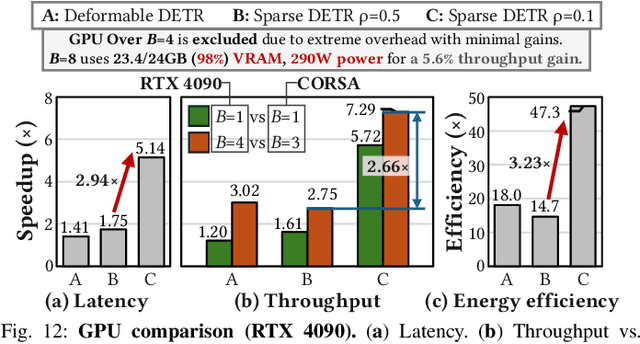

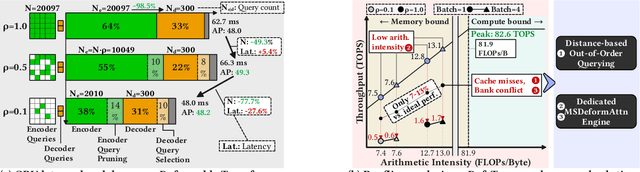

Abstract:Deformable transformers deliver state-of-the-art detection but map poorly to hardware due to irregular memory access and low arithmetic intensity. We introduce QUILL, a schedule-aware accelerator that turns deformable attention into cache-friendly, single-pass work. At its core, Distance-based Out-of-Order Querying (DOOQ) orders queries by spatial proximity; the look-ahead drives a region prefetch into an alternate buffer--forming a schedule-aware prefetch loop that overlaps memory and compute. A fused MSDeformAttn engine executes interpolation, Softmax, aggregation, and the final projection (W''m) in one pass without spilling intermediates, while small tensors are kept on-chip and surrounding dense layers run on integrated GEMMs. Implemented as RTL and evaluated end-to-end, QUILL achieves up to 7.29x higher throughput and 47.3x better energy efficiency than an RTX 4090, and exceeds prior accelerators by 3.26-9.82x in throughput and 2.01-6.07x in energy efficiency. With mixed-precision quantization, accuracy tracks FP32 within <=0.9 AP across Deformable and Sparse DETR variants. By converting sparsity into locality--and locality into utilization--QUILL delivers consistent, end-to-end speedups.
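The locality argument behind ordering queries by spatial proximity can be sketched in software. The example below uses a greedy nearest-neighbour ordering of 2-D query reference points and compares the total distance travelled between consecutive queries. The greedy rule, the function names, and the sample points are assumptions for illustration; the abstract does not describe DOOQ's actual scheduling policy or hardware implementation.

```python
import math

def distance(p, q):
    return math.hypot(p[0] - q[0], p[1] - q[1])

def order_by_proximity(points, start=0):
    """Greedy ordering: always visit the nearest unvisited point next."""
    remaining = set(range(len(points)))
    remaining.remove(start)
    order = [start]
    while remaining:
        current = points[order[-1]]
        # Ties are broken by index so the result is deterministic.
        nxt = min(remaining, key=lambda i: (distance(points[i], current), i))
        order.append(nxt)
        remaining.remove(nxt)
    return order

def path_length(points, order):
    # Total distance between consecutive queries in the given order.
    return sum(distance(points[a], points[b]) for a, b in zip(order, order[1:]))

# Hypothetical query reference points in two spatial clusters.
queries = [(0.0, 0.0), (9.0, 9.0), (1.0, 0.0), (8.0, 9.0), (1.0, 1.0)]

original_order = list(range(len(queries)))
proximity_order = order_by_proximity(queries)
```

A shorter path between consecutive queries means neighbouring queries tend to sample overlapping feature-map regions, which is the property a region prefetcher can exploit.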

Draft and Refine with Visual Experts

Nov 14, 2025

Abstract:While recent Large Vision-Language Models (LVLMs) exhibit strong multimodal reasoning abilities, they often produce ungrounded or hallucinated responses because they rely too heavily on linguistic priors instead of visual evidence. This limitation highlights the absence of a quantitative measure of how much these models actually use visual information during reasoning. We propose Draft and Refine (DnR), an agent framework driven by a question-conditioned utilization metric. The metric quantifies the model's reliance on visual evidence by first constructing a query-conditioned relevance map to localize question-specific cues and then measuring dependence through relevance-guided probabilistic masking. Guided by this metric, the DnR agent refines its initial draft using targeted feedback from external visual experts. Each expert's output (such as boxes or masks) is rendered as visual cues on the image, and the model is re-queried to select the response that yields the largest improvement in utilization. This process strengthens visual grounding without retraining or architectural changes. Experiments across VQA and captioning benchmarks show consistent accuracy gains and reduced hallucination, demonstrating that measuring visual utilization provides a principled path toward more interpretable and evidence-driven multimodal agent systems.

Enabling Group Fairness in Graph Unlearning via Bi-level Debiasing

May 14, 2025

Abstract:Graph unlearning is a crucial approach for protecting user privacy by erasing the influence of user data on trained graph models. Recent developments in graph unlearning methods have primarily focused on maintaining model prediction performance while removing user information. However, we have observed that when user information is deleted from the model, the prediction distribution across different sensitive groups often changes. Furthermore, graph models are shown to be prone to amplifying biases, making the study of fairness in graph unlearning particularly important. This raises the question: Does graph unlearning actually introduce bias? Our findings indicate that the predictions of post-unlearning models become highly correlated with sensitive attributes, confirming the introduction of bias in the graph unlearning process. To address this issue, we propose a fair graph unlearning method, FGU. To guarantee privacy, FGU trains shard models on partitioned subgraphs, unlearns the requested data from the corresponding subgraphs, and retrains the shard models on the modified subgraphs. To ensure fairness, FGU employs a bi-level debiasing process: it first enables shard-level fairness by incorporating a fairness regularizer in the shard model retraining, and then achieves global-level fairness by aligning all shard models to minimize global disparity. Our experiments demonstrate that FGU achieves superior fairness while maintaining privacy and accuracy. Additionally, FGU is robust to diverse unlearning requests, ensuring fairness and utility performance across various data distributions.

Effective Reinforcement Learning Control using Conservative Soft Actor-Critic

May 06, 2025

Abstract:Reinforcement Learning (RL) has shown great potential in complex control tasks, particularly when combined with deep neural networks within the Actor-Critic (AC) framework. However, in practical applications, balancing exploration, learning stability, and sample efficiency remains a significant challenge. Traditional methods such as Soft Actor-Critic (SAC) and Proximal Policy Optimization (PPO) address these issues by incorporating entropy or relative entropy regularization, but often face problems of instability and low sample efficiency. In this paper, we propose the Conservative Soft Actor-Critic (CSAC) algorithm, which seamlessly integrates entropy and relative entropy regularization within the AC framework. CSAC improves exploration through entropy regularization while avoiding overly aggressive policy updates with the use of relative entropy regularization. Evaluations on benchmark tasks and real-world robotic simulations demonstrate that CSAC offers significant improvements in stability and efficiency over existing methods. These findings suggest that CSAC provides strong robustness and application potential in control tasks under dynamic environments.
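The interplay of the two regularizers can be seen in a single-state, discrete-action toy problem. Maximizing E_π[Q] + α·H(π) − β·KL(π ‖ π_old) over the probability simplex has the closed-form solution π(a) ∝ π_old(a)^(β/(α+β)) · exp(Q(a)/(α+β)). The sketch below evaluates that textbook result; it is not the CSAC algorithm itself, and the coefficient names `alpha` and `beta`, the Q-values, and the old policy are assumptions made for this example.

```python
import math

def regularized_policy(q_values, old_policy, alpha, beta):
    """Maximizer of E[Q] + alpha * entropy - beta * KL(pi || old_policy).

    Assumes every entry of old_policy is strictly positive.
    """
    tau = alpha + beta
    logits = [(q + beta * math.log(p)) / tau for q, p in zip(q_values, old_policy)]
    top = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(l - top) for l in logits]
    total = sum(weights)
    return [w / total for w in weights]

def objective(policy, q_values, old_policy, alpha, beta):
    expected_q = sum(p * q for p, q in zip(policy, q_values))
    entropy = -sum(p * math.log(p) for p in policy)
    kl = sum(p * math.log(p / o) for p, o in zip(policy, old_policy))
    return expected_q + alpha * entropy - beta * kl

q_values = [1.0, 0.0, -1.0]
old_policy = [0.2, 0.3, 0.5]

# Small beta: the update moves aggressively toward the best action.
aggressive = regularized_policy(q_values, old_policy, alpha=0.1, beta=0.1)
# Large beta: the update stays close to the previous policy.
conservative = regularized_policy(q_values, old_policy, alpha=0.1, beta=100.0)
```

The entropy weight keeps the policy stochastic, while the relative-entropy weight limits how far one update can move away from the previous policy.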

Multi-Goal Dexterous Hand Manipulation using Probabilistic Model-based Reinforcement Learning

Apr 30, 2025

Abstract:This paper tackles the challenge of learning multi-goal dexterous hand manipulation tasks using model-based Reinforcement Learning. We propose Goal-Conditioned Probabilistic Model Predictive Control (GC-PMPC), which uses probabilistic neural network ensembles to describe the high-dimensional dexterous hand dynamics and introduces an asynchronous MPC policy to meet the control frequency requirements of real-world dexterous hand systems. Extensive evaluations on four simulated Shadow Hand manipulation scenarios with randomly generated goals demonstrate GC-PMPC's superior performance over state-of-the-art baselines. It successfully drives a cable-driven dexterous hand, DexHand 021, with 12 active DOFs and 5 tactile sensors, to learn to manipulate a cubic die to three goal poses within approximately 80 minutes of interaction, demonstrating exceptional learning efficiency and control performance on a cost-effective dexterous hand platform.

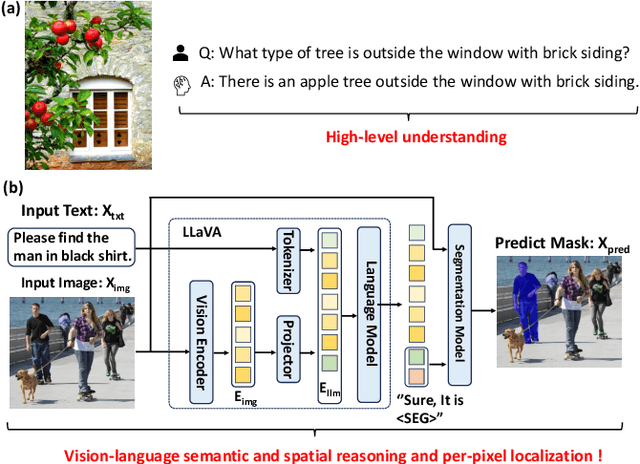

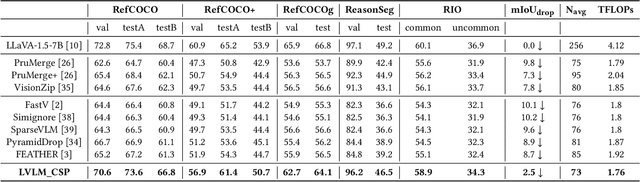

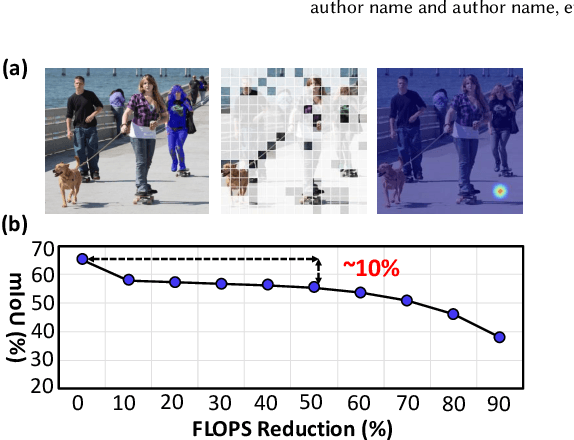

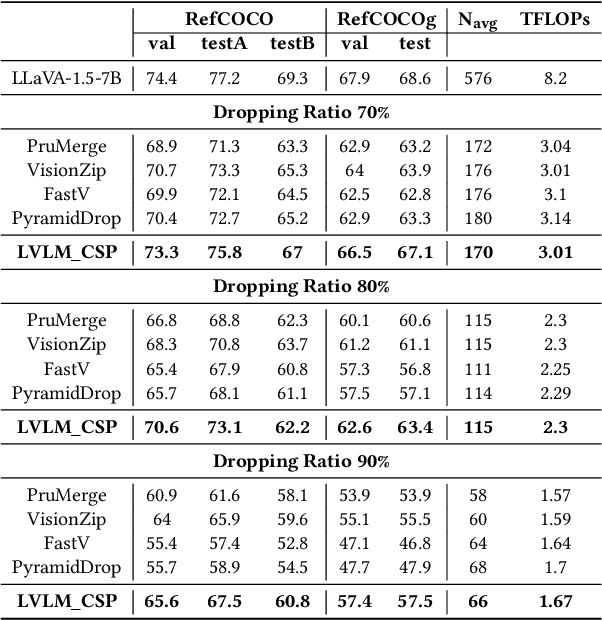

LVLM_CSP: Accelerating Large Vision Language Models via Clustering, Scattering, and Pruning for Reasoning Segmentation

Apr 15, 2025

Abstract:Large Vision Language Models (LVLMs) have been widely adopted to guide vision foundation models in performing reasoning segmentation tasks, achieving impressive performance. However, the substantial computational overhead associated with LVLMs presents a new challenge. This computational cost arises primarily from processing hundreds of image tokens. Therefore, an effective strategy to mitigate such overhead is to reduce the number of image tokens, a process known as image token pruning. Previous studies on image token pruning for LVLMs have primarily focused on high-level visual understanding tasks, such as visual question answering and image captioning. In contrast, guiding vision foundation models to generate accurate visual masks based on textual queries demands precise semantic and spatial reasoning capabilities. Consequently, pruning methods must carefully control individual image tokens throughout the LVLM reasoning process. Our empirical analysis reveals that existing methods struggle to balance reductions in computational overhead against the need to maintain high segmentation accuracy. In this work, we propose LVLM_CSP, a novel training-free visual token pruning method specifically designed for LVLM-based reasoning segmentation tasks. LVLM_CSP consists of three stages: clustering, scattering, and pruning. Initially, the LVLM performs coarse-grained visual reasoning using a subset of selected image tokens. Next, fine-grained reasoning is conducted, and finally, most visual tokens are pruned in the last stage. Extensive experiments demonstrate that LVLM_CSP achieves a 65% reduction in image token inference FLOPs with virtually no accuracy degradation, and a 70% reduction with only a minor 1% drop in accuracy on the 7B LVLM.

PacketCLIP: Multi-Modal Embedding of Network Traffic and Language for Cybersecurity Reasoning

Mar 05, 2025

Abstract:Traffic classification is vital for cybersecurity, yet encrypted traffic poses significant challenges. We present PacketCLIP, a multi-modal framework combining packet data with natural language semantics through contrastive pretraining and hierarchical Graph Neural Network (GNN) reasoning. PacketCLIP integrates semantic reasoning with efficient classification, enabling robust detection of anomalies in encrypted network flows. By aligning textual descriptions with packet behaviors, it offers enhanced interpretability, scalability, and practical applicability across diverse security scenarios. PacketCLIP achieves a 95% mean AUC, outperforms baselines by 11.6%, and reduces model size by 92%, making it ideal for real-time anomaly detection. By bridging advanced machine learning techniques and practical cybersecurity needs, PacketCLIP provides a foundation for scalable, efficient, and interpretable solutions to tackle encrypted traffic classification and network intrusion detection challenges in resource-constrained environments.
