Sanggeon Yun

Internal Flow Signatures for Self-Checking and Refinement in LLMs

Feb 02, 2026

Abstract:Large language models can generate fluent answers that are unfaithful to the provided context, while many safeguards rely on external verification or a separate judge after generation. We introduce internal flow signatures that audit decision formation from depthwise dynamics at a fixed inter-block monitoring boundary. The method stabilizes token-wise motion via bias-centered monitoring, then summarizes trajectories in compact moving readout-aligned subspaces constructed from the top token and its close competitors within each depth window. Neighboring window frames are aligned by an orthogonal transport, yielding depth-comparable transported step lengths, turning angles, and subspace drift summaries that are invariant to within-window basis choices. A lightweight GRU validator trained on these signatures performs self-checking without modifying the base model. Beyond detection, the validator localizes a culprit depth event and enables a targeted refinement: the model rolls back to the culprit token and clamps an abnormal transported step at the identified block while preserving the orthogonal residual. The resulting pipeline provides actionable localization and low-overhead self-checking from internal decision dynamics. Code is available at github.com/EavnJeong/Internal-Flow-Signatures-for-Self-Checking-and-Refinement-in-LLMs.

HopFormer: Sparse Graph Transformers with Explicit Receptive Field Control

Feb 02, 2026

Abstract:Graph Transformers typically rely on explicit positional or structural encodings and dense global attention to incorporate graph topology. In this work, we show that neither is essential. We introduce HopFormer, a graph Transformer that injects structure exclusively through head-specific n-hop masked sparse attention, without the use of positional encodings or architectural modifications. This design provides explicit and interpretable control over receptive fields while enabling genuinely sparse attention whose computational cost scales linearly with mask sparsity. Through extensive experiments on both node-level and graph-level benchmarks, we demonstrate that our approach achieves competitive or superior performance across diverse graph structures. Our results further reveal that dense global attention is often unnecessary: on graphs with strong small-world properties, localized attention yields more stable and consistently high performance, while on graphs with weaker small-world effects, global attention offers diminishing returns. Together, these findings challenge prevailing assumptions in graph Transformer design and highlight sparsity-controlled attention as a principled and efficient alternative.
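To make the idea of an n-hop attention mask concrete, here is a minimal sketch. It is not HopFormer's implementation: the function name `n_hop_mask`, the adjacency-list input, and the choice to let a head attend to every node within at most n hops (rather than exactly n hops) are all assumptions made for illustration.

```python
from collections import deque


def n_hop_mask(adjacency, n_hops):
    """Return mask[i][j] = True when node j is within n_hops of node i.

    `adjacency` is a list of neighbor lists (hypothetical input format).
    The "within at most n hops, self included" rule is an assumption.
    """
    num_nodes = len(adjacency)
    mask = [[False] * num_nodes for _ in range(num_nodes)]
    for source in range(num_nodes):
        # Breadth-first search that stops expanding at depth n_hops.
        dist = {source: 0}
        queue = deque([source])
        while queue:
            node = queue.popleft()
            if dist[node] == n_hops:
                continue
            for neighbor in adjacency[node]:
                if neighbor not in dist:
                    dist[neighbor] = dist[node] + 1
                    queue.append(neighbor)
        for target in dist:
            mask[source][target] = True
    return mask


# Path graph 0 - 1 - 2 - 3, with one hop budget per attention head.
path_graph = [[1], [0, 2], [1, 3], [2]]
head_hops = [1, 2, 3]
head_masks = [n_hop_mask(path_graph, n) for n in head_hops]
```

In this toy example each head sees a different receptive field: the 1-hop head lets node 0 attend only to nodes 0 and 1, while the 3-hop head covers the whole path. Attention scores would then be computed only at the `True` positions of each head's mask.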

T-SAR: A Full-Stack Co-design for CPU-Only Ternary LLM Inference via In-Place SIMD ALU Reorganization

Nov 17, 2025

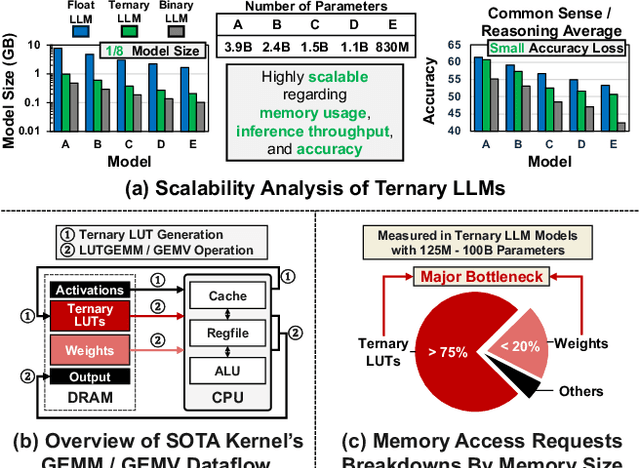

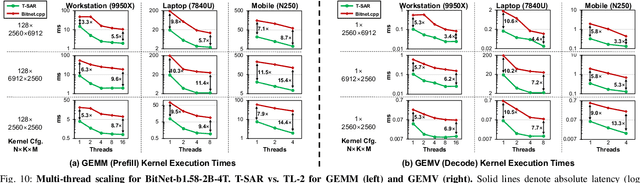

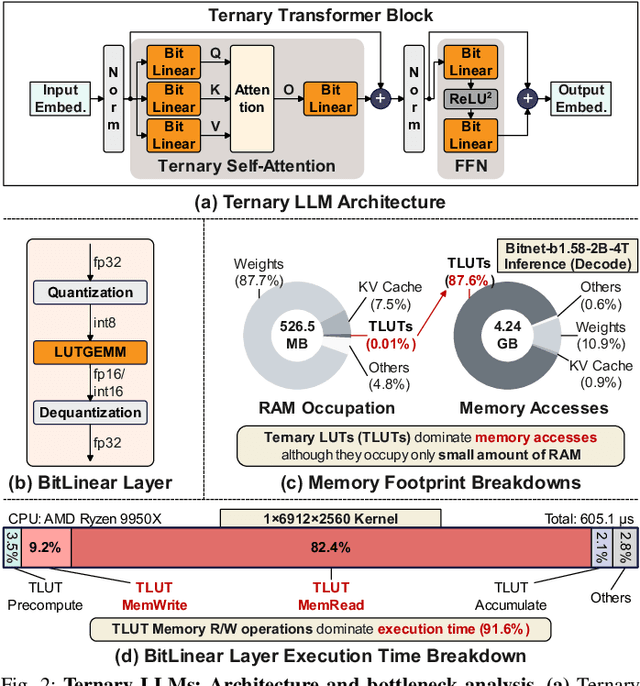

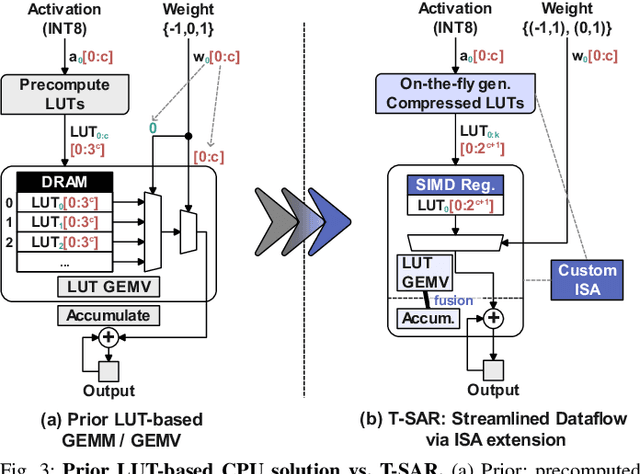

Abstract:Recent advances in LLMs have outpaced the computational and memory capacities of edge platforms that primarily employ CPUs, thereby challenging efficient and scalable deployment. While ternary quantization enables significant resource savings, existing CPU solutions rely heavily on memory-based lookup tables (LUTs) which limit scalability, and FPGA or GPU accelerators remain impractical for edge use. This paper presents T-SAR, the first framework to achieve scalable ternary LLM inference on CPUs by repurposing the SIMD register file for dynamic, in-register LUT generation with minimal hardware modifications. T-SAR eliminates memory bottlenecks and maximizes data-level parallelism, delivering 5.6-24.5x and 1.1-86.2x improvements in GEMM latency and GEMV throughput, respectively, with only 3.2% power and 1.4% area overheads in SIMD units. T-SAR achieves up to 2.5-4.9x the energy efficiency of an NVIDIA Jetson AGX Orin, establishing a practical approach for efficient LLM inference on edge platforms.

QUILL: An Algorithm-Architecture Co-Design for Cache-Local Deformable Attention

Nov 17, 2025

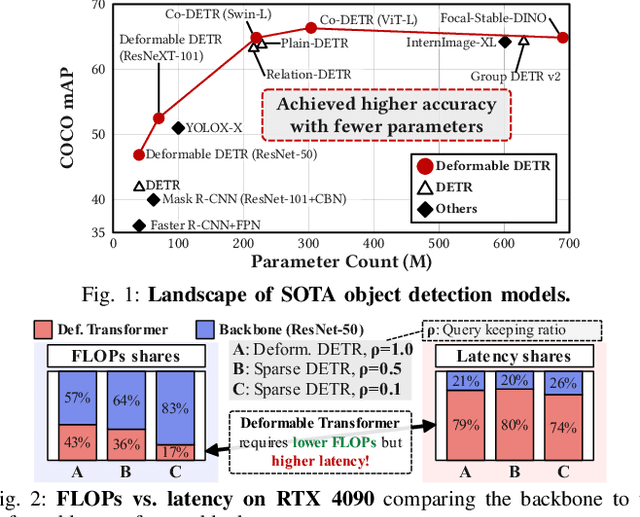

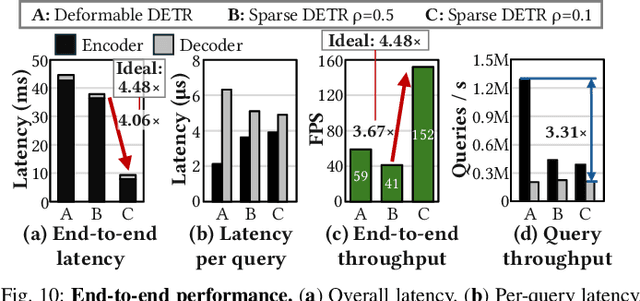

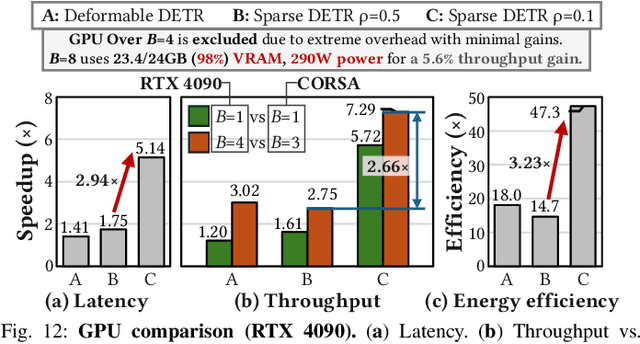

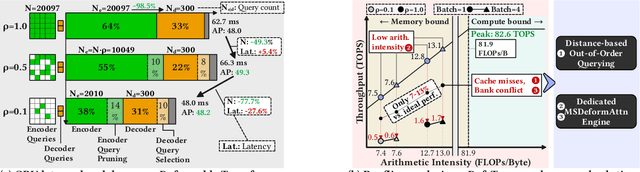

Abstract:Deformable transformers deliver state-of-the-art detection but map poorly to hardware due to irregular memory access and low arithmetic intensity. We introduce QUILL, a schedule-aware accelerator that turns deformable attention into cache-friendly, single-pass work. At its core, Distance-based Out-of-Order Querying (DOOQ) orders queries by spatial proximity; the look-ahead drives a region prefetch into an alternate buffer--forming a schedule-aware prefetch loop that overlaps memory and compute. A fused MSDeformAttn engine executes interpolation, Softmax, aggregation, and the final projection (W''m) in one pass without spilling intermediates, while small tensors are kept on-chip and surrounding dense layers run on integrated GEMMs. Implemented as RTL and evaluated end-to-end, QUILL achieves up to 7.29x higher throughput and 47.3x better energy efficiency than an RTX 4090, and exceeds prior accelerators by 3.26-9.82x in throughput and 2.01-6.07x in energy efficiency. With mixed-precision quantization, accuracy tracks FP32 within <=0.9 AP across Deformable and Sparse DETR variants. By converting sparsity into locality--and locality into utilization--QUILL delivers consistent, end-to-end speedups.

Draft and Refine with Visual Experts

Nov 14, 2025

Abstract:While recent Large Vision-Language Models (LVLMs) exhibit strong multimodal reasoning abilities, they often produce ungrounded or hallucinated responses because they rely too heavily on linguistic priors instead of visual evidence. This limitation highlights the absence of a quantitative measure of how much these models actually use visual information during reasoning. We propose Draft and Refine (DnR), an agent framework driven by a question-conditioned utilization metric. The metric quantifies the model's reliance on visual evidence by first constructing a query-conditioned relevance map to localize question-specific cues and then measuring dependence through relevance-guided probabilistic masking. Guided by this metric, the DnR agent refines its initial draft using targeted feedback from external visual experts. Each expert's output (such as boxes or masks) is rendered as visual cues on the image, and the model is re-queried to select the response that yields the largest improvement in utilization. This process strengthens visual grounding without retraining or architectural changes. Experiments across VQA and captioning benchmarks show consistent accuracy gains and reduced hallucination, demonstrating that measuring visual utilization provides a principled path toward more interpretable and evidence-driven multimodal agent systems.

LogHD: Robust Compression of Hyperdimensional Classifiers via Logarithmic Class-Axis Reduction

Nov 06, 2025

Abstract:Hyperdimensional computing (HDC) suits memory, energy, and reliability-constrained systems, yet the standard "one prototype per class" design requires $O(CD)$ memory (with $C$ classes and dimensionality $D$). Prior compaction reduces $D$ (feature axis), improving storage/compute but weakening robustness. We introduce LogHD, a logarithmic class-axis reduction that replaces the $C$ per-class prototypes with $n\!\approx\!\lceil\log_k C\rceil$ bundle hypervectors (alphabet size $k$) and decodes in an $n$-dimensional activation space, cutting memory to $O(D\log_k C)$ while preserving $D$. LogHD uses a capacity-aware codebook and profile-based decoding, and composes with feature-axis sparsification. Across datasets and injected bit flips, LogHD attains competitive accuracy with smaller models and higher resilience at matched memory. Under equal memory, it sustains target accuracy at roughly $2.5$-$3.0\times$ higher bit-flip rates than feature-axis compression; an ASIC instantiation delivers $498\times$ energy efficiency and $62.6\times$ speedup over an AMD Ryzen 9 9950X and $24.3\times$/$6.58\times$ over an NVIDIA RTX 4090, and is $4.06\times$ more energy-efficient and $2.19\times$ faster than a feature-axis HDC ASIC baseline.
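The memory claim above can be checked with a few lines of arithmetic. The sketch below only counts stored hypervector components; it is not LogHD's codebook or decoder. The function names and the example values (26 classes, alphabet size 2, dimensionality 10,000) are hypothetical, and the bundle count is taken as exactly the smallest n with k^n >= C, whereas the abstract states it only approximately.

```python
def num_bundles(num_classes, alphabet_size):
    """Smallest n with alphabet_size**n >= num_classes, i.e. ceil(log_k C).

    Integer arithmetic avoids floating-point error in the logarithm.
    """
    n, capacity = 0, 1
    while capacity < num_classes:
        capacity *= alphabet_size
        n += 1
    return n


def prototype_components(num_classes, dim):
    """Stored components for one prototype per class: C * D."""
    return num_classes * dim


def bundle_components(num_classes, alphabet_size, dim):
    """Stored components for n bundle hypervectors: n * D."""
    return num_bundles(num_classes, alphabet_size) * dim


# Hypothetical setting: C = 26 classes, k = 2, D = 10,000.
baseline = prototype_components(26, 10_000)
reduced = bundle_components(26, 2, 10_000)
print(num_bundles(26, 2), baseline, reduced)
```

With these illustrative numbers, 26 prototypes shrink to 5 bundle hypervectors of the same dimensionality, so the stored component count drops from 260,000 to 50,000 while D is unchanged.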

DecoHD: Decomposed Hyperdimensional Classification under Extreme Memory Budgets

Nov 05, 2025

Abstract:Decomposition is a proven way to shrink deep networks without changing I/O. We bring this idea to hyperdimensional computing (HDC), where footprint cuts usually shrink the feature axis and erode concentration and robustness. Prior HDC decompositions decode via fixed atomic hypervectors, which are ill-suited for compressing learned class prototypes. We introduce DecoHD, which learns directly in a decomposed HDC parameterization: a small, shared set of per-layer channels with multiplicative binding across layers and bundling at the end, yielding a large representational space from compact factors. DecoHD compresses along the class axis via a lightweight bundling head while preserving native bind-bundle-score; training is end-to-end, and inference remains pure HDC, aligning with in/near-memory accelerators. In evaluation, DecoHD attains extreme memory savings with only minor accuracy degradation under tight deployment budgets. On average it stays within about 0.1-0.15% of a strong non-reduced HDC baseline (worst case 5.7%), is more robust to random bit-flip noise, reaches its accuracy plateau with up to ~97% fewer trainable parameters, and -- in hardware -- delivers roughly 277x/35x energy/speed gains over a CPU (AMD Ryzen 9 9950X), 13.5x/3.7x over a GPU (NVIDIA RTX 4090), and 2.0x/2.4x over a baseline HDC ASIC.

MissionHD: Data-Driven Refinement of Reasoning Graph Structure through Hyperdimensional Causal Path Encoding and Decoding

Aug 20, 2025

Abstract:Reasoning graphs from Large Language Models (LLMs) are often misaligned with downstream visual tasks such as video anomaly detection (VAD). Existing Graph Structure Refinement (GSR) methods are ill-suited for these novel, dataset-less graphs. We introduce Data-driven GSR (D-GSR), a new paradigm that directly optimizes graph structure using downstream task data, and propose MissionHD, a hyperdimensional computing (HDC) framework to operationalize it. MissionHD uses an efficient encode-decode process to refine the graph, guided by the downstream task signal. Experiments on challenging VAD and VAR benchmarks show significant performance improvements when using our refined graphs, validating our approach as an effective pre-processing step.

A Few Large Shifts: Layer-Inconsistency Based Minimal Overhead Adversarial Example Detection

May 19, 2025

Abstract:Deep neural networks (DNNs) are highly susceptible to adversarial examples--subtle, imperceptible perturbations that can lead to incorrect predictions. While detection-based defenses offer a practical alternative to adversarial training, many existing methods depend on external models, complex architectures, heavy augmentations, or adversarial data, limiting their efficiency and generalizability. We introduce a lightweight, plug-in detection framework that leverages internal layer-wise inconsistencies within the target model itself, requiring only benign data for calibration. Our approach is grounded in the A Few Large Shifts Assumption, which posits that adversarial perturbations typically induce large representation shifts in a small subset of layers. Building on this, we propose two complementary strategies--Recovery Testing (RT) and Logit-layer Testing (LT)--to expose internal disruptions caused by adversaries. Evaluated on CIFAR-10, CIFAR-100, and ImageNet under both standard and adaptive threat models, our method achieves state-of-the-art detection performance with negligible computational overhead and no compromise to clean accuracy.

PacketCLIP: Multi-Modal Embedding of Network Traffic and Language for Cybersecurity Reasoning

Mar 05, 2025

Abstract:Traffic classification is vital for cybersecurity, yet encrypted traffic poses significant challenges. We present PacketCLIP, a multi-modal framework combining packet data with natural language semantics through contrastive pretraining and hierarchical Graph Neural Network (GNN) reasoning. PacketCLIP integrates semantic reasoning with efficient classification, enabling robust detection of anomalies in encrypted network flows. By aligning textual descriptions with packet behaviors, it offers enhanced interpretability, scalability, and practical applicability across diverse security scenarios. PacketCLIP achieves a 95% mean AUC, outperforms baselines by 11.6%, and reduces model size by 92%, making it ideal for real-time anomaly detection. By bridging advanced machine learning techniques and practical cybersecurity needs, PacketCLIP provides a foundation for scalable, efficient, and interpretable solutions to tackle encrypted traffic classification and network intrusion detection challenges in resource-constrained environments.
