CORAL: Colored structural representation for bi-modal place recognition


Nov 22, 2020

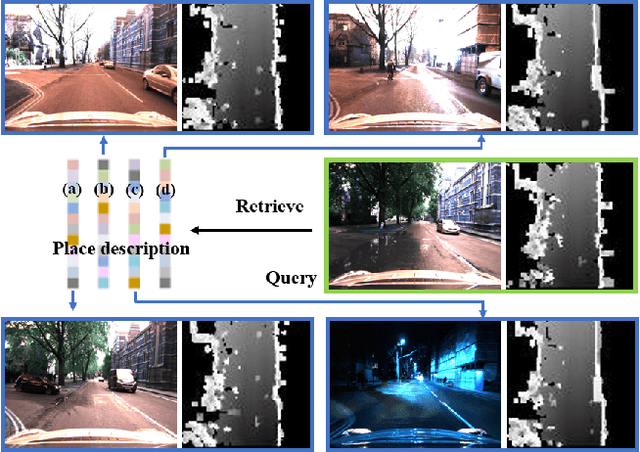

Place recognition is indispensable for a drift-free localization system. Because the environment varies, place recognition that relies on a single modality has limitations. In this paper, we propose a bi-modal place recognition method that extracts a compound global descriptor from two modalities, vision and LiDAR. Specifically, we build an elevation image from the point cloud as a discriminative structural representation. Using the 3D information, we derive the correspondences between 3D points and image pixels, through which pixel-wise visual features are inserted into the elevation map grid cells. In this way, we fuse structural and visual features in a consistent bird's-eye view frame, yielding a semantic feature representation with sensible geometry, namely CORAL. Comparisons on the Oxford RobotCar dataset show that CORAL outperforms other state-of-the-art methods. We also demonstrate, using cross-city datasets, that our network generalizes to other scenes and sensor configurations.
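The geometric part of this pipeline (rasterizing the point cloud into an elevation image, then using point-to-pixel correspondences to place visual features into the same grid) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. The function names, the max-height rule for each cell, the nearest-pixel lookup, the per-cell averaging, and the grid extents are all assumptions made for illustration, and the learned feature extractors and descriptor aggregation are omitted entirely. `K` is assumed to be a 3x3 pinhole intrinsic matrix and `T_cam_lidar` a 4x4 LiDAR-to-camera transform.

```python
import numpy as np

def elevation_image(points, x_range=(-20.0, 20.0), y_range=(-20.0, 20.0), cell=0.5):
    """Rasterize an (N, 3) point cloud into a bird's-eye-view grid.

    Assumption: each cell stores the maximum z of the points falling in it.
    """
    nx = int(round((x_range[1] - x_range[0]) / cell))
    ny = int(round((y_range[1] - y_range[0]) / cell))
    ix = np.floor((points[:, 0] - x_range[0]) / cell).astype(int)
    iy = np.floor((points[:, 1] - y_range[0]) / cell).astype(int)
    inside = (ix >= 0) & (ix < nx) & (iy >= 0) & (iy < ny)
    elev = np.full((nx, ny), -np.inf)
    # Unbuffered max so several points in one cell are all considered.
    np.maximum.at(elev, (ix[inside], iy[inside]), points[inside, 2])
    occupied = np.isfinite(elev)
    elev[~occupied] = 0.0  # empty cells get a neutral height
    return elev, occupied, ix, iy, inside

def project_features_to_bev(points, feat_map, K, T_cam_lidar, ix, iy, inside, grid_shape):
    """Scatter pixel-wise features of shape (H, W, C) into the BEV grid.

    Assumption: nearest-pixel lookup, averaged over the points in each cell.
    """
    H, W, C = feat_map.shape
    pts_h = np.hstack([points, np.ones((len(points), 1))])
    cam = (T_cam_lidar @ pts_h.T).T[:, :3]        # points in the camera frame
    valid = inside & (cam[:, 2] > 1e-6)           # in the grid and in front of the camera
    idx = np.nonzero(valid)[0]
    uv = (K @ cam[idx].T).T                       # homogeneous pixel coordinates
    u = np.round(uv[:, 0] / uv[:, 2]).astype(int)
    v = np.round(uv[:, 1] / uv[:, 2]).astype(int)
    in_img = (u >= 0) & (u < W) & (v >= 0) & (v < H)
    idx, u, v = idx[in_img], u[in_img], v[in_img]
    bev = np.zeros(tuple(grid_shape) + (C,))
    count = np.zeros(tuple(grid_shape))
    np.add.at(bev, (ix[idx], iy[idx]), feat_map[v, u])
    np.add.at(count, (ix[idx], iy[idx]), 1)
    hit = count > 0
    bev[hit] /= count[hit][:, None]               # mean feature per cell
    return bev
```

Both outputs share the same grid indices, so the elevation channel and the projected visual features can be stacked cell by cell before any further processing. Cells with no visible point keep a zero feature vector in this sketch.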
