Sha Lu

BEV-ODOM2: Enhanced BEV-based Monocular Visual Odometry with PV-BEV Fusion and Dense Flow Supervision for Ground Robots

Sep 18, 2025

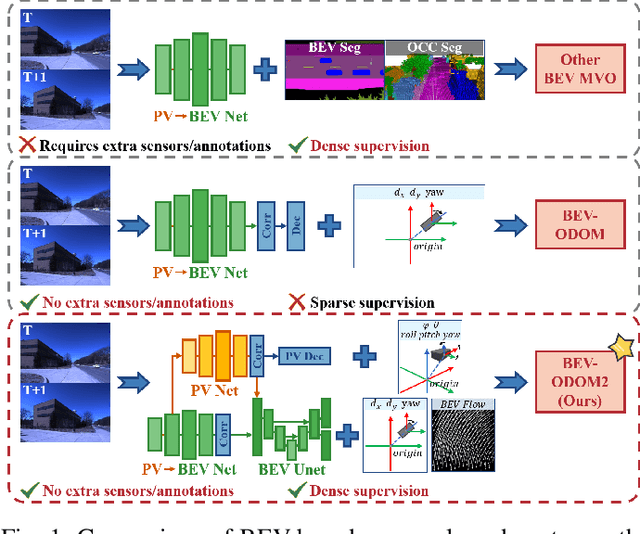

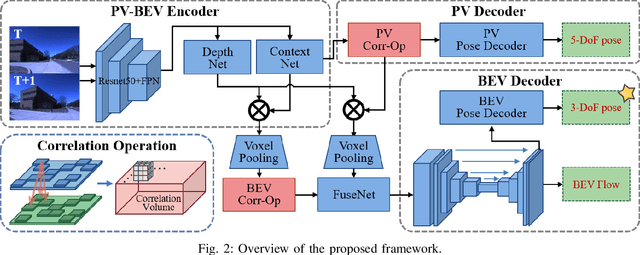

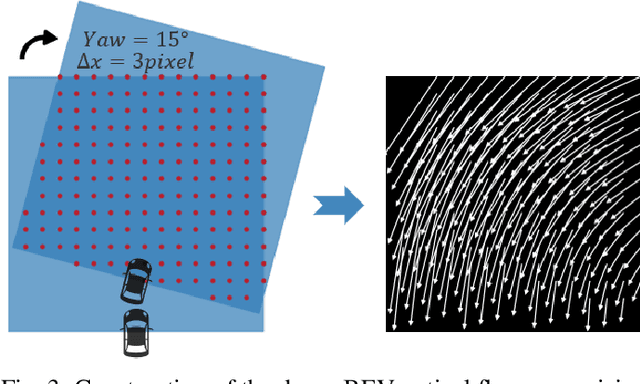

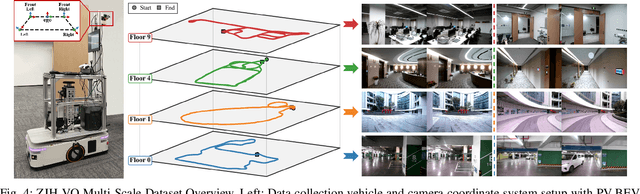

Abstract: Bird's-Eye-View (BEV) representation offers a metric-scaled planar workspace, simplifying 6-DoF ego-motion to a more robust 3-DoF model for monocular visual odometry (MVO) in intelligent transportation systems. However, existing BEV methods suffer from sparse supervision signals and information loss during perspective-to-BEV projection. We present BEV-ODOM2, an enhanced framework addressing both limitations without additional annotations. Our approach introduces: (1) dense BEV optical flow supervision constructed from 3-DoF pose ground truth for pixel-level guidance; (2) PV-BEV fusion that computes correlation volumes before projection to preserve 6-DoF motion cues while maintaining scale consistency. The framework employs three supervision levels derived solely from pose data: dense BEV flow, 5-DoF for the PV branch, and final 3-DoF output. Enhanced rotation sampling further balances diverse motion patterns in training. Extensive evaluation on KITTI, NCLT, Oxford, and our newly collected ZJH-VO multi-scale dataset demonstrates state-of-the-art performance, achieving a 40% improvement in RTE compared to previous BEV methods. The ZJH-VO dataset, covering diverse ground vehicle scenarios from underground parking to outdoor plazas, is publicly available to facilitate future research.
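To make the idea of dense BEV flow built from a 3-DoF pose concrete, here is a minimal sketch and not the authors' implementation. It assumes a static scene, a square grid with the ego vehicle at its centre, and that `(dx, dy, dyaw)` is the pose of the ego frame at time t+1 expressed in the ego frame at time t. The function name, grid layout, and parameters are all hypothetical.

```python
import math

def bev_flow_from_pose(dx, dy, dyaw, size=5, resolution=1.0):
    """Return a size x size grid of (du, dv) flow vectors, in cells.

    Assumed convention (not taken from the paper): cell (row, col) is
    centred at x = (col - c) * resolution, y = (row - c) * resolution,
    where c is the grid centre and the ego vehicle sits at the origin.
    """
    c = (size - 1) / 2.0
    cos_a, sin_a = math.cos(dyaw), math.sin(dyaw)
    flow = []
    for row in range(size):
        flow_row = []
        for col in range(size):
            x = (col - c) * resolution
            y = (row - c) * resolution
            # A static point p seen from the new ego frame: p' = R^T (p - t)
            px, py = x - dx, y - dy
            nx = cos_a * px + sin_a * py
            ny = -sin_a * px + cos_a * py
            # Displacement of the point between the two BEV grids, in cells
            flow_row.append(((nx - x) / resolution, (ny - y) / resolution))
        flow.append(flow_row)
    return flow

if __name__ == "__main__":
    # Moving 2 m forward on a 0.5 m grid shifts every static cell backwards.
    for row in bev_flow_from_pose(2.0, 0.0, 0.0, size=3, resolution=0.5):
        print(row)
```

Every cell receives a target vector, which is what makes this kind of supervision dense even though only one pose label per frame pair is available.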

CarPlanner: Consistent Auto-regressive Trajectory Planning for Large-scale Reinforcement Learning in Autonomous Driving

Feb 27, 2025

Abstract: Trajectory planning is vital for autonomous driving, ensuring safe and efficient navigation in complex environments. While recent learning-based methods, particularly reinforcement learning (RL), have shown promise in specific scenarios, RL planners struggle with training inefficiencies and managing large-scale, real-world driving scenarios. In this paper, we introduce CarPlanner, a Consistent auto-regressive Planner that uses RL to generate multi-modal trajectories. The auto-regressive structure enables efficient large-scale RL training, while the incorporation of consistency ensures stable policy learning by maintaining temporal consistency across time steps. Moreover, CarPlanner employs a generation-selection framework with an expert-guided reward function and an invariant-view module, simplifying RL training and enhancing policy performance. Extensive analysis demonstrates that our proposed RL framework effectively addresses the challenges of training efficiency and performance enhancement, positioning CarPlanner as a promising solution for trajectory planning in autonomous driving. To the best of our knowledge, we are the first to demonstrate that an RL-based planner can surpass both IL- and rule-based state-of-the-art (SOTA) methods on the challenging large-scale real-world dataset nuPlan. Our proposed CarPlanner surpasses RL-, IL-, and rule-based SOTA approaches on this demanding dataset.

BEV-DWPVO: BEV-based Differentiable Weighted Procrustes for Low Scale-drift Monocular Visual Odometry on Ground

Feb 27, 2025

Abstract: Monocular Visual Odometry (MVO) provides a cost-effective, real-time positioning solution for autonomous vehicles. However, MVO systems face the common issue of lacking inherent scale information from monocular cameras. Traditional methods have good interpretability but can only obtain relative scale and suffer from severe scale drift in long-distance tasks. Learning-based methods under perspective view leverage large amounts of training data to acquire prior knowledge and estimate absolute scale by predicting depth values. However, their generalization ability is limited due to the need to accurately estimate the depth of each point. In contrast, we propose a novel MVO system called BEV-DWPVO. Our approach leverages the common assumption of a ground plane, using Bird's-Eye View (BEV) feature maps to represent the environment in a grid-based structure with a unified scale. This enables us to reduce the complexity of pose estimation from 6 Degrees of Freedom (DoF) to 3-DoF. Keypoints are extracted and matched within the BEV space, followed by pose estimation through a differentiable weighted Procrustes solver. The entire system is fully differentiable, supporting end-to-end training with only pose supervision and no auxiliary tasks. We validate BEV-DWPVO on the challenging long-sequence datasets NCLT, Oxford, and KITTI, achieving superior results over existing MVO methods on most evaluation metrics.
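For readers unfamiliar with weighted Procrustes alignment, the following sketch shows the standard closed-form 2D solution for a 3-DoF pose (x, y, yaw) from matched keypoints. It is an illustration under stated assumptions, not the BEV-DWPVO solver: the function name and the plain-Python setting are hypothetical, and the code computes no gradients. The closed form is built only from sums, products, and `atan2`, which is the kind of structure an autodiff framework could backpropagate through.

```python
import math

def weighted_procrustes_2d(src, dst, weights):
    """Find (tx, ty, yaw) minimising sum_i w_i * |R(yaw) * src_i + t - dst_i|^2."""
    w_sum = sum(weights)
    # Weighted centroids of both point sets
    scx = sum(w * p[0] for w, p in zip(weights, src)) / w_sum
    scy = sum(w * p[1] for w, p in zip(weights, src)) / w_sum
    dcx = sum(w * q[0] for w, q in zip(weights, dst)) / w_sum
    dcy = sum(w * q[1] for w, q in zip(weights, dst)) / w_sum
    # Accumulate the weighted dot and cross products of the centred points
    s_cos = s_sin = 0.0
    for w, (px, py), (qx, qy) in zip(weights, src, dst):
        ax, ay = px - scx, py - scy
        bx, by = qx - dcx, qy - dcy
        s_cos += w * (ax * bx + ay * by)
        s_sin += w * (ax * by - ay * bx)
    yaw = math.atan2(s_sin, s_cos)
    c, s = math.cos(yaw), math.sin(yaw)
    # Translation maps the rotated source centroid onto the target centroid
    tx = dcx - (c * scx - s * scy)
    ty = dcy - (s * scx + c * scy)
    return tx, ty, yaw

if __name__ == "__main__":
    src = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (3.0, 1.0)]
    c, s = math.cos(0.3), math.sin(0.3)
    dst = [(c * x - s * y + 1.0, s * x + c * y - 2.0) for x, y in src]
    print(weighted_procrustes_2d(src, dst, [1.0, 2.0, 0.5, 1.0]))
```

A match with weight zero has no influence on the result, so a network that predicts the weights can learn to suppress unreliable keypoints.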

BEV-ODOM: Reducing Scale Drift in Monocular Visual Odometry with BEV Representation

Nov 15, 2024

Abstract: Monocular visual odometry (MVO) is vital in autonomous navigation and robotics, providing a cost-effective and flexible motion tracking solution, but the inherent scale ambiguity in monocular setups often leads to cumulative errors over time. In this paper, we present BEV-ODOM, a novel MVO framework leveraging the Bird's Eye View (BEV) representation to address scale drift. Unlike existing approaches, BEV-ODOM integrates a depth-based perspective-view (PV) to BEV encoder, a correlation feature extraction neck, and a CNN-MLP-based decoder, enabling it to estimate motion across three degrees of freedom without the need for depth supervision or complex optimization techniques. Our framework reduces scale drift in long-term sequences and achieves accurate motion estimation across various datasets, including NCLT, Oxford, and KITTI. The results indicate that BEV-ODOM outperforms current MVO methods, demonstrating reduced scale drift and higher accuracy.

RING#: PR-by-PE Global Localization with Roto-translation Equivariant Gram Learning

Aug 30, 2024

Abstract: Global localization using onboard perception sensors, such as cameras and LiDARs, is crucial in autonomous driving and robotics applications when GPS signals are unreliable. Most approaches achieve global localization by sequential place recognition and pose estimation. Some of them train separate models for each task, while others employ a single model with dual heads, trained jointly with separate task-specific losses. However, the accuracy of localization heavily depends on the success of place recognition, which often fails in scenarios with significant changes in viewpoint or environmental appearance. Consequently, this renders the final pose estimation of localization ineffective. To address this, we propose a novel paradigm, PR-by-PE localization, which improves global localization accuracy by deriving place recognition directly from pose estimation. Our framework, RING#, is an end-to-end PR-by-PE localization network operating in the bird's-eye view (BEV) space, designed to support both vision and LiDAR sensors. It introduces a theoretical foundation for learning two equivariant representations from BEV features, which enables globally convergent and computationally efficient pose estimation. Comprehensive experiments on the NCLT and Oxford datasets across both vision and LiDAR modalities demonstrate that our method outperforms state-of-the-art approaches. Furthermore, we provide extensive analyses to confirm the effectiveness of our method. The code will be publicly released.

Leveraging BEV Representation for 360-degree Visual Place Recognition

May 23, 2023

Abstract: This paper investigates the advantages of using Bird's Eye View (BEV) representation in 360-degree visual place recognition (VPR). We propose a novel network architecture that utilizes the BEV representation in feature extraction, feature aggregation, and vision-LiDAR fusion, which bridges visual cues and spatial awareness. Our method extracts image features using standard convolutional networks and combines the features according to pre-defined 3D grid spatial points. To alleviate the mechanical and time misalignments between cameras, we further introduce deformable attention to learn the compensation. Upon the BEV feature representation, we then employ the polar transform and the Discrete Fourier transform for aggregation, which is shown to be rotation-invariant. In addition, the image and point cloud cues can be easily expressed in the same coordinates, which benefits sensor fusion for place recognition. The proposed BEV-based method is evaluated in ablation and comparative studies on two datasets, including on-the-road and off-the-road scenarios. The experimental results verify the hypothesis that BEV can benefit VPR by its superior performance compared to baseline methods. To the best of our knowledge, this is the first attempt to employ BEV representation in this task.
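The rotation invariance mentioned above rests on a general property: after a polar transform, a rotation about the origin becomes a circular shift along the angle axis, and the magnitude of the discrete Fourier transform does not change under circular shifts. The sketch below checks that property on a single hypothetical ring of angular samples. It is a standard-library illustration, not the paper's network code, and the function name is an assumption.

```python
import cmath

def dft_magnitude(signal):
    """Magnitude spectrum of a 1D signal, using a direct O(n^2) DFT."""
    n = len(signal)
    return [
        abs(sum(signal[k] * cmath.exp(-2j * cmath.pi * f * k / n) for k in range(n)))
        for f in range(n)
    ]

if __name__ == "__main__":
    ring = [0.0, 1.0, 3.0, 2.0, 0.0, 0.0, 5.0, 1.0]  # samples along the angle axis
    rotated = ring[3:] + ring[:3]                     # same ring after a rotation
    print(dft_magnitude(ring))
    print(dft_magnitude(rotated))
```

The two printed spectra agree up to floating-point error, so a descriptor built from such magnitudes does not depend on the heading at which the place was observed.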

A Survey on Global LiDAR Localization

Feb 15, 2023

Abstract: Knowledge of its own pose is key for any mobile robot application. Thus, pose estimation is part of the core functionalities of mobile robots. In the last two decades, LiDAR scanners have become a standard sensor for robot localization and mapping. This article surveys recent progress and advances in LiDAR-based global localization. We start with the problem formulation and explore the application scope. We then present the methodology review covering various global localization topics, such as maps, descriptor extraction, and consistency checks. The contents are organized under three themes. The first is the combination of global place retrieval and local pose estimation. The second theme is upgrading single-shot measurements to sequential ones for sequential global localization. The third theme is extending single-robot global localization to cross-robot localization on multi-robot systems. We end this survey with a discussion of open challenges and promising directions on global LiDAR localization.

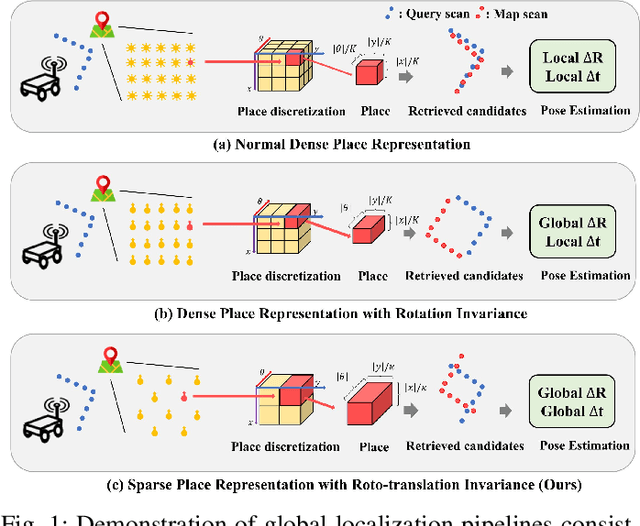

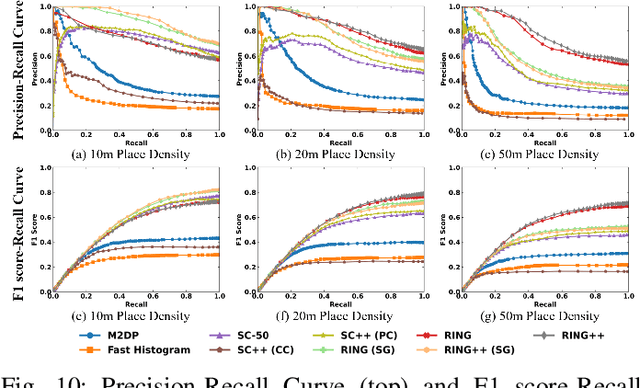

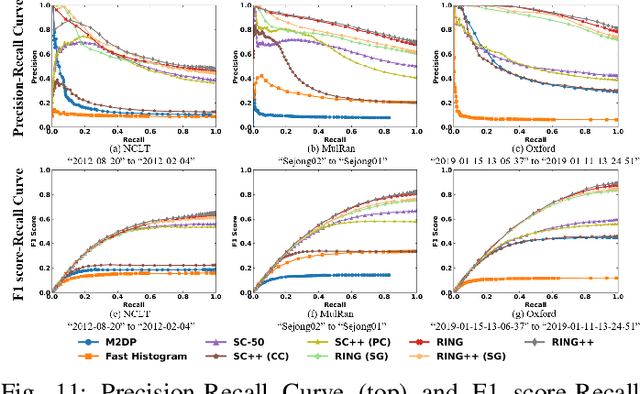

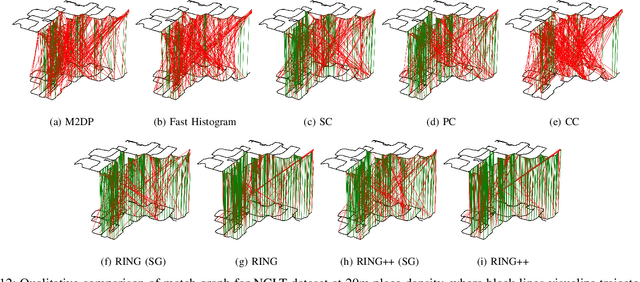

RING++: Roto-translation Invariant Gram for Global Localization on a Sparse Scan Map

Oct 12, 2022

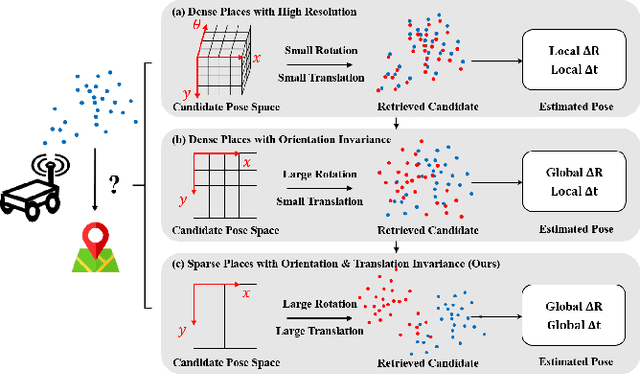

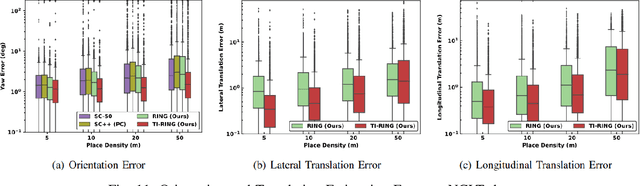

Abstract: Global localization plays a critical role in many robot applications. LiDAR-based global localization draws the community's focus with its robustness against illumination and seasonal changes. To further improve the localization under large viewpoint differences, we propose RING++, which has a roto-translation invariant representation for place recognition, and global convergence for both rotation and translation estimation. With the theoretical guarantee, RING++ is able to address the large viewpoint difference using a lightweight map with sparse scans. In addition, we derive sufficient conditions of feature extractors for the representation preserving the roto-translation invariance, making RING++ a framework applicable to generic multi-channel features. To the best of our knowledge, this is the first learning-free framework to address all subtasks of global localization in the sparse scan map. Validations on real-world datasets show that our approach demonstrates better performance than state-of-the-art learning-free methods, and competitive performance with learning-based methods. Finally, we integrate RING++ into a multi-robot/session SLAM system, demonstrating its effectiveness in collaborative applications.

One RING to Rule Them All: Radon Sinogram for Place Recognition, Orientation and Translation Estimation

Apr 17, 2022

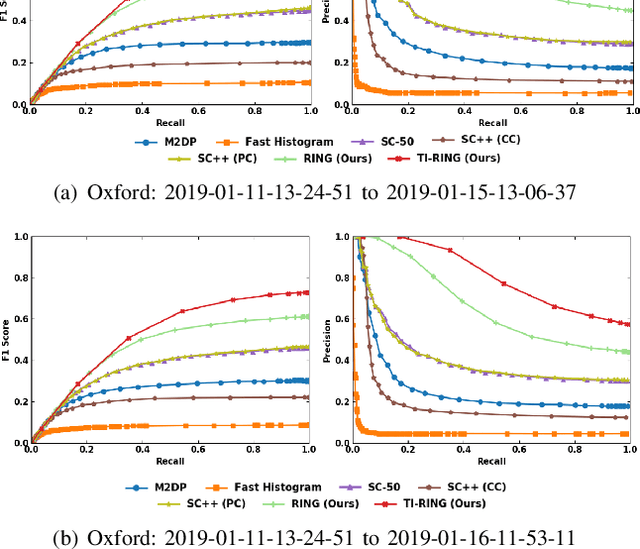

Abstract: LiDAR-based global localization is a fundamental problem for mobile robots. It consists of two stages, place recognition and pose estimation, and yields the current orientation and translation, using only the current scan as query and a database of map scans. Inspired by the definition of a recognized place, we consider that a good global localization solution should keep the pose estimation accuracy with a lower place density. Following this idea, we propose a novel framework towards sparse place-based global localization, which utilizes a unified and learning-free representation, Radon sinogram (RING), for all sub-tasks. Based on the theoretical derivation, a translation-invariant descriptor and an orientation-invariant metric are proposed for place recognition, achieving certifiable robustness against arbitrary orientation and large translation between query and map scan. In addition, we also utilize the property of RING to propose a globally convergent solver for both orientation and translation estimation, arriving at global localization. Evaluation of the proposed RING-based framework validates the feasibility and demonstrates a superior performance even under a lower place density.

Translation Invariant Global Estimation of Heading Angle Using Sinogram of LiDAR Point Cloud

Mar 02, 2022

Abstract: Global point cloud registration is an essential module for localization, of which the main difficulty lies in estimating the rotation globally without an initial value. With the aid of gravity alignment, the degrees of freedom in point cloud registration can be reduced to 4-DoF, in which only the heading angle is required for rotation estimation. In this paper, we propose a fast and accurate global heading angle estimation method for gravity-aligned point clouds. Our key idea is that we generate a translation-invariant representation based on the Radon transform, allowing us to solve the decoupled heading angle globally with circular cross-correlation. Besides, for heading angle estimation between point clouds with different distributions, we implement this heading angle estimator as a differentiable module to train a feature extraction network end-to-end. The experimental results validate the effectiveness of the proposed method in heading angle estimation and show better performance compared with other methods.
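The circular cross-correlation step can be illustrated on its own. The sketch below assumes that each scan has already been reduced to a translation-invariant signature with one value per angle bin over the full circle, so that a heading change appears as a circular shift. The signature values and function names are hypothetical, and the Radon-transform stage of the paper is not reproduced here.

```python
import math

def circular_cross_correlation(a, b):
    """Score every circular shift s by sum_i a[(i + s) % n] * b[i]."""
    n = len(a)
    return [sum(a[(i + s) % n] * b[i] for i in range(n)) for s in range(n)]

def estimate_heading(query, reference):
    """Return (shift_in_bins, angle_in_radians) that best aligns query to reference."""
    scores = circular_cross_correlation(query, reference)
    shift = max(range(len(scores)), key=scores.__getitem__)
    return shift, 2.0 * math.pi * shift / len(scores)

if __name__ == "__main__":
    reference = [0.0, 1.0, 3.0, 2.0, 0.0, 0.0, 5.0, 1.0]
    k = 3  # ground-truth heading change, in angle bins
    query = [reference[(j - k) % len(reference)] for j in range(len(reference))]
    print(estimate_heading(query, reference))
```

Because every shift is scored, the search is global and needs no initial guess. The direct double loop costs O(n^2) per pair of signatures; an FFT-based correlation would reduce that to O(n log n).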
