Thuc Duy Le

UniSA STEM, University of South Australia, Adelaide, SA, Australia

Linking Model Intervention to Causal Interpretation in Model Explanation

Oct 21, 2024

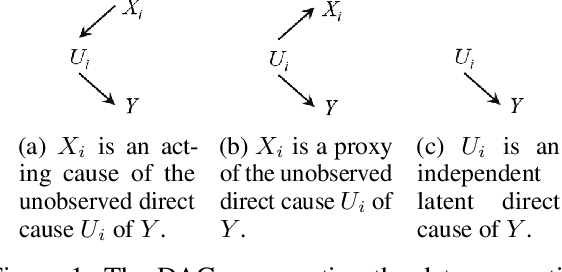

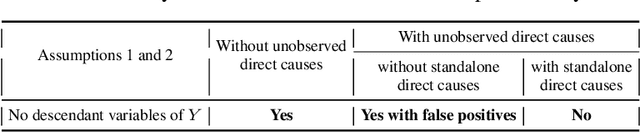

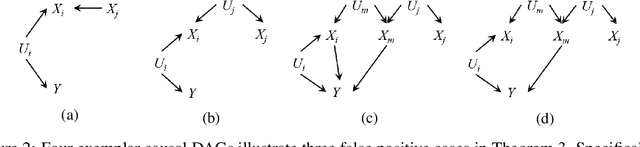

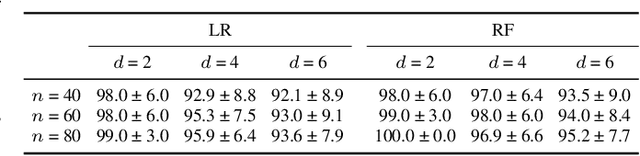

Abstract: Intervention intuition is often used in model explanation, where the intervention effect of a feature on the outcome is quantified by the difference in a model's prediction when the feature value is changed from its current value to a baseline value. Such a model intervention effect of a feature is inherently an association. In this paper, we study the conditions under which an intuitive model intervention effect has a causal interpretation, i.e., when it indicates whether a feature is a direct cause of the outcome. This work links the model intervention effect to the causal interpretation of a model. Such an interpretation capability is important since it indicates whether a machine learning model is trustworthy to domain experts. The conditions also reveal the limitations of using a model intervention effect for causal interpretation in an environment with unobserved features. Experiments on semi-synthetic datasets have been conducted to validate the theorems and show the potential of using the model intervention effect for model interpretation.
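The model intervention effect described in this abstract can be illustrated with a minimal sketch. The function name `model_intervention_effect`, the toy linear model, the sign convention (prediction at the current value minus prediction at the baseline), and the baseline value of 0.0 are all assumptions made here for illustration; they are not taken from the paper.

```python
from typing import Callable, List, Sequence


def model_intervention_effect(
    predict: Callable[[Sequence[float]], float],
    x: Sequence[float],
    feature: int,
    baseline: float,
) -> float:
    """Prediction at the current instance minus the prediction after
    setting one feature to a baseline value (other features unchanged)."""
    x_base: List[float] = list(x)
    x_base[feature] = baseline
    return predict(x) - predict(x_base)


def toy_model(x: Sequence[float]) -> float:
    # Hypothetical fitted model: depends on feature 0 only.
    return 1.0 + 2.0 * x[0] + 0.0 * x[1]


instance = [3.0, 5.0]
effect_f0 = model_intervention_effect(toy_model, instance, feature=0, baseline=0.0)
effect_f1 = model_intervention_effect(toy_model, instance, feature=1, baseline=0.0)
print(effect_f0, effect_f1)
```

In this toy case the effect is non-zero only for the feature the model actually uses. Whether such a quantity also says something causal about the data-generating process is the question the paper's conditions address; the sketch itself only measures model behaviour.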

TSI: A Multi-View Representation Learning Approach for Time Series Forecasting

Sep 30, 2024

Abstract: With the growing demand for long-sequence time-series forecasting in real-world applications, such as electricity consumption planning, time series forecasting is becoming increasingly important across various domains. This is highlighted by recent advancements in representation learning within the field. This study introduces a novel multi-view approach for time series forecasting that integrates trend and seasonal representations with an Independent Component Analysis (ICA)-based representation. Recognizing the limitations of existing methods in representing complex and high-dimensional time series data, this research addresses the challenge by combining TS (trend and seasonality) and ICA (independent components) perspectives. This approach offers a holistic understanding of time series data, going beyond traditional models that often miss nuanced, nonlinear relationships. The efficacy of the TSI model is demonstrated through comprehensive testing on various benchmark datasets, where it shows superior performance over current state-of-the-art models, particularly in multivariate forecasting. This method not only enhances the accuracy of forecasting but also contributes to the field by providing a more in-depth understanding of time series data. This research, which uses ICA as one view, lays the groundwork for further exploration and methodological advancements in time series forecasting, opening new avenues for research and practical applications.

A Deconfounding Approach to Climate Model Bias Correction

Aug 22, 2024

Abstract: Global Climate Models (GCMs) are crucial for predicting future climate changes by simulating Earth systems. However, GCM outputs exhibit systematic biases due to model uncertainties, parameterization simplifications, and inadequate representation of complex climate phenomena. Traditional bias correction methods, which rely on historical observation data and statistical techniques, often neglect unobserved confounders, leading to biased results. This paper proposes a novel bias correction approach that utilizes both GCM and observational data to learn a factor model that captures multi-cause latent confounders. Inspired by recent advances in causality-based time series deconfounding, our method first constructs a factor model to learn latent confounders from historical data and then applies them to enhance the bias correction process using advanced time series forecasting models. The experimental results demonstrate significant improvements in the accuracy of precipitation outputs. By addressing unobserved confounders, our approach offers a robust and theoretically grounded solution for climate model bias correction.

Robust COVID-19 Detection in CT Images with CLIP

Mar 15, 2024

Abstract: In the realm of medical imaging, particularly for COVID-19 detection, deep learning models face substantial challenges such as the necessity for extensive computational resources, the paucity of well-annotated datasets, and a significant amount of unlabeled data. In this work, we introduce the first lightweight detector designed to overcome these obstacles, leveraging a frozen CLIP image encoder and a trainable multilayer perceptron (MLP). Enhanced with Conditional Value at Risk (CVaR) for robustness and a loss landscape flattening strategy for improved generalization, our model is tailored for high efficacy in COVID-19 detection. Furthermore, we integrate a teacher-student framework to capitalize on the vast amounts of unlabeled data, enabling our model to achieve superior performance despite the inherent data limitations. Experimental results on the COV19-CT-DB dataset demonstrate the effectiveness of our approach, surpassing the baseline by up to 10.6% in 'macro' F1 score in supervised learning. The code is available at https://github.com/Purdue-M2/COVID-19_Detection_M2_PURDUE.

Instrumental Variable Estimation for Causal Inference in Longitudinal Data with Time-Dependent Latent Confounders

Dec 12, 2023

Abstract: Causal inference from longitudinal observational data is a challenging problem due to the difficulty in correctly identifying the time-dependent confounders, especially in the presence of latent time-dependent confounders. An instrumental variable (IV) is a powerful tool for addressing the latent confounders issue, but the traditional IV technique cannot deal with latent time-dependent confounders in longitudinal studies. In this work, we propose a novel Time-dependent Instrumental Factor Model (TIFM) for time-varying causal effect estimation from data with latent time-dependent confounders. At each time-step, the proposed TIFM method employs the Recurrent Neural Network (RNN) architecture to infer a latent IV, and then uses the inferred latent IV factor for addressing the confounding bias caused by the latent time-dependent confounders. We provide a theoretical analysis for the proposed TIFM method regarding causal effect estimation in longitudinal data. Extensive evaluation with synthetic datasets demonstrates the effectiveness of TIFM in addressing causal effect estimation over time. We further apply TIFM to a climate dataset to showcase the potential of the proposed method in tackling real-world problems.

Conditional Instrumental Variable Regression with Representation Learning for Causal Inference

Oct 03, 2023

Abstract: This paper studies the challenging problem of estimating causal effects from observational data in the presence of unobserved confounders. The two-stage least squares (TSLS) method and its variants with a standard instrumental variable (IV) are commonly used to eliminate confounding bias, including the bias caused by unobserved confounders, but they rely on the linearity assumption. Besides, the strict condition of unconfounded instruments imposed on a standard IV is too strong to be practical. To address these challenging and practical problems of the standard IV method (the linearity assumption and the strict condition), in this paper, we use a conditional IV (CIV) to relax the unconfounded instrument condition of the standard IV and propose a non-linear CIV regression with Confounding Balancing Representation Learning, CBRL.CIV, for jointly eliminating the confounding bias from unobserved confounders and balancing the observed confounders, without the linearity assumption. We theoretically demonstrate the soundness of CBRL.CIV. Extensive experiments on synthetic and two real-world datasets show the competitive performance of CBRL.CIV against state-of-the-art IV-based estimators and its superiority in dealing with the non-linear situation.
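For readers unfamiliar with the linear TSLS baseline that this abstract contrasts against, the following is a minimal textbook sketch with one instrument and one treatment. It is not CBRL.CIV. The toy data, the helper `ols_fit`, and the variable names are assumptions chosen so that the unobserved confounder `u` is exactly uncorrelated with the instrument `z` in the sample and the true effect of `x` on `y` is 2.

```python
from typing import List, Sequence, Tuple


def ols_fit(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Simple least-squares fit of y = intercept + slope * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return my - slope * mx, slope


# Toy data (assumed): z is the instrument, u an unobserved confounder.
z = [0.0, 0.0, 1.0, 1.0]
u = [0.0, 1.0, 0.0, 1.0]
x = [zi + ui for zi, ui in zip(z, u)]            # treatment depends on z and u
y = [2.0 * xi + 3.0 * ui for xi, ui in zip(x, u)]  # true effect of x is 2

# Naive regression of y on x is biased because u affects both x and y.
_, naive_slope = ols_fit(x, y)

# Stage 1: regress the treatment on the instrument and keep the fitted values.
a0, a1 = ols_fit(z, x)
x_hat: List[float] = [a0 + a1 * zi for zi in z]

# Stage 2: regress the outcome on the fitted treatment values.
_, tsls_slope = ols_fit(x_hat, y)

print(naive_slope, tsls_slope)
```

On this toy data the naive slope overstates the effect, while the second-stage slope recovers it. The sketch relies on both stages being linear and on the instrument being unconfounded, which are the two assumptions the abstract says the proposed method relaxes.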

Learning Conditional Instrumental Variable Representation for Causal Effect Estimation

Jun 21, 2023

Abstract: One of the fundamental challenges in causal inference is to estimate the causal effect of a treatment on its outcome of interest from observational data. However, causal effect estimation often suffers from the impacts of confounding bias caused by unmeasured confounders that affect both the treatment and the outcome. The instrumental variable (IV) approach is a powerful way to eliminate the confounding bias from latent confounders. However, the existing IV-based estimators require a nominated IV, and for a conditional IV (CIV) the corresponding conditioning set too, for causal effect estimation. This limits the application of IV-based estimators. In this paper, by leveraging the advantage of disentangled representation learning, we propose a novel method, named DVAE.CIV, for learning and disentangling the representations of a CIV and the representations of its conditioning set for causal effect estimation from data with latent confounders. Extensive experimental results on both synthetic and real-world datasets demonstrate the superiority of the proposed DVAE.CIV method against the existing causal effect estimators.

Linking a predictive model to causal effect estimation

Apr 10, 2023

Abstract: A predictive model makes outcome predictions based on some given features, i.e., it estimates the conditional probability of the outcome given a feature vector. In general, a predictive model cannot estimate the causal effect of a feature on the outcome, i.e., how the outcome will change if the feature is changed while keeping the values of other features unchanged. This is because causal effect estimation requires interventional probabilities. However, many real-world problems, such as personalised decision making, recommendation, and fairness computing, need to know the causal effect of any feature on the outcome for a given instance. This is different from the traditional causal effect estimation problem with a fixed treatment variable. This paper first tackles the challenge of estimating the causal effect of any feature (as the treatment) on the outcome w.r.t. a given instance. The theoretical results naturally link a predictive model to causal effect estimation and imply that a predictive model is causally interpretable when the conditions identified in the paper are satisfied. The paper also reveals the robust property of a causally interpretable model. We use experiments to demonstrate that various types of predictive models, when satisfying the conditions identified in this paper, can estimate the causal effects of features as accurately as state-of-the-art causal effect estimation methods. We also show the potential of such causally interpretable predictive models for robust predictions and personalised decision making.

Causal Inference with Conditional Instruments using Deep Generative Models

Nov 29, 2022

Abstract: The instrumental variable (IV) approach is a widely used way to estimate the causal effects of a treatment on an outcome of interest from observational data with latent confounders. A standard IV is expected to be related to the treatment variable and independent of all other variables in the system. However, it is challenging to search for a standard IV from data directly due to the strict conditions. The conditional IV (CIV) method has been proposed to allow a variable to be an instrument conditioning on a set of variables, allowing a wider choice of possible IVs and enabling broader practical applications of the IV approach. Nevertheless, there is no data-driven method to discover a CIV and its conditioning set directly from data. To fill this gap, in this paper, we propose to learn the representations of the information of a CIV and its conditioning set from data with latent confounders for average causal effect estimation. By taking advantage of deep generative models, we develop a novel data-driven approach for simultaneously learning the representation of a CIV from measured variables and generating the representation of its conditioning set given measured variables. Extensive experiments on synthetic and real-world datasets show that our method outperforms the existing IV methods.

Data-Driven Causal Effect Estimation Based on Graphical Causal Modelling: A Survey

Aug 20, 2022

Abstract: In many fields of scientific research and real-world applications, unbiased estimation of causal effects from non-experimental data is crucial for understanding the mechanism underlying the data and for decision-making on effective responses or interventions. A great deal of research has been conducted on this challenging problem from different angles. For causal effect estimation in data, assumptions such as the Markov property, faithfulness and causal sufficiency are always made. Under these assumptions, full knowledge, such as a set of covariates or an underlying causal graph, is still required. A practical challenge is that in many applications, no such full knowledge or only some partial knowledge is available. In recent years, research has emerged that uses a search strategy based on graphical causal modelling to discover useful knowledge from data for causal effect estimation, with some mild assumptions, and has shown promise in tackling the practical challenge. In this survey, we review the methods and focus on the challenges the data-driven methods face. We discuss the assumptions, strengths and limitations of the data-driven methods. We hope this review will motivate more researchers to design better data-driven methods based on graphical causal modelling for the challenging problem of causal effect estimation.
