Debo Cheng

University of South Australia

DeNoise: Learning Robust Graph Representations for Unsupervised Graph-Level Anomaly Detection

Nov 06, 2025

Abstract:With the rapid growth of graph-structured data in critical domains, unsupervised graph-level anomaly detection (UGAD) has become a pivotal task. UGAD seeks to identify entire graphs that deviate from normal behavioral patterns. However, most Graph Neural Network (GNN) approaches implicitly assume that the training set is clean, containing only normal graphs, which is rarely true in practice. Even modest contamination by anomalous graphs can distort learned representations and sharply degrade performance. To address this challenge, we propose DeNoise, a robust UGAD framework explicitly designed for contaminated training data. It jointly optimizes a graph-level encoder, an attribute decoder, and a structure decoder via an adversarial objective to learn noise-resistant embeddings. Further, DeNoise introduces an encoder anchor-alignment denoising mechanism that fuses high-information node embeddings from normal graphs into all graph embeddings, improving representation quality while suppressing anomaly interference. A contrastive learning component then compacts normal graph embeddings and repels anomalous ones in the latent space. Extensive experiments on eight real-world datasets demonstrate that DeNoise consistently learns reliable graph-level representations under varying noise intensities and significantly outperforms state-of-the-art UGAD baselines.

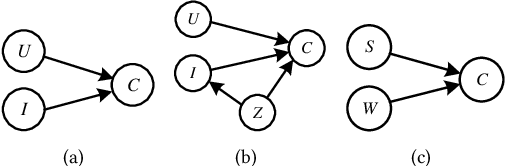

A Novel Generative Model with Causality Constraint for Mitigating Biases in Recommender Systems

May 22, 2025

Abstract:Accurately predicting counterfactual user feedback is essential for building effective recommender systems. However, latent confounding bias can obscure the true causal relationship between user feedback and item exposure, ultimately degrading recommendation performance. Existing causal debiasing approaches often rely on strong assumptions, such as the availability of instrumental variables (IVs) or strong correlations between latent confounders and proxy variables, that are rarely satisfied in real-world scenarios. To address these limitations, we propose a novel generative framework called Latent Causality Constraints for Debiasing representation learning in Recommender Systems (LCDR). Specifically, LCDR leverages an identifiable Variational Autoencoder (iVAE) as a causal constraint to align the latent representations learned by a standard Variational Autoencoder (VAE) through a unified loss function. This alignment allows the model to leverage even weak or noisy proxy variables to recover latent confounders effectively. The resulting representations are then used to improve recommendation performance. Extensive experiments on three real-world datasets demonstrate that LCDR consistently outperforms existing methods in both mitigating bias and improving recommendation accuracy.

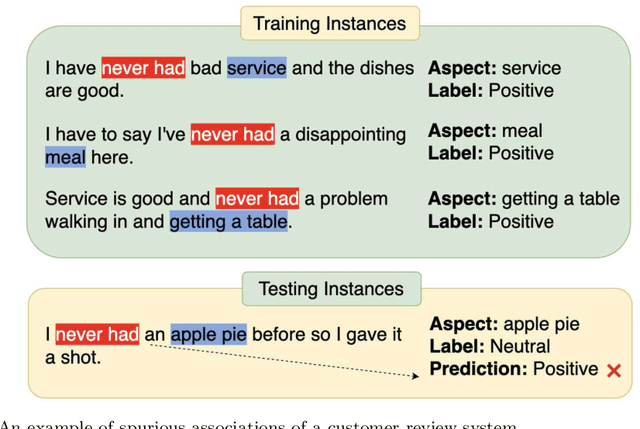

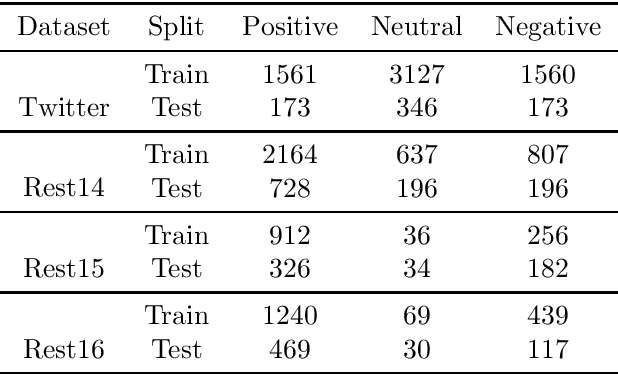

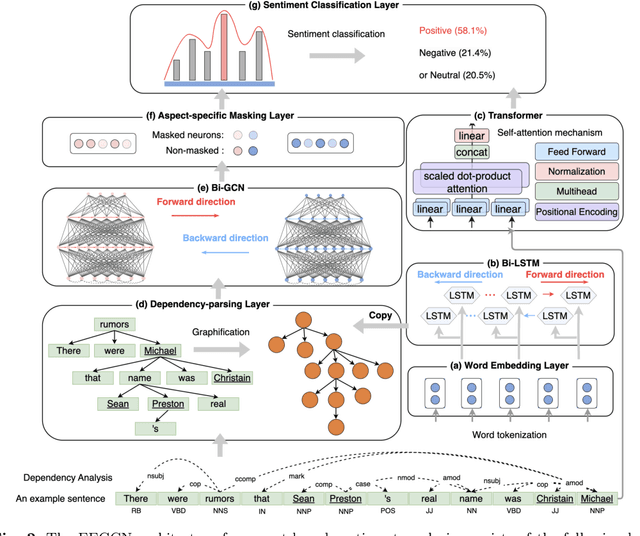

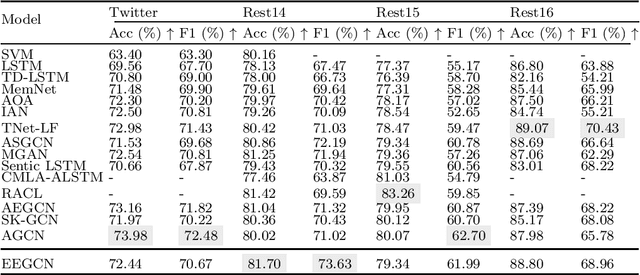

Leveraging Deep Neural Networks for Aspect-Based Sentiment Classification

Mar 17, 2025

Abstract:Aspect-based sentiment analysis seeks to determine sentiment with a high level of detail. While graph convolutional networks (GCNs) are commonly used for extracting sentiment features, their straightforward use in syntactic feature extraction can lead to a loss of crucial information. This paper presents a novel edge-enhanced GCN, called EEGCN, which improves performance by preserving feature integrity as it processes syntactic graphs. We incorporate a bidirectional long short-term memory (Bi-LSTM) network alongside a self-attention-based transformer for effective text encoding, ensuring the retention of long-range dependencies. A bidirectional GCN (Bi-GCN) with message passing then captures the relationships between entities, while an aspect-specific masking technique removes extraneous information. Extensive evaluations and ablation studies on four benchmark datasets show that EEGCN significantly enhances aspect-based sentiment analysis, overcoming issues with syntactic feature extraction and advancing the field's methodologies.

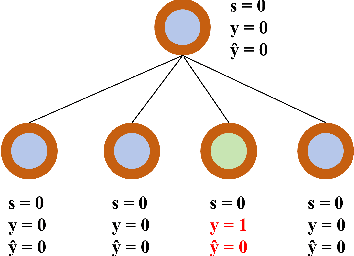

Counterfactual Samples Constructing and Training for Commonsense Statements Estimation

Dec 29, 2024

Abstract:Plausibility Estimation (PE) plays a crucial role in enabling language models to objectively comprehend the real world. Although large language models (LLMs) demonstrate remarkable capabilities in PE tasks, they sometimes produce trivial commonsense errors due to the complexity of commonsense knowledge. They lack two key traits of an ideal PE model: a) Language-explainable: relying on critical word segments for decisions, and b) Commonsense-sensitive: detecting subtle linguistic variations in commonsense. To address these issues, we propose a novel model-agnostic method, referred to as Commonsense Counterfactual Samples Generating (CCSG). By training PE models with CCSG, we encourage them to focus on critical words, thereby enhancing both their language-explainable and commonsense-sensitive capabilities. Specifically, CCSG generates counterfactual samples by strategically replacing key words and introducing low-level dropout within sentences. These counterfactual samples are then incorporated into a sentence-level contrastive training framework to further enhance the model's learning process. Experimental results across nine diverse datasets demonstrate the effectiveness of CCSG in addressing commonsense reasoning challenges, with CCSG showing a 3.07% improvement over state-of-the-art (SOTA) methods.

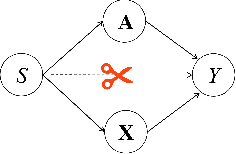

Toward Fair Graph Neural Networks Via Dual-Teacher Knowledge Distillation

Nov 30, 2024

Abstract:Graph Neural Networks (GNNs) have demonstrated strong performance in graph representation learning across various real-world applications. However, they often produce biased predictions caused by sensitive attributes, such as religion or gender, an issue that has been largely overlooked in existing methods. Recently, numerous studies have focused on reducing biases in GNNs. However, these approaches often rely on training with partial data (e.g., using either node features or graph structure alone), which can enhance fairness but frequently compromises model utility due to the limited utilization of available graph information. To address this tradeoff, we propose an effective strategy to balance fairness and utility in knowledge distillation. Specifically, we introduce FairDTD, a novel Fair representation learning framework built on Dual-Teacher Distillation, leveraging a causal graph model to guide and optimize the design of the distillation process. FairDTD employs two fairness-oriented teacher models, a feature teacher and a structure teacher, to facilitate dual distillation, with the student model learning fairness knowledge from the teachers while also leveraging full data to mitigate utility loss. To enhance information transfer, we incorporate graph-level distillation to provide an indirect supplement of graph information during training, as well as a node-specific temperature module to improve the comprehensive transfer of fair knowledge. Experiments on diverse benchmark datasets demonstrate that FairDTD achieves optimal fairness while preserving high model utility, showcasing its effectiveness in fair representation learning for GNNs.

Learning Time-Varying Instruments for Identifying Causal Effects in Time-Series Data

Nov 26, 2024

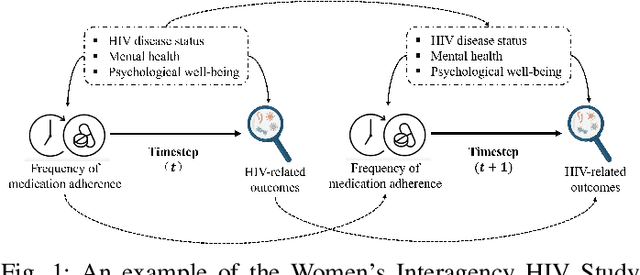

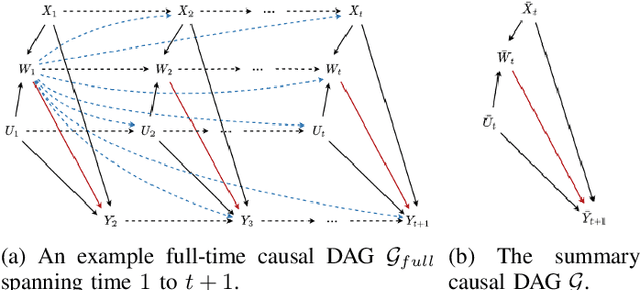

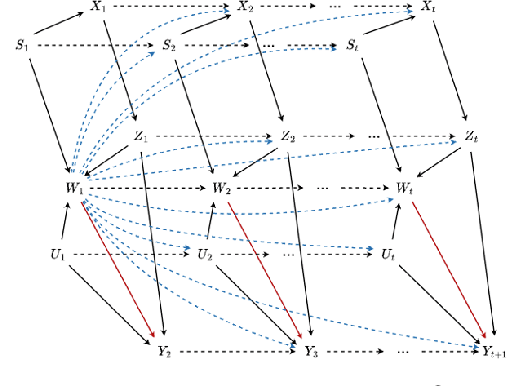

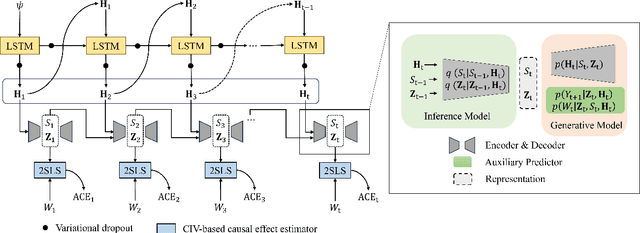

Abstract:Querying causal effects from time-series data is important across various fields, including healthcare, economics, climate science, and epidemiology. However, this task becomes complex in the presence of time-varying latent confounders, which affect both treatment and outcome variables over time and can introduce bias in causal effect estimation. Traditional instrumental variable (IV) methods are limited in addressing such complexities because they require predefined IVs or rely on strong assumptions that do not hold in dynamic settings. To tackle these issues, we develop a novel method, Time-varying Conditional Instrumental Variables (CIV) for Debiasing causal effect estimation, referred to as TDCIV. TDCIV leverages Long Short-Term Memory (LSTM) and Variational Autoencoder (VAE) models to disentangle and learn the representations of time-varying CIV and its conditioning set from proxy variables without prior knowledge. Under the assumptions of the Markov property and availability of proxy variables, we theoretically establish the validity of these learned representations for addressing the biases from time-varying latent confounders, thus enabling accurate causal effect estimation. Our proposed TDCIV is the first to effectively learn time-varying CIV and its associated conditioning set without relying on domain-specific knowledge.
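As background for the instrumental variable idea mentioned in this abstract, the following is a minimal sketch of the classical, static two-stage least squares (2SLS) estimator on simulated data. It is not TDCIV: there is no LSTM, no VAE, and no time-varying structure. The data-generating process, the variable names, and the helper `ols_slope` are assumptions made purely for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 20_000
true_effect = 2.0  # assumed ground-truth causal effect of t on y

z = rng.normal(size=n)  # instrument: affects y only through t
u = rng.normal(size=n)  # latent confounder: affects both t and y
t = z + u + rng.normal(size=n)                      # treatment
y = true_effect * t + 3.0 * u + rng.normal(size=n)  # outcome


def ols_slope(x: np.ndarray, target: np.ndarray) -> float:
    """Slope of a one-variable least-squares fit of target on x."""
    x_centered = x - x.mean()
    return float(x_centered @ (target - target.mean()) / (x_centered @ x_centered))


# Naive regression of y on t is biased because u is unobserved.
naive_estimate = ols_slope(t, y)

# Stage 1: predict the treatment from the instrument.
stage1_slope = ols_slope(z, t)
t_hat = t.mean() + stage1_slope * (z - z.mean())

# Stage 2: regress the outcome on the predicted treatment.
iv_estimate = ols_slope(t_hat, y)

print(f"naive OLS estimate: {naive_estimate:.3f}")
print(f"2SLS estimate:      {iv_estimate:.3f}")
```

In this simulation the naive estimate absorbs part of the confounder's influence, while the 2SLS estimate stays close to the assumed true effect. That only works because a valid instrument `z` is given in advance, which is the requirement the abstract describes as limiting.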

Linking Model Intervention to Causal Interpretation in Model Explanation

Oct 21, 2024

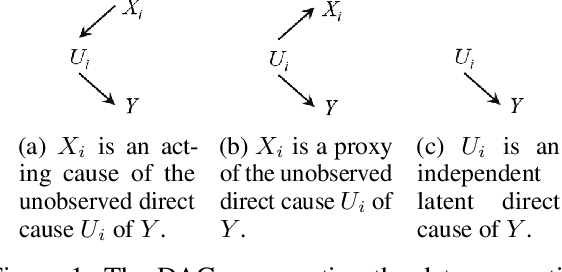

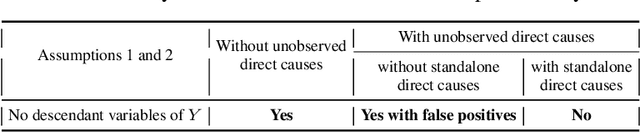

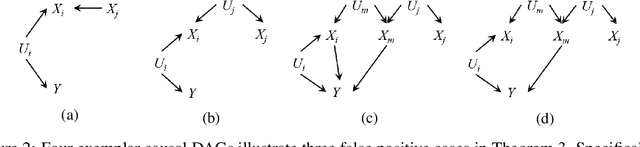

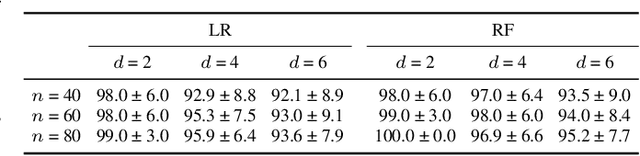

Abstract:Intervention intuition is often used in model explanation, where the intervention effect of a feature on the outcome is quantified by the difference in a model's prediction when the feature value is changed from its current value to a baseline value. Such a model intervention effect of a feature is inherently associational. In this paper, we study the conditions under which an intuitive model intervention effect has a causal interpretation, i.e., when it indicates whether a feature is a direct cause of the outcome. This work links the model intervention effect to the causal interpretation of a model. Such an interpretation capability is important since it indicates whether a machine learning model is trustworthy to domain experts. The conditions also reveal the limitations of using a model intervention effect for causal interpretation in an environment with unobserved features. Experiments on semi-synthetic datasets have been conducted to validate the theorems and show the potential for using the model intervention effect for model interpretation.
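The model intervention effect described in this abstract can be illustrated with a minimal Python sketch. The function name `model_intervention_effect`, the toy linear model, and the all-zeros baseline are hypothetical choices for illustration and are not taken from the paper.

```python
from typing import Callable, List, Sequence


def model_intervention_effect(
    predict: Callable[[Sequence[float]], float],
    x: Sequence[float],
    feature: int,
    baseline_value: float,
) -> float:
    """Prediction at the current input minus the prediction after one feature
    is set to its baseline value, with all other features held fixed."""
    x_baseline: List[float] = list(x)
    x_baseline[feature] = baseline_value
    return predict(x) - predict(x_baseline)


# Hypothetical toy model: y = 2*x0 + 0.5*x1; the third feature is ignored.
def toy_predict(x: Sequence[float]) -> float:
    return 2.0 * x[0] + 0.5 * x[1]


x = [3.0, 4.0, 10.0]
effects = [model_intervention_effect(toy_predict, x, j, 0.0) for j in range(3)]
print(effects)  # one effect per feature, relative to a baseline of 0.0
```

A nonzero value only shows that the model's output depends on that feature. Whether it can be read causally depends on the conditions the paper studies, which this sketch does not check.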

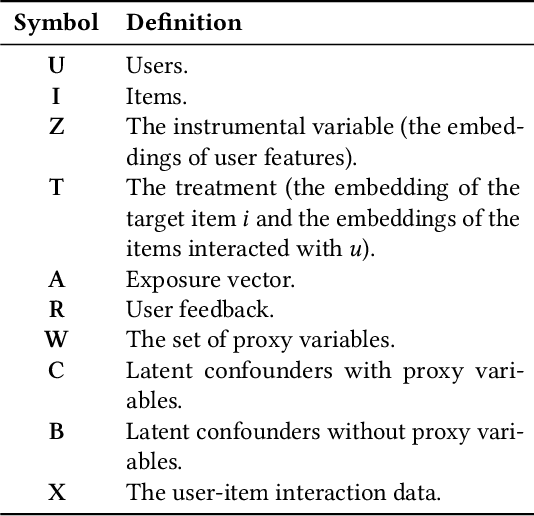

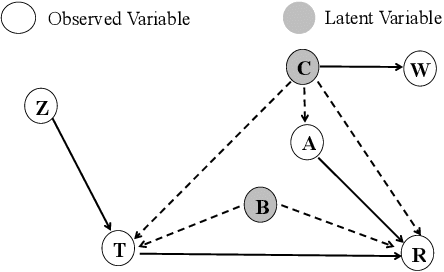

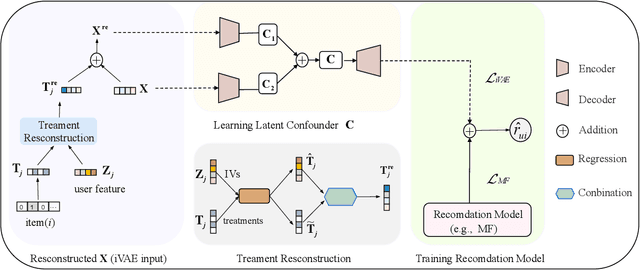

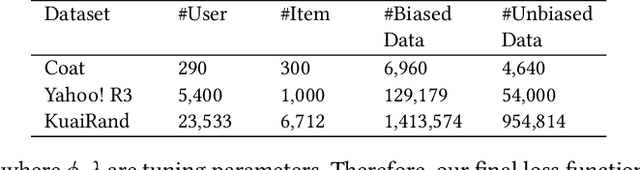

Mitigating Dual Latent Confounding Biases in Recommender Systems

Oct 16, 2024

Abstract:Recommender systems are extensively utilised across various areas to predict user preferences for personalised experiences and enhanced user engagement and satisfaction. Traditional recommender systems, however, are complicated by confounding bias, particularly in the presence of latent confounders that affect both item exposure and user feedback. Existing debiasing methods often fail to capture the complex interactions caused by latent confounders in interaction data, especially when dual latent confounders affect both the user and item sides. To address this, we propose a novel debiasing method that jointly integrates the Instrumental Variables (IV) approach and identifiable Variational Auto-Encoder (iVAE) for Debiased representation learning in Recommendation systems, referred to as IViDR. Specifically, IViDR leverages the embeddings of user features as IVs to address confounding bias caused by latent confounders between items and user feedback, and reconstructs the embedding of items to obtain debiased interaction data. Moreover, IViDR employs an Identifiable Variational Auto-Encoder (iVAE) to infer identifiable representations of latent confounders between item exposure and user feedback from both the original and debiased interaction data. Additionally, we provide theoretical analyses of the soundness of using IV and the identifiability of the latent representations. Extensive experiments on both synthetic and real-world datasets demonstrate that IViDR outperforms state-of-the-art models in reducing bias and providing reliable recommendations.

Multi-Cause Deconfounding for Recommender Systems with Latent Confounders

Oct 16, 2024

Abstract:In recommender systems, various latent confounding factors (e.g., user social environment and item public attractiveness) can affect user behavior, item exposure, and feedback in distinct ways. These factors may directly or indirectly impact user feedback and are often shared across items or users, making them multi-cause latent confounders. However, existing methods typically fail to account for latent confounders between users and their feedback, as well as those between items and user feedback simultaneously. To address the problem of multi-cause latent confounders, we propose a multi-cause deconfounding method for recommender systems with latent confounders (MCDCF). MCDCF leverages multi-cause causal effect estimation to learn substitutes for latent confounders associated with both users and items, using user behaviour data. Specifically, MCDCF treats the multiple items that users interact with and the multiple users that interact with items as treatment variables, enabling it to learn substitutes for the latent confounders that influence the estimation of causality between users and their feedback, as well as between items and user feedback. Additionally, we theoretically demonstrate the soundness of our MCDCF method. Extensive experiments on three real-world datasets demonstrate that our MCDCF method effectively recovers latent confounders related to users and items, reducing bias and thereby improving recommendation accuracy.

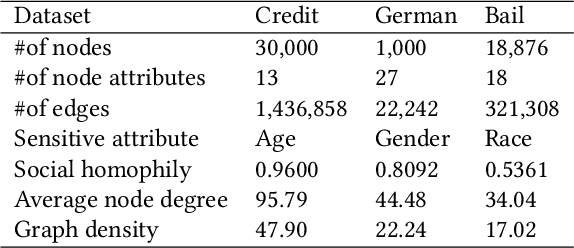

Towards Fair Graph Representation Learning in Social Networks

Oct 15, 2024

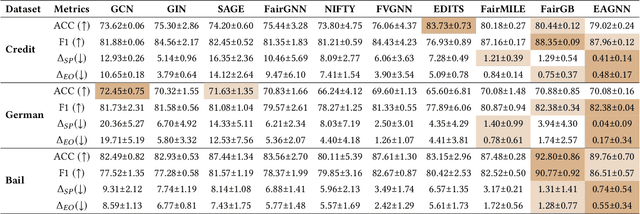

Abstract:With the widespread use of Graph Neural Networks (GNNs) for representation learning from network data, the fairness of GNN models has attracted considerable attention lately. Fair GNNs aim to ensure that node representations can be accurately classified, but not easily associated with a specific group. Existing advanced approaches essentially enhance the generalisation of node representations by combining them with data augmentation strategies, and do not directly impose constraints on the fairness of GNNs. In this work, we identify that a fundamental reason for the unfairness of GNNs in social network learning is the phenomenon of social homophily, i.e., users in the same group are more inclined to congregate. The message-passing mechanism of GNNs can cause users in the same group to have similar representations due to social homophily, leading model predictions to establish spurious correlations with sensitive attributes. Motivated by this observation, we propose a method called Equity-Aware GNN (EAGNN) for fair graph representation learning. Specifically, to ensure that model predictions are independent of sensitive attributes while maintaining prediction performance, we introduce constraints for fair representation learning based on three principles: sufficiency, independence, and separation. We theoretically demonstrate that our EAGNN method can effectively achieve group fairness. Extensive experiments on three datasets with varying levels of social homophily show that our EAGNN method achieves state-of-the-art performance across two fairness metrics and offers competitive effectiveness.
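The abstract does not name its two fairness metrics. As an illustration of the independence and separation principles it lists, here is a minimal sketch of two commonly used group fairness measures, statistical parity difference and equal opportunity difference. It assumes a binary sensitive attribute and binary predictions; the function names and toy data are hypothetical and not taken from the paper.

```python
from typing import Sequence


def _rate(values: Sequence[int]) -> float:
    """Fraction of positive (1) entries; the sequence must be non-empty."""
    return sum(values) / len(values)


def statistical_parity_difference(
    y_pred: Sequence[int], group: Sequence[int]
) -> float:
    """|P(pred=1 | group=0) - P(pred=1 | group=1)| (independence)."""
    preds0 = [p for p, g in zip(y_pred, group) if g == 0]
    preds1 = [p for p, g in zip(y_pred, group) if g == 1]
    return abs(_rate(preds0) - _rate(preds1))


def equal_opportunity_difference(
    y_true: Sequence[int], y_pred: Sequence[int], group: Sequence[int]
) -> float:
    """Gap in true-positive rate between the two groups (separation,
    restricted to the positive class)."""
    pos0 = [p for t, p, g in zip(y_true, y_pred, group) if g == 0 and t == 1]
    pos1 = [p for t, p, g in zip(y_true, y_pred, group) if g == 1 and t == 1]
    return abs(_rate(pos0) - _rate(pos1))


# Hypothetical toy data: eight nodes, four per sensitive group.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
group = [0, 0, 0, 0, 1, 1, 1, 1]

spd = statistical_parity_difference(y_pred, group)
eod = equal_opportunity_difference(y_true, y_pred, group)
print(spd, eod)
```

Both quantities are zero for a perfectly group-fair classifier under the respective criterion, so smaller values indicate fairer predictions.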
