Towards Fair Graph Representation Learning in Social Networks

Paper and Code

Oct 15, 2024

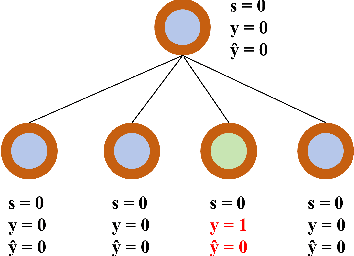

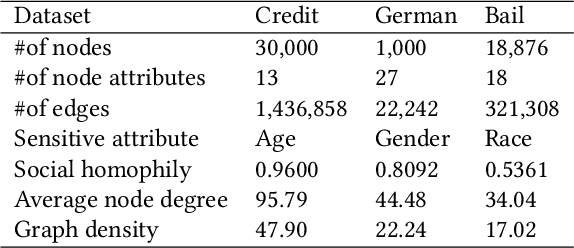

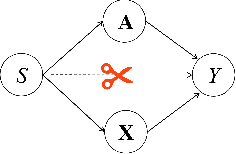

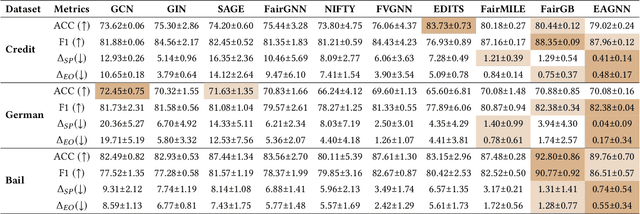

With the widespread use of Graph Neural Networks (GNNs) for representation learning from network data, the fairness of GNN models has raised great attention lately. Fair GNNs aim to ensure that node representations can be accurately classified, but not easily associated with a specific group. Existing advanced approaches essentially enhance the generalisation of node representation in combination with data augmentation strategy, and do not directly impose constraints on the fairness of GNNs. In this work, we identify that a fundamental reason for the unfairness of GNNs in social network learning is the phenomenon of social homophily, i.e., users in the same group are more inclined to congregate. The message-passing mechanism of GNNs can cause users in the same group to have similar representations due to social homophily, leading model predictions to establish spurious correlations with sensitive attributes. Inspired by this reason, we propose a method called Equity-Aware GNN (EAGNN) towards fair graph representation learning. Specifically, to ensure that model predictions are independent of sensitive attributes while maintaining prediction performance, we introduce constraints for fair representation learning based on three principles: sufficiency, independence, and separation. We theoretically demonstrate that our EAGNN method can effectively achieve group fairness. Extensive experiments on three datasets with varying levels of social homophily illustrate that our EAGNN method achieves the state-of-the-art performance across two fairness metrics and offers competitive effectiveness.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge