Qiuguo Zhu

PUMA: Perception-driven Unified Foothold Prior for Mobility Augmented Quadruped Parkour

Jan 22, 2026

Abstract:Parkour tasks for quadrupeds have emerged as a promising benchmark for agile locomotion. While human athletes can effectively perceive environmental characteristics to select appropriate footholds for obstacle traversal, endowing legged robots with similar perceptual reasoning remains a significant challenge. Existing methods often rely on hierarchical controllers that follow pre-computed footholds, thereby constraining the robot's real-time adaptability and the exploratory potential of reinforcement learning. To overcome these challenges, we present PUMA, an end-to-end learning framework that integrates visual perception and foothold priors into a single-stage training process. This approach leverages terrain features to estimate egocentric polar foothold priors, composed of relative distance and heading, guiding the robot in active posture adaptation for parkour tasks. Extensive experiments conducted in simulation and real-world environments across various discrete complex terrains demonstrate PUMA's exceptional agility and robustness in challenging scenarios.
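As a rough illustration of what an egocentric polar foothold prior (relative distance and heading) could look like numerically, the sketch below converts a planar foothold position into that representation. The function name `egocentric_polar`, the 2D simplification, and the choice of wrapping the heading to [-pi, pi) are assumptions made for illustration only; the abstract does not specify how PUMA computes or estimates these quantities.

```python
import math


def egocentric_polar(robot_xy, robot_yaw, foothold_xy):
    """Return (distance, heading) of a foothold relative to the robot.

    Hypothetical helper: positions are planar (x, y) in a shared world
    frame, robot_yaw is the robot's heading in radians.
    """
    dx = foothold_xy[0] - robot_xy[0]
    dy = foothold_xy[1] - robot_xy[1]

    # Relative distance from the robot base to the foothold.
    distance = math.hypot(dx, dy)

    # World-frame bearing minus robot yaw gives the egocentric heading;
    # wrap the result into [-pi, pi).
    heading = math.atan2(dy, dx) - robot_yaw
    heading = (heading + math.pi) % (2.0 * math.pi) - math.pi
    return distance, heading


if __name__ == "__main__":
    # Robot at the origin facing +y; foothold two metres straight ahead.
    d, h = egocentric_polar((0.0, 0.0), math.pi / 2, (0.0, 2.0))
    print(f"distance={d:.2f} m, heading={h:.2f} rad")
```

A polar form like this keeps the target expressed relative to the robot's own pose, which is one plausible reason to prefer it over world-frame coordinates as a policy input.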

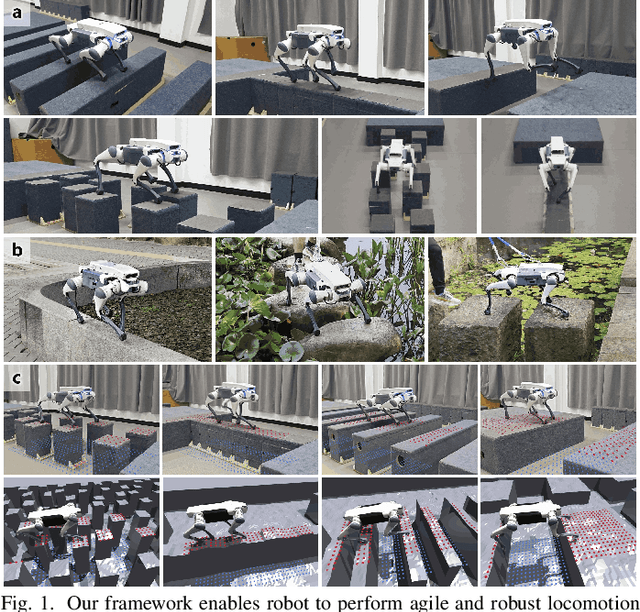

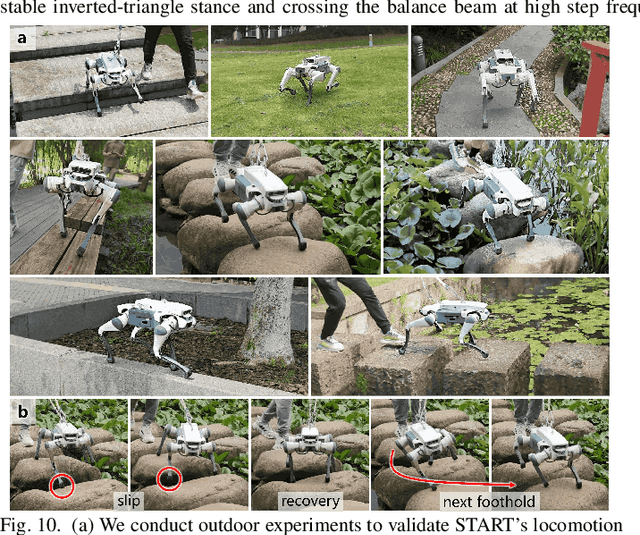

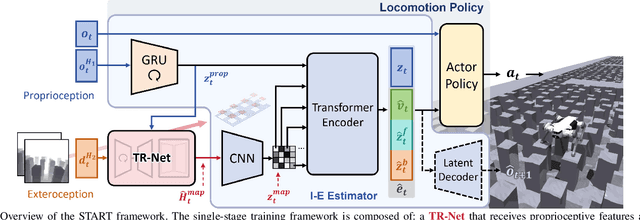

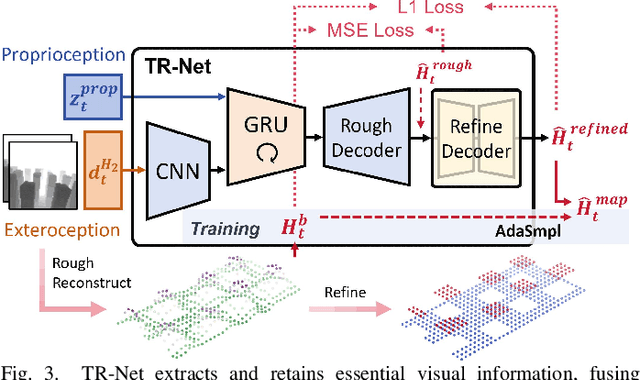

START: Traversing Sparse Footholds with Terrain Reconstruction

Dec 15, 2025

Abstract:Traversing terrains with sparse footholds, as legged animals do, presents a promising yet challenging task for quadruped robots, as it requires precise environmental perception and agile control to secure safe foot placement while maintaining dynamic stability. Model-based hierarchical controllers excel in laboratory settings, but suffer from limited generalization and overly conservative behaviors. End-to-end learning-based approaches unlock greater flexibility and adaptability, but existing state-of-the-art methods either rely on heightmaps that introduce noise and complex, costly pipelines, or implicitly infer terrain features from egocentric depth images, often missing critical geometric cues and leading to inefficient learning and rigid gaits. To overcome these limitations, we propose START, a single-stage learning framework that enables agile, stable locomotion on highly sparse and randomized footholds. START leverages only low-cost onboard vision and proprioception to accurately reconstruct a local terrain heightmap, providing an explicit intermediate representation to convey essential features relevant to sparse foothold regions. This supports comprehensive environmental understanding and precise terrain assessment, reducing exploration cost and accelerating skill acquisition. Experimental results demonstrate that START achieves zero-shot transfer across diverse real-world scenarios, showcasing superior adaptability, precise foothold placement, and robust locomotion.

A Hierarchical Region-Based Approach for Efficient Multi-Robot Exploration

Mar 17, 2025

Abstract:Multi-robot autonomous exploration in an unknown environment is an important application in robotics. Traditional exploration methods only use information around frontier points or viewpoints, ignoring spatial information of unknown areas. Moreover, finding the exact optimal solution for multi-robot task allocation is NP-hard, resulting in significant computational time consumption. To address these issues, we present a hierarchical multi-robot exploration framework using a new modeling method called RegionGraph. The proposed approach makes two main contributions: 1) A new modeling method for unexplored areas that preserves their spatial information across the entire space in a weighted graph called RegionGraph. 2) A hierarchical multi-robot exploration framework that decomposes the global exploration task into smaller subtasks, reducing the frequency of global planning and enabling asynchronous exploration. The proposed method is validated through both simulation and real-world experiments, demonstrating a 20% improvement in efficiency compared to existing methods.

MOVE: Multi-skill Omnidirectional Legged Locomotion with Limited View in 3D Environments

Dec 04, 2024

Abstract:Legged robots possess inherent advantages in traversing complex 3D terrains. However, previous work on low-cost quadruped robots with egocentric vision systems has been limited by a narrow front-facing view and exteroceptive noise, restricting omnidirectional mobility in such environments. While building a voxel map through a hierarchical structure can refine exteroception processing, it introduces significant computational overhead, noise, and delays. In this paper, we present MOVE, a one-stage end-to-end learning framework capable of multi-skill omnidirectional legged locomotion with limited view in 3D environments, much as a real animal can. When movement aligns with the robot's line of sight, exteroceptive perception enhances locomotion, enabling extreme climbing and leaping. When vision is obstructed or the direction of movement lies outside the robot's field of view, the robot relies on proprioception for tasks like crawling and climbing stairs. We integrate all these skills into a single neural network by introducing a pseudo-siamese network structure combining supervised and contrastive learning, which helps the robot infer its surroundings beyond its field of view. Experiments in both simulation and real-world scenarios demonstrate the robustness of our method, broadening the operational environments for robots with egocentric vision.

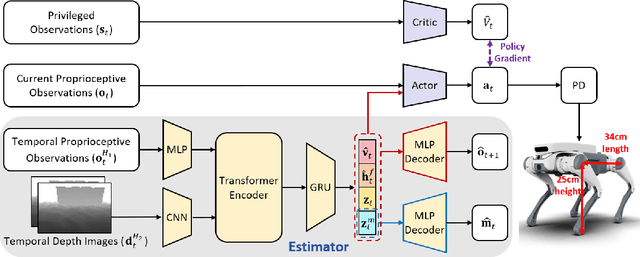

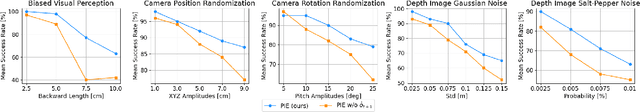

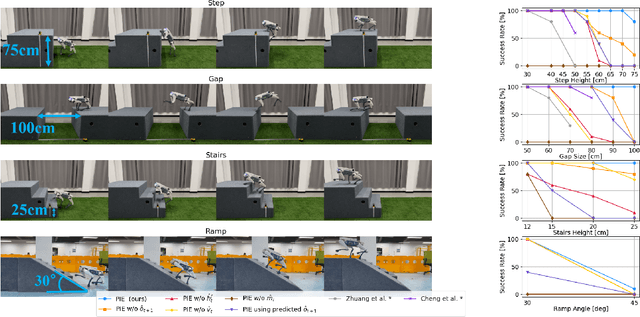

PIE: Parkour with Implicit-Explicit Learning Framework for Legged Robots

Aug 27, 2024

Abstract:Parkour presents a highly challenging task for legged robots, requiring them to traverse various terrains with agile and smooth locomotion. This necessitates comprehensive understanding of both the robot's own state and the surrounding terrain, despite the inherent unreliability of robot perception and actuation. Current state-of-the-art methods either rely on complex pre-trained high-level terrain reconstruction modules or limit the maximum potential of robot parkour to avoid failure due to inaccurate perception. In this paper, we propose a one-stage end-to-end learning-based parkour framework: Parkour with Implicit-Explicit learning framework for legged robots (PIE) that leverages dual-level implicit-explicit estimation. With this mechanism, even a low-cost quadruped robot equipped with an unreliable egocentric depth camera can achieve exceptional performance on challenging parkour terrains using a relatively simple training process and reward function. While the training process is conducted entirely in simulation, our real-world validation demonstrates successful zero-shot deployment of our framework, showcasing superior parkour performance on harsh terrains.

Toward Understanding Key Estimation in Learning Robust Humanoid Locomotion

Mar 09, 2024

Abstract:Accurate state estimation plays a critical role in ensuring the robust control of humanoid robots, particularly in the context of learning-based control policies for legged robots. However, there is a notable gap in analytical research concerning estimations. Therefore, we endeavor to further understand how various types of estimations influence the decision-making processes of policies. In this paper, we provide quantitative insight into the effectiveness of learned state estimations, employing saliency analysis to identify key estimation variables and optimize their combination for humanoid locomotion tasks. Evaluations assessing tracking precision and robustness are conducted on comparative groups of policies with varying estimation combinations in both simulated and real-world environments. Results validated that the proposed policy is capable of crossing the sim-to-real gap and demonstrating superior performance relative to alternative policy configurations.

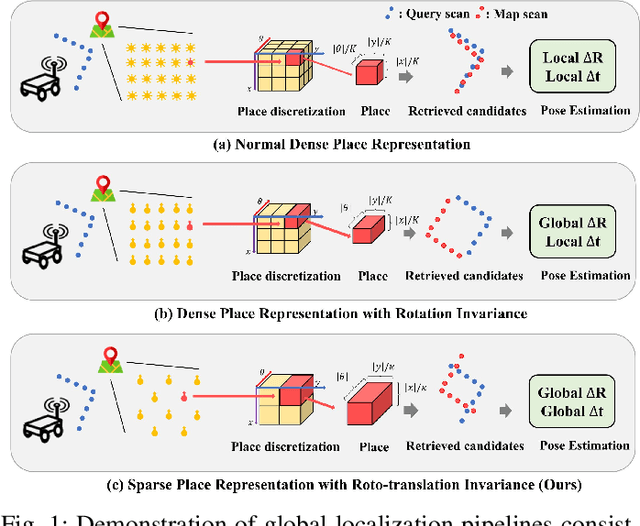

RING++: Roto-translation Invariant Gram for Global Localization on a Sparse Scan Map

Oct 12, 2022

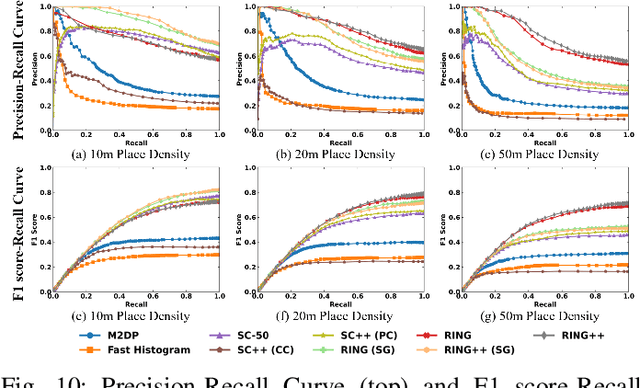

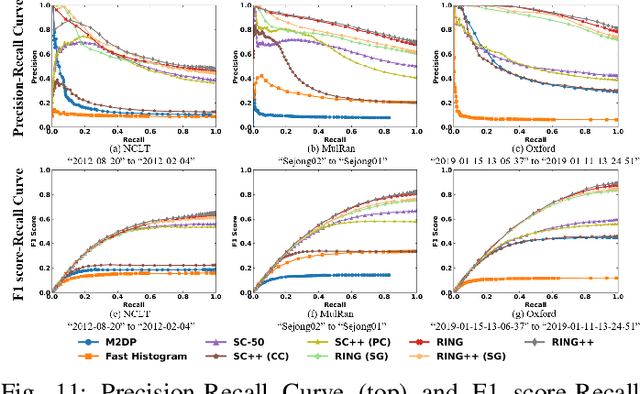

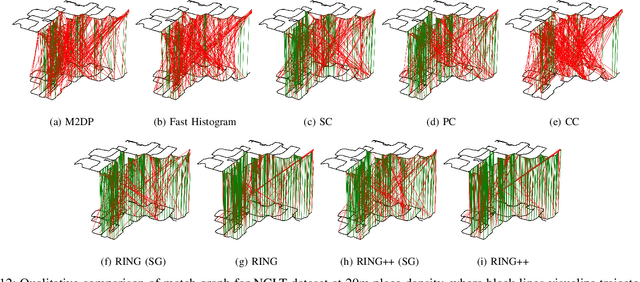

Abstract:Global localization plays a critical role in many robot applications. LiDAR-based global localization draws the community's focus with its robustness against illumination and seasonal changes. To further improve localization under large viewpoint differences, we propose RING++, which has a roto-translation invariant representation for place recognition and global convergence for both rotation and translation estimation. With this theoretical guarantee, RING++ is able to address large viewpoint differences using a lightweight map with sparse scans. In addition, we derive sufficient conditions on feature extractors for the representation to preserve roto-translation invariance, making RING++ a framework applicable to generic multi-channel features. To the best of our knowledge, this is the first learning-free framework to address all subtasks of global localization in the sparse scan map. Validations on real-world datasets show that our approach demonstrates better performance than state-of-the-art learning-free methods, and competitive performance with learning-based methods. Finally, we integrate RING++ into a multi-robot/session SLAM system, demonstrating its effectiveness in collaborative applications.

LiDAR-Inertial 3D SLAM with Plane Constraint for Multi-story Building

Feb 17, 2022

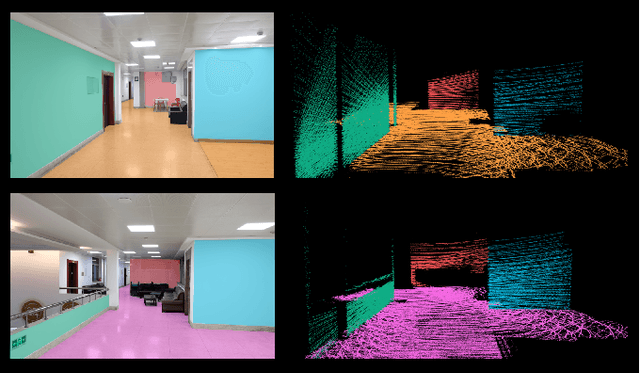

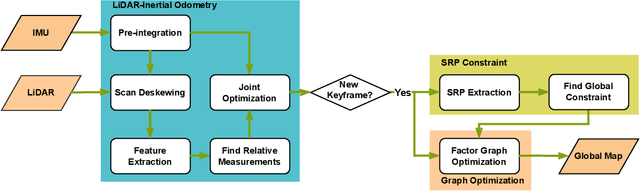

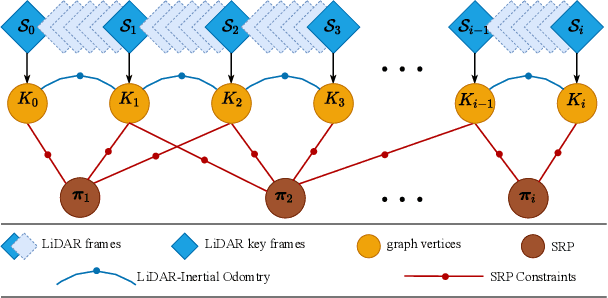

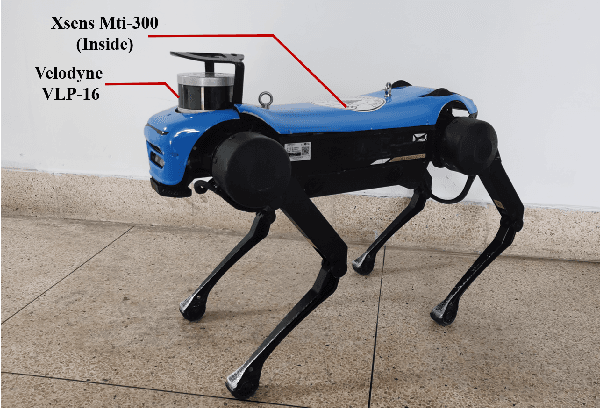

Abstract:Ubiquitous planes and structural consistency are the most apparent features of indoor multi-story buildings compared with outdoor environments. In this paper, we propose a tightly coupled LiDAR-Inertial 3D SLAM framework with plane features for multi-story buildings. The proposed framework is mainly composed of three parts: tightly coupled LiDAR-Inertial odometry, extraction of representative planes of the structure, and factor graph optimization. By building a local map and performing inertial measurement unit (IMU) pre-integration, we obtain LiDAR scan-to-local-map matching and IMU measurements, respectively. The joint cost function is then minimized to obtain the LiDAR-Inertial odometry information. Once a new keyframe is added to the graph, all the planes of this keyframe that can represent structural features are extracted to find the constraints between different poses and stories. A keyframe-based factor graph is constructed with the plane constraints and LiDAR-Inertial odometry to refine the keyframe poses. The experimental results show that our algorithm has outstanding performance in accuracy compared with the state-of-the-art algorithms.

Multi-expert learning of adaptive legged locomotion

Dec 10, 2020

Abstract:Achieving versatile robot locomotion requires motor skills which can adapt to previously unseen situations. We propose a Multi-Expert Learning Architecture (MELA) that learns to generate adaptive skills from a group of representative expert skills. During training, MELA is first initialised by a distinct set of pre-trained experts, each in a separate deep neural network (DNN). Then by learning the combination of these DNNs using a Gating Neural Network (GNN), MELA can acquire more specialised experts and transitional skills across various locomotion modes. During runtime, MELA constantly blends multiple DNNs and dynamically synthesises a new DNN to produce adaptive behaviours in response to changing situations. This approach leverages the advantages of trained expert skills and the fast online synthesis of adaptive policies to generate responsive motor skills during the changing tasks. Using a unified MELA framework, we demonstrated successful multi-skill locomotion on a real quadruped robot that performed coherent trotting, steering, and fall recovery autonomously, and showed the merit of multi-expert learning generating behaviours which can adapt to unseen scenarios.
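The idea of blending several expert networks into one synthesised network can be sketched as a gate-weighted combination of expert parameters. The code below is a minimal illustration under stated assumptions: the gate outputs are turned into weights with a softmax, each expert is represented as a flat list of parameters, and the helper names `softmax` and `blend_experts` are hypothetical. The abstract does not state the exact blending rule, so this should not be read as MELA's implementation.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def blend_experts(expert_params, gate_logits):
    """Synthesise one parameter vector from several experts.

    expert_params: list of flat parameter lists, one per expert, all of
                   equal length (assumed layout, for illustration).
    gate_logits:   one raw gating score per expert.
    """
    weights = softmax(gate_logits)
    n_params = len(expert_params[0])
    # Each blended parameter is the gate-weighted sum across experts.
    return [
        sum(w * params[i] for w, params in zip(weights, expert_params))
        for i in range(n_params)
    ]


if __name__ == "__main__":
    experts = [[0.0, 2.0], [2.0, 4.0]]
    # Equal gating scores give the plain average of the two experts.
    print(blend_experts(experts, [0.0, 0.0]))
```

In a setting like the one described, the gating scores would be recomputed at every control step, so the blended parameters change continuously as the situation changes.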

Search-based Kinodynamic Motion Planning for Omnidirectional Quadruped Robots

Nov 02, 2020

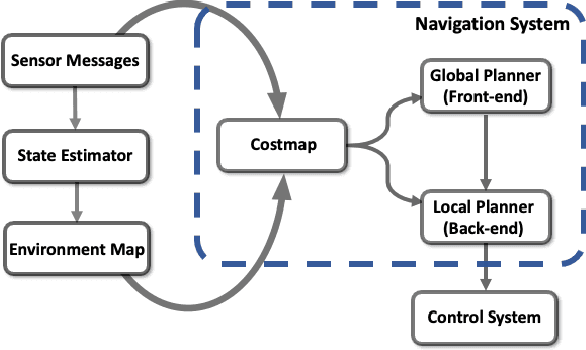

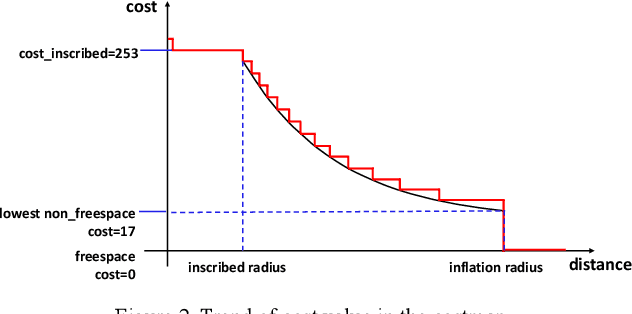

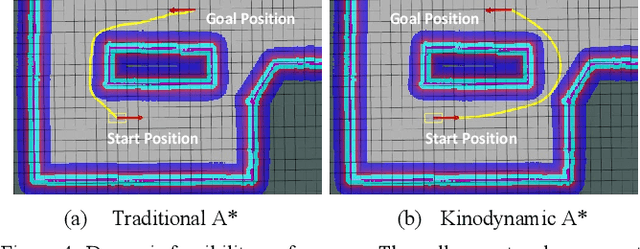

Abstract:Autonomous navigation plays an increasingly significant role in quadruped robot systems. However, existing work on path planning uses traditional search-based or sampling-based methods that do not consider the kinodynamic characteristics of quadruped robots, so the generated paths contain kinodynamically infeasible segments that are difficult to track. In this work, we introduce a complete navigation system that considers the omnidirectional abilities of quadruped robots. First, we use a kinodynamic path-finding method to obtain smooth, dynamically feasible, time-optimal initial paths, adding collision cost as a soft constraint to ensure safety. The trajectory is then refined by the timed elastic band (TEB) method based on the omnidirectional model of the quadruped robot. The superior performance of our work is demonstrated through simulated comparisons and experiments on our quadruped robot Jueying Mini.
