Chuhao Liu

SG-Reg: Generalizable and Efficient Scene Graph Registration

Apr 20, 2025

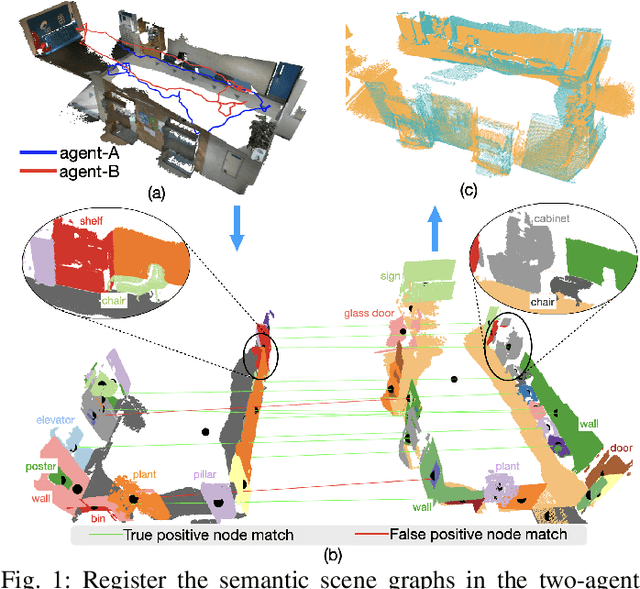

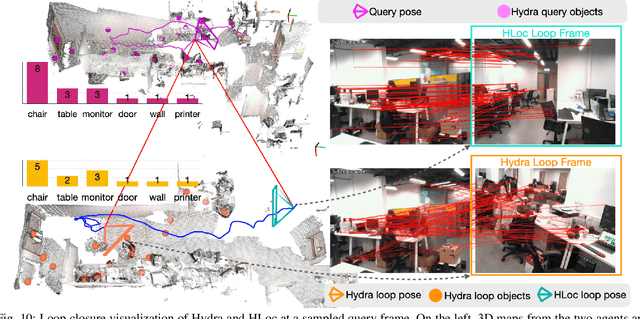

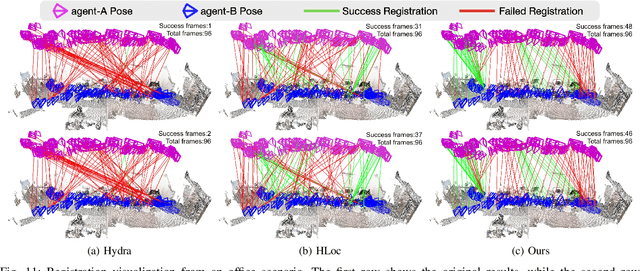

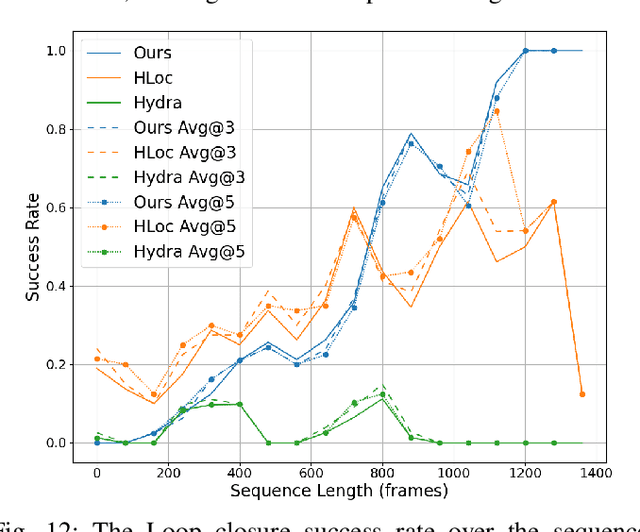

Abstract: This paper addresses the challenges of registering two rigid semantic scene graphs, an essential capability when an autonomous agent needs to register its map against a remote agent, or against a prior map. The hand-crafted descriptors in classical semantic-aided registration, or the ground-truth annotation reliance in learning-based scene graph registration, impede their application in practical real-world environments. To address these challenges, we design a scene graph network to encode multiple modalities of semantic nodes: open-set semantic feature, local topology with spatial awareness, and shape feature. These modalities are fused to create compact semantic node features. The matching layers then search for correspondences in a coarse-to-fine manner. In the back-end, we employ a robust pose estimator to estimate the transformation from the correspondences. We maintain a sparse and hierarchical scene representation. Our approach demands fewer GPU resources and less communication bandwidth in multi-agent tasks. Moreover, we design a new data generation approach using vision foundation models and a semantic mapping module to reconstruct semantic scene graphs. It differs significantly from previous works, which rely on ground-truth semantic annotations to generate data. We validate our method in a two-agent SLAM benchmark. It significantly outperforms the hand-crafted baseline in terms of registration success rate. Compared to visual loop closure networks, our method achieves a slightly higher registration recall while requiring only 52 KB of communication bandwidth for each query frame. Code is available at: http://github.com/HKUST-Aerial-Robotics/SG-Reg.

SLABIM: A SLAM-BIM Coupled Dataset in HKUST Main Building

Feb 24, 2025

Abstract: Existing indoor SLAM datasets primarily focus on robot sensing, often lacking building architectures. To address this gap, we design and construct the first dataset to couple SLAM and BIM, named SLABIM. This dataset provides BIM and SLAM-oriented sensor data, both modeling a university building at HKUST. The as-designed BIM is decomposed and converted for ease of use. We employ a multi-sensor suite for multi-session data collection and mapping to obtain the as-built model. All the related data are timestamped and organized, enabling users to deploy and test effectively. Furthermore, we deploy advanced methods and report the experimental results on three tasks: registration, localization, and semantic mapping, demonstrating the effectiveness and practicality of SLABIM. We make our dataset open-source at https://github.com/HKUST-Aerial-Robotics/SLABIM.

Speak the Same Language: Global LiDAR Registration on BIM Using Pose Hough Transform

May 07, 2024

Abstract: The construction and robotic sensing data originate from disparate sources and are associated with distinct frames of reference. The primary objective of this study is to align LiDAR point clouds with building information modeling (BIM) using a global point cloud registration approach, aimed at establishing a shared understanding between the two modalities, i.e., "speak the same language". To achieve this, we design a cross-modality registration method, spanning from the front end to the back end. At the front end, we extract descriptors by identifying walls and capturing the intersected corners. Subsequently, at the back end, we employ the Hough transform to estimate multiple pose candidates. The final pose is verified by wall-pixel correlation. To evaluate the effectiveness of our method, we conducted real-world multi-session experiments in a large-scale university building, involving two different types of LiDAR sensors. We also report our findings and plan to make our collected dataset open source.

FM-Fusion: Instance-aware Semantic Mapping Boosted by Vision-Language Foundation Models

Feb 07, 2024

Abstract: Semantic mapping based on supervised object detectors is sensitive to image distribution. In real-world environments, object detection and segmentation performance can drop substantially, preventing the use of semantic mapping in a wider domain. On the other hand, the development of vision-language foundation models demonstrates strong zero-shot transferability across data distributions. It provides an opportunity to construct generalizable instance-aware semantic maps. Hence, this work explores how to boost instance-aware semantic mapping using object detections generated by foundation models. We propose a probabilistic label fusion method to predict closed-set semantic classes from open-set label measurements. An instance refinement module merges the over-segmented instances caused by inconsistent segmentation. We integrate all the modules into a unified semantic mapping system. Reading a sequence of RGB-D input, our work incrementally reconstructs an instance-aware semantic map. We evaluate the zero-shot performance of our method on the ScanNet and SceneNN datasets. Our method achieves 40.3 mean average precision (mAP) on the ScanNet semantic instance segmentation task. It outperforms the traditional semantic mapping method significantly.

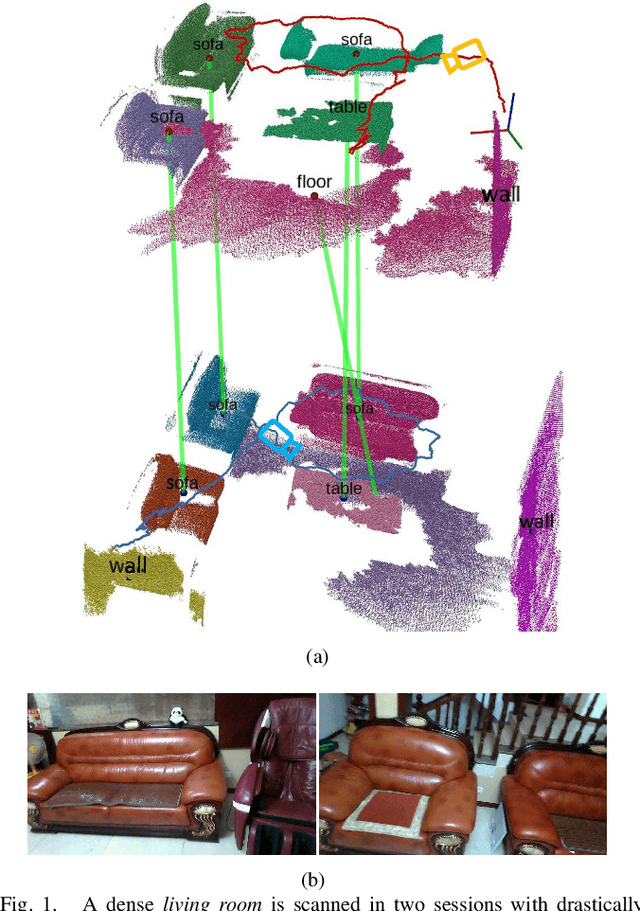

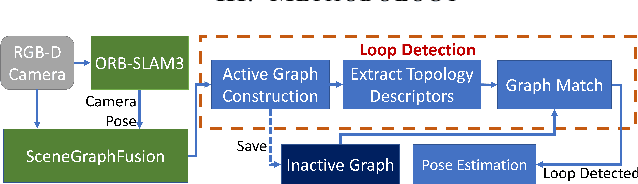

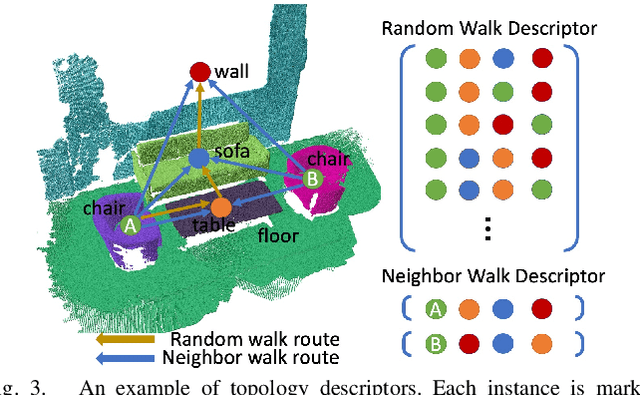

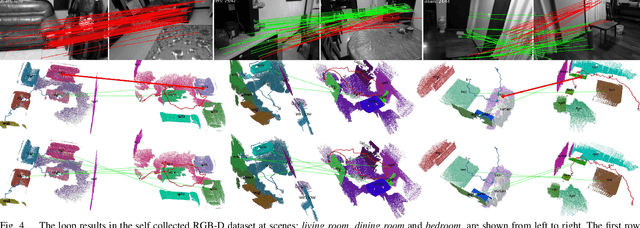

Towards View-invariant and Accurate Loop Detection Based on Scene Graph

May 24, 2023

Abstract: Loop detection plays a key role in visual Simultaneous Localization and Mapping (SLAM) by correcting the accumulated pose drift. In indoor scenarios, the richly distributed semantic landmarks are viewpoint-invariant and hold strong descriptive power in loop detection. The current semantic-aided loop detection embeds the topology between semantic instances to search for a loop. However, current semantic-aided loop detection methods face challenges in dealing with ambiguous semantic instances and drastic viewpoint differences, which are not fully addressed in the literature. This paper introduces a novel loop detection method based on an incrementally created scene graph, targeting visual SLAM in indoor scenes. It jointly considers the macro-view topology, micro-view topology, and occupancy of semantic instances to find correct correspondences. Experiments using handheld RGB-D sequences show that our method is able to accurately detect loops under drastically changed viewpoints. It maintains a high precision when observing objects with similar topology and appearance. Our method is also shown to be robust in changed indoor scenes.

Estimation and Adaption of Indoor Ego Airflow Disturbance with Application to Quadrotor Trajectory Planning

Sep 10, 2021

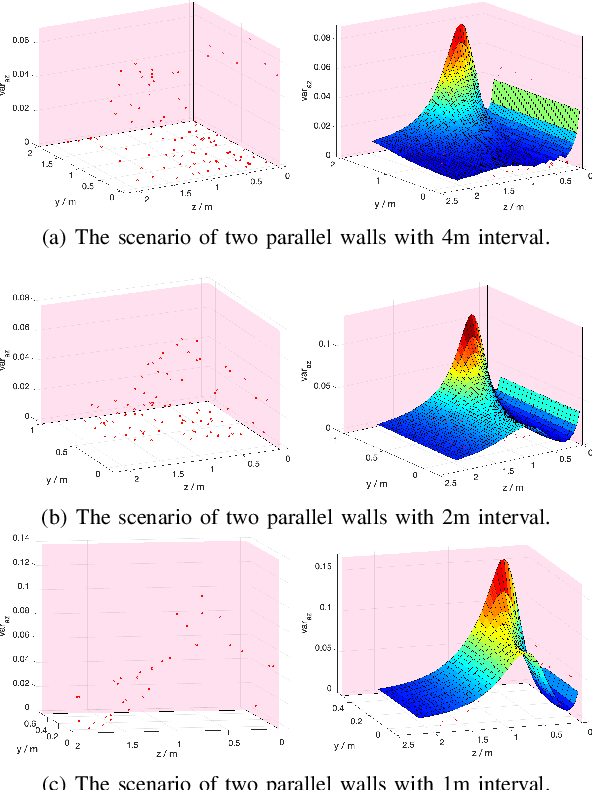

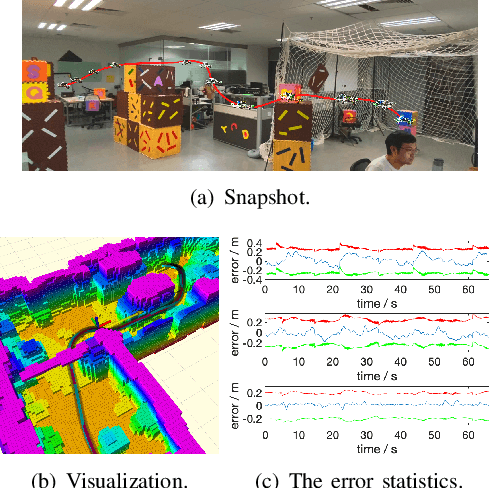

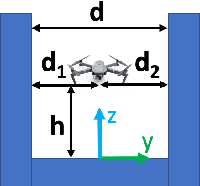

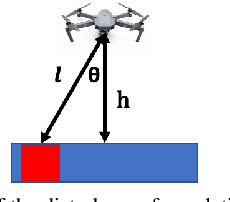

Abstract: It is widely accepted that during the autonomous navigation of quadrotors, one of the most widely adopted unmanned aerial vehicles (UAVs), safety always has the highest priority. However, it is observed that the ego airflow disturbance can be a significant adverse factor during flights, causing potential safety issues, especially in narrow and confined indoor environments. Therefore, we propose a novel method to estimate and adapt to the indoor ego airflow disturbance of quadrotors, and apply it to trajectory planning. First, hover experiments for different quadrotors are conducted against the proximity effects. Then, with the collected acceleration variance, the disturbances are modeled for the quadrotors according to the proposed formulation. The disturbance model is also verified under hover conditions in different reconstructed complex environments. Furthermore, an approximation of Hamilton-Jacobi reachability analysis is performed according to the estimated disturbances to facilitate safe trajectory planning, which consists of kinodynamic path search as well as B-spline trajectory optimization. The whole planning framework is validated on multiple quadrotor platforms in different indoor environments.

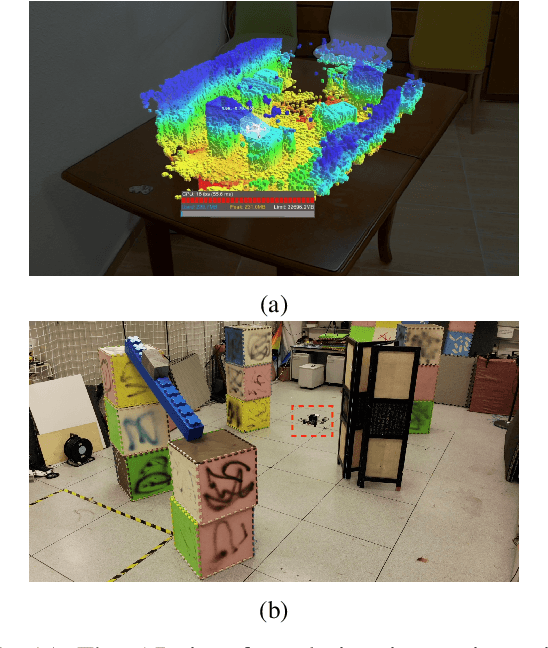

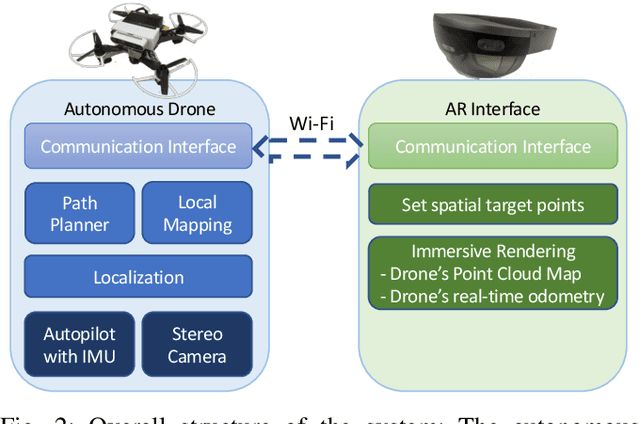

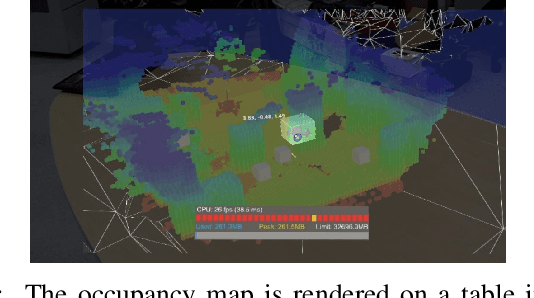

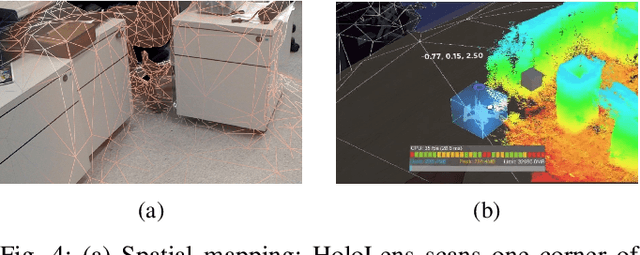

An Augmented Reality Interaction Interface for Autonomous Drone

Aug 05, 2020

Abstract: Human-drone interaction in autonomous navigation incorporates spatial interaction tasks, involving the 3D map reconstructed by the drone and the target position desired by the human. Augmented Reality (AR) devices can be powerful interactive tools for handling these spatial interactions. In this work, we build an AR interface that displays the reconstructed 3D map from the drone on physical surfaces in front of the operator. Spatial target positions can then be set on the 3D map by intuitive head gaze and hand gesture. The AR interface is deployed to interact with an autonomous drone to explore an unknown environment. A user study is further conducted to evaluate the overall interaction performance.

Robust and Efficient Quadrotor Trajectory Generation for Fast Autonomous Flight

Jul 03, 2019

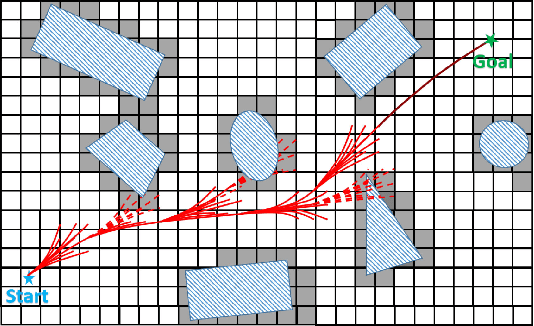

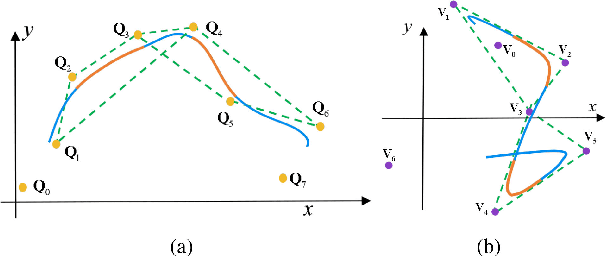

Abstract: In this paper, we propose a robust and efficient quadrotor motion planning system for fast flight in 3-D complex environments. We adopt a kinodynamic path searching method to find a safe, kinodynamically feasible, and minimum-time initial trajectory in the discretized control space. We improve the smoothness and clearance of the trajectory by a B-spline optimization, which incorporates gradient information from a Euclidean distance field (EDF) and dynamic constraints, efficiently utilizing the convex hull property of B-splines. Finally, by representing the final trajectory as a non-uniform B-spline, an iterative time adjustment method is adopted to guarantee dynamically feasible and non-conservative trajectories. We validate our proposed method in various complex simulated environments. The competence of the method is also validated in challenging real-world tasks. We release our code as an open-source package.
