Yichen Zhang

Central South University

ERNIE 5.0 Technical Report

Feb 04, 2026

Abstract: In this report, we introduce ERNIE 5.0, a natively autoregressive foundation model designed for unified multimodal understanding and generation across text, image, video, and audio. All modalities are trained from scratch under a unified next-group-of-tokens prediction objective, based on an ultra-sparse mixture-of-experts (MoE) architecture with modality-agnostic expert routing. To address practical challenges in large-scale deployment under diverse resource constraints, ERNIE 5.0 adopts a novel elastic training paradigm. Within a single pre-training run, the model learns a family of sub-models with varying depths, expert capacities, and routing sparsity, enabling flexible trade-offs among performance, model size, and inference latency in memory- or time-constrained scenarios. Moreover, we systematically address the challenges of scaling reinforcement learning to unified foundation models, thereby guaranteeing efficient and stable post-training under ultra-sparse MoE architectures and diverse multimodal settings. Extensive experiments demonstrate that ERNIE 5.0 achieves strong and balanced performance across multiple modalities. To the best of our knowledge, among publicly disclosed models, ERNIE 5.0 represents the first production-scale realization of a trillion-parameter unified autoregressive model that supports both multimodal understanding and generation. To facilitate further research, we present detailed visualizations of modality-agnostic expert routing in the unified model, alongside comprehensive empirical analysis of elastic training, aiming to offer profound insights to the community.
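As a rough illustration of what "routing sparsity" means in an MoE layer, the sketch below shows generic top-k expert routing. It is not taken from the ERNIE 5.0 report: the function name `top_k_route`, the example logits, and the choice of softmax renormalisation are all assumptions made for illustration. The router sees only a per-token score vector, so nothing in it depends on the token's modality, and lowering `k` activates fewer experts per token.

```python
import math

def top_k_route(logits, k):
    """Pick the k highest-scoring experts and renormalise their softmax weights.

    Hypothetical helper: a generic top-k gate, not ERNIE 5.0's actual router.
    """
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = {i: math.exp(logits[i] - m) for i in top}
    z = sum(exps.values())
    return {i: e / z for i, e in exps.items()}

# Made-up router scores for one token over 8 experts.
router_logits = [0.1, 2.0, -1.0, 1.5, 0.3, -0.2, 0.0, 0.9]

dense = top_k_route(router_logits, k=4)   # less sparse: 4 active experts
sparse = top_k_route(router_logits, k=2)  # sparser: 2 active experts
```

With these scores, the experts chosen at `k=2` are a subset of those chosen at `k=4`, which is one way a sparser routing configuration can share experts with a denser one.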

Large Language Model Agents Are Not Always Faithful Self-Evolvers

Jan 30, 2026

Abstract: Self-evolving large language model (LLM) agents continually improve by accumulating and reusing past experience, yet it remains unclear whether they faithfully rely on that experience to guide their behavior. We present the first systematic investigation of experience faithfulness, the causal dependence of an agent's decisions on the experience it is given, in self-evolving LLM agents. Using controlled causal interventions on both raw and condensed forms of experience, we comprehensively evaluate four representative frameworks across 10 LLM backbones and 9 environments. Our analysis uncovers a striking asymmetry: while agents consistently depend on raw experience, they often disregard or misinterpret condensed experience, even when it is the only experience provided. This gap persists across single- and multi-agent configurations and across backbone scales. We trace its underlying causes to three factors: the semantic limitations of condensed content, internal processing biases that suppress experience, and task regimes where pretrained priors already suffice. These findings challenge prevailing assumptions about self-evolving methods and underscore the need for more faithful and reliable approaches to experience integration.

Meta-Cognitive Reinforcement Learning with Self-Doubt and Recovery

Jan 28, 2026

Abstract: Robust reinforcement learning methods typically focus on suppressing unreliable experiences or corrupted rewards, but they lack the ability to reason about the reliability of their own learning process. As a result, such methods often either overreact to noise by becoming overly conservative or fail catastrophically when uncertainty accumulates. In this work, we propose a meta-cognitive reinforcement learning framework that enables an agent to assess, regulate, and recover its learning behavior based on internally estimated reliability signals. The proposed method introduces a meta-trust variable driven by Value Prediction Error Stability (VPES), which modulates learning dynamics via fail-safe regulation and gradual trust recovery. Experiments on continuous-control benchmarks with reward corruption demonstrate that recovery-enabled meta-cognitive control achieves higher average returns and significantly reduces late-stage training failures compared to strong robustness baselines.

SGD with Dependent Data: Optimal Estimation, Regret, and Inference

Jan 04, 2026

Abstract: This work investigates the performance of the final iterate produced by stochastic gradient descent (SGD) under temporally dependent data. We consider two complementary sources of dependence: (i) martingale-type dependence in both the covariate and noise processes, which accommodates non-stationary and non-mixing time series data, and (ii) dependence induced by sequential decision making. Our formulation runs in parallel with classical notions of (local) stationarity and strong mixing, while neither framework fully subsumes the other. Remarkably, SGD is shown to automatically accommodate both independent and dependent information under a broad class of stepsize schedules and exploration rate schemes. Non-asymptotically, we show that SGD simultaneously achieves statistically optimal estimation error and regret, extending and improving existing results. In particular, our tail bounds remain sharp even for a potentially infinite horizon $T=+\infty$. Asymptotically, the SGD iterates converge to a Gaussian distribution with only an $O_{\mathbb{P}}(1/\sqrt{t})$ remainder, demonstrating that the supposed estimation-regret trade-off claimed in prior work can in fact be avoided. We further propose a new "conic" approximation of the decision region that allows the covariates to have unbounded support. For online sparse regression, we develop a new SGD-based algorithm that uses only $d$ units of storage and requires $O(d)$ flops per iteration, achieving long-term statistical optimality. Intuitively, each incoming observation contributes to estimation accuracy, while aggregated summary statistics guide support recovery.
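To make the "$d$ units of storage and $O(d)$ flops per iteration" claim concrete, here is a minimal sketch of plain final-iterate SGD for streaming least squares. It is not the paper's algorithm: it uses i.i.d. synthetic data, has no sparsity or support-recovery step, and the names `sgd_final_iterate` and `synthetic_stream`, the stepsize constant `c`, and the $c/\sqrt{t}$ schedule are all assumptions chosen for illustration.

```python
import random

def sgd_final_iterate(stream, d, c=0.5):
    """Streaming least-squares SGD that keeps only the current iterate.

    Storage is d floats; each update costs O(d) flops.
    The stepsize c / sqrt(t) is an assumed schedule, not the paper's.
    """
    theta = [0.0] * d
    for t, (x, y) in enumerate(stream, start=1):
        eta = c / t ** 0.5
        residual = sum(th * xi for th, xi in zip(theta, x)) - y
        theta = [th - eta * residual * xi for th, xi in zip(theta, x)]
    return theta

def synthetic_stream(theta_star, n, noise=0.1, seed=0):
    """Yield (x, y) pairs from a noisy linear model (i.i.d., for illustration only)."""
    rng = random.Random(seed)
    for _ in range(n):
        x = [rng.uniform(-1.0, 1.0) for _ in theta_star]
        y = sum(a * b for a, b in zip(theta_star, x)) + rng.gauss(0.0, noise)
        yield x, y

theta_star = [1.0, -2.0, 0.5]
estimate = sgd_final_iterate(synthetic_stream(theta_star, 5000), d=len(theta_star))
```

Each observation is used once and then discarded, so memory does not grow with the number of observations.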

StatEval: A Comprehensive Benchmark for Large Language Models in Statistics

Oct 10, 2025

Abstract: Large language models (LLMs) have demonstrated remarkable advances in mathematical and logical reasoning, yet statistics, as a distinct and integrative discipline, remains underexplored in benchmarking efforts. To address this gap, we introduce StatEval, the first comprehensive benchmark dedicated to statistics, spanning both breadth and depth across difficulty levels. StatEval consists of 13,817 foundational problems covering undergraduate and graduate curricula, together with 2,374 research-level proof tasks extracted from leading journals. To construct the benchmark, we design a scalable multi-agent pipeline with human-in-the-loop validation that automates large-scale problem extraction, rewriting, and quality control, while ensuring academic rigor. We further propose a robust evaluation framework tailored to both computational and proof-based tasks, enabling fine-grained assessment of reasoning ability. Experimental results reveal that closed-source models such as GPT5-mini achieve below 57% on research-level problems, with open-source models performing significantly lower. These findings highlight the unique challenges of statistical reasoning and the limitations of current LLMs. We expect StatEval to serve as a rigorous benchmark for advancing statistical intelligence in large language models. All data and code are available on our web platform: https://stateval.github.io/.

ContentV: Efficient Training of Video Generation Models with Limited Compute

Jun 05, 2025

Abstract: Recent advances in video generation demand increasingly efficient training recipes to mitigate escalating computational costs. In this report, we present ContentV, an 8B-parameter text-to-video model that achieves state-of-the-art performance (85.14 on VBench) after training on 256 x 64GB Neural Processing Units (NPUs) for merely four weeks. ContentV generates diverse, high-quality videos across multiple resolutions and durations from text prompts, enabled by three key innovations: (1) a minimalist architecture that maximizes reuse of pre-trained image generation models for video generation; (2) a systematic multi-stage training strategy leveraging flow matching for enhanced efficiency; and (3) a cost-effective reinforcement learning with human feedback framework that improves generation quality without requiring additional human annotations. All the code and models are available at: https://contentv.github.io.

Towards Self-Improvement of Diffusion Models via Group Preference Optimization

May 16, 2025

Abstract: Aligning text-to-image (T2I) diffusion models with Direct Preference Optimization (DPO) has shown notable improvements in generation quality. However, applying DPO to T2I faces two challenges: the sensitivity of DPO to preference pairs and the labor-intensive process of collecting and annotating high-quality data. In this work, we demonstrate that preference pairs with marginal differences can degrade DPO performance. Since DPO relies exclusively on relative ranking while disregarding the absolute difference of pairs, it may misclassify losing samples as wins, or vice versa. We empirically show that extending DPO from pairwise to groupwise and incorporating reward standardization for reweighting leads to performance gains without explicit data selection. Furthermore, we propose Group Preference Optimization (GPO), an effective self-improvement method that enhances performance by leveraging the model's own capabilities without requiring external data. Extensive experiments demonstrate that GPO is effective across various diffusion models and tasks. Specifically, when combined with widely used computer vision models, such as YOLO and OCR, GPO improves the accurate counting and text rendering capabilities of Stable Diffusion 3.5 Medium by 20 percentage points. Notably, as a plug-and-play method, no extra overhead is introduced during inference.
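The abstract does not give the formula for reward standardization, so the following is only a sketch of one common form: z-scoring the rewards of a group of samples generated for the same prompt. The function name `standardize_rewards`, the `eps` guard, and the example reward values are assumptions for illustration, not the paper's definition.

```python
from statistics import mean, pstdev

def standardize_rewards(rewards, eps=1e-8):
    """Z-score the rewards within one group of samples.

    Assumed form of standardization; the paper's exact weighting may differ.
    eps guards against division by zero when all rewards are equal.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]

# Hypothetical reward scores for four images generated from one prompt.
group = [0.2, 0.9, 0.5, 0.4]
weights = standardize_rewards(group)

# A group whose samples all score the same carries no preference signal.
tied = standardize_rewards([0.5, 0.5, 0.5])
```

Under this form, samples near the group mean receive weights close to zero, while clearly better or worse samples receive weights of larger magnitude, and the weights depend on how far apart the rewards are instead of only on their ranking.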

Active Contact Engagement for Aerial Navigation in Unknown Environments with Glass

May 01, 2025

Abstract: Autonomous aerial robots are increasingly being deployed in real-world scenarios, where transparent glass obstacles present significant challenges to reliable navigation. Researchers have investigated the use of non-contact sensors and passive contact-resilient aerial vehicle designs to detect glass surfaces, but these are often limited in terms of robustness and efficiency. In this work, we propose a novel approach for robust autonomous aerial navigation in unknown environments with transparent glass obstacles, combining the strengths of both sensor-based and contact-based glass detection. The proposed system first incrementally detects potential glass surfaces and maintains information about them using visual sensor measurements. The vehicle then actively engages in touch actions with the visually detected potential glass surfaces using a pair of lightweight contact-sensing modules to confirm or invalidate their presence. Following this, the volumetric map is efficiently updated with the glass surface information and safe trajectories are replanned on the fly to circumvent the glass obstacles. We validate the proposed system through real-world experiments in various scenarios, demonstrating its effectiveness in enabling efficient and robust autonomous aerial navigation in complex real-world environments with glass obstacles.

CascadeV: An Implementation of Wurstchen Architecture for Video Generation

Jan 28, 2025

Abstract: Recently, with the tremendous success of diffusion models in the field of text-to-image (T2I) generation, increasing attention has been directed toward their potential in text-to-video (T2V) applications. However, the computational demands of diffusion models pose significant challenges, particularly in generating high-resolution videos with high frame rates. In this paper, we propose CascadeV, a cascaded latent diffusion model (LDM) capable of producing state-of-the-art 2K resolution videos. Experiments demonstrate that our cascaded model achieves a higher compression ratio, substantially reducing the computational challenges associated with high-quality video generation. We also implement a spatiotemporal alternating grid 3D attention mechanism, which effectively integrates spatial and temporal information, ensuring superior consistency across the generated video frames. Furthermore, our model can be cascaded with existing T2V models, theoretically enabling a 4$\times$ increase in resolution or frames per second without any fine-tuning. Our code is available at https://github.com/bytedance/CascadeV.

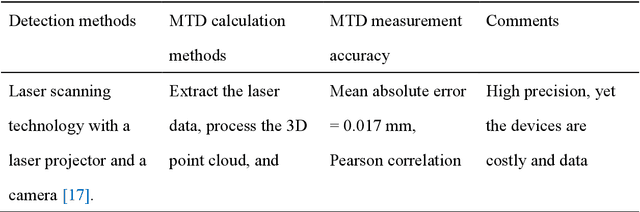

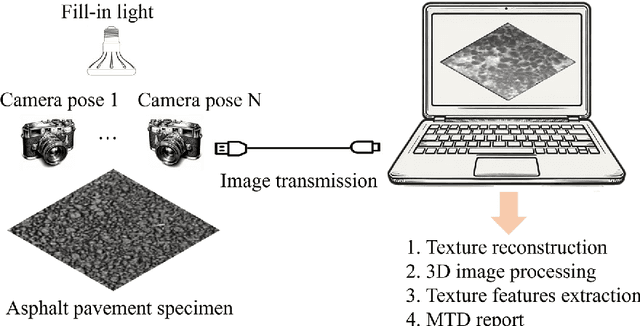

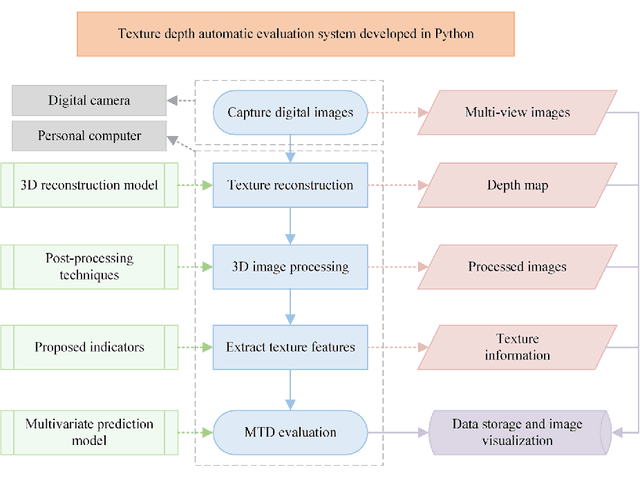

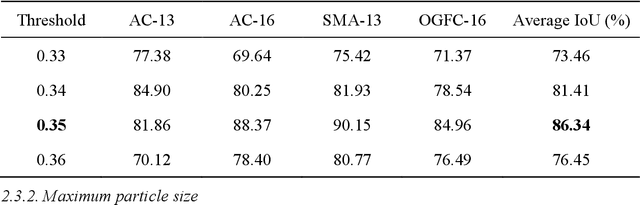

Journey into Automation: Image-Derived Pavement Texture Extraction and Evaluation

Jan 05, 2025

Abstract: Mean texture depth (MTD) is pivotal in assessing the skid resistance of asphalt pavements and ensuring road safety. This study focuses on developing an automated system for extracting texture features and evaluating MTD based on pavement images. The contributions of this work are threefold: firstly, it proposes an economical method to acquire three-dimensional (3D) pavement texture data; secondly, it enhances 3D image processing techniques and formulates features that represent various aspects of texture; thirdly, it establishes multivariate prediction models that link these features with MTD values. Validation results demonstrate that the Gradient Boosting Tree (GBT) model achieves remarkable prediction stability and accuracy (R² = 0.9858), and field tests indicate the superiority of the proposed method over other techniques, with relative errors below 10%. This method offers a comprehensive end-to-end solution for pavement quality evaluation, from image input to MTD prediction output.
