Haoxuan Li

ProcMEM: Learning Reusable Procedural Memory from Experience via Non-Parametric PPO for LLM Agents

Feb 02, 2026

Abstract: LLM-driven agents demonstrate strong performance in sequential decision-making but often rely on on-the-fly reasoning, re-deriving solutions even in recurring scenarios. This insufficient experience reuse leads to computational redundancy and execution instability. To bridge this gap, we propose ProcMEM, a framework that enables agents to autonomously learn procedural memory from interaction experiences without parameter updates. By formalizing a Skill-MDP, ProcMEM transforms passive episodic narratives into executable Skills defined by activation, execution, and termination conditions to ensure executability. To achieve reliable reusability without capability degradation, we introduce Non-Parametric PPO, which leverages semantic gradients for high-quality candidate generation and a PPO Gate for robust Skill verification. Through score-based maintenance, ProcMEM sustains compact, high-quality procedural memory. Experimental results across in-domain, cross-task, and cross-agent scenarios demonstrate that ProcMEM achieves superior reuse rates and significant performance gains with extreme memory compression. Visualized evolutionary trajectories and Skill distributions further reveal how ProcMEM transparently accumulates, refines, and reuses procedural knowledge to facilitate long-term autonomy.

Rethinking the Flow-Based Gradual Domain Adaption: A Semi-Dual Optimal Transport Perspective

Feb 01, 2026

Abstract: Gradual domain adaptation (GDA) aims to mitigate domain shift by progressively adapting models from the source domain to the target domain via intermediate domains. However, real intermediate domains are often unavailable or ineffective, necessitating the synthesis of intermediate samples. Flow-based models have recently been used for this purpose by interpolating between source and target distributions; however, their training typically relies on sample-based log-likelihood estimation, which can discard useful information and thus degrade GDA performance. The key to addressing this limitation is constructing the intermediate domains via samples directly. To this end, we propose an Entropy-regularized Semi-dual Unbalanced Optimal Transport (E-SUOT) framework to construct intermediate domains. Specifically, we reformulate flow-based GDA as a Lagrangian dual problem and derive an equivalent semi-dual objective that circumvents the need for likelihood estimation. However, the dual problem leads to an unstable min-max training procedure. To alleviate this issue, we further introduce entropy regularization to convert it into a more stable alternative optimization procedure. Based on this, we propose a novel GDA training framework and provide theoretical analysis in terms of stability and generalization. Finally, extensive experiments are conducted to demonstrate the efficacy of the E-SUOT framework.

Analyzing and Improving Diffusion Models for Time-Series Data Imputation: A Proximal Recursion Perspective

Feb 01, 2026

Abstract: Diffusion models (DMs) have shown promise for Time-Series Data Imputation (TSDI); however, their performance remains inconsistent in complex scenarios. We attribute this to two primary obstacles: (1) non-stationary temporal dynamics, which can bias the inference trajectory and lead to outlier-sensitive imputations; and (2) objective inconsistency, since imputation favors accurate pointwise recovery whereas DMs are inherently trained to generate diverse samples. To better understand these issues, we analyze the DM-based TSDI process through a proximal-operator perspective and uncover that an implicit Wasserstein distance regularization inherent in the process hinders the model's ability to counteract non-stationarity and dissipative regularizer, thereby amplifying diversity at the expense of fidelity. Building on this insight, we propose a novel framework called SPIRIT (Semi-Proximal Transport Regularized time-series Imputation). Specifically, we introduce entropy-induced Bregman divergence to relax the mass-preserving constraint in the Wasserstein distance, formulate the semi-proximal transport (SPT) discrepancy, and theoretically prove the robustness of SPT against non-stationarity. Subsequently, we remove the dissipative structure and derive the complete SPIRIT workflow, with SPT serving as the proximal operator. Extensive experiments demonstrate the effectiveness of the proposed SPIRIT approach.

Deep Time-series Forecasting Needs Kernelized Moment Balancing

Jan 31, 2026

Abstract: Deep time-series forecasting can be formulated as a distribution balancing problem aimed at aligning the distribution of the forecasts and ground truths. According to Imbens' criterion, true distribution balance requires matching the first moments with respect to any balancing function. We demonstrate that existing objectives fail to meet this criterion, as they enforce moment matching only for one or two predefined balancing functions, thus failing to achieve full distribution balance. To address this limitation, we propose direct forecasting with kernelized moment balancing (KMB-DF). Unlike existing objectives, KMB-DF adaptively selects the most informative balancing functions from a reproducing kernel Hilbert space (RKHS) to enforce sufficient distribution balancing. We derive a tractable and differentiable objective that enables efficient estimation from empirical samples and seamless integration into gradient-based training pipelines. Extensive experiments across multiple models and datasets show that KMB-DF consistently improves forecasting accuracy and achieves state-of-the-art performance. Code is available at https://anonymous.4open.science/r/KMB-DF-403C.
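The gap between matching one predefined moment and balancing over a whole RKHS can be illustrated with a small sketch. It is not the KMB-DF objective, whose exact form the abstract does not give; it swaps in the standard squared maximum mean discrepancy (MMD) with an RBF kernel, which measures the largest moment gap over the unit ball of the RKHS. The function names `rbf` and `mmd2`, the bandwidth, and the toy samples are all assumptions made for illustration.

```python
import math
from typing import Sequence


def rbf(x: float, y: float, bandwidth: float = 1.0) -> float:
    """Gaussian (RBF) kernel between two scalars."""
    return math.exp(-((x - y) ** 2) / (2.0 * bandwidth ** 2))


def mmd2(forecasts: Sequence[float], targets: Sequence[float],
         bandwidth: float = 1.0) -> float:
    """Biased (V-statistic) estimate of the squared MMD.

    Illustrative stand-in for a kernelized balancing loss,
    not the objective derived in the paper.
    """
    def mean_kernel(a: Sequence[float], b: Sequence[float]) -> float:
        total = sum(rbf(x, y, bandwidth) for x in a for y in b)
        return total / (len(a) * len(b))

    return (mean_kernel(forecasts, forecasts)
            - 2.0 * mean_kernel(forecasts, targets)
            + mean_kernel(targets, targets))


# Hypothetical toy samples: both have mean 0, so a loss that only
# matches the mean sees no imbalance, yet the spreads differ.
forecasts = [-0.1, 0.1]
targets = [-2.0, 2.0]

mean_gap = abs(sum(forecasts) / len(forecasts) - sum(targets) / len(targets))
kernel_gap = mmd2(forecasts, targets)
```

Here `mean_gap` is zero while `kernel_gap` is clearly positive, which is the kind of mismatch a single balancing function cannot detect.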

ThinkTank-ME: A Multi-Expert Framework for Middle East Event Forecasting

Jan 22, 2026

Abstract: Event forecasting is inherently influenced by multifaceted considerations, including international relations, regional historical dynamics, and cultural contexts. However, existing LLM-based approaches employ single-model architectures that generate predictions along a singular explicit trajectory, constraining their ability to capture diverse geopolitical nuances across complex regional contexts. To address this limitation, we introduce ThinkTank-ME, a novel Think Tank framework for Middle East event forecasting that emulates collaborative expert analysis in real-world strategic decision-making. To facilitate expert specialization and rigorous evaluation, we construct POLECAT-FOR-ME, a Middle East-focused event forecasting benchmark. Experimental results demonstrate the superiority of multi-expert collaboration in handling complex temporal geopolitical forecasting tasks. The code is available at https://github.com/LuminosityX/ThinkTank-ME.

RAGExplorer: A Visual Analytics System for the Comparative Diagnosis of RAG Systems

Jan 19, 2026

Abstract: The advent of Retrieval-Augmented Generation (RAG) has significantly enhanced the ability of Large Language Models (LLMs) to produce factually accurate and up-to-date responses. However, the performance of a RAG system is not determined by a single component but emerges from a complex interplay of modular choices, such as embedding models and retrieval algorithms. This creates a vast and often opaque configuration space, making it challenging for developers to understand performance trade-offs and identify optimal designs. To address this challenge, we present RAGExplorer, a visual analytics system for the systematic comparison and diagnosis of RAG configurations. RAGExplorer guides users through a seamless macro-to-micro analytical workflow. Initially, it empowers developers to survey the performance landscape across numerous configurations, allowing for a high-level understanding of which design choices are most effective. For a deeper analysis, the system enables users to drill down into individual failure cases, investigate how differences in retrieved information contribute to errors, and interactively test hypotheses by manipulating the provided context to observe the resulting impact on the generated answer. We demonstrate the effectiveness of RAGExplorer through detailed case studies and user studies, validating its ability to empower developers in navigating the complex RAG design space. Our code and user guide are publicly available at https://github.com/Thymezzz/RAGExplorer.

HiVid-Narrator: Hierarchical Video Narrative Generation with Scene-Primed ASR-anchored Compression

Jan 12, 2026

Abstract: Generating structured narrations for real-world e-commerce videos requires models to perceive fine-grained visual details and organize them into coherent, high-level stories--capabilities that existing approaches struggle to unify. We introduce the E-commerce Hierarchical Video Captioning (E-HVC) dataset with dual-granularity, temporally grounded annotations: a Temporal Chain-of-Thought that anchors event-level observations and a Chapter Summary that composes them into concise, story-centric summaries. Rather than directly prompting chapters, we adopt a staged construction that first gathers reliable linguistic and visual evidence via curated ASR and frame-level descriptions, then refines coarse annotations into precise chapter boundaries and titles conditioned on the Temporal Chain-of-Thought, yielding fact-grounded, time-aligned narratives. We also observe that e-commerce videos are fast-paced and information-dense, with visual tokens dominating the input sequence. To enable efficient training while reducing input tokens, we propose the Scene-Primed ASR-anchored Compressor (SPA-Compressor), which compresses multimodal tokens into hierarchical scene and event representations guided by ASR semantic cues. Built upon these designs, our HiVid-Narrator framework achieves superior narrative quality with fewer input tokens compared to existing methods.

IGenBench: Benchmarking the Reliability of Text-to-Infographic Generation

Jan 08, 2026

Abstract: Infographics are composite visual artifacts that combine data visualizations with textual and illustrative elements to communicate information. While recent text-to-image (T2I) models can generate aesthetically appealing images, their reliability in generating infographics remains unclear. Generated infographics may appear correct at first glance but contain easily overlooked issues, such as distorted data encoding or incorrect textual content. We present IGenBench, the first benchmark for evaluating the reliability of text-to-infographic generation, comprising 600 curated test cases spanning 30 infographic types. We design an automated evaluation framework that decomposes reliability verification into atomic yes/no questions based on a taxonomy of 10 question types. We employ multimodal large language models (MLLMs) to verify each question, yielding question-level accuracy (Q-ACC) and infographic-level accuracy (I-ACC). We comprehensively evaluate 10 state-of-the-art T2I models on IGenBench. Our systematic analysis reveals key insights for future model development: (i) a three-tier performance hierarchy with the top model achieving Q-ACC of 0.90 but I-ACC of only 0.49; (ii) data-related dimensions emerging as universal bottlenecks (e.g., Data Completeness: 0.21); and (iii) the challenge of achieving end-to-end correctness across all models. We release IGenBench at https://igen-bench.vercel.app/.
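The reported gap between Q-ACC and I-ACC is easier to see with a toy calculation. The sketch below assumes that Q-ACC is the fraction of all yes/no checks that pass, pooled over infographics, and that I-ACC is the fraction of infographics for which every check passes; the abstract does not spell out these definitions, so both are assumptions, as are the function names and the made-up verdicts.

```python
from typing import Dict, List


def q_acc(results: Dict[str, List[bool]]) -> float:
    """Fraction of all yes/no checks that passed (assumed definition)."""
    answers = [ok for checks in results.values() for ok in checks]
    return sum(answers) / len(answers)


def i_acc(results: Dict[str, List[bool]]) -> float:
    """Fraction of infographics whose checks all passed (assumed definition)."""
    fully_correct = sum(all(checks) for checks in results.values())
    return fully_correct / len(results)


# Hypothetical verifier verdicts for three generated infographics,
# four atomic questions each. These are illustrative, not benchmark data.
results = {
    "bar_chart_01": [True, True, True, True],
    "timeline_02": [True, True, False, True],
    "pie_chart_03": [True, True, True, False],
}

question_level = q_acc(results)      # 10 of 12 checks pass
infographic_level = i_acc(results)   # 1 of 3 infographics is fully correct
```

Under these assumed definitions a single failed check disqualifies a whole infographic, so I-ACC can sit far below Q-ACC even when most individual checks pass.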

Breaking the Gradient Barrier: Unveiling Large Language Models for Strategic Classification

Nov 10, 2025

Abstract: Strategic classification (SC) explores how individuals or entities modify their features strategically to achieve favorable classification outcomes. However, existing SC methods, which are largely based on linear models or shallow neural networks, face significant limitations in scalability and capacity when applied to real-world datasets of rapidly growing scale, especially in financial services and the internet sector. In this paper, we investigate how to leverage large language models to design a more scalable and efficient SC framework, especially as more individuals engage with decision-making processes. Specifically, we introduce GLIM, a gradient-free SC method grounded in in-context learning. During the feed-forward process of self-attention, GLIM implicitly simulates the typical bi-level optimization process of SC, including both the feature manipulation and decision rule optimization. Without fine-tuning the LLMs, our proposed GLIM enjoys the advantage of cost-effective adaptation in dynamic strategic environments. Theoretically, we prove GLIM can support pre-trained LLMs to adapt to a broad range of strategic manipulations. We validate our approach through experiments with a collection of pre-trained LLMs on real-world and synthetic datasets in financial and internet domains, demonstrating that GLIM exhibits both robustness and efficiency and offers an effective solution for large-scale SC tasks.

Federated Multi-Objective Learning with Controlled Pareto Frontiers

Aug 07, 2025

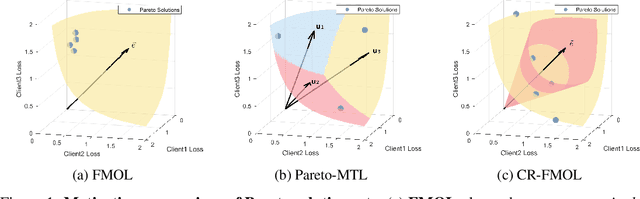

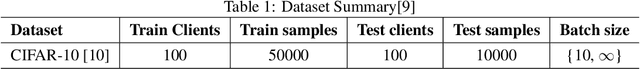

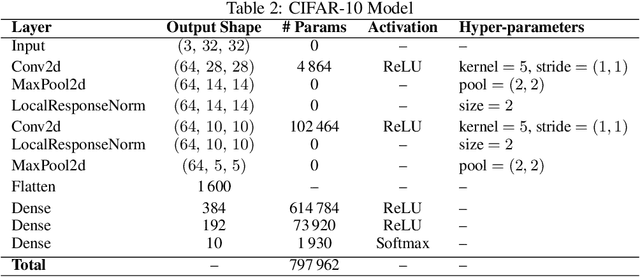

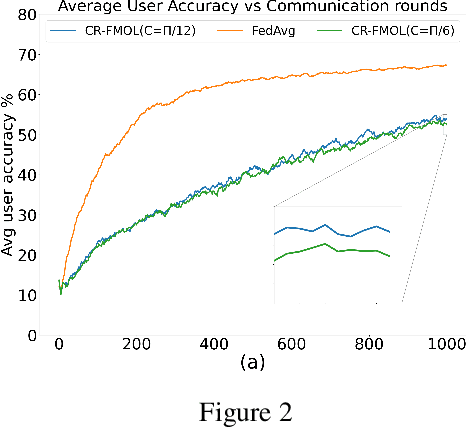

Abstract: Federated learning (FL) is a widely adopted paradigm for privacy-preserving model training, but FedAvg optimises for the majority while under-serving minority clients. Existing methods such as federated multi-objective learning (FMOL) attempt to import multi-objective optimisation (MOO) into FL. However, FMOL merely delivers task-wise Pareto-stationary points, leaving client fairness to chance. In this paper, we introduce Conically-Regularised FMOL (CR-FMOL), the first federated MOO framework that enforces client-wise Pareto optimality through a novel preference-cone constraint. After local federated multi-gradient descent averaging (FMGDA) / federated stochastic multi-gradient descent averaging (FSMGDA) steps, each client transmits its aggregated task-loss vector as an implicit preference; the server then solves a cone-constrained Pareto-MTL sub-problem centred at the uniform vector, producing a descent direction that is Pareto-stationary for every client within its cone. Experiments on non-IID benchmarks show that CR-FMOL enhances client fairness, and although the early-stage performance is slightly inferior to FedAvg, it is expected to achieve comparable accuracy given sufficient training rounds.
