Zhuohan Wang

DiffLOB: Diffusion Models for Counterfactual Generation in Limit Order Books

Feb 03, 2026

Abstract: Modern generative models for limit order books (LOBs) can reproduce realistic market dynamics, but remain fundamentally passive: they either model what typically happens without accounting for hypothetical future market conditions, or they require interaction with another agent to explore alternative outcomes. This limits their usefulness for stress testing, scenario analysis, and decision-making. We propose DiffLOB, a regime-conditioned diffusion model for controllable and counterfactual generation of LOB trajectories. DiffLOB explicitly conditions the generative process on future market regimes (trend, volatility, liquidity, and order-flow imbalance), which enables the model to answer counterfactual queries of the form: "If the future market regime were X instead of Y, how would the limit order book evolve?" Our systematic evaluation framework for counterfactual LOB generation consists of three criteria: (1) controllable realism, measuring how well generated trajectories reproduce marginal distributions, temporal dependence structure, and regime variables; (2) counterfactual validity, testing whether interventions on future regimes induce consistent changes in the generated LOB dynamics; and (3) counterfactual usefulness, assessing whether synthetic counterfactual trajectories improve downstream prediction of future market regimes.

Alpha Discovery via Grammar-Guided Learning and Search

Jan 29, 2026

Abstract: Automatically discovering formulaic alpha factors is a central problem in quantitative finance. Existing methods often ignore syntactic and semantic constraints, relying on exhaustive search over unstructured and unbounded spaces. We present AlphaCFG, a grammar-based framework for defining and discovering alpha factors that are syntactically valid, financially interpretable, and computationally efficient. AlphaCFG uses an alpha-oriented context-free grammar to define a tree-structured, size-controlled search space, and formulates alpha discovery as a tree-structured linguistic Markov decision process, which is then solved using a grammar-aware Monte Carlo Tree Search guided by syntax-sensitive value and policy networks. Experiments on Chinese and U.S. stock market datasets show that AlphaCFG outperforms state-of-the-art baselines in both search efficiency and trading profitability. Beyond trading strategies, AlphaCFG serves as a general framework for symbolic factor discovery and refinement across quantitative finance, including asset pricing and portfolio construction.
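
To make the idea of a grammar-defined, bounded search space concrete, here is a minimal sketch of sampling formulaic expressions from a toy context-free grammar. The grammar, the operator and feature names, and the depth bound (used as a simple stand-in for size control) are all illustrative assumptions; none of them is taken from AlphaCFG, and uniform random sampling stands in for the paper's guided tree search.

```python
import random

# Hypothetical toy grammar: every symbol and operator name is illustrative.
GRAMMAR = {
    "Expr": [
        ["Feature"],
        ["UnaryOp", "(", "Expr", ")"],
        ["BinaryOp", "(", "Expr", ",", "Expr", ")"],
    ],
    "Feature": [["open"], ["close"], ["high"], ["low"], ["volume"]],
    "UnaryOp": [["abs"], ["log"], ["rank"]],
    "BinaryOp": [["add"], ["sub"], ["mul"], ["div"]],
}


def sample(symbol, depth, max_depth, rng):
    """Expand `symbol` into a list of terminal tokens, bounding nesting depth."""
    if symbol not in GRAMMAR:
        return [symbol]  # terminal token, emitted as-is
    productions = GRAMMAR[symbol]
    if symbol == "Expr" and depth >= max_depth:
        productions = [["Feature"]]  # at the depth limit, force a leaf
    tokens = []
    for child in rng.choice(productions):
        child_depth = depth + 1 if child == "Expr" else depth
        tokens.extend(sample(child, child_depth, max_depth, rng))
    return tokens


def sample_alpha(max_depth=3, seed=None):
    """Return one syntactically valid expression string from the toy grammar."""
    rng = random.Random(seed)
    return "".join(sample("Expr", 0, max_depth, rng))


if __name__ == "__main__":
    for seed in range(3):
        print(sample_alpha(max_depth=3, seed=seed))
```

Because every expression is derived from grammar productions, it is well-formed by construction, and the depth limit keeps the space finite; a search procedure would choose productions deliberately instead of at random.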

ORGEval: Graph-Theoretic Evaluation of LLMs in Optimization Modeling

Oct 31, 2025

Abstract: Formulating optimization problems for industrial applications demands significant manual effort and domain expertise. While Large Language Models (LLMs) show promise in automating this process, evaluating their performance remains difficult due to the absence of robust metrics. Existing solver-based approaches often face inconsistency, infeasibility issues, and high computational costs. To address these issues, we propose ORGEval, a graph-theoretic evaluation framework for assessing LLMs' capabilities in formulating linear and mixed-integer linear programs. ORGEval represents optimization models as graphs, reducing equivalence detection to graph isomorphism testing. We identify and prove a sufficient condition, namely that the tested graphs are symmetric decomposable (SD), under which the Weisfeiler-Lehman (WL) test is guaranteed to correctly detect isomorphism. Building on this, ORGEval integrates a tailored variant of the WL test with an SD detection algorithm to evaluate model equivalence. By focusing on structural equivalence rather than instance-level configurations, ORGEval is robust to numerical variations. Experimental results show that our method can successfully detect model equivalence and produce 100% consistent results across random parameter configurations, while significantly outperforming solver-based methods in runtime, especially on difficult problems. Leveraging ORGEval, we construct the Bench4Opt dataset and benchmark state-of-the-art LLMs on optimization modeling. Our results reveal that although optimization modeling remains challenging for all LLMs, DeepSeek-V3 and Claude-Opus-4 achieve the highest accuracies under direct prompting, outperforming even leading reasoning models.
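
As a rough illustration of the WL test mentioned above, the sketch below runs plain 1-WL color refinement on two small graphs and compares the resulting color histograms. It is the textbook procedure, not ORGEval's tailored variant, and it omits SD detection. The bipartite variable/constraint encoding of the toy models is an assumption made for this example; the abstract does not specify the graph construction.

```python
from collections import Counter


def wl_histogram(adj, labels, rounds, palette):
    """Run 1-WL color refinement and return the final color histogram.

    adj:     dict mapping each node to a list of its neighbors
    labels:  dict mapping each node to an initial (string) label
    palette: dict shared across graphs so equal signatures get equal color ids
    """
    colors = {v: palette.setdefault(("init", labels[v]), len(palette)) for v in adj}
    for _ in range(rounds):
        # New color = own color plus the sorted multiset of neighbor colors.
        colors = {
            v: palette.setdefault(
                (colors[v], tuple(sorted(colors[u] for u in adj[v]))),
                len(palette),
            )
            for v in adj
        }
    return Counter(colors.values())


def wl_equivalent(g1, g2, rounds=3):
    """True if 1-WL cannot tell the graphs apart (necessary for isomorphism)."""
    palette = {}
    return wl_histogram(*g1, rounds, palette) == wl_histogram(*g2, rounds, palette)


# Hypothetical encoding: variable nodes and constraint nodes, with an edge
# wherever a variable appears in a constraint.
model_a = (
    {"x": ["c1"], "y": ["c1", "c2"], "z": ["c2"], "c1": ["x", "y"], "c2": ["y", "z"]},
    {"x": "var", "y": "var", "z": "var", "c1": "con", "c2": "con"},
)
# Same structure with renamed variables and constraints.
model_b = (
    {"a": ["r1", "r2"], "b": ["r1"], "c": ["r2"], "r1": ["a", "b"], "r2": ["a", "c"]},
    {"a": "var", "b": "var", "c": "var", "r1": "con", "r2": "con"},
)
# Different structure: one constraint touches all three variables.
model_c = (
    {"x": ["c1"], "y": ["c1", "c2"], "z": ["c1"], "c1": ["x", "y", "z"], "c2": ["y"]},
    {"x": "var", "y": "var", "z": "var", "c1": "con", "c2": "con"},
)

if __name__ == "__main__":
    print(wl_equivalent(model_a, model_b))
    print(wl_equivalent(model_a, model_c))
```

In general, matching WL histograms is only a necessary condition for isomorphism; the abstract's contribution is a condition (symmetric decomposability) under which the test becomes conclusive.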

DiffVolume: Diffusion Models for Volume Generation in Limit Order Books

Aug 12, 2025

Abstract: Modeling limit order book (LOB) dynamics is a fundamental problem in market microstructure research. In particular, generating high-dimensional volume snapshots with strong temporal and liquidity-dependent patterns remains a challenging task, despite recent work exploring the application of Generative Adversarial Networks to LOBs. In this work, we propose DiffVolume, a conditional diffusion model for the generation of future LOB volume snapshots. We evaluate our model along three axes: (1) realism, where we show that DiffVolume, conditioned on past volume history and time of day, better reproduces statistical properties such as marginal distribution, spatial correlation, and autocorrelation decay; (2) counterfactual generation, allowing for controllable generation under hypothetical liquidity scenarios by additionally conditioning on a target future liquidity profile; and (3) downstream prediction, where we show that the synthetic counterfactual data from our model improves the performance of future liquidity forecasting models. Together, these results suggest that DiffVolume provides a powerful and flexible framework for realistic and controllable LOB volume generation.

Causal Sufficiency and Necessity Improves Chain-of-Thought Reasoning

Jun 11, 2025

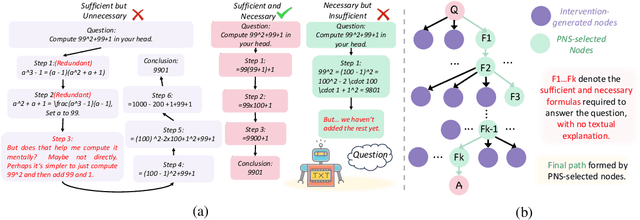

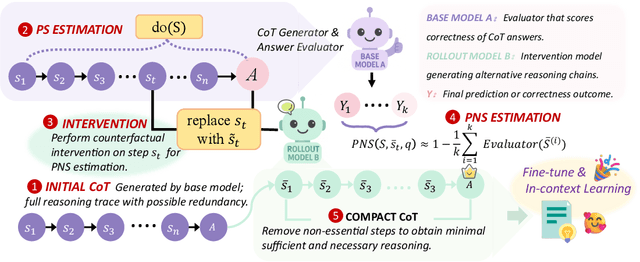

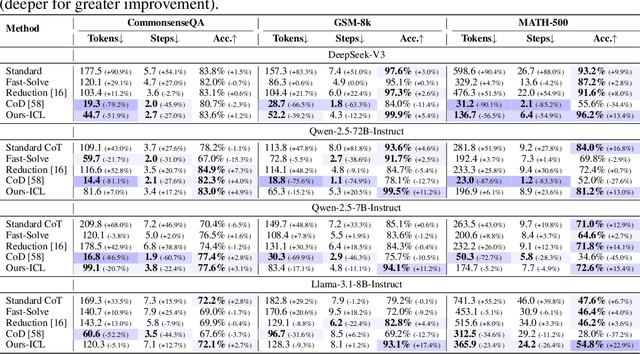

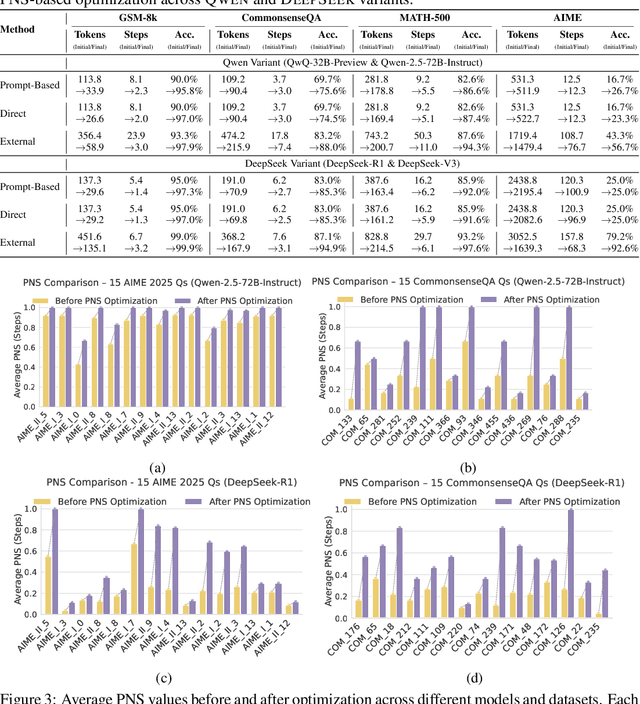

Abstract: Chain-of-Thought (CoT) prompting plays an indispensable role in endowing large language models (LLMs) with complex reasoning capabilities. However, CoT currently faces two fundamental challenges: (1) sufficiency, that is, ensuring that the generated intermediate inference steps comprehensively cover and substantiate the final conclusion; and (2) necessity, that is, identifying the inference steps that are truly indispensable for the soundness of the resulting answer. We propose a causal framework that characterizes CoT reasoning through the dual lenses of sufficiency and necessity. Incorporating causal Probability of Sufficiency and Necessity allows us not only to determine which steps are logically sufficient or necessary to the prediction outcome, but also to quantify their actual influence on the final reasoning outcome under different intervention scenarios, thereby enabling the automated addition of missing steps and the pruning of redundant ones. Extensive experimental results on various mathematical and commonsense reasoning benchmarks confirm substantial improvements in reasoning efficiency and reduced token usage without sacrificing accuracy. Our work provides a promising direction for improving LLM reasoning performance and cost-effectiveness.
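
For reference, the standard causal definitions of these probabilities (following Pearl) for a binary cause $X \in \{x, x'\}$ and binary outcome $Y \in \{y, y'\}$ are given below, where $Y_x$ denotes the value $Y$ would take under the intervention $X = x$. Reading $X$ as "a given reasoning step is present" and $Y$ as "the final answer is correct" is an assumption made here for illustration; the abstract does not state the paper's exact formulation.

```latex
\begin{align}
  \mathrm{PN}  &= P\left(Y_{x'} = y' \mid X = x,\; Y = y\right)
     && \text{(probability of necessity)} \\
  \mathrm{PS}  &= P\left(Y_{x} = y \mid X = x',\; Y = y'\right)
     && \text{(probability of sufficiency)} \\
  \mathrm{PNS} &= P\left(Y_{x} = y,\; Y_{x'} = y'\right)
     && \text{(probability of necessity and sufficiency)}
\end{align}
```

Under that reading, a step with high PN is one whose removal would likely break a correct answer, and a step with high PS is one whose addition would likely repair an incorrect one.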

A Financial Time Series Denoiser Based on Diffusion Model

Sep 02, 2024

Abstract: Financial time series often exhibit a low signal-to-noise ratio, posing significant challenges for accurate data interpretation and prediction, and ultimately decision making. Generative models have gained attention as powerful tools for simulating and predicting intricate data patterns, with the diffusion model emerging as a particularly effective method. This paper introduces a novel approach utilizing the diffusion model as a denoiser for financial time series in order to improve data predictability and trading performance. By leveraging the forward and reverse processes of the conditional diffusion model to add and remove noise progressively, we reconstruct original data from noisy inputs. Our extensive experiments demonstrate that diffusion model-based denoised time series significantly enhance performance on downstream future return classification tasks. Moreover, trading signals derived from the denoised data yield more profitable trades with fewer transactions, thereby minimizing transaction costs and increasing overall trading efficiency. Finally, we show that by using classifiers trained on denoised time series, we can recognize the noising state of the market and obtain excess returns.
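
The forward (noising) process referred to above can be sketched with the standard DDPM closed form $x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon$. The linear noise schedule, its endpoints, and the function names below are assumptions for illustration; the abstract does not specify the paper's schedule or conditioning. The reverse direction is shown only as the algebraic inversion given a noise estimate, which in practice a trained network would supply.

```python
import math
import random


def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances (an assumed, commonly used schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + step * t for t in range(num_steps)]


def alpha_bars(betas):
    """Cumulative products abar_t = prod_{s<=t} (1 - beta_s)."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def forward_noise(x0, t, abar, rng):
    """Sample x_t from q(x_t | x_0) = N(sqrt(abar_t) * x0, (1 - abar_t) * I)."""
    scale, sigma = math.sqrt(abar[t]), math.sqrt(1.0 - abar[t])
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [scale * x + sigma * e for x, e in zip(x0, eps)]
    return xt, eps


def estimate_x0(xt, eps_hat, t, abar):
    """Invert the forward step given a noise estimate eps_hat."""
    scale, sigma = math.sqrt(abar[t]), math.sqrt(1.0 - abar[t])
    return [(x - sigma * e) / scale for x, e in zip(xt, eps_hat)]


if __name__ == "__main__":
    rng = random.Random(0)
    abar = alpha_bars(linear_betas(100))
    returns = [0.01, -0.02, 0.005, 0.0]       # toy return series
    noisy, eps = forward_noise(returns, 50, abar, rng)
    print(estimate_x0(noisy, eps, 50, abar))  # recovers the toy series up to rounding
```

With the true noise the inversion is exact up to floating-point error; the quality of a learned denoiser depends entirely on how well the network's noise estimate approximates it.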

Batch-Instructed Gradient for Prompt Evolution: Systematic Prompt Optimization for Enhanced Text-to-Image Synthesis

Jun 13, 2024

Abstract: Text-to-image models have shown remarkable progress in generating high-quality images from user-provided prompts. Despite this, the quality of these images varies due to the models' sensitivity to human language nuances. With advancements in large language models, there are new opportunities to enhance prompt design for image generation tasks. Existing research primarily focuses on optimizing prompts for direct interaction, while less attention is given to scenarios involving intermediary agents, like the Stable Diffusion model. This study proposes a Multi-Agent framework to optimize input prompts for text-to-image generation models. Central to this framework is a prompt generation mechanism that refines initial queries using dynamic instructions, which evolve through iterative performance feedback. High-quality prompts are then fed into a state-of-the-art text-to-image model. A professional prompts database serves as a benchmark to guide the instruction modifier towards generating high-caliber prompts. A scoring system evaluates the generated images, and an LLM generates new instructions based on calculated gradients. This iterative process is managed by the Upper Confidence Bound (UCB) algorithm and assessed using the Human Preference Score version 2 (HPS v2). Preliminary ablation studies highlight the effectiveness of various system components and suggest areas for future improvements.
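
The role of UCB in such a loop can be sketched with the classic UCB1 selection rule. Treating each candidate instruction as a bandit arm and an image score as its reward is an assumption made for this example; the abstract does not describe how the paper configures the algorithm, and the function name and inputs are hypothetical.

```python
import math


def ucb1_select(counts, totals, c=math.sqrt(2)):
    """Pick the arm maximizing mean reward + c * sqrt(ln N / n_i).

    counts[i]: how many times arm i (e.g. a candidate instruction) was tried
    totals[i]: sum of rewards (e.g. image scores) observed for arm i
    Arms that have never been tried are selected first.
    """
    for i, n in enumerate(counts):
        if n == 0:
            return i
    total_pulls = sum(counts)
    scores = [
        totals[i] / counts[i] + c * math.sqrt(math.log(total_pulls) / counts[i])
        for i in range(len(counts))
    ]
    return max(range(len(scores)), key=scores.__getitem__)


if __name__ == "__main__":
    # Arm 1 has a slightly lower mean but far fewer trials, so its
    # exploration bonus dominates and it is chosen next.
    print(ucb1_select(counts=[100, 1], totals=[60.0, 0.5]))
```

The bonus term shrinks as an arm is tried more often, so the rule gradually shifts from exploring rarely tried instructions to exploiting the ones with the best average score.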
