Yue Ma

The Hong Kong University of Science and Technology

FastVMT: Eliminating Redundancy in Video Motion Transfer

Feb 05, 2026

Abstract: Video motion transfer aims to synthesize videos by generating visual content according to a text prompt while transferring the motion pattern observed in a reference video. Recent methods predominantly use the Diffusion Transformer (DiT) architecture. To achieve satisfactory runtime, several methods attempt to accelerate the computations in the DiT, but fail to address structural sources of inefficiency. In this work, we identify and remove two types of computational redundancy in earlier work: motion redundancy arises because the generic DiT architecture does not reflect the fact that frame-to-frame motion is small and smooth; gradient redundancy occurs if one ignores that gradients change slowly along the diffusion trajectory. To mitigate motion redundancy, we mask the corresponding attention layers to a local neighborhood such that interaction weights are not computed for unnecessarily distant image regions. To exploit gradient redundancy, we design an optimization scheme that reuses gradients from previous diffusion steps and skips unwarranted gradient computations. On average, FastVMT achieves a 3.43x speedup without degrading the visual fidelity or the temporal consistency of the generated videos.
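The two ideas in the FastVMT abstract can be illustrated with a minimal sketch. The abstract does not specify the neighborhood shape or the rule for reusing gradients, so the square window (`radius`) and the fixed `reuse_interval` below are assumptions chosen for illustration, and the function names are hypothetical rather than FastVMT's actual code.

```python
def local_attention_mask(height, width, radius):
    """Boolean mask over a height x width token grid.

    mask[q][k] is True when key token k lies within `radius` grid cells
    (Chebyshev distance) of query token q. Pairs outside the window are
    masked out, so their attention weights never need to be computed.
    """
    coords = [(r, c) for r in range(height) for c in range(width)]
    return [
        [max(abs(qr - kr), abs(qc - kc)) <= radius for (kr, kc) in coords]
        for (qr, qc) in coords
    ]


def gradient_recompute_schedule(num_steps, reuse_interval):
    """True at diffusion steps where the gradient is recomputed.

    At the remaining steps the most recent gradient is reused, which relies
    on gradients changing slowly along the diffusion trajectory.
    (A fixed interval is an assumption made for this sketch.)
    """
    return [step % reuse_interval == 0 for step in range(num_steps)]


mask = local_attention_mask(height=3, width=3, radius=1)
print(sum(mask[0]), sum(mask[4]))  # corner token vs. center token
print(gradient_recompute_schedule(num_steps=6, reuse_interval=3))
```

On a 3x3 grid with radius 1, a corner token keeps 4 of 9 keys while the center token keeps all 9. The saving grows with the grid size because the window stays fixed.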

Resilient Load Forecasting under Climate Change: Adaptive Conditional Neural Processes for Few-Shot Extreme Load Forecasting

Feb 04, 2026

Abstract: Extreme weather can substantially change electricity consumption behavior, causing load curves to exhibit sharp spikes and pronounced volatility. If forecasts are inaccurate during those periods, power systems are more likely to face supply shortfalls or localized overloads, forcing emergency actions such as load shedding and increasing the risk of service disruptions and public-safety impacts. This problem is inherently difficult because extreme events can trigger abrupt regime shifts in load patterns, while relevant extreme samples are rare and irregular, making reliable learning and calibration challenging. We propose AdaCNP, a probabilistic forecasting model for data-scarce conditions. AdaCNP learns similarity in a shared embedding space. For each target data point, it evaluates how relevant each historical context segment is to the current condition and reweights the context information accordingly. This design highlights the most informative historical evidence even when extreme samples are rare. It enables few-shot adaptation to previously unseen extreme patterns. AdaCNP also produces predictive distributions for risk-aware decision-making without expensive fine-tuning on the target domain. We evaluate AdaCNP on real-world power-system load data and compare it against a range of representative baselines. The results show that AdaCNP is more robust during extreme periods, reducing the mean squared error by 22% relative to the strongest baseline while achieving the lowest negative log-likelihood, indicating more reliable probabilistic outputs. These findings suggest that AdaCNP can effectively mitigate the combined impact of abrupt distribution shifts and scarce extreme samples, providing more trustworthy forecasts for resilient power system operation under extreme events.
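The similarity-based reweighting described in the AdaCNP abstract can be sketched as a softmax over embedding similarities. The abstract does not give the similarity function or the aggregation rule, so the dot product, the `temperature` parameter, and the weighted average below are assumptions for illustration, not AdaCNP's actual formulation.

```python
import math


def reweight_context(target_emb, context_embs, context_values, temperature=1.0):
    """Weight each historical context segment by its similarity to the target.

    Returns (weights, summary): softmax weights over the context segments and
    the weighted average of their values.
    """
    # Dot-product similarity in the shared embedding space (assumed).
    scores = [
        sum(t * c for t, c in zip(target_emb, emb)) / temperature
        for emb in context_embs
    ]
    # Numerically stable softmax.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    summary = sum(w * v for w, v in zip(weights, context_values))
    return weights, summary


# Two hypothetical context segments; the first resembles the target condition.
weights, summary = reweight_context(
    target_emb=[1.0, 0.0],
    context_embs=[[1.0, 0.0], [0.0, 1.0]],
    context_values=[10.0, 0.0],
)
print(weights, summary)
```

The segment whose embedding matches the target receives the larger weight, so it dominates the summary even when few such segments exist.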

PQTNet: Pixel-wise Quantitative Thermography Neural Network for Estimating Defect Depth in Polylactic Acid Parts by Additive Manufacturing

Feb 03, 2026

Abstract: Defect depth quantification in additively manufactured (AM) components remains a significant challenge for non-destructive testing (NDT). This study proposes a Pixel-wise Quantitative Thermography Neural Network (PQT-Net) to address this challenge for polylactic acid (PLA) parts. A key innovation is a novel data augmentation strategy that reconstructs thermal sequence data into two-dimensional stripe images, preserving the complete temporal evolution of heat diffusion for each pixel. The PQT-Net architecture incorporates a pre-trained EfficientNetV2-S backbone and a custom Residual Regression Head (RRH) with learnable parameters to refine outputs. Comparative experiments demonstrate the superiority of PQT-Net over other deep learning models, achieving a minimum Mean Absolute Error (MAE) of 0.0094 mm and a coefficient of determination (R²) exceeding 99%. The high precision of PQT-Net underscores its potential for robust quantitative defect characterization in AM.
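For readers unfamiliar with the two metrics quoted in the PQT-Net abstract, the sketch below computes the Mean Absolute Error and the coefficient of determination using their standard definitions. The depth values are made up for illustration and are not data from the paper.

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between true and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical defect depths in mm (not taken from the paper).
true_depth = [1.0, 2.0, 3.0]
pred_depth = [1.1, 1.9, 3.0]
print(mean_absolute_error(true_depth, pred_depth))
print(r_squared(true_depth, pred_depth))
```

MAE is reported in the unit of the target (here mm), while the coefficient of determination is unitless and equals 1 for a perfect fit.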

Multimodal Signal Processing For Thermo-Visible-Lidar Fusion In Real-time 3D Semantic Mapping

Jan 14, 2026

Abstract: In complex environments, autonomous robot navigation and environmental perception pose higher requirements for SLAM technology. This paper presents a novel method for semantically enhancing 3D point cloud maps with thermal information. By first performing pixel-level fusion of visible and infrared images, the system projects real-time LiDAR point clouds onto this fused image stream. It then segments heat source features in the thermal channel to instantly identify high temperature targets and applies this temperature information as a semantic layer on the final 3D map. This approach generates maps that not only have accurate geometry but also possess a critical semantic understanding of the environment, making it highly valuable for specific applications like rapid disaster assessment and industrial preventive maintenance.
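The projection and labeling steps in the abstract above can be sketched with a pinhole camera model. This assumes the LiDAR points have already been transformed into the camera frame, that the intrinsics (`fx`, `fy`, `cx`, `cy`) are known from calibration, and that hot targets are found by a simple temperature threshold. All of these are assumptions for illustration; the abstract does not describe the paper's calibration or segmentation method.

```python
def project_points(points_cam, fx, fy, cx, cy, width, height):
    """Project 3D points (camera frame, z forward) to integer pixel coordinates.

    Points behind the camera or outside the image are dropped.
    Returns a list of (u, v, depth) tuples.
    """
    pixels = []
    for x, y, z in points_cam:
        if z <= 0:
            continue  # behind the camera
        u = fx * x / z + cx
        v = fy * y / z + cy
        if 0 <= u < width and 0 <= v < height:
            pixels.append((int(u), int(v), z))
    return pixels


def label_hot_points(pixels, thermal_image, threshold):
    """Attach a boolean 'hot' label to each projected point.

    thermal_image is indexed as thermal_image[row][column].
    """
    return [(u, v, z, thermal_image[v][u] >= threshold) for u, v, z in pixels]


# Tiny hypothetical example: a 4x4 thermal image with one hot pixel.
thermal = [[20.0] * 4 for _ in range(4)]
thermal[2][2] = 80.0
points = [(0.0, 0.0, 1.0), (0.0, 0.0, -1.0), (10.0, 0.0, 1.0)]
pixels = project_points(points, fx=100.0, fy=100.0, cx=2.0, cy=2.0, width=4, height=4)
print(label_hot_points(pixels, thermal, threshold=60.0))
```

Only the first point survives projection. The second lies behind the camera and the third falls outside the image.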

Thermo-LIO: A Novel Multi-Sensor Integrated System for Structural Health Monitoring

Jan 13, 2026

Abstract: Traditional two-dimensional thermography, despite being non-invasive and useful for defect detection in the construction field, is limited in effectively assessing complex geometries, inaccessible areas, and subsurface defects. This paper introduces Thermo-LIO, a novel multi-sensor system that can enhance Structural Health Monitoring (SHM) by fusing thermal imaging with high-resolution LiDAR. To achieve this, the study first develops a multimodal fusion method combining thermal imaging and LiDAR, enabling precise calibration and synchronization of multimodal data streams to create accurate representations of temperature distributions in buildings. Second, it integrates this fusion approach with LiDAR-Inertial Odometry (LIO), enabling full coverage of large-scale structures and allowing for detailed monitoring of temperature variations and defect detection across inspection cycles. Experimental validations, including case studies on a bridge and a hall building, demonstrate that Thermo-LIO can detect detailed thermal anomalies and structural defects more accurately than traditional methods. The system enhances diagnostic precision, enables real-time processing, and expands inspection coverage, highlighting the crucial role of multimodal sensor integration in advancing SHM methodologies for large-scale civil infrastructure.

MiMo-V2-Flash Technical Report

Jan 08, 2026

Abstract: We present MiMo-V2-Flash, a Mixture-of-Experts (MoE) model with 309B total parameters and 15B active parameters, designed for fast, strong reasoning and agentic capabilities. MiMo-V2-Flash adopts a hybrid attention architecture that interleaves Sliding Window Attention (SWA) with global attention, with a 128-token sliding window under a 5:1 hybrid ratio. The model is pre-trained on 27 trillion tokens with Multi-Token Prediction (MTP), employing a native 32k context length and subsequently extended to 256k. To efficiently scale post-training compute, MiMo-V2-Flash introduces a novel Multi-Teacher On-Policy Distillation (MOPD) paradigm. In this framework, domain-specialized teachers (e.g., trained via large-scale reinforcement learning) provide dense and token-level reward, enabling the student model to perfectly master teacher expertise. MiMo-V2-Flash rivals top-tier open-weight models such as DeepSeek-V3.2 and Kimi-K2, despite using only 1/2 and 1/3 of their total parameters, respectively. During inference, by repurposing MTP as a draft model for speculative decoding, MiMo-V2-Flash achieves up to 3.6 acceptance length and 2.6x decoding speedup with three MTP layers. We open-source both the model weights and the three-layer MTP weights to foster open research and community collaboration.
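The hybrid attention layout in the MiMo-V2-Flash abstract can be sketched as follows. The abstract states the 5:1 ratio and the 128-token window but not the exact layer ordering, so placing one global layer after every five SWA layers is an assumption, and the function names are hypothetical.

```python
def layer_pattern(num_layers, swa_per_global=5):
    """Assign an attention type to each layer: five SWA layers, then one global.

    The ordering within each group of six is an assumption for illustration.
    """
    group = swa_per_global + 1
    return ["global" if (i + 1) % group == 0 else "swa" for i in range(num_layers)]


def can_attend(query_pos, key_pos, kind, window=128):
    """Causal visibility of key_pos from query_pos for a given layer type."""
    if key_pos > query_pos:
        return False  # causal: no attention to future tokens
    if kind == "global":
        return True
    # Sliding window: the query sees itself and the previous window - 1 tokens.
    return query_pos - key_pos < window


pattern = layer_pattern(12)
print(pattern)
print(can_attend(200, 73, "swa"), can_attend(200, 72, "swa"), can_attend(200, 72, "global"))
```

In an SWA layer the number of keys per query is capped at the window size regardless of context length, which is where the saving over global attention comes from at long contexts.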

Application Research of a Deep Learning Model Integrating CycleGAN and YOLO in PCB Infrared Defect Detection

Jan 01, 2026

Abstract: This paper addresses the critical bottleneck of infrared (IR) data scarcity in Printed Circuit Board (PCB) defect detection by proposing a cross-modal data augmentation framework integrating CycleGAN and YOLOv8. Unlike conventional methods relying on paired supervision, we leverage CycleGAN to perform unpaired image-to-image translation, mapping abundant visible-light PCB images into the infrared domain. This generative process synthesizes high-fidelity pseudo-IR samples that preserve the structural semantics of defects while accurately simulating thermal distribution patterns. Subsequently, we construct a heterogeneous training strategy that fuses generated pseudo-IR data with limited real IR samples to train a lightweight YOLOv8 detector. Experimental results demonstrate that this method effectively enhances feature learning under low-data conditions. The augmented detector significantly outperforms models trained on limited real data alone and approaches the performance benchmarks of fully supervised training, proving the efficacy of pseudo-IR synthesis as a robust augmentation strategy for industrial inspection.

MiMo-Audio: Audio Language Models are Few-Shot Learners

Dec 29, 2025

Abstract: Existing audio language models typically rely on task-specific fine-tuning to accomplish particular audio tasks. In contrast, humans are able to generalize to new audio tasks with only a few examples or simple instructions. GPT-3 has shown that scaling next-token prediction pretraining enables strong generalization capabilities in text, and we believe this paradigm is equally applicable to the audio domain. By scaling MiMo-Audio's pretraining data to over one hundred million hours, we observe the emergence of few-shot learning capabilities across a diverse set of audio tasks. We develop a systematic evaluation of these capabilities and find that MiMo-Audio-7B-Base achieves SOTA performance on both speech intelligence and audio understanding benchmarks among open-source models. Beyond standard metrics, MiMo-Audio-7B-Base generalizes to tasks absent from its training data, such as voice conversion, style transfer, and speech editing. MiMo-Audio-7B-Base also demonstrates powerful speech continuation capabilities, capable of generating highly realistic talk shows, recitations, livestreaming and debates. At the post-training stage, we curate a diverse instruction-tuning corpus and introduce thinking mechanisms into both audio understanding and generation. MiMo-Audio-7B-Instruct achieves open-source SOTA on audio understanding benchmarks (MMSU, MMAU, MMAR, MMAU-Pro), spoken dialogue benchmarks (Big Bench Audio, MultiChallenge Audio) and instruct-TTS evaluations, approaching or surpassing closed-source models. Model checkpoints and the full evaluation suite are available at https://github.com/XiaomiMiMo/MiMo-Audio.

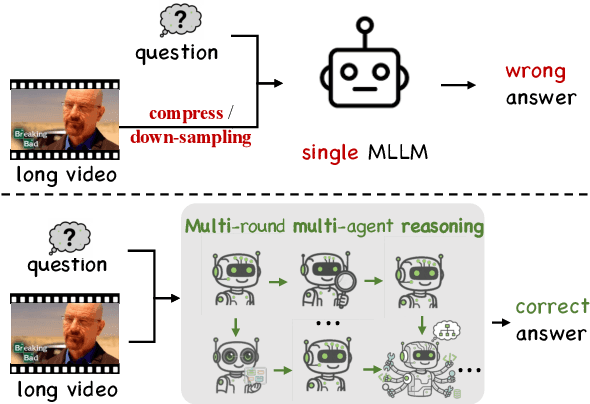

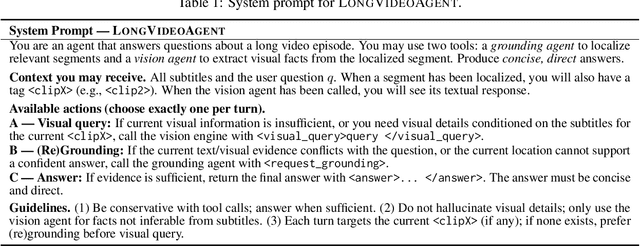

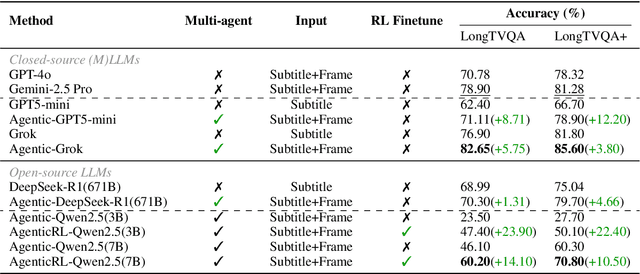

LongVideoAgent: Multi-Agent Reasoning with Long Videos

Dec 23, 2025

Abstract: Recent advances in multimodal LLMs and systems that use tools for long-video QA point to the promise of reasoning over hour-long episodes. However, many methods still compress content into lossy summaries or rely on limited toolsets, weakening temporal grounding and missing fine-grained cues. We propose a multi-agent framework in which a master LLM coordinates a grounding agent to localize question-relevant segments and a vision agent to extract targeted textual observations. The master agent plans with a step limit, and is trained with reinforcement learning to encourage concise, correct, and efficient multi-agent cooperation. This design helps the master agent focus on relevant clips via grounding, complements subtitles with visual detail, and yields interpretable trajectories. On our proposed LongTVQA and LongTVQA+, which are episode-level datasets aggregated from TVQA/TVQA+, our multi-agent system significantly outperforms strong non-agent baselines. Experiments also show that reinforcement learning further strengthens reasoning and planning for the trained agent. Code and data will be shared at https://longvideoagent.github.io/.
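The coordination loop described in the LongVideoAgent abstract can be sketched as a master routine that calls a grounding agent and a vision agent under a step limit. The action names, the `decide`/`ground`/`look` callables, and the scripted policy are hypothetical stand-ins for the LLM-backed agents. The abstract does not specify the actual interfaces.

```python
def run_master(question, decide, ground, look, max_steps=5):
    """Run a master-agent loop with a hard step limit.

    decide(question, observations) returns (action, argument), where action is
    "ground", "look", or "answer". Returns (answer, observations); the answer
    is None if the step budget runs out first.
    """
    observations = []
    clip = None
    for _ in range(max_steps):
        action, argument = decide(question, observations)
        if action == "answer":
            return argument, observations
        if action == "ground":
            clip = ground(question)  # localize a question-relevant segment
            observations.append(("clip", clip))
        elif action == "look":
            # Ask the vision agent for a textual observation about the clip.
            observations.append(("visual", look(clip, argument)))
    return None, observations  # step budget exhausted


def scripted_decide(question, observations):
    """Toy policy: ground first, then look, then answer."""
    if not observations:
        return ("ground", None)
    if len(observations) == 1:
        return ("look", "What is on the table?")
    return ("answer", "a cup")


answer, trace = run_master(
    "What does she pick up?",
    decide=scripted_decide,
    ground=lambda q: (120.0, 135.0),  # made-up clip boundaries in seconds
    look=lambda clip, query: "a cup on the table",
)
print(answer, trace)
```

The returned list of observations is the kind of interpretable trajectory the abstract mentions: each entry records which agent was consulted and what it returned.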

MultiMotion: Multi Subject Video Motion Transfer via Video Diffusion Transformer

Dec 12, 2025

Abstract: Multi-object video motion transfer poses significant challenges for Diffusion Transformer (DiT) architectures due to inherent motion entanglement and lack of object-level control. We present MultiMotion, a novel unified framework that overcomes these limitations. Our core innovation is Mask-aware Attention Motion Flow (AMF), which utilizes SAM2 masks to explicitly disentangle and control motion features for multiple objects within the DiT pipeline. Furthermore, we introduce RectPC, a high-order predictor-corrector solver for efficient and accurate sampling, particularly beneficial for multi-entity generation. To facilitate rigorous evaluation, we construct the first benchmark dataset specifically for DiT-based multi-object motion transfer. MultiMotion demonstrably achieves precise, semantically aligned, and temporally coherent motion transfer for multiple distinct objects, maintaining DiT's high quality and scalability. The code is in the supplementary material.
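To illustrate what a predictor-corrector solver does, the sketch below implements Heun's method, a classic second-order predictor-corrector scheme for an ODE dx/dt = v(x, t). This is not RectPC, whose details the abstract does not give. It only shows the general predict-then-correct pattern, and the test equation is chosen for illustration.

```python
import math


def heun_solve(velocity, x0, t0, t1, num_steps):
    """Integrate dx/dt = velocity(x, t) from t0 to t1 with Heun's method."""
    h = (t1 - t0) / num_steps
    x, t = x0, t0
    for _ in range(num_steps):
        v_start = velocity(x, t)
        x_pred = x + h * v_start               # predictor: explicit Euler step
        v_end = velocity(x_pred, t + h)
        x = x + 0.5 * h * (v_start + v_end)    # corrector: trapezoidal average
        t += h
    return x


# Test problem with a known solution: dx/dt = x, x(0) = 1, so x(1) = e.
approx = heun_solve(lambda x, t: x, x0=1.0, t0=0.0, t1=1.0, num_steps=100)
print(approx, abs(approx - math.e))
```

The corrector reuses the predictor's estimate to average the slope over the step, which gives a more accurate result than a plain Euler step with the same step size on this test problem.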
