Yuan Xu

Technische Universität Berlin, Berlin, Germany

How Do We Research Human-Robot Interaction in the Age of Large Language Models? A Systematic Review

Feb 13, 2026

Abstract: Advances in large language models (LLMs) are profoundly reshaping the field of human-robot interaction (HRI). While prior work has highlighted the technical potential of LLMs, few studies have systematically examined their human-centered impact (e.g., human-oriented understanding, user modeling, and levels of autonomy), making it difficult to consolidate emerging challenges in LLM-driven HRI systems. Therefore, we conducted a systematic literature search following the PRISMA guideline, identifying 86 articles that met our inclusion criteria. Our findings reveal that: (1) LLMs are transforming the fundamentals of HRI by reshaping how robots sense context, generate socially grounded interactions, and maintain continuous alignment with human needs in embodied settings; and (2) current research is largely exploratory, with different studies focusing on different facets of LLM-driven HRI, resulting in wide-ranging choices of experimental setups, study methods, and evaluation metrics. Finally, we identify key design considerations and challenges, offering a coherent overview and guidelines for future research at the intersection of LLMs and HRI.

BridgeV2W: Bridging Video Generation Models to Embodied World Models via Embodiment Masks

Feb 03, 2026

Abstract: Embodied world models have emerged as a promising paradigm in robotics, most of which leverage large-scale Internet videos or pretrained video generation models to enrich visual and motion priors. However, they still face key challenges: a misalignment between coordinate-space actions and pixel-space videos, sensitivity to camera viewpoint, and non-unified architectures across embodiments. To this end, we present BridgeV2W, which converts coordinate-space actions into pixel-aligned embodiment masks rendered from the URDF and camera parameters. These masks are then injected into a pretrained video generation model via a ControlNet-style pathway, which aligns the action control signals with predicted videos, adds view-specific conditioning to accommodate camera viewpoints, and yields a unified world model architecture across embodiments. To mitigate overfitting to static backgrounds, BridgeV2W further introduces a flow-based motion loss that focuses on learning dynamic and task-relevant regions. Experiments on single-arm (DROID) and dual-arm (AgiBot-G1) datasets, covering diverse and challenging conditions with unseen viewpoints and scenes, show that BridgeV2W improves video generation quality compared to prior state-of-the-art methods. We further demonstrate the potential of BridgeV2W on downstream real-world tasks, including policy evaluation and goal-conditioned planning. More results can be found on our project website at https://BridgeV2W.github.io.

One Size, Many Fits: Aligning Diverse Group-Wise Click Preferences in Large-Scale Advertising Image Generation

Feb 02, 2026

Abstract: Advertising image generation has increasingly focused on online metrics like Click-Through Rate (CTR), yet existing approaches adopt a "one-size-fits-all" strategy that optimizes for overall CTR while neglecting preference diversity among user groups. This leads to suboptimal performance for specific groups, limiting targeted marketing effectiveness. To bridge this gap, we present *One Size, Many Fits* (OSMF), a unified framework that aligns diverse group-wise click preferences in large-scale advertising image generation. OSMF begins with product-aware adaptive grouping, which dynamically organizes users based on their attributes and product characteristics, representing each group with rich collective preference features. Building on these groups, preference-conditioned image generation employs a Group-aware Multimodal Large Language Model (G-MLLM) to generate tailored images for each group. The G-MLLM is pre-trained to simultaneously comprehend group features and generate advertising images. Subsequently, we fine-tune the G-MLLM using our proposed Group-DPO for group-wise preference alignment, which effectively enhances each group's CTR on the generated images. To further advance this field, we introduce the Grouped Advertising Image Preference Dataset (GAIP), the first large-scale public dataset of group-wise image preferences, including around 600K groups built from 40M users. Extensive experiments demonstrate that our framework achieves state-of-the-art performance in both offline and online settings. Our code and datasets will be released at https://github.com/JD-GenX/OSMF.

FrontierCS: Evolving Challenges for Evolving Intelligence

Dec 17, 2025

Abstract: We introduce FrontierCS, a benchmark of 156 open-ended problems across diverse areas of computer science, designed and reviewed by experts, including CS PhDs and top-tier competitive programming participants and problem setters. Unlike existing benchmarks that focus on tasks with known optimal solutions, FrontierCS targets problems where the optimal solution is unknown, but the quality of a solution can be objectively evaluated. Models solve these tasks by implementing executable programs rather than outputting a direct answer. FrontierCS includes algorithmic problems, which are often NP-hard variants of competitive programming problems with objective partial scoring, and research problems with the same property. For each problem we provide an expert reference solution and an automatic evaluator. Combining open-ended design, measurable progress, and expert curation, FrontierCS provides a benchmark at the frontier of computer-science difficulty. Empirically, we find that frontier reasoning models still lag far behind human experts on both the algorithmic and research tracks, that increasing reasoning budgets alone does not close this gap, and that models often over-optimize for generating merely workable code instead of discovering high-quality algorithms and system designs.

EgoDemoGen: Novel Egocentric Demonstration Generation Enables Viewpoint-Robust Manipulation

Sep 26, 2025

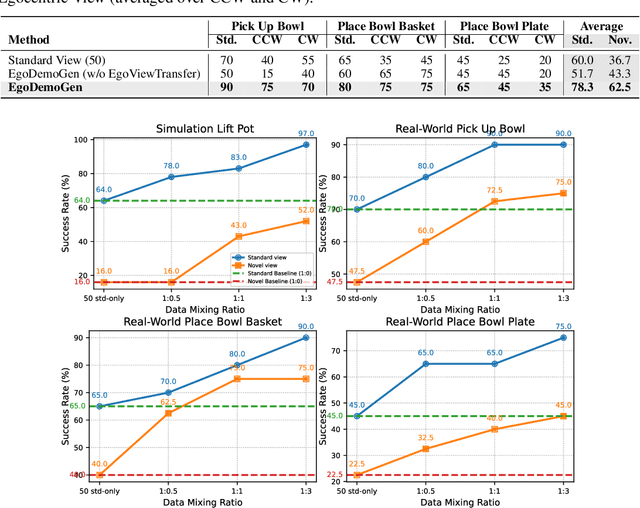

Abstract: Imitation-learning-based policies perform well in robotic manipulation, but they often degrade under *egocentric viewpoint shifts* when trained from a single egocentric viewpoint. To address this issue, we present **EgoDemoGen**, a framework that generates *paired* novel egocentric demonstrations by retargeting actions in the novel egocentric frame and synthesizing the corresponding egocentric observation videos with the proposed generative video repair model **EgoViewTransfer**, which is conditioned on a novel-viewpoint reprojected scene video and a robot-only video rendered from the retargeted joint actions. EgoViewTransfer is finetuned from a pretrained video generation model using a self-supervised double reprojection strategy. We evaluate EgoDemoGen both in simulation (RoboTwin2.0) and on a real-world robot. After training with a mixture of EgoDemoGen-generated novel egocentric demonstrations and original standard egocentric demonstrations, the policy success rate improves by an **absolute** **+17.0%** for the standard egocentric viewpoint and **+17.7%** for novel egocentric viewpoints in simulation. On the real-world robot, the **absolute** improvements are **+18.3%** and **+25.8%**. Moreover, performance continues to improve as the proportion of EgoDemoGen-generated demonstrations increases, with diminishing returns. These results demonstrate that EgoDemoGen provides a practical route to egocentric viewpoint-robust robotic manipulation.

DTPA: Dynamic Token-level Prefix Augmentation for Controllable Text Generation

Aug 06, 2025

Abstract: Controllable Text Generation (CTG) is a vital subfield in Natural Language Processing (NLP), aiming to generate text that aligns with desired attributes. However, previous studies commonly focus on the quality of controllable text generation for short sequences, while the generation of long-form text remains largely underexplored. In this paper, we observe that the controllability of texts generated by the powerful prefix-based method Air-Decoding tends to decline with increasing sequence length, which we hypothesize primarily arises from the observed decay in attention to the prefixes. Meanwhile, different types of prefixes including soft and hard prefixes are also key factors influencing performance. Building on these insights, we propose a lightweight and effective framework called Dynamic Token-level Prefix Augmentation (DTPA) based on Air-Decoding for controllable text generation. Specifically, it first selects the optimal prefix type for a given task. Then we dynamically amplify the attention to the prefix for the attribute distribution to enhance controllability, with a scaling factor growing exponentially as the sequence length increases. Moreover, based on the task, we optionally apply a similar augmentation to the original prompt for the raw distribution to balance text quality. After attribute distribution reconstruction, the generated text satisfies the attribute constraints well. Experiments on multiple CTG tasks demonstrate that DTPA generally outperforms other methods in attribute control while maintaining competitive fluency, diversity, and topic relevance. Further analysis highlights DTPA's superior effectiveness in long text generation.
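
As a rough illustration of the exponentially growing scaling factor mentioned in this abstract, the sketch below boosts the attention mass on prefix positions as the decoding step increases. It is a minimal sketch under stated assumptions: the function names, the `base` value, and the choice to apply the boost as an additive log-term on pre-softmax logits are hypothetical and are not taken from the DTPA paper or the Air-Decoding code.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def prefix_scale(step, base=1.01):
    """Hypothetical scaling factor that grows exponentially with the step."""
    return base ** step


def amplified_attention(logits, prefix_len, step, base=1.01):
    """Boost the first `prefix_len` positions, then renormalize.

    Adding log(scale) to a logit multiplies its unnormalized weight by scale.
    """
    boost = math.log(prefix_scale(step, base))
    boosted = [x + boost if i < prefix_len else x for i, x in enumerate(logits)]
    return softmax(boosted)


def prefix_mass(weights, prefix_len):
    """Total attention weight assigned to the prefix positions."""
    return sum(weights[:prefix_len])
```

With uniform logits, `prefix_mass(amplified_attention(logits, 1, step), 1)` increases with `step`, which is the qualitative behavior the abstract describes for counteracting attention decay in long sequences.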

APR-Transformer: Initial Pose Estimation for Localization in Complex Environments through Absolute Pose Regression

May 14, 2025

Abstract: Precise initialization plays a critical role in the performance of localization algorithms, especially in the context of robotics, autonomous driving, and computer vision. Poor localization accuracy is often a consequence of inaccurate initial poses, which is particularly noticeable in GNSS-denied environments, because GPS signals are the primary source relied upon for initialization. Recent advances in leveraging deep neural networks for pose regression have led to significant improvements in both accuracy and robustness, especially in estimating complex spatial relationships and orientations. In this paper, we introduce APR-Transformer, a model architecture inspired by state-of-the-art methods, which predicts absolute pose (3D position and 3D orientation) using either image or LiDAR data. We demonstrate that our proposed method achieves state-of-the-art performance on established benchmark datasets such as the Oxford Radar RobotCar and DeepLoc datasets. Furthermore, we extend our experiments to include our custom complex APR-BeIntelli dataset. Additionally, we validate the reliability of our approach in GNSS-denied environments by deploying the model in real time on an autonomous test vehicle. This showcases the practical feasibility and effectiveness of our approach. The source code is available at: https://github.com/GT-ARC/APR-Transformer.

Nano-3D: Metasurface-Based Neural Depth Imaging

Mar 20, 2025

Abstract: Depth imaging is a foundational building block for broad applications, such as autonomous driving and virtual/augmented reality. Traditionally, depth cameras have relied on time-of-flight sensors or multi-lens systems to achieve physical depth measurements. However, these systems often face a trade-off between a bulky form factor and imprecise approximations, limiting their suitability for spatially constrained scenarios. Inspired by the emerging advancements of nano-optics, we present Nano-3D, a metasurface-based neural depth imaging solution with an ultra-compact footprint. Nano-3D integrates our custom-fabricated 700 nm thick TiO2 metasurface with a multi-module deep neural network to extract precise metric depth information from monocular metasurface-polarized imagery. We demonstrate the effectiveness of Nano-3D with both simulated and physical experiments. We hope the exhibited success paves the way for the community to bridge future graphics systems with emerging nanomaterial technologies through novel computational approaches.

CrowdHMTware: A Cross-level Co-adaptation Middleware for Context-aware Mobile DL Deployment

Mar 06, 2025

Abstract: Many deep learning (DL) powered mobile and wearable applications today continuously and unobtrusively sense the ambient surroundings to enhance all aspects of human lives. To enable robust and private mobile sensing, DL models are often deployed locally on resource-constrained mobile devices using techniques such as model compression or offloading. However, existing methods, at either the front-end algorithm level (i.e., DL model compression/partitioning) or the back-end scheduling level (i.e., operator/resource scheduling), cannot run online locally: they either require offline retraining to ensure accuracy or rely on manually pre-defined strategies, and therefore struggle with dynamic adaptability. The primary challenge lies in feeding back runtime performance from the back-end level to the front-end level optimization decision. Moreover, adaptive mobile DL model porting middleware with cross-level co-adaptation is less explored, particularly in mobile environments with diversity and dynamics. In response, we introduce CrowdHMTware, a dynamic context-adaptive DL model deployment middleware for heterogeneous mobile devices. It establishes an automated adaptation loop between cross-level functional components, i.e., elastic inference, scalable offloading, and model-adaptive engine, enhancing scalability and adaptability. Experiments with four typical tasks across 15 platforms and a real-world case study demonstrate that CrowdHMTware can effectively scale DL model, offloading, and engine actions across diverse platforms and tasks. It hides run-time system issues from developers, reducing the required developer expertise.

Digital Modeling of Massage Techniques and Reproduction by Robotic Arms

Dec 08, 2024

Abstract: This paper explores the digital modeling and robotic reproduction of traditional Chinese medicine (TCM) massage techniques. We adopt an adaptive admittance control algorithm to optimize force and position control, ensuring safety and comfort. The paper analyzes key TCM techniques from kinematic and dynamic perspectives, and designs robotic systems to reproduce these massage techniques. The results demonstrate that the robot successfully mimics the characteristics of TCM massage, providing a foundation for integrating traditional therapy with modern robotics and expanding assistive therapy applications.
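
To make the admittance control idea in this abstract concrete, the sketch below simulates a one-dimensional admittance law, m·a + b·v = f_des − f_meas, pressing against a spring-like surface. It is a minimal sketch with fixed gains, not the adaptive algorithm the paper uses (the abstract does not describe its adaptation rule). All names, the spring contact model, and the parameter values are assumptions chosen for illustration.

```python
def admittance_step(x, v, f_meas, f_des, dt, m=1.0, b=50.0):
    """One semi-implicit Euler step of m*a + b*v = f_des - f_meas.

    x is the commanded penetration depth (m), v its velocity (m/s).
    m and b are hypothetical virtual mass and damping values.
    """
    a = (f_des - f_meas - b * v) / m
    v_new = v + a * dt
    x_new = x + v_new * dt
    return x_new, v_new


def simulate_contact(f_des=10.0, k_env=1000.0, dt=0.001, steps=5000):
    """Press against an assumed linear spring surface of stiffness k_env (N/m).

    Returns the final depth and the final contact force.
    """
    x, v = 0.0, 0.0
    for _ in range(steps):
        # Assumed contact model: force is proportional to penetration depth.
        f_meas = k_env * max(x, 0.0)
        x, v = admittance_step(x, v, f_meas, f_des, dt)
    return x, k_env * max(x, 0.0)
```

Calling `simulate_contact()` drives the contact force toward the desired force: the commanded position yields to the measured force instead of tracking a rigid trajectory, which is the property that makes admittance control attractive for safe physical contact.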
