Zhaohui Yang

Sherman

Physics-Inspired Target Shape Detection and Reconstruction in mmWave Communication Systems

Feb 05, 2026

Abstract: The integration of sensing and communication (ISAC) is an essential function of future wireless systems. Owing to their large available bandwidth, millimeter-wave (mmWave) ISAC systems can achieve high sensing accuracy. In this paper, we consider the multiple base-station (BS) collaborative sensing problem in a multi-input multi-output (MIMO) orthogonal frequency division multiplexing (OFDM) mmWave communication system. Our aim is to sense the shape of a remote target from the collected signals, which consist of both reflected and scattered components. We first characterize the scattering and reflection effects of mmWave signals based on the Lambertian scattering model. We then apply the periodogram technique to obtain a rough detection of scattering points, and further incorporate the subspace method to achieve more precise detection of scattering and reflection points. Building on these detections, a reconstruction algorithm using the Hough transform and principal component analysis (PCA) is designed for the scenario of a single convex polygon target. To improve the accuracy and completeness of the reconstruction results, we propose a method to further fuse the scattering and reflection points. Extensive simulation results validate the effectiveness of the proposed algorithms.
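
As a rough illustration of how PCA can turn a cluster of detected points into one polygon edge, the sketch below fits the dominant direction of a 2D point set using the closed-form principal axis of its covariance matrix. The abstract does not spell out how PCA is used in the reconstruction, so the function name `principal_axis` and the sample points are hypothetical, and this should be read as one plausible building block rather than the paper's algorithm.

```python
import math

def principal_axis(points):
    """Return the centroid and principal-axis angle (radians) of 2D points.

    Assumption: each polygon edge is estimated from points that have already
    been grouped together (for example by a Hough-transform voting step).
    """
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    # Entries of the 2x2 sample covariance matrix.
    sxx = sum((x - mean_x) ** 2 for x, _ in points) / n
    syy = sum((y - mean_y) ** 2 for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points) / n
    # Closed-form direction of the largest-variance eigenvector in 2D.
    angle = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    return (mean_x, mean_y), angle

# Hypothetical scattering points lying on the line y = 2x + 1.
edge_points = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
centroid, angle = principal_axis(edge_points)
slope = math.tan(angle)
```

The centroid and angle together define the fitted edge line; intersecting neighbouring edge lines would then give candidate polygon vertices.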

Channel Extrapolation for MIMO Systems with the Assistance of Multi-path Information Induced from Channel State Information

Jan 29, 2026

Abstract: Acquiring channel state information (CSI) through traditional methods, such as channel estimation, is increasingly challenging for emerging sixth-generation (6G) mobile networks due to high overhead. To address this issue, channel extrapolation techniques have been proposed to acquire complete CSI from a limited number of known CSI samples. To improve extrapolation accuracy, environmental information, such as visual images or radar data, has been utilized, but this raises challenges including additional hardware, privacy, and multi-modal alignment concerns. To this end, this paper proposes a novel channel extrapolation framework that leverages environment-related multi-path characteristics induced directly from CSI, without integrating additional modalities. Specifically, we propose utilizing the multi-path characteristics in the form of a power-delay profile (PDP), which is acquired using a CSI-to-PDP module. The CSI-to-PDP module is trained in an autoencoder (AE)-based framework by reconstructing the PDPs and constraining the latent low-dimensional features to represent the CSI. We further extract the total power and power-weighted delay of all identified paths in the PDP as the multi-path information. Building on this, we propose a masked autoencoder (MAE) architecture trained in a self-supervised manner to perform channel extrapolation. Unlike standard MAE approaches, our method employs separate encoders to extract features from the masked CSI and the multi-path information, which are then fused by a cross-attention module. Extensive simulations demonstrate that this framework improves extrapolation performance dramatically, with a minor increase in inference time (around 0.1 ms). Furthermore, our model shows strong generalization capabilities, particularly when only a small portion of the CSI is known, outperforming existing benchmarks.
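
To make the multi-path quantities concrete, the sketch below computes a PDP from frequency-domain CSI with a plain inverse DFT and then summarizes it by total power and power-weighted mean delay. This is only a classical signal-processing stand-in: the paper obtains the PDP from a learned CSI-to-PDP module, and the path-selection threshold, the reading of "power-weighted delay" as a weighted mean, and all names below are assumptions made for illustration.

```python
import cmath

def power_delay_profile(csi):
    """Per-tap power of the inverse DFT of frequency-domain CSI."""
    n = len(csi)
    taps = [
        sum(csi[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]
    return [abs(h) ** 2 for h in taps]

def multipath_summary(pdp, threshold_ratio=0.01):
    """Total power and power-weighted mean delay (in tap units).

    Assumption: a tap counts as an identified path when its power is at
    least threshold_ratio times the strongest tap.
    """
    peak = max(pdp)
    paths = [(t, p) for t, p in enumerate(pdp) if p >= threshold_ratio * peak]
    total = sum(p for _, p in paths)
    mean_delay = sum(t * p for t, p in paths) / total
    return total, mean_delay

def synth_csi(n, paths):
    """Synthetic CSI over n subcarriers for (tap_delay, gain) pairs."""
    return [
        sum(g * cmath.exp(-2j * cmath.pi * k * d / n) for d, g in paths)
        for k in range(n)
    ]

# Hypothetical two-path channel: taps 3 and 10 with amplitudes 1.0 and 0.5.
csi = synth_csi(64, [(3, 1.0), (10, 0.5)])
pdp = power_delay_profile(csi)
total_power, weighted_delay = multipath_summary(pdp)
```

For this synthetic channel the two paths carry powers 1.0 and 0.25, so the summary reduces to a total power of 1.25 and a power-weighted delay of 4.4 taps.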

Codebook Design for Limited Feedback in Near-Field XL-MIMO Systems

Jan 15, 2026

Abstract: In this paper, we study efficient codebook design for limited feedback in extremely large-scale multiple-input multiple-output (XL-MIMO) frequency division duplexing (FDD) systems. Existing codebook designs for XL-MIMO, such as the polar-domain codebook, do not adequately account for the practical user (location) distribution, thereby incurring excessive feedback overhead. To address this issue, we propose a novel and efficient feedback codebook tailored to the user distribution. To this end, we first consider a typical scenario where users are uniformly distributed within a specific polar region, based on which a sum-rate maximization problem is formulated to jointly optimize the angle-range samples and the bit allocation between angle and range feedback. This problem is challenging to solve due to the lack of a closed-form expression for the received power in terms of the angle and range samples. By leveraging a Voronoi partitioning approach, we show that uniform angle sampling is optimal for received power maximization. For the more challenging range sampling design, we obtain a tight lower bound on the received power and show that geometric sampling, where the ratio between adjacent samples is constant, maximizes the lower bound and thus serves as a high-quality suboptimal solution. We then extend the proposed framework to accommodate more general non-uniform user distributions via an alternating sampling method. Furthermore, theoretical analysis reveals that as the array size increases, the optimal allocation of feedback bits increasingly favors range samples at the expense of angle samples. Finally, numerical results validate the superior rate performance and robustness of the proposed codebook design under various system setups, achieving significant gains over benchmark schemes, including the widely used polar-domain codebook.
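
The two sampling rules named in the abstract can be sketched in a few lines. The code below generates range samples with a constant ratio between neighbours and angle samples with constant spacing. The region bounds, sample counts, and the choice to place angle samples at cell centres are assumptions for illustration; the paper's actual sample positions and bit allocation come from its optimization and are not reproduced here.

```python
def geometric_range_samples(r_min, r_max, num_samples):
    """Range samples whose adjacent ratio r[i+1] / r[i] is constant."""
    if num_samples < 2:
        raise ValueError("need at least two range samples")
    ratio = (r_max / r_min) ** (1.0 / (num_samples - 1))
    return [r_min * ratio ** i for i in range(num_samples)]

def uniform_angle_samples(theta_min, theta_max, num_samples):
    """Equally spaced angle samples, placed at the centre of each cell."""
    step = (theta_max - theta_min) / num_samples
    return [theta_min + (i + 0.5) * step for i in range(num_samples)]

# Hypothetical polar region: ranges from 5 m to 80 m, angles in [-1, 1].
ranges = geometric_range_samples(5.0, 80.0, 5)
angles = uniform_angle_samples(-1.0, 1.0, 4)
ratios = [b / a for a, b in zip(ranges, ranges[1:])]
```

With these inputs the range samples double at each step (5, 10, 20, 40, 80), which places samples more densely close to the array than far from it.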

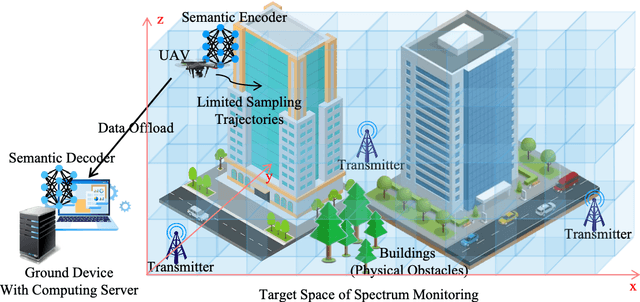

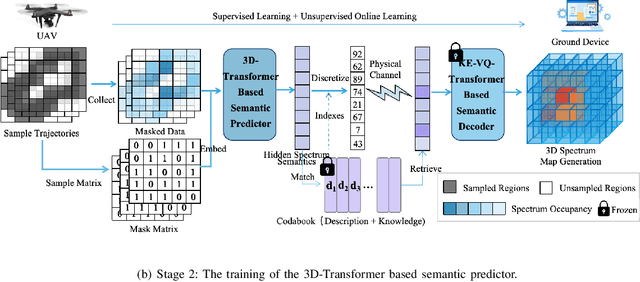

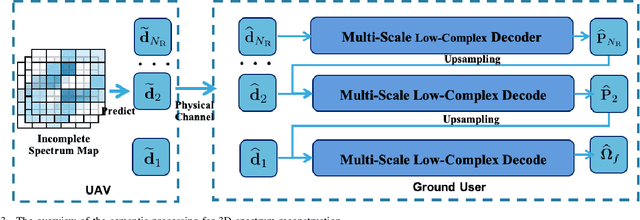

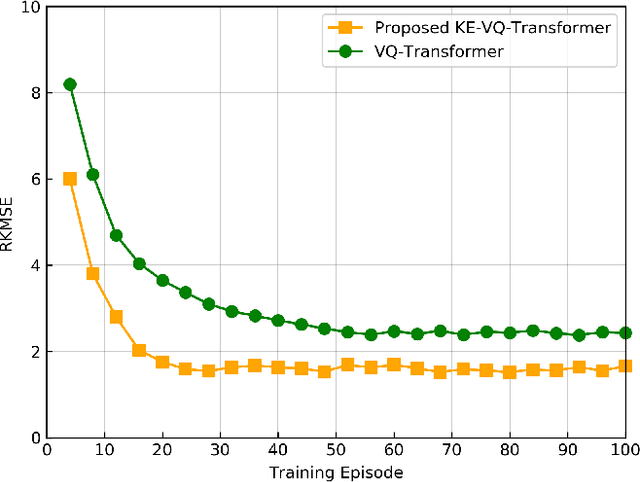

Knowledge-Driven 3D Semantic Spectrum Map: KE-VQ-Transformer Based UAV Semantic Communication and Map Completion

Dec 24, 2025

Abstract: Artificial intelligence (AI)-native three-dimensional (3D) spectrum maps are crucial in spectrum monitoring for intelligent communication networks. However, it is challenging to obtain and transmit 3D spectrum maps in a spectrum-efficient, computation-efficient, and AI-driven manner, especially under complex communication environments and sparse sampling data. In this paper, we consider practical air-to-ground semantic communications for spectrum map completion, where the unmanned aerial vehicle (UAV) measures the spectrum at spatial points and extracts the spectrum semantics, which are then utilized to complete spectrum maps at the ground device. Since statistical machine learning lacks interpretability and can easily be misled by superficial data correlations, we propose a novel knowledge-enhanced semantic spectrum map completion framework with two expert knowledge-driven constraints derived from physical signal propagation models. This framework can capture real-world physics and avoid being confined to superficial data distributions. Furthermore, a knowledge-enhanced vector-quantized Transformer (KE-VQ-Transformer) based multi-scale, low-complexity intelligent completion approach is proposed, where a sparse window is applied to avoid ultra-large 3D attention computation, and the multi-scale design improves the completion performance. The knowledge-enhanced mean square error (KMSE) and root KMSE (RKMSE) are introduced as novel metrics for semantic spectrum map completion that jointly consider numerical precision and physical consistency with the signal propagation model, based on which a joint offline and online training method is developed with supervised and unsupervised knowledge losses. Simulations demonstrate that the proposed scheme outperforms state-of-the-art benchmark schemes in terms of RKMSE.

RSMA-Assisted and Transceiver-Coordinated ICI Management for MIMO-OFDM System

Dec 19, 2025

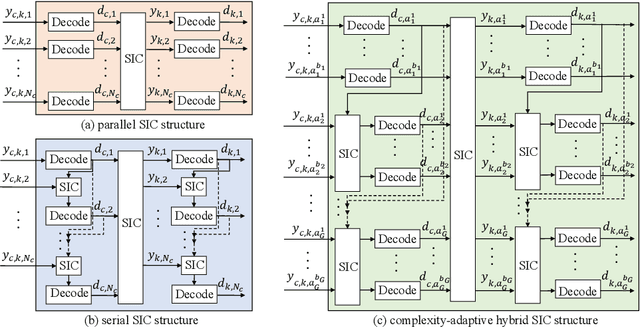

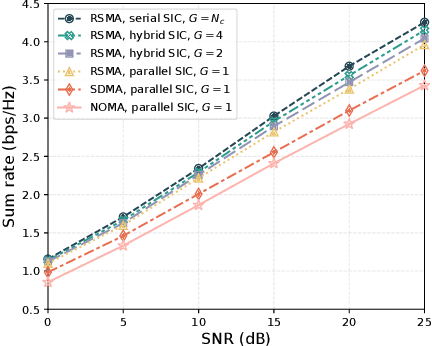

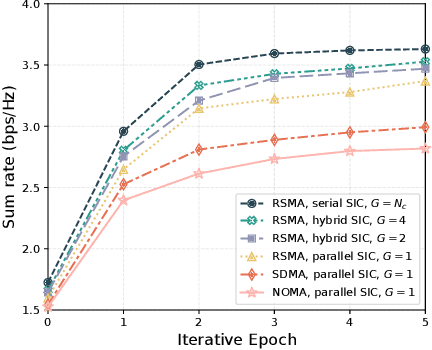

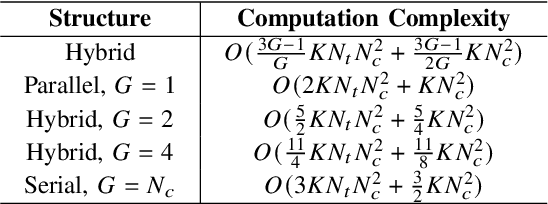

Abstract: High-mobility scenarios are becoming increasingly critical in next-generation communication systems. While multiple-input multiple-output orthogonal frequency division multiplexing (MIMO-OFDM) stands as a prominent technology, its performance in such scenarios is fundamentally limited by Doppler-induced inter-carrier interference (ICI). Rate splitting multiple access (RSMA), recognized as a key multiple access technique for future communications, demonstrates superior interference management capabilities that we leverage to address this challenge. Specifically, we propose a novel RSMA-assisted and transceiver-coordinated transmission scheme for ICI management in MIMO-OFDM systems: (1) At the receiver side, we develop a hybrid successive interference cancellation (SIC) architecture with dynamic subcarrier clustering, which enables parallel intra-cluster and serial inter-cluster processing to balance complexity and performance. (2) At the transmitter side, we design a matched hybrid precoding scheme by formulating a sum-rate maximization problem, which is solved via our proposed augmented boundary-compressed particle swarm optimization (ABC-PSO) algorithm for analog phase optimization and a weighted minimum mean-square error (WMMSE)-based iteration for digital precoding. Simulation results show that our scheme achieves effective ICI suppression and enhanced system capacity with controlled complexity.

Rate Maximization for UAV-assisted ISAC System with Fluid Antennas

Oct 09, 2025

Abstract: This letter investigates the joint sensing problem between unmanned aerial vehicles (UAVs) and base stations (BSs) in integrated sensing and communication (ISAC) systems with fluid antennas (FAs). In this system, the BS enhances its sensing performance through the UAV's perception system. We aim to maximize the communication rate between the BS and the UAV while guaranteeing the joint system's sensing capability. By establishing a communication-sensing model with convex optimization properties, we decompose the problem and apply convex optimization to solve for the key variables progressively. An iterative algorithm employing an alternating optimization approach is subsequently developed to determine the optimal solution, significantly reducing the solution complexity. Simulation results validate the algorithm's effectiveness in balancing system performance.

LMM-Incentive: Large Multimodal Model-based Incentive Design for User-Generated Content in Web 3.0

Oct 06, 2025

Abstract: Web 3.0 represents the next generation of the Internet, which is widely recognized as a decentralized ecosystem that focuses on value expression and data ownership. By leveraging blockchain and artificial intelligence technologies, Web 3.0 offers unprecedented opportunities for users to create, own, and monetize their content, thereby elevating User-Generated Content (UGC) to an entirely new level. However, some self-interested users may exploit the limitations of content curation mechanisms and generate low-quality content with less effort, obtaining platform rewards under information asymmetry. Such behavior can undermine Web 3.0 performance. To this end, we propose LMM-Incentive, a novel Large Multimodal Model (LMM)-based incentive mechanism for UGC in Web 3.0. Specifically, we propose an LMM-based contract-theoretic model to motivate users to generate high-quality UGC, thereby mitigating the adverse selection problem arising from information asymmetry. To alleviate potential moral hazards after contract selection, we leverage LMM agents to evaluate UGC quality, which is the primary component of the contract, utilizing prompt engineering techniques to improve the evaluation performance of the LMM agents. Recognizing that traditional contract design methods cannot effectively adapt to the dynamic environment of Web 3.0, we develop an improved Mixture of Experts (MoE)-based Proximal Policy Optimization (PPO) algorithm for optimal contract design. Simulation results demonstrate the superiority of the proposed MoE-based PPO algorithm over representative benchmarks in the context of contract design. Finally, we deploy the designed contract within an Ethereum smart contract framework, further validating the effectiveness of the proposed scheme.

SimpleVLA-RL: Scaling VLA Training via Reinforcement Learning

Sep 11, 2025

Abstract: Vision-Language-Action (VLA) models have recently emerged as a powerful paradigm for robotic manipulation. Despite substantial progress enabled by large-scale pretraining and supervised fine-tuning (SFT), these models face two fundamental challenges: (i) the scarcity and high cost of large-scale human-operated robotic trajectories required for SFT scaling, and (ii) limited generalization to tasks involving distribution shift. Recent breakthroughs in Large Reasoning Models (LRMs) demonstrate that reinforcement learning (RL) can dramatically enhance step-by-step reasoning capabilities, raising a natural question: Can RL similarly improve the long-horizon step-by-step action planning of VLA? In this work, we introduce SimpleVLA-RL, an efficient RL framework tailored for VLA models. Building upon veRL, we introduce VLA-specific trajectory sampling, scalable parallelization, multi-environment rendering, and optimized loss computation. When applied to OpenVLA-OFT, SimpleVLA-RL achieves SoTA performance on LIBERO and even outperforms $\pi_0$ on RoboTwin 1.0 & 2.0 with the exploration-enhancing strategies we introduce. SimpleVLA-RL not only reduces dependence on large-scale data and enables robust generalization, but also remarkably surpasses SFT in real-world tasks. Moreover, we identify a novel phenomenon, "pushcut", during RL training, wherein the policy discovers previously unseen patterns beyond those seen in the previous training process. GitHub: https://github.com/PRIME-RL/SimpleVLA-RL

Scene Graph-Aided Probabilistic Semantic Communication for Image Transmission

Jul 16, 2025

Abstract: Semantic communication emphasizes the transmission of meaning rather than raw symbols. It offers a promising solution to alleviate network congestion and improve transmission efficiency. In this paper, we propose a wireless image communication framework that employs probability graphs as a shared semantic knowledge base among distributed users. High-level image semantics are represented via scene graphs, and a two-stage compression algorithm is devised to remove predictable components based on learned conditional and co-occurrence probabilities. At the transmitter, the algorithm filters redundant relations and entity pairs, while at the receiver, semantic recovery leverages the same probability graphs to reconstruct the omitted information. For further research, we also put forward a multi-round semantic compression algorithm together with its theoretical performance analysis. Simulation results demonstrate that our semantic-aware scheme achieves superior transmission throughput and satisfactory semantic alignment, validating the efficacy of leveraging high-level semantics for image communication.
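
The idea of omitting predictable components and restoring them from shared knowledge can be sketched with scene-graph triples. In the toy example below, the transmitter drops a relation when it is the most likely one for its entity pair under a shared conditional-probability table, and the receiver fills it back in from the same table. The table contents, the threshold, and the function names are hypothetical, and this covers only relation omission, not the paper's full two-stage algorithm.

```python
# Hypothetical shared knowledge: P(relation | subject, object).
SHARED_PROBS = {
    ("person", "horse"): {"riding": 0.8, "beside": 0.2},
    ("cup", "table"): {"on": 0.95, "under": 0.05},
}

def most_likely_relation(subject, obj, threshold):
    """Most probable relation for the pair, or None if below the threshold."""
    dist = SHARED_PROBS.get((subject, obj))
    if not dist:
        return None
    relation, prob = max(dist.items(), key=lambda item: item[1])
    return relation if prob >= threshold else None

def compress(triples, threshold=0.7):
    """Replace predictable relations with None so they need not be sent."""
    return [
        (s, None if most_likely_relation(s, o, threshold) == r else r, o)
        for s, r, o in triples
    ]

def recover(compressed, threshold=0.7):
    """Restore omitted relations from the same shared table."""
    return [
        (s, r if r is not None else most_likely_relation(s, o, threshold), o)
        for s, r, o in compressed
    ]

scene = [
    ("person", "riding", "horse"),
    ("person", "beside", "horse"),
    ("cup", "on", "table"),
]
sent = compress(scene)
restored = recover(sent)
```

Only the unexpected relation ("beside") has to be transmitted explicitly here; the other two are recovered exactly because both ends apply the same table and threshold.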

Semantic-Aware Visual Information Transmission With Key Information Extraction Over Wireless Networks

Jun 15, 2025

Abstract: The advent of 6G networks demands unprecedented levels of intelligence, adaptability, and efficiency to address challenges such as ultra-high-speed data transmission, ultra-low latency, and massive connectivity in dynamic environments. Traditional wireless image transmission frameworks, reliant on static configurations and isolated source-channel coding, struggle to balance computational efficiency, robustness, and quality under fluctuating channel conditions. To bridge this gap, this paper proposes an AI-native deep joint source-channel coding (JSCC) framework tailored for resource-constrained 6G networks. Our approach integrates key information extraction and adaptive background synthesis to enable intelligent, semantic-aware transmission. Leveraging two AI-driven tools, MediaPipe for human pose detection and Rembg for background removal, the model dynamically isolates foreground features and matches backgrounds from a pre-trained library, reducing data payloads while preserving visual fidelity. Experimental results demonstrate significant improvements in peak signal-to-noise ratio (PSNR) compared with traditional JSCC methods, especially under low-SNR conditions. This approach offers a practical solution for multimedia services in resource-constrained mobile communications.
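
For readers unfamiliar with the reported metric, PSNR is defined as 10 log10(MAX^2 / MSE), where MSE is the mean squared error between the reference and received images. The sketch below computes it for flat lists of pixel values. The 8-bit peak value of 255 and the sample pixels are assumptions; the abstract does not state the image format or evaluation setup.

```python
import math

def psnr(reference, received, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if len(reference) != len(received):
        raise ValueError("images must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(reference, received)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

# Hypothetical 4-pixel images: every pixel is off by 5 grey levels.
original = [10.0, 50.0, 100.0, 200.0]
decoded = [15.0, 45.0, 105.0, 195.0]
quality_db = psnr(original, decoded)
```

Here the MSE is 25, so the result equals 10 log10(255^2 / 25), roughly 34 dB; higher values indicate a closer reconstruction.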
