Xiaowen Shi

Beyond the First Error: Process Reward Models for Reflective Mathematical Reasoning

May 20, 2025

Abstract:Many studies focus on data annotation techniques for training effective PRMs. However, current methods encounter a significant issue when applied to long CoT reasoning processes: they tend to focus solely on the first incorrect step and all preceding steps, assuming that all subsequent steps are incorrect. These methods overlook the unique self-correction and reflection mechanisms inherent in long CoT, where correct reasoning steps may still occur after initial reasoning mistakes. To address this issue, we propose a novel data annotation method for PRMs specifically designed to score the long CoT reasoning process. Given that under the reflection pattern, correct and incorrect steps often alternate, we introduce the concepts of Error Propagation and Error Cessation, enhancing PRMs' ability to identify both effective self-correction behaviors and reasoning based on erroneous steps. Leveraging an LLM-based judger for annotation, we collect 1.7 million data samples to train a 7B PRM and evaluate it at both solution and step levels. Experimental results demonstrate that compared to existing open-source PRMs and PRMs trained on open-source datasets, our PRM achieves superior performance across various metrics, including search guidance, BoN, and F1 scores. Compared to widely used MC-based annotation methods, our annotation approach not only achieves higher data efficiency but also delivers superior performance. Detailed analysis is also conducted to demonstrate the stability and generalizability of our method.
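As a rough illustration of the BoN (best-of-N) evaluation mentioned in the abstract, the sketch below picks one candidate solution from per-step PRM scores. It is not the authors' code: the function names, the score values, and the aggregation options (`min`, `last`, `mean`) are all assumptions made for this example, and the abstract does not say which aggregation the paper uses.

```python
from typing import List, Sequence


def aggregate_step_scores(step_scores: Sequence[float], how: str = "min") -> float:
    """Collapse per-step scores into one solution-level score (hypothetical helper)."""
    if not step_scores:
        raise ValueError("a solution needs at least one scored step")
    if how == "min":
        # One bad step anywhere sinks the whole solution.
        return min(step_scores)
    if how == "last":
        # Only the final step counts, so an early slip that was later
        # corrected is not penalized.
        return step_scores[-1]
    if how == "mean":
        return sum(step_scores) / len(step_scores)
    raise ValueError(f"unknown aggregation: {how}")


def best_of_n(candidates: List[Sequence[float]], how: str = "min") -> int:
    """Return the index of the candidate with the highest aggregated score."""
    scores = [aggregate_step_scores(c, how) for c in candidates]
    # max() returns the first index on ties.
    return max(range(len(scores)), key=scores.__getitem__)


# Made-up step scores for three candidate solutions.
example_candidates = [
    [0.9, 0.2, 0.8, 0.9],  # early mistake followed by a recovery
    [0.7, 0.7, 0.6, 0.3],  # degrades toward the end
    [0.8, 0.8, 0.7, 0.8],  # consistently reasonable
]
```

With these made-up scores, `best_of_n(example_candidates, "min")` selects the third candidate while `best_of_n(example_candidates, "last")` selects the first, which shows how the choice of aggregation interacts with self-corrected solutions.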

FRRffusion: Unveiling Authenticity with Diffusion-Based Face Retouching Reversal

May 13, 2024

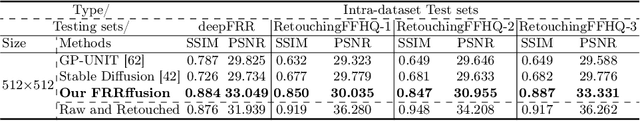

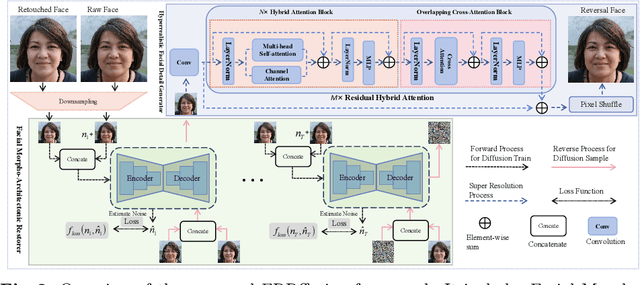

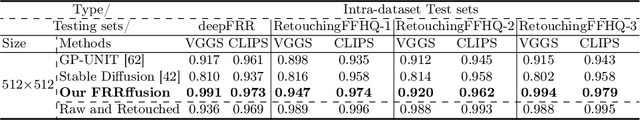

Abstract:Unveiling the real appearance of retouched faces to prevent malicious users from deceptive advertising and economic fraud has been an increasing concern in the era of digital economics. This article makes the first attempt to investigate the face retouching reversal (FRR) problem. We first collect an FRR dataset, named deepFRR, which contains 50,000 StyleGAN-generated high-resolution (1024×1024) facial images and their corresponding versions retouched by a commercial online API. To the best of our knowledge, deepFRR is the first FRR dataset tailored for training deep FRR models. Then, we propose a novel diffusion-based FRR approach (FRRffusion) for the FRR task. Our FRRffusion consists of a coarse-to-fine two-stage network: a diffusion-based Facial Morpho-Architectonic Restorer (FMAR) is constructed to generate the basic contours of low-resolution faces in the first stage, while a Transformer-based Hyperrealistic Facial Detail Generator (HFDG) is designed to create high-resolution facial details in the second stage. Tested on deepFRR, our FRRffusion surpasses the GP-UNIT and Stable Diffusion methods by a large margin in four widespread quantitative metrics. In particular, in a qualitative evaluation with 85 subjects, the images de-retouched by our FRRffusion are visually much closer to the raw face images than both the retouched face images and those restored by the GP-UNIT and Stable Diffusion methods. These results sufficiently validate the efficacy of our work, bridging the recently-standing gap between the FRR and generic image restoration tasks. The dataset and code are available at https://github.com/GZHU-DVL/FRRffusion.

CPDM: Content-Preserving Diffusion Model for Underwater Image Enhancement

Jan 28, 2024

Abstract:Underwater image enhancement (UIE) is challenging since image degradation in aquatic environments is complicated and changes over time. Existing mainstream methods rely on either physical models or data-driven approaches, suffering from performance bottlenecks due to changes in imaging conditions or training instability. In this article, we make the first attempt to adapt the diffusion model to the UIE task and propose a Content-Preserving Diffusion Model (CPDM) to address the above challenges. CPDM first leverages a diffusion model as its fundamental model for stable training and then designs a content-preserving framework to deal with changes in imaging conditions. Specifically, we construct a conditional input module by adopting both the raw image and the difference between the raw and noisy images as the input, which can enhance the model's adaptability by considering the changes involving the raw images in underwater environments. To preserve the essential content of the raw images, we construct a content compensation module for content-aware training by extracting low-level features from the raw images. Extensive experimental results validate the effectiveness of our CPDM, surpassing the state-of-the-art methods in terms of both subjective and objective metrics.

MDDL: A Framework for Reinforcement Learning-based Position Allocation in Multi-Channel Feed

Apr 17, 2023

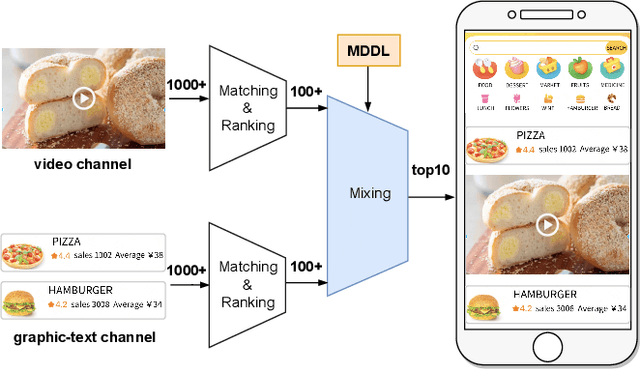

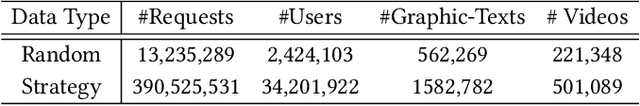

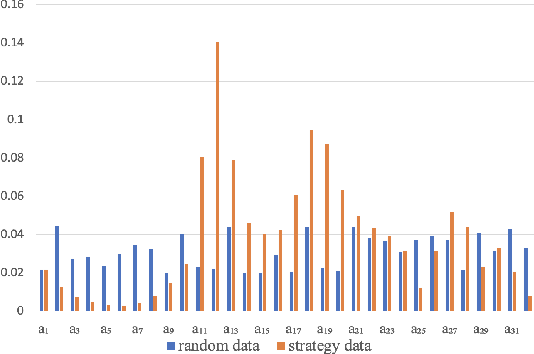

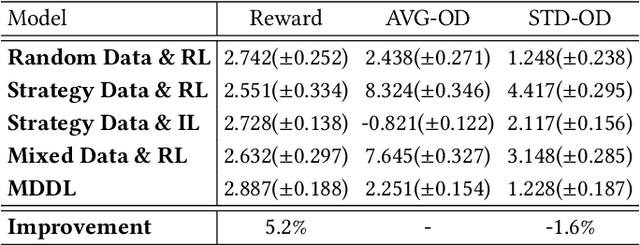

Abstract:Nowadays, the mainstream approach in position allocation systems is to utilize a reinforcement learning model to allocate appropriate locations for items in various channels and then mix them into the feed. Two types of data are employed to train the reinforcement learning (RL) model for position allocation: strategy data and random data. Strategy data is collected from the current online model and suffers from an imbalanced distribution of state-action pairs, resulting in severe overestimation problems during training. Random data, on the other hand, offers a more uniform distribution of state-action pairs, but is challenging to obtain in industrial scenarios because random exploration could negatively impact platform revenue and user experience. As the two types of data have different distributions, designing an effective strategy to leverage both to enhance the efficacy of RL model training has become a highly challenging problem. In this study, we propose a framework named Multi-Distribution Data Learning (MDDL) to address the challenge of effectively utilizing both strategy and random data for training RL models on mixed multi-distribution data. Specifically, MDDL incorporates a novel imitation learning signal to mitigate overestimation problems in strategy data and maximizes the RL signal for random data to facilitate effective learning. In our experiments, we evaluated the proposed MDDL framework in a real-world position allocation system and demonstrated its superior performance compared to the previous baseline. MDDL has been fully deployed on the Meituan food delivery platform and currently serves over 300 million users.

PIER: Permutation-Level Interest-Based End-to-End Re-ranking Framework in E-commerce

Feb 06, 2023

Abstract:Re-ranking, which rearranges the ranking list by modeling the mutual influence among items to better meet users' demands, has drawn increasing attention from both academia and industry. Many existing re-ranking methods directly take the initial ranking list as input and generate the optimal permutation through a well-designed context-wise model, which brings the evaluation-before-reranking problem. Meanwhile, evaluating all candidate permutations brings unacceptable computational costs in practice. Thus, to better balance efficiency and effectiveness, online systems usually use a two-stage architecture that first uses heuristic methods such as beam search to generate a suitable number of candidate permutations, which are then fed into the evaluation model to get the optimal permutation. However, existing methods in both stages can be improved in the following aspects. In the generation stage, heuristic methods only use point-wise prediction scores and lack an effective judgment. In the evaluation stage, most existing context-wise evaluation models only consider the item context and lack more fine-grained feature context modeling. This paper presents a novel end-to-end re-ranking framework named PIER to tackle the above challenges, which still follows the two-stage architecture and contains two main modules named FPSM and OCPM. We apply SimHash in FPSM to efficiently select the top-K candidates from the full permutation based on the user's permutation-level interest. Then we design a novel omnidirectional attention mechanism in OCPM to capture the context information in the permutation. Finally, we jointly train these two modules end-to-end by introducing a comparative learning loss. Offline experiment results demonstrate that PIER outperforms baseline models on both public and industrial datasets, and we have successfully deployed PIER on the Meituan food delivery platform.
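To make the SimHash-based candidate selection more concrete, here is a minimal sketch of the generic technique: vectors are hashed to bit codes with random hyperplanes, and candidates are ranked by Hamming distance to a user code. This is not the PIER implementation. The vector representations, the number of bits, and every function name are assumptions made for illustration only.

```python
import random
from typing import List, Sequence


def make_hyperplanes(dim: int, n_bits: int, seed: int = 0) -> List[List[float]]:
    """Draw n_bits random Gaussian hyperplanes of dimension dim."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]


def simhash(vec: Sequence[float], planes: List[List[float]]) -> int:
    """Pack the signs of the projections of vec onto each hyperplane into an int."""
    code = 0
    for plane in planes:
        dot = sum(p * v for p, v in zip(plane, vec))
        code = (code << 1) | (1 if dot >= 0 else 0)
    return code


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two codes."""
    return bin(a ^ b).count("1")


def top_k_by_simhash(
    user_vec: Sequence[float],
    candidate_vecs: List[Sequence[float]],
    k: int,
    planes: List[List[float]],
) -> List[int]:
    """Return indices of the k candidates whose codes are closest to the user code."""
    user_code = simhash(user_vec, planes)
    dists = [hamming(user_code, simhash(c, planes)) for c in candidate_vecs]
    # Ties are broken by the original candidate order.
    return sorted(range(len(candidate_vecs)), key=lambda i: (dists[i], i))[:k]
```

The appeal of this kind of hashing is that comparing short bit codes is far cheaper than scoring every candidate permutation with a full model, at the cost of an approximate similarity measure.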

Learning List-wise Representation in Reinforcement Learning for Ads Allocation with Multiple Auxiliary Tasks

Apr 02, 2022

Abstract:With the recent prevalence of reinforcement learning (RL), there has been tremendous interest in utilizing RL for ads allocation in recommendation platforms (e.g., e-commerce and news feed sites). For better performance, recent RL-based ads allocation agents make decisions based on representations of list-wise item arrangement. This results in a high-dimensional state-action space, which makes it difficult to learn an efficient and generalizable list-wise representation. To address this problem, we propose a novel algorithm to learn a better representation by leveraging task-specific signals on the Meituan food delivery platform. Specifically, we propose three different types of auxiliary tasks that are based on reconstruction, prediction, and contrastive learning respectively. We conduct extensive offline experiments on the effectiveness of these auxiliary tasks and test our method on a real-world food delivery platform. The experimental results show that our method can learn better list-wise representations and achieve higher revenue for the platform.

Deep Page-Level Interest Network in Reinforcement Learning for Ads Allocation

Apr 01, 2022

Abstract:A mixed list of ads and organic items is usually displayed in the feed, and how to allocate the limited slots to maximize the overall revenue is a key problem. Meanwhile, modeling user preference with historical behavior is essential in recommendation and advertising (e.g., CTR prediction and ads allocation). Most previous works on user behavior modeling only model the user's historical point-level positive feedback (i.e., clicks), which neglects the page-level information of feedback and other types of feedback. To this end, we propose the Deep Page-level Interest Network (DPIN) to model the page-level user preference and exploit multiple types of feedback. Specifically, we introduce four different types of page-level feedback as input, and capture user preference for item arrangement under different receptive fields through the multi-channel interaction module. Through extensive offline and online experiments on the Meituan food delivery platform, we demonstrate that DPIN can effectively model the page-level user preference and increase the revenue for the platform.

Cross DQN: Cross Deep Q Network for Ads Allocation in Feed

Sep 09, 2021

Abstract:E-commerce platforms usually display a mixed list of ads and organic items in the feed. One key problem is to allocate the limited slots in the feed to maximize the overall revenue as well as improve user experience, which requires a good model for user preference. Instead of modeling the influence of individual items on user behaviors, the arrangement signal models the influence of the arrangement of items and may lead to a better allocation strategy. However, most previous strategies fail to model such a signal and therefore result in suboptimal performance. To this end, we propose Cross Deep Q Network (Cross DQN) to extract the arrangement signal by crossing the embeddings of different items and processing the crossed sequence in the feed. Our model results in higher revenue and better user experience than state-of-the-art baselines in offline experiments. Moreover, our model demonstrates a significant improvement in the online A/B test and has been fully deployed on the Meituan feed to serve more than 300 million customers.

A Real-Time Deep Network for Crowd Counting

Feb 16, 2020

Abstract:Automatic analysis of highly crowded scenes has attracted extensive attention in computer vision research. Previous approaches for crowd counting have already achieved promising performance across various benchmarks. However, to deal with real situations, the model should run as fast as possible while maintaining accuracy. In this paper, we propose a compact convolutional neural network for crowd counting which learns a more efficient model with a small number of parameters. With three parallel filters executing the convolutional operation on the input image simultaneously at the front of the network, our model can achieve nearly real-time speed and save computing resources. Experiments on two benchmarks show that our proposed method not only strikes a balance between performance and efficiency that is more suitable for actual scenes, but is also superior to existing light-weight models in speed.
