Hongning Ruan

Multi-View Wireless Sensing via Conditional Generative Learning: Framework and Model Design

May 19, 2025

Abstract: In this paper, we incorporate physical knowledge into learning-based high-precision target sensing using the multi-view channel state information (CSI) between multiple base stations (BSs) and user equipment (UEs). This kind of multi-view sensing problem can be naturally cast into a conditional generation framework. To this end, we design a bipartite neural network architecture: the first part uses an elaborately designed encoder to fuse the latent target features embedded in the multi-view CSI, and the second part uses the fused features as conditioning inputs of a powerful generative model to guide the target's reconstruction. Specifically, the encoder is designed to capture the physical correlation between the CSI and the target, and to adapt to the numbers and positions of BS-UE pairs. The view-specific nature of CSI is captured by introducing a spatial positional embedding scheme, which exploits the structure of electromagnetic (EM) wave propagation channels. Finally, a conditional diffusion model with a weighted loss is employed to generate the target's point cloud from the fused features. Extensive numerical results demonstrate that the proposed generative multi-view (Gen-MV) sensing framework exhibits excellent flexibility and a significant performance improvement in the reconstruction quality of the target's shape and EM properties.

Implicit Neural Compression of Point Clouds

Dec 11, 2024

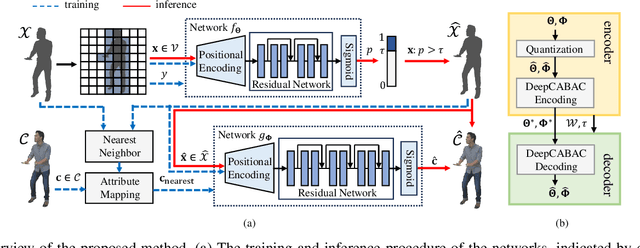

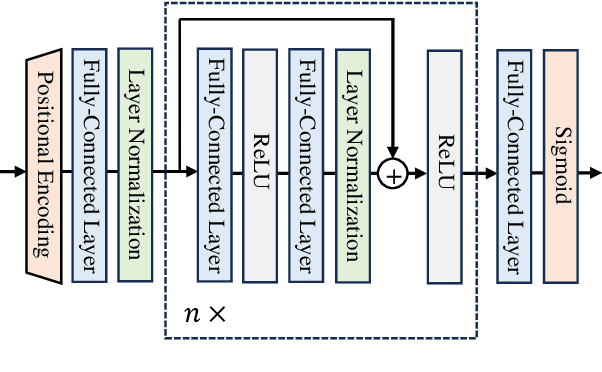

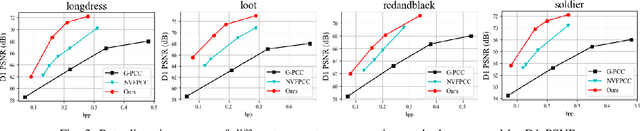

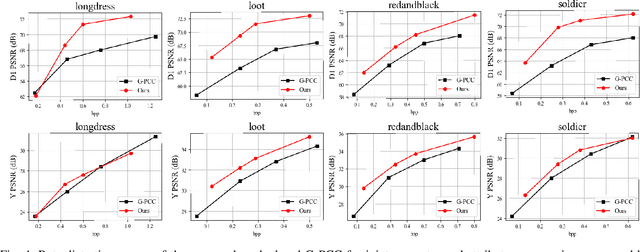

Abstract: Point clouds have gained prominence in numerous applications due to their ability to accurately depict 3D objects and scenes. However, compressing unstructured, high-precision point cloud data effectively remains a significant challenge. In this paper, we propose NeRC$^{\textbf{3}}$, a novel point cloud compression framework leveraging implicit neural representations to handle both geometry and attributes. Our approach employs two coordinate-based neural networks to implicitly represent a voxelized point cloud: the first determines the occupancy status of a voxel, while the second predicts the attributes of occupied voxels. By feeding voxel coordinates into these networks, the receiver can efficiently reconstruct the original point cloud's geometry and attributes. The neural network parameters are quantized and compressed alongside auxiliary information required for reconstruction. Additionally, we extend our method to dynamic point cloud compression with techniques to reduce temporal redundancy, including a 4D spatial-temporal representation termed 4D-NeRC$^{\textbf{3}}$. Experimental results validate the effectiveness of our approach: for static point clouds, NeRC$^{\textbf{3}}$ outperforms octree-based methods in the latest G-PCC standard. For dynamic point clouds, 4D-NeRC$^{\textbf{3}}$ demonstrates superior geometry compression compared to state-of-the-art G-PCC and V-PCC standards and achieves competitive results for joint geometry and attribute compression.
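
The receiver-side reconstruction described in this abstract can be sketched roughly as follows. This is only an illustration, not the authors' implementation: `reconstruct`, `toy_occupancy`, `toy_attribute`, the 0.5 threshold, and the exhaustive grid scan are all assumptions, and the two toy functions merely stand in for the trained coordinate-based networks.

```python
from itertools import product

def reconstruct(occupancy_net, attribute_net, grid_size, threshold=0.5):
    """Query both networks on every voxel of a grid_size^3 grid (hypothetical helper)."""
    points = []
    for coord in product(range(grid_size), repeat=3):
        # First network: is this voxel occupied? (threshold is an assumed choice)
        if occupancy_net(coord) > threshold:
            # Second network: attributes are predicted only for occupied voxels.
            points.append((coord, attribute_net(coord)))
    return points

# Toy stand-ins for the trained networks (assumptions, for illustration only).
def toy_occupancy(coord):
    x, y, z = coord
    # Occupied inside a small ball centred at (2, 2, 2).
    return 1.0 if (x - 2) ** 2 + (y - 2) ** 2 + (z - 2) ** 2 <= 1 else 0.0

def toy_attribute(coord):
    x, y, z = coord
    # Colour derived from position, as a placeholder attribute.
    return (50 * x, 50 * y, 50 * z)

cloud = reconstruct(toy_occupancy, toy_attribute, grid_size=5)
```

Here `cloud` holds `(coordinate, attribute)` pairs for the voxels the toy occupancy function marks as occupied; a real decoder would first dequantize the transmitted network parameters before running these queries.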

Point Cloud Compression with Implicit Neural Representations: A Unified Framework

May 19, 2024

Abstract: Point clouds have become increasingly vital across various applications thanks to their ability to realistically depict 3D objects and scenes. Nevertheless, effectively compressing unstructured, high-precision point cloud data remains a significant challenge. In this paper, we present a pioneering point cloud compression framework capable of handling both geometry and attribute components. Unlike traditional approaches and existing learning-based methods, our framework utilizes two coordinate-based neural networks to implicitly represent a voxelized point cloud. The first network generates the occupancy status of a voxel, while the second network determines the attributes of an occupied voxel. To handle the immense number of voxels within the volumetric space, we partition the space into smaller cubes and focus solely on voxels within non-empty cubes. By feeding the coordinates of these voxels into the respective networks, we reconstruct the geometry and attribute components of the original point cloud. The neural network parameters are further quantized and compressed. Experimental results underscore the superior performance of our proposed method compared to the octree-based approach employed in the latest G-PCC standard. Moreover, our method exhibits high universality when contrasted with existing learning-based techniques.
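
The cube partitioning step mentioned in this abstract can be illustrated with a minimal sketch. The function name `candidate_voxels`, the cube side length of 4, and the sample coordinates are assumptions made for illustration; the abstract does not state how the authors implement or parameterize this step.

```python
from itertools import product

def candidate_voxels(occupied, cube_size):
    """Return the non-empty cubes and every voxel coordinate inside them (hypothetical helper)."""
    # A cube is non-empty if at least one occupied voxel falls inside it.
    non_empty_cubes = {
        (x // cube_size, y // cube_size, z // cube_size) for x, y, z in occupied
    }
    candidates = []
    for cx, cy, cz in sorted(non_empty_cubes):
        # Enumerate all voxels of this cube; only these would be fed to the networks.
        for dx, dy, dz in product(range(cube_size), repeat=3):
            candidates.append(
                (cx * cube_size + dx, cy * cube_size + dy, cz * cube_size + dz)
            )
    return non_empty_cubes, candidates

# Sample occupied voxels (made-up coordinates for illustration).
occupied = {(0, 0, 0), (1, 0, 1), (5, 5, 5)}
cubes, candidates = candidate_voxels(occupied, cube_size=4)
```

With these sample inputs, only the voxels of the cubes that contain a point are enumerated, so the number of network queries scales with the number of non-empty cubes instead of the full volume.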
