Zhaoyang Zhang

Physics-Inspired Target Shape Detection and Reconstruction in mmWave Communication Systems

Feb 05, 2026

Abstract: The integration of sensing and communication (ISAC) is an essential function of future wireless systems. Owing to their large available bandwidth, millimeter-wave (mmWave) ISAC systems can achieve high sensing accuracy. In this paper, we consider the multiple base-station (BS) collaborative sensing problem in a multi-input multi-output (MIMO) orthogonal frequency division multiplexing (OFDM) mmWave communication system. Our aim is to sense the shape of a remote target from the collected signals, which consist of both reflection and scattering components. We first characterize the mmWave scattering and reflection effects based on the Lambertian scattering model. Then we apply the periodogram technique to obtain rough scattering point detection, and further incorporate the subspace method to achieve more precise scattering and reflection point detection. Building on these detections, we design a reconstruction algorithm based on the Hough transform and principal component analysis (PCA) for the scenario of a single convex polygon target. To improve the accuracy and completeness of the reconstruction results, we propose a method to further fuse the scattering and reflection points. Extensive simulation results validate the effectiveness of the proposed algorithms.
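To illustrate the rough detection step, here is a minimal sketch of a textbook OFDM delay periodogram for a single point scatterer. It is not the paper's multi-BS MIMO algorithm: the subcarrier spacing, subcarrier count, FFT size, noiseless single-path channel, and monostatic round-trip geometry are all assumptions chosen for illustration.

```python
import cmath

C = 299_792_458.0            # speed of light in m/s
SUBCARRIER_SPACING = 120e3   # assumed subcarrier spacing in Hz
N_SC = 64                    # assumed number of subcarriers
N_FFT = 256                  # assumed zero-padded periodogram size


def delay_periodogram(h, n_fft):
    """Squared magnitude of the zero-padded inverse DFT of per-subcarrier channel estimates."""
    n_sc = len(h)
    return [
        abs(sum(h[k] * cmath.exp(2j * cmath.pi * k * n / n_fft) for k in range(n_sc))) ** 2 / n_sc
        for n in range(n_fft)
    ]


# Hypothetical noiseless channel of one scatterer whose delay falls exactly on bin 40.
true_delay = 40 / (N_FFT * SUBCARRIER_SPACING)
h = [cmath.exp(-2j * cmath.pi * k * SUBCARRIER_SPACING * true_delay) for k in range(N_SC)]

spectrum = delay_periodogram(h, N_FFT)
peak_bin = max(range(N_FFT), key=spectrum.__getitem__)
est_delay = peak_bin / (N_FFT * SUBCARRIER_SPACING)
est_range = C * est_delay / 2  # assumes a monostatic round trip
```

The peak location is limited to the bin grid, which is why a coarse estimate of this kind is typically refined afterwards, for example with a subspace method as the abstract describes.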

Toward a Sustainable Federated Learning Ecosystem: A Practical Least Core Mechanism for Payoff Allocation

Feb 03, 2026

Abstract: Emerging network paradigms and applications increasingly rely on federated learning (FL) to enable collaborative intelligence while preserving privacy. However, the sustainability of such collaborative environments hinges on a fair and stable payoff allocation mechanism. Focusing on coalition stability, this paper introduces a payoff allocation framework based on the least core (LC) concept. Unlike traditional methods, the LC prioritizes the cohesion of the federation by minimizing the maximum dissatisfaction among all potential subgroups, ensuring that no participant has an incentive to break away. To adapt this game-theoretic concept to practical, large-scale networks, we propose a streamlined implementation with a stack-based pruning algorithm, effectively balancing computational efficiency with allocation precision. Case studies in federated intrusion detection demonstrate that our mechanism correctly identifies pivotal contributors and strategic alliances. The results confirm that the practical LC framework promotes stable collaboration and fosters a sustainable FL ecosystem.
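For a concrete feel of the quantity the least core minimizes, the sketch below computes the maximum dissatisfaction (the excess, coalition value minus coalition payoff) of a payoff vector over all proper coalitions in a toy three-participant game. The coalition values in `v`, the participant names, and both candidate allocations are made up for illustration, and the paper's stack-based pruning algorithm is not reproduced here.

```python
from itertools import combinations


def max_excess(players, v, x):
    """Largest value of v(S) - sum of payoffs in S over proper, non-empty coalitions S."""
    worst = float("-inf")
    for size in range(1, len(players)):
        for coalition in combinations(players, size):
            excess = v[frozenset(coalition)] - sum(x[p] for p in coalition)
            worst = max(worst, excess)
    return worst


players = ("A", "B", "C")
# Hypothetical coalition values, e.g. model accuracy reachable by each subgroup.
v = {
    frozenset("A"): 0.0, frozenset("B"): 0.0, frozenset("C"): 0.0,
    frozenset("AB"): 0.8, frozenset("AC"): 0.6, frozenset("BC"): 0.4,
    frozenset("ABC"): 1.0,
}

equal_split = {"A": 1 / 3, "B": 1 / 3, "C": 1 / 3}
skewed = {"A": 0.5, "B": 0.3, "C": 0.2}

equal_worst = max_excess(players, v, equal_split)
skewed_worst = max_excess(players, v, skewed)
```

In this toy game the equal split leaves coalition {A, B} with a positive excess, so that pair would gain by breaking away, while the skewed allocation leaves no coalition with a positive excess. A least core allocation is one that makes this maximum as small as possible among all allocations that distribute the grand coalition's value; this brute-force enumeration grows exponentially with the number of participants, which is the cost a pruning strategy aims to reduce.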

Talk2Move: Reinforcement Learning for Text-Instructed Object-Level Geometric Transformation in Scenes

Jan 08, 2026

Abstract: We introduce Talk2Move, a reinforcement learning (RL) based diffusion framework for text-instructed spatial transformation of objects within scenes. Spatially manipulating objects in a scene through natural language poses a challenge for multimodal generation systems. While existing text-based manipulation methods can adjust appearance or style, they struggle to perform object-level geometric transformations, such as translating, rotating, or resizing objects, because of scarce paired supervision and pixel-level optimization limits. Talk2Move employs Group Relative Policy Optimization (GRPO) to explore geometric actions through diverse rollouts generated from input images and lightweight textual variations, removing the need for costly paired data. A spatial-reward-guided model aligns geometric transformations with the linguistic description, while off-policy step evaluation and active step sampling improve learning efficiency by focusing on informative transformation stages. Furthermore, we design object-centric spatial rewards that evaluate displacement, rotation, and scaling behaviors directly, enabling interpretable and coherent transformations. Experiments on curated benchmarks demonstrate that Talk2Move achieves precise, consistent, and semantically faithful object transformations, outperforming existing text-guided editing approaches in both spatial accuracy and scene coherence.
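The group-relative part of GRPO can be sketched in a few lines: each rollout's reward is normalized against the other rollouts generated for the same prompt, so no learned value function is needed. This follows the commonly described GRPO formulation rather than anything specific to Talk2Move; the reward values are hypothetical, and the use of the population standard deviation and the `eps` constant are assumptions.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward by the mean and standard deviation of its rollout group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical spatial rewards for four rollouts of one edit instruction.
rewards = [0.2, 0.5, 0.9, 0.4]
advantages = group_relative_advantages(rewards)
```

Rollouts that score above the group mean receive positive advantages and are reinforced, while those below the mean are discouraged.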

Random Multiplexing

Dec 30, 2025

Abstract: As wireless communication applications evolve from traditional multipath environments to high-mobility scenarios like unmanned aerial vehicles, multiplexing techniques have advanced accordingly. Traditional single-carrier frequency-domain equalization (SC-FDE) and orthogonal frequency-division multiplexing (OFDM) have given way to emerging orthogonal time-frequency space (OTFS) and affine frequency-division multiplexing (AFDM). These approaches exploit specific channel structures to diagonalize or sparsify the effective channel, thereby enabling low-complexity detection. However, their reliance on these structures significantly limits their robustness in dynamic, real-world environments. To address these challenges, this paper studies a random multiplexing technique that is decoupled from the physical channels, enabling its application to arbitrary norm-bounded and spectrally convergent channel matrices. Random multiplexing achieves statistical fading-channel ergodicity for transmitted signals by constructing an equivalent input-isotropic channel matrix in the random transform domain. It guarantees the asymptotic replica MAP bit-error rate (BER) optimality of AMP-type detectors for linear systems with arbitrary norm-bounded, spectrally convergent channel matrices and signaling configurations, under the unique fixed point assumption. A low-complexity cross-domain memory AMP (CD-MAMP) detector is considered, leveraging the sparsity of the time-domain channel and the randomness of the equivalent channel. Optimal power allocations are derived to minimize the replica MAP BER and maximize the replica constrained capacity of random multiplexing systems. The optimal coding principle and the replica constrained-capacity optimality of the CD-MAMP detector are investigated for random multiplexing systems. Additionally, the versatility of random multiplexing in diverse wireless applications is explored.

Rate Maximization for UAV-assisted ISAC System with Fluid Antennas

Oct 09, 2025

Abstract: This letter investigates the joint sensing problem between unmanned aerial vehicles (UAVs) and base stations (BSs) in integrated sensing and communication (ISAC) systems with fluid antennas (FAs). In this system, the BS enhances its sensing performance through the UAV's perception system. We aim to maximize the communication rate between the BS and UAV while guaranteeing the joint system's sensing capability. By establishing a communication-sensing model with convex optimization properties, we decompose the problem and apply convex optimization to progressively solve for the key variables. An iterative algorithm employing an alternating optimization approach is subsequently developed to determine the optimal solution, significantly reducing the solution complexity. Simulation results validate the algorithm's effectiveness in balancing system performance.

Soft Graph Transformer for MIMO Detection

Sep 16, 2025

Abstract: We propose the Soft Graph Transformer (SGT), a Soft-Input-Soft-Output neural architecture tailored for MIMO detection. While Maximum Likelihood (ML) detection achieves optimal accuracy, its prohibitive exponential complexity renders it impractical for real-world systems. Conventional message passing algorithms offer tractable alternatives but rely on large-system asymptotics and random matrix assumptions, both of which break down under practical implementations. Prior Transformer-based detectors, on the other hand, fail to incorporate the MIMO factor graph structure and cannot utilize decoder-side soft information, limiting their standalone performance and their applicability in iterative detection-decoding (IDD). To overcome these limitations, SGT integrates message passing directly into a graph-aware attention mechanism and supports decoder-informed updates through soft-input embeddings. This design enables effective soft-output generation while preserving computational efficiency. As a standalone detector, SGT closely approaches ML performance and surpasses prior Transformer-based approaches.

Integrated Communication and Remote Sensing in LEO Satellite Systems: Protocol, Architecture and Prototype

Aug 14, 2025

Abstract: In this paper, we explore the integration of communication and synthetic aperture radar (SAR)-based remote sensing in low Earth orbit (LEO) satellite systems to provide real-time SAR imaging and information transmission. Considering the high-mobility characteristics of satellite channels and limited processing capabilities of satellite payloads, we propose an integrated communication and remote sensing architecture based on an orthogonal delay-Doppler division multiplexing (ODDM) signal waveform. Both communication and SAR imaging functionalities are achieved with an integrated transceiver onboard the LEO satellite, utilizing the same waveform and radio frequency (RF) front-end. Based on such an architecture, we propose a transmission protocol compatible with the 5G NR standard using downlink pilots for joint channel estimation and SAR imaging. Furthermore, we design a unified signal processing framework for the integrated satellite receiver to simultaneously achieve high-performance channel sensing, low-complexity channel equalization and interference-free SAR imaging. Finally, the performance of the proposed integrated system is demonstrated through comprehensive analysis and extensive simulations in the sub-6 GHz band. Moreover, a software-defined radio (SDR) prototype is presented to validate its effectiveness for real-time SAR imaging and information transmission in satellite direct-connect user equipment (UE) scenarios within the millimeter-wave (mmWave) band.

ToonComposer: Streamlining Cartoon Production with Generative Post-Keyframing

Aug 14, 2025

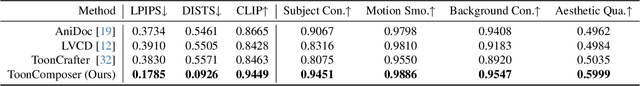

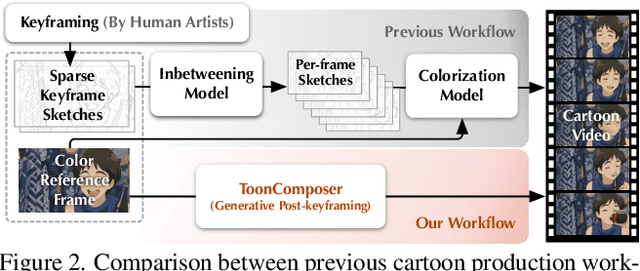

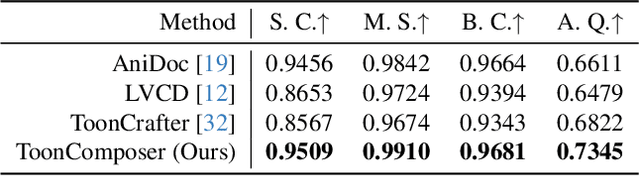

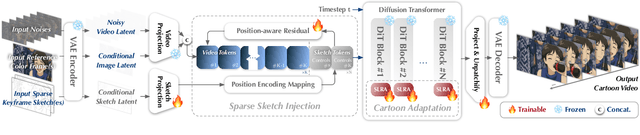

Abstract: Traditional cartoon and anime production involves keyframing, inbetweening, and colorization stages, which require intensive manual effort. Despite recent advances in AI, existing methods often handle these stages separately, leading to error accumulation and artifacts. For instance, inbetweening approaches struggle with large motions, while colorization methods require dense per-frame sketches. To address this, we introduce ToonComposer, a generative model that unifies inbetweening and colorization into a single post-keyframing stage. ToonComposer employs a sparse sketch injection mechanism to provide precise control using keyframe sketches. Additionally, it uses a cartoon adaptation method with the spatial low-rank adapter to tailor a modern video foundation model to the cartoon domain while keeping its temporal prior intact. Requiring as few as a single sketch and a colored reference frame, ToonComposer excels with sparse inputs, while also supporting multiple sketches at any temporal location for more precise motion control. This dual capability reduces manual workload and improves flexibility, empowering artists in real-world scenarios. To evaluate our model, we further created PKBench, a benchmark featuring human-drawn sketches that simulate real-world use cases. Our evaluation demonstrates that ToonComposer outperforms existing methods in visual quality, motion consistency, and production efficiency, offering a superior and more flexible solution for AI-assisted cartoon production.

Rethinking Multi-User Communication in Semantic Domain: Enhanced OMDMA by Shuffle-Based Orthogonalization and Diffusion Denoising

Jul 28, 2025

Abstract: Inter-user interference remains a critical bottleneck in wireless communication systems, particularly in the emerging paradigm of semantic communication (SemCom). Compared to traditional systems, inter-user interference in SemCom severely degrades key semantic information, often causing worse performance than Gaussian noise under the same power level. To address this challenge, we draw on the recently proposed concept of Orthogonal Model Division Multiple Access (OMDMA), which distinguishes users through the semantic orthogonality rooted in personalized joint source-channel coding (JSCC) models. We propose a novel, scalable framework that eliminates the need for the user-specific JSCC models required by the original OMDMA. Our key innovation lies in shuffle-based orthogonalization, where randomly permuting the positions of JSCC feature vectors transforms inter-user interference into Gaussian-like noise. By assigning each user a unique shuffling pattern, the interference is treated as channel noise, enabling effective mitigation using diffusion models (DMs). This approach not only simplifies system design by requiring a single universal JSCC model but also enhances privacy, as shuffling patterns act as implicit private keys. Additionally, we extend the framework to scenarios involving semantically correlated data. By grouping users based on semantic similarity, a cooperative beamforming strategy is introduced to exploit redundancy in correlated data, further improving system performance. Extensive simulations demonstrate that the proposed method outperforms state-of-the-art multi-user SemCom frameworks, achieving superior semantic fidelity, robustness to interference, and scalability, all without requiring additional training overhead.
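The shuffling idea can be sketched with plain permutations. In this toy example two users permute their feature vectors with different seeded patterns, the channel superimposes them, and the receiver of user A inverts only A's pattern: A's features return to their original positions while B's contribution stays scrambled. The integer feature values, the seeds, and the noiseless additive channel are assumptions for illustration; the JSCC encoder and the diffusion-based denoiser from the abstract are not modeled.

```python
import random


def make_permutation(n, seed):
    """User-specific shuffling pattern derived from a seed (acts like a private key)."""
    perm = list(range(n))
    random.Random(seed).shuffle(perm)
    return perm


def shuffle(features, perm):
    """Place features[perm[pos]] at position pos."""
    return [features[i] for i in perm]


def unshuffle(received, perm):
    """Invert shuffle() for the same permutation."""
    out = [0] * len(perm)
    for pos, src in enumerate(perm):
        out[src] = received[pos]
    return out


# Hypothetical feature vectors for two users (integers keep the arithmetic exact).
feat_a = [3, -1, 4, 1, -5, 9, 2, -6]
feat_b = [2, 7, -1, 8, 2, -8, 1, 8]
perm_a = make_permutation(len(feat_a), seed=11)
perm_b = make_permutation(len(feat_b), seed=23)

# Noiseless superposition of both shuffled transmissions.
superimposed = [a + b for a, b in zip(shuffle(feat_a, perm_a), shuffle(feat_b, perm_b))]

# User A's receiver undoes only its own pattern.
recovered_a = unshuffle(superimposed, perm_a)
residual = [r - a for r, a in zip(recovered_a, feat_a)]
```

Here `residual` is a reordering of user B's features, so the structure of B's signal is broken up from A's point of view, which is the property the abstract relies on when treating interference as noise.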

Scene Graph-Aided Probabilistic Semantic Communication for Image Transmission

Jul 16, 2025

Abstract: Semantic communication emphasizes the transmission of meaning rather than raw symbols. It offers a promising solution to alleviate network congestion and improve transmission efficiency. In this paper, we propose a wireless image communication framework that employs probability graphs as a shared semantic knowledge base among distributed users. High-level image semantics are represented via scene graphs, and a two-stage compression algorithm is devised to remove predictable components based on learned conditional and co-occurrence probabilities. At the transmitter, the algorithm filters redundant relations and entity pairs, while at the receiver, semantic recovery leverages the same probability graphs to reconstruct omitted information. For further research, we also put forward a multi-round semantic compression algorithm with its theoretical performance analysis. Simulation results demonstrate that our semantic-aware scheme achieves superior transmission throughput and satisfactory semantic alignment, validating the efficacy of leveraging high-level semantics for image communication.
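A simplified, single-stage version of the compression idea can be sketched as follows: the transmitter omits a relation when the shared probability graph already predicts it with high confidence, and the receiver fills the gap using the same graph. The probability table, the threshold, and the triple format are hypothetical, and the entity-pair filtering and multi-round variants mentioned in the abstract are not covered.

```python
# Hypothetical shared knowledge base: P(relation | subject, object), known to both ends.
PROB_GRAPH = {
    ("person", "horse"): {"riding": 0.85, "feeding": 0.15},
    ("cup", "table"): {"on": 0.95, "under": 0.05},
    ("dog", "ball"): {"chasing": 0.55, "holding": 0.45},
}
THRESHOLD = 0.8  # assumed confidence above which a relation counts as predictable


def most_likely(subject, obj):
    """Most probable relation for an entity pair and its probability."""
    dist = PROB_GRAPH.get((subject, obj), {})
    if not dist:
        return None, 0.0
    rel = max(dist, key=dist.get)
    return rel, dist[rel]


def compress(triples):
    """Replace a relation with None when the receiver can predict it from the graph."""
    out = []
    for s, r, o in triples:
        guess, p = most_likely(s, o)
        out.append((s, None, o) if guess == r and p >= THRESHOLD else (s, r, o))
    return out


def recover(compressed):
    """Fill omitted relations with the most probable one from the shared graph."""
    return [(s, r if r is not None else most_likely(s, o)[0], o) for s, r, o in compressed]


scene = [("person", "riding", "horse"), ("cup", "on", "table"), ("dog", "holding", "ball")]
sent = compress(scene)
restored = recover(sent)
```

In this example two of the three relations are omitted before transmission and restored exactly, while the less predictable one ("holding") is sent explicitly.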
