Xinquan Wang

Robust Deep Learning-Based Physical Layer Communications: Strategies and Approaches

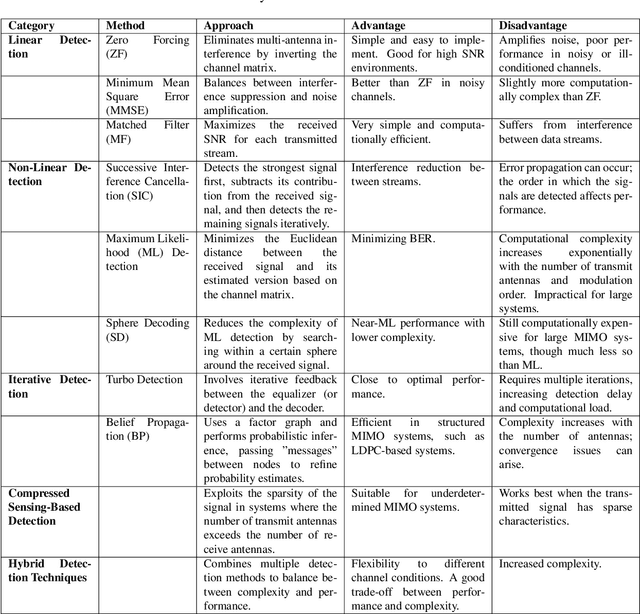

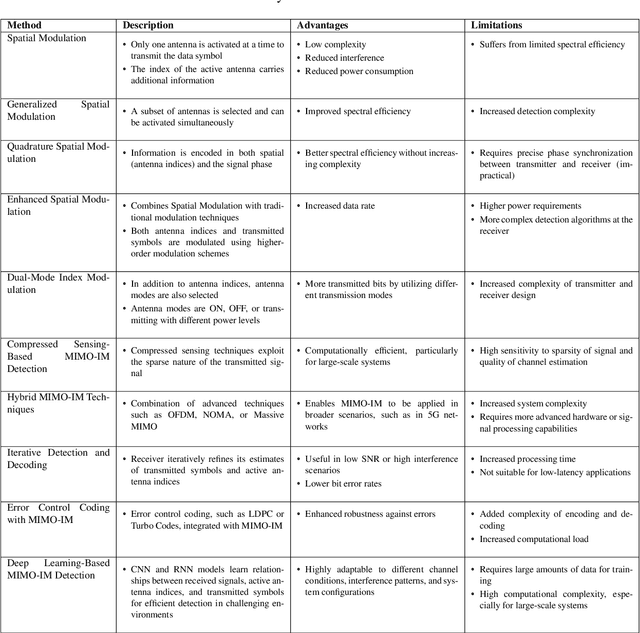

May 02, 2025

Abstract: Deep learning (DL) has emerged as a transformative technology with immense potential to reshape the sixth-generation (6G) wireless communication network. By utilizing advanced algorithms for feature extraction and pattern recognition, DL provides unprecedented capabilities in optimizing network efficiency and performance, particularly in physical layer communications. Although DL technologies present great potential, they also face significant robustness challenges, which are expected to intensify in the complex and demanding 6G environment. Specifically, current DL models typically exhibit substantial performance degradation in dynamic environments with time-varying channels, interference, noise, and varying scenarios, which limits their effectiveness in diverse real-world applications. This paper provides a comprehensive overview of strategies and approaches for robust DL-based methods in physical layer communications. First, we introduce the key challenges that current DL models face. Then, we delve into a detailed examination of DL approaches specifically tailored to enhance robustness in 6G, which are classified into data-driven and model-driven strategies. Finally, we verify the effectiveness of these methods through case studies and outline future research directions.

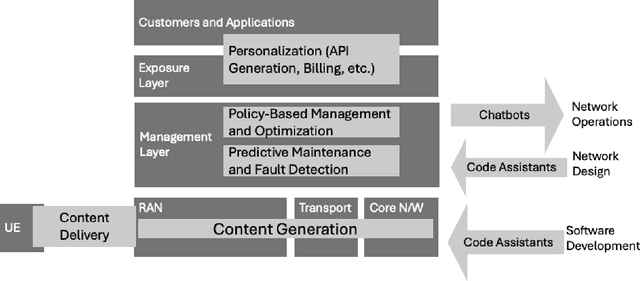

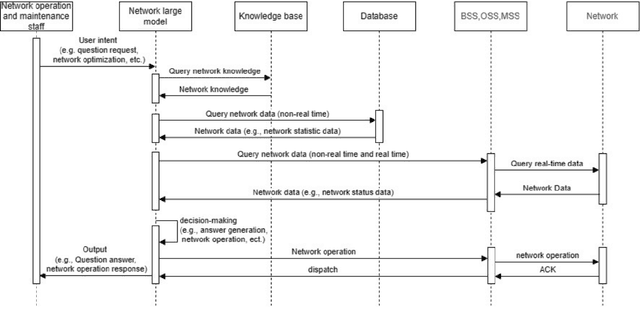

Wireless Large AI Model: Shaping the AI-Native Future of 6G and Beyond

Apr 20, 2025

Abstract: The emergence of sixth-generation and beyond communication systems is expected to fundamentally transform digital experiences by introducing unparalleled levels of intelligence, efficiency, and connectivity. A promising technology poised to enable this revolutionary vision is the wireless large AI model (WLAM), characterized by its exceptional capabilities in data processing, inference, and decision-making. In light of these remarkable capabilities, this paper provides a comprehensive survey of WLAM, elucidating its fundamental principles, diverse applications, critical challenges, and future research opportunities. We begin by introducing the background of WLAM and analyzing the key synergies with wireless networks, emphasizing the mutual benefits. Subsequently, we explore the foundational characteristics of WLAM, delving into their unique relevance in wireless environments. Then, the role of WLAM in optimizing wireless communication systems across various use cases and the reciprocal benefits are systematically investigated. Furthermore, we discuss the integration of WLAM with emerging technologies, highlighting their potential to enable transformative capabilities and breakthroughs in wireless communication. Finally, we thoroughly examine the high-level challenges hindering the practical implementation of WLAM and discuss pivotal future research directions.

Liquid Neural Networks: Next-Generation AI for Telecom from First Principles

Apr 03, 2025

Abstract: Artificial intelligence (AI) has emerged as a transformative technology with immense potential to reshape the next generation of wireless networks. By leveraging advanced algorithms and machine learning techniques, AI offers unprecedented capabilities in optimizing network performance, enhancing data processing efficiency, and enabling smarter decision-making processes. However, existing AI solutions face significant challenges in terms of robustness and interpretability. Specifically, current AI models exhibit substantial performance degradation in dynamic environments with varying data distributions, and the black-box nature of these algorithms raises concerns regarding safety, transparency, and fairness. This presents a major challenge in integrating AI into practical communication systems. Recently, a novel type of neural network, known as liquid neural networks (LNNs), has been designed from first principles to address these issues. In this paper, we explore the potential of LNNs in telecommunications. First, we illustrate the mechanisms of LNNs and highlight their unique advantages over traditional networks. Then, we unveil the opportunities that LNNs bring to future wireless networks. Furthermore, we discuss the challenges and design directions for the implementation of LNNs. Finally, we summarize the performance of LNNs in two case studies.

TeleMoM: Consensus-Driven Telecom Intelligence via Mixture of Models

Apr 03, 2025

Abstract: Large language models (LLMs) face significant challenges in specialized domains like telecommunication (Telecom) due to technical complexity, specialized terminology, and rapidly evolving knowledge. Traditional methods, such as scaling model parameters or retraining on domain-specific corpora, are computationally expensive and yield diminishing returns, while existing approaches like retrieval-augmented generation, mixture of experts, and fine-tuning struggle with accuracy, efficiency, and coordination. To address this issue, we propose Telecom mixture of models (TeleMoM), a consensus-driven ensemble framework that integrates multiple LLMs for enhanced decision-making in Telecom. TeleMoM employs a two-stage process: proponent models generate justified responses, and an adjudicator finalizes decisions, supported by a quality-checking mechanism. This approach leverages the strengths of diverse models to improve accuracy, reduce biases, and handle domain-specific complexities effectively. Evaluation results demonstrate that TeleMoM achieves a 9.7% increase in answer accuracy, highlighting its effectiveness in Telecom applications.
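
The two-stage process described in this abstract can be pictured with a minimal Python sketch. It is not the TeleMoM implementation: the abstract gives no interfaces, so `Proposal`, `passes_quality_check`, `majority_adjudicator`, and `mixture_of_models` are hypothetical names, the quality check is a placeholder, and a plain majority vote stands in for the adjudicator, which the abstract does not specify in detail.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Proposal:
    """One proponent's output: an answer plus its justification."""
    answer: str
    justification: str


# Hypothetical interfaces (assumptions, not taken from the paper).
Proponent = Callable[[str], Proposal]
Adjudicator = Callable[[str, List[Proposal]], str]


def passes_quality_check(proposal: Proposal) -> bool:
    # Placeholder check: require a non-empty answer and justification.
    return bool(proposal.answer.strip()) and bool(proposal.justification.strip())


def majority_adjudicator(question: str, proposals: List[Proposal]) -> str:
    # Stand-in adjudicator: return the most frequent answer.
    counts = Counter(p.answer for p in proposals)
    return counts.most_common(1)[0][0]


def mixture_of_models(
    question: str,
    proponents: List[Proponent],
    adjudicator: Adjudicator = majority_adjudicator,
) -> str:
    # Stage 1: every proponent proposes a justified answer.
    proposals = [model(question) for model in proponents]
    # Quality check: drop proposals that fail the placeholder filter.
    kept = [p for p in proposals if passes_quality_check(p)]
    if not kept:
        raise ValueError("no proposal passed the quality check")
    # Stage 2: the adjudicator finalizes the decision.
    return adjudicator(question, kept)
```

In practice each proponent and the adjudicator would wrap a call to a language model; the stubs here only show how the stages connect.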

Large-Scale AI in Telecom: Charting the Roadmap for Innovation, Scalability, and Enhanced Digital Experiences

Mar 06, 2025

Abstract: This white paper discusses the role of large-scale AI in the telecommunications industry, with a specific focus on the potential of generative AI to revolutionize network functions and user experiences, especially in the context of 6G systems. It highlights the development and deployment of Large Telecom Models (LTMs), which are tailored AI models designed to address the complex challenges faced by modern telecom networks. The paper covers a wide range of topics, from the architecture and deployment strategies of LTMs to their applications in network management, resource allocation, and optimization. It also explores the regulatory, ethical, and standardization considerations for LTMs, offering insights into their future integration into telecom infrastructure. The goal is to provide a comprehensive roadmap for the adoption of LTMs to enhance scalability, performance, and user-centric innovation in telecom networks.

Robust Beamforming with Gradient-based Liquid Neural Network

May 17, 2024

Abstract: Millimeter-wave (mmWave) multiple-input multiple-output (MIMO) communication with advanced beamforming technologies is a key enabler to meet the growing demands of future mobile communication. However, the dynamic nature of cellular channels in large-scale urban mmWave MIMO communication scenarios brings substantial challenges, particularly in terms of complexity and robustness. To address these issues, we propose a robust gradient-based liquid neural network (GLNN) framework that utilizes ordinary differential equation-based liquid neurons to solve the beamforming problem. Specifically, our proposed GLNN framework takes gradients of the optimization objective function as inputs to extract the high-order channel feature information, and then introduces a residual connection to mitigate the training burden. Furthermore, we use the manifold learning technique to compress the search space of the beamforming problem. These designs enable the GLNN to effectively maintain low complexity while ensuring strong robustness to noisy and highly dynamic channels. Extensive simulation results demonstrate that the GLNN can achieve 4.15% higher spectral efficiency than typical iterative algorithms, and reduce the time consumption to only 1.61% of that of conventional methods.

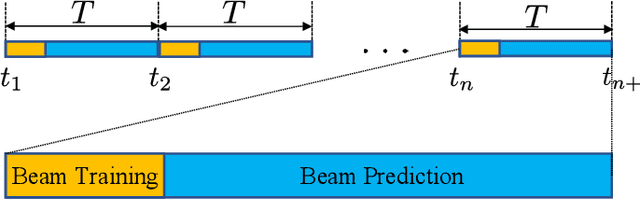

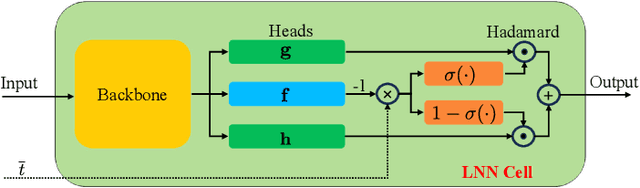

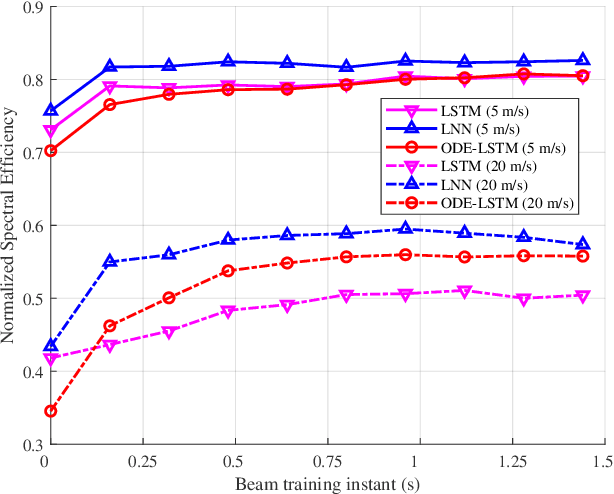

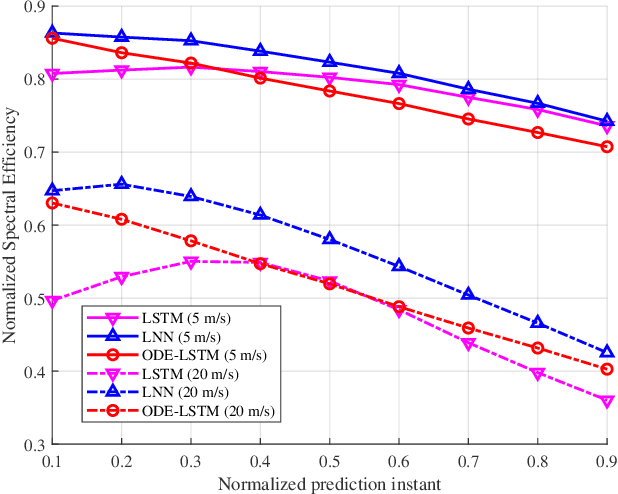

Robust Continuous-Time Beam Tracking with Liquid Neural Network

May 01, 2024

Abstract: Millimeter-wave (mmWave) technology is increasingly recognized as a pivotal technology of the sixth-generation communication networks due to the large amounts of available spectrum at high frequencies. However, the huge overhead associated with beam training imposes a significant challenge in mmWave communications, particularly in urban environments with high background noise. To reduce this high overhead, we propose a novel solution for robust continuous-time beam tracking with a liquid neural network, which dynamically adjusts the narrow mmWave beams to ensure real-time beam alignment with mobile users. Through extensive simulations, we validate the effectiveness of our proposed method and demonstrate its superiority over existing state-of-the-art deep-learning-based approaches. Specifically, our scheme achieves up to 46.9% higher normalized spectral efficiency than the baselines when the user is moving at 5 m/s, demonstrating the potential of liquid neural networks to enhance mmWave mobile communication performance.

Beamforming Inferring by Conditional WGAN-GP for Holographic Antenna Arrays

May 01, 2024

Abstract: Beamforming with large holographic antenna arrays is one of the key enablers for the next generation of wireless systems, as it can significantly improve the spectral efficiency. However, the deployment of large antenna arrays implies high algorithm complexity and resource overhead at both receiver and transmitter ends. To address this issue, advanced technologies such as artificial intelligence have been developed to reduce beamforming overhead. Intuitively, if we can implement near-optimal beamforming using only a tiny subset of the channel information, the overhead for channel estimation and beamforming would be reduced significantly compared with traditional beamforming methods, which usually need full channel information and the inversion of a large-dimensional matrix. In light of this idea, we propose a novel scheme that utilizes a Wasserstein generative adversarial network with gradient penalty to infer the full beamforming matrices based on very little channel information. Simulation results confirm that it can achieve performance comparable to the weighted minimum mean-square error algorithm, while reducing the overhead by over 50%.

Robust Beamforming for RIS-aided Communications: Gradient-based Manifold Meta Learning

Feb 16, 2024

Abstract: Reconfigurable intelligent surface (RIS) has become a promising technology to realize the programmable wireless environment via steering the incident signal in fully customizable ways. However, a major challenge in RIS-aided communication systems is the simultaneous design of the precoding matrix at the base station (BS) and the phase shifting matrix of the RIS elements. This is mainly attributed to the highly non-convex optimization space of variables at both the BS and the RIS, and the diversity of communication environments. Generally, traditional optimization methods for this problem suffer from high complexity, while existing deep learning based methods lack robustness in various scenarios. To address these issues, we introduce a gradient-based manifold meta learning method (GMML), which works without pre-training and has strong robustness for RIS-aided communications. Specifically, the proposed method fuses meta learning and manifold learning to improve the overall spectral efficiency and reduce the overhead of high-dimensional signal processing. Unlike traditional deep learning based methods, which directly take channel state information as input, GMML feeds the gradients of the precoding matrix and phase shifting matrix into neural networks. Accordingly, we design a differential regulator to constrain the phase shifting matrix of the RIS. Numerical results show that the proposed GMML can improve the spectral efficiency by up to 7.31% and speed up convergence by 23 times compared to traditional approaches. Moreover, they also demonstrate remarkable robustness and adaptability in dynamic settings.

Energy-efficient Beamforming for RISs-aided Communications: Gradient Based Meta Learning

Nov 12, 2023

Abstract: Reconfigurable intelligent surfaces (RISs) have become a promising technology to meet the requirements of energy efficiency and scalability in future sixth-generation (6G) communications. However, a significant challenge in RISs-aided communications is the joint optimization of active and passive beamforming at base stations (BSs) and RISs, respectively. Specifically, the main difficulty is attributed to the highly non-convex optimization space of beamforming matrices at both BSs and RISs, as well as the diversity and mobility of communication scenarios. To address this, we present a green gradient-based meta learning beamforming (GMLB) approach. Unlike traditional deep learning based methods, which take channel information directly as input, GMLB feeds the gradient of the sum rate into neural networks. Accordingly, we design a differential regulator to address the phase shift optimization of RISs. Moreover, we use meta learning to iteratively optimize the beamforming matrices of BSs and RISs. These techniques allow the proposed method to work well without requiring energy-consuming pre-training. Simulations show that GMLB can achieve a higher sum rate than typical alternating optimization algorithms, with two orders of magnitude less energy consumption.
