Ahmed Alhammadi

Beamforming Design and Association Scheme for Multi-RIS Multi-User mmWave Systems Through Graph Neural Networks

Apr 20, 2025

Abstract: Reconfigurable intelligent surface (RIS) is emerging as a promising technology for next-generation wireless communication networks, offering a variety of merits such as the ability to tailor the communication environment. Moreover, deploying multiple RISs helps mitigate severe signal blocking between the base station (BS) and users, providing a practical and efficient solution to enhance the service coverage. However, fully reaping the potential of a multi-RIS aided communication system requires solving a non-convex optimization problem. This challenge motivates the adoption of learning-based methods for determining the optimal policy. In this paper, we introduce a novel heterogeneous graph neural network (GNN) to effectively leverage the graph topology of a wireless communication environment. Specifically, we design an association scheme that selects a suitable RIS for each user. Then, we maximize the weighted sum rate (WSR) of all the users by iteratively optimizing the RIS association scheme and the beamforming designs until the considered heterogeneous GNN converges. Based on the proposed approach, each user is associated with the best RIS, which is shown to significantly improve the system capacity in multi-RIS multi-user millimeter wave (mmWave) communications. Specifically, simulation results demonstrate that the proposed heterogeneous GNN closely approaches the performance of the high-complexity alternating optimization (AO) algorithm in the considered multi-RIS aided communication system, and it outperforms other benchmark schemes. Moreover, the performance improvement achieved through the RIS association scheme is shown to be on the order of 30%.
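
As a rough illustration of the objective named in this abstract, the sketch below evaluates a weighted sum rate for a given user-to-RIS association. It is not the paper's heterogeneous GNN or its AO baseline: the `gains` table, the function names, and the interference-free rate model are all assumptions made only to keep the example small.

```python
import math


def weighted_sum_rate(gains, association, weights, noise_power=1.0):
    """Weighted sum rate in bit/s/Hz for a fixed user-to-RIS association.

    gains[k][r] is a hypothetical effective received power of user k when
    it is served through RIS r. Inter-user interference is ignored here,
    which is a simplifying assumption, not the model used in the paper.
    """
    total = 0.0
    for k, r in enumerate(association):
        snr = gains[k][r] / noise_power
        total += weights[k] * math.log2(1.0 + snr)
    return total


def best_association(gains):
    """Pick, for every user, the RIS with the largest effective gain.

    This greedy choice maximizes the rate only under the interference-free
    assumption above, where users do not affect one another.
    """
    return [max(range(len(row)), key=row.__getitem__) for row in gains]


gains = [[1.0, 3.0], [7.0, 0.0]]   # 2 users x 2 RISs, made-up numbers
weights = [1.0, 2.0]
association = best_association(gains)
wsr = weighted_sum_rate(gains, association, weights)
```

With interference and coupled beamforming, the per-user greedy choice is no longer guaranteed to be optimal, which is why the abstract describes iterating between association and beamforming design.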

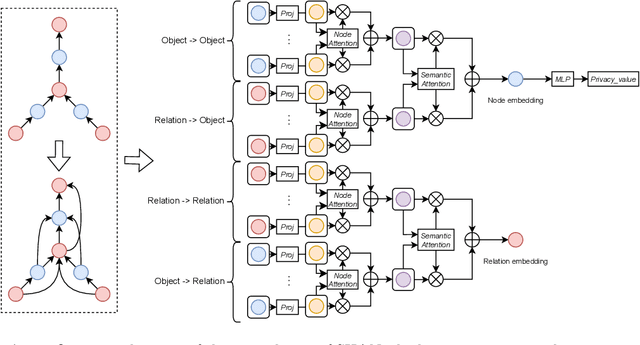

Beyond Visual Appearances: Privacy-sensitive Objects Identification via Hybrid Graph Reasoning

Jun 18, 2024

Abstract: The Privacy-sensitive Object Identification (POI) task allocates bounding boxes for privacy-sensitive objects in a scene. The key to POI is settling an object's privacy class (privacy-sensitive or non-privacy-sensitive). In contrast to conventional object classes which are determined by the visual appearance of an object, one object's privacy class is derived from the scene contexts and is subject to various implicit factors beyond its visual appearance. That is, visually similar objects may be totally opposite in their privacy classes. To explicitly derive the objects' privacy class from the scene contexts, in this paper, we interpret the POI task as a visual reasoning task aimed at the privacy of each object in the scene. Following this interpretation, we propose the PrivacyGuard framework for POI. PrivacyGuard contains three stages. i) Structuring: an unstructured image is first converted into a structured, heterogeneous scene graph that embeds rich scene contexts. ii) Data Augmentation: a contextual perturbation oversampling strategy is proposed to create slightly perturbed privacy-sensitive objects in a scene graph, thereby balancing the skewed distribution of privacy classes. iii) Hybrid Graph Generation & Reasoning: the balanced, heterogeneous scene graph is then transformed into a hybrid graph by endowing it with extra "node-node" and "edge-edge" homogeneous paths. These homogeneous paths allow direct message passing between nodes or edges, thereby accelerating reasoning and facilitating the capturing of subtle context changes. Based on this hybrid graph... **For the full abstract, see the original paper.**

Robust Beamforming with Gradient-based Liquid Neural Network

May 17, 2024

Abstract: Millimeter-wave (mmWave) multiple-input multiple-output (MIMO) communication with advanced beamforming technologies is a key enabler to meet the growing demands of future mobile communication. However, the dynamic nature of cellular channels in large-scale urban mmWave MIMO communication scenarios brings substantial challenges, particularly in terms of complexity and robustness. To address these issues, we propose a robust gradient-based liquid neural network (GLNN) framework that utilizes ordinary differential equation-based liquid neurons to solve the beamforming problem. Specifically, our proposed GLNN framework takes gradients of the optimization objective function as inputs to extract the high-order channel feature information, and then introduces a residual connection to mitigate the training burden. Furthermore, we use the manifold learning technique to compress the search space of the beamforming problem. These designs enable the GLNN to effectively maintain low complexity while ensuring strong robustness to noisy and highly dynamic channels. Extensive simulation results demonstrate that the GLNN can achieve 4.15% higher spectral efficiency than that of typical iterative algorithms, and reduce the time consumption to only 1.61% of that of conventional methods.

Beamforming Inferring by Conditional WGAN-GP for Holographic Antenna Arrays

May 01, 2024

Abstract: The beamforming technology with large holographic antenna arrays is one of the key enablers for the next generation of wireless systems, which can significantly improve the spectral efficiency. However, the deployment of large antenna arrays implies high algorithm complexity and resource overhead at both receiver and transmitter ends. To address this issue, advanced technologies such as artificial intelligence have been developed to reduce beamforming overhead. Intuitively, if we can implement near-optimal beamforming using only a tiny subset of all the channel information, the overhead for channel estimation and beamforming would be reduced significantly compared with the traditional beamforming methods that usually need full channel information and the inversion of large-dimensional matrices. In light of this idea, we propose a novel scheme that utilizes a Wasserstein generative adversarial network with gradient penalty to infer the full beamforming matrices based on very little channel information. Simulation results confirm that it can achieve performance comparable to that of the weighted minimum mean-square error algorithm, while reducing the overhead by over 50%.

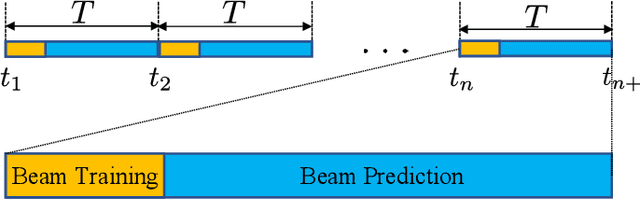

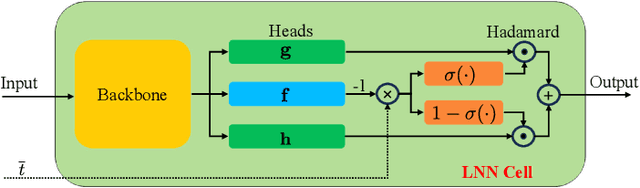

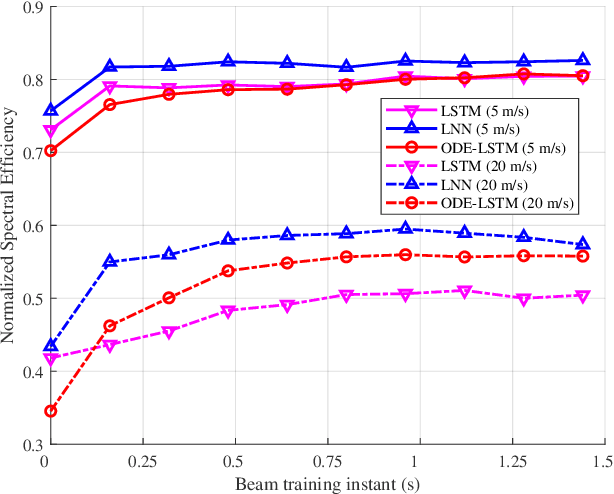

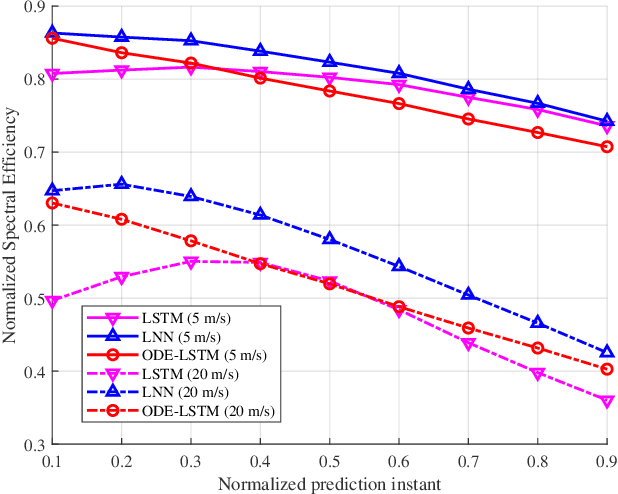

Robust Continuous-Time Beam Tracking with Liquid Neural Network

May 01, 2024

Abstract: Millimeter-wave (mmWave) technology is increasingly recognized as a pivotal technology of the sixth-generation communication networks due to the large amounts of available spectrum at high frequencies. However, the huge overhead associated with beam training imposes a significant challenge in mmWave communications, particularly in urban environments with high background noise. To reduce this high overhead, we propose a novel solution for robust continuous-time beam tracking with a liquid neural network, which dynamically adjusts the narrow mmWave beams to ensure real-time beam alignment with mobile users. Through extensive simulations, we validate the effectiveness of our proposed method and demonstrate its superiority over existing state-of-the-art deep-learning-based approaches. Specifically, our scheme achieves up to 46.9% higher normalized spectral efficiency than the baselines when the user is moving at 5 m/s, demonstrating the potential of liquid neural networks to enhance mmWave mobile communication performance.

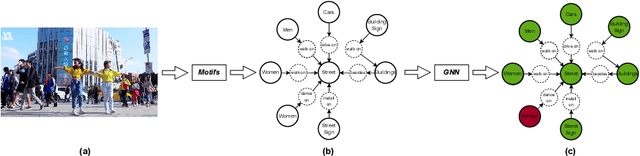

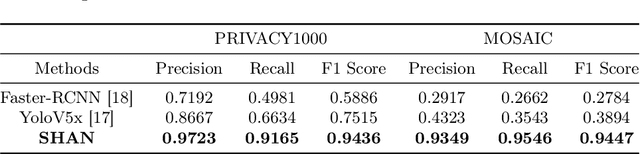

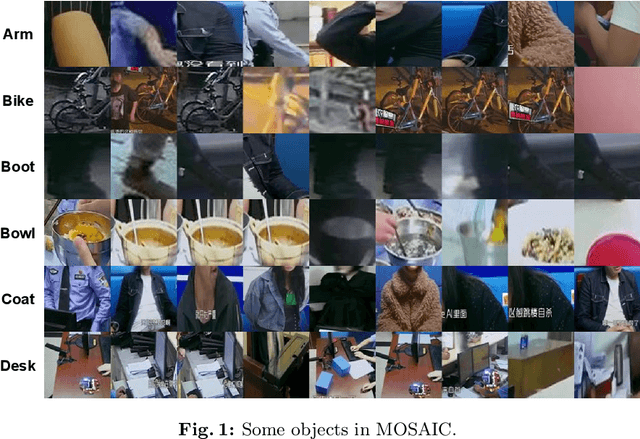

SHAN: Object-Level Privacy Detection via Inference on Scene Heterogeneous Graph

Mar 14, 2024

Abstract: With the rise of social platforms, protecting privacy has become an important issue. Privacy object detection aims to accurately locate private objects in images. It is the foundation of safeguarding individuals' privacy rights and ensuring responsible data handling practices in the digital age. Since the privacy of an object is not shift-invariant, the essence of the privacy object detection task is inferring object privacy based on scene information. However, privacy object detection has long been studied as a subproblem of common object detection tasks. Therefore, existing methods suffer from serious deficiencies in accuracy, generalization, and interpretability. Moreover, creating large-scale privacy datasets is difficult due to legal constraints, and existing privacy datasets lack label granularity. The granularity of existing privacy detection methods remains limited to the image level. To address the above two issues, we introduce two benchmark datasets for object-level privacy detection and propose SHAN, Scene Heterogeneous graph Attention Network, a model that constructs a scene heterogeneous graph from an image and utilizes self-attention mechanisms for scene inference to obtain object privacy. Through experiments, we demonstrate that SHAN performs excellently in privacy object detection tasks, with all metrics surpassing those of the baseline model.

Robust Beamforming for RIS-aided Communications: Gradient-based Manifold Meta Learning

Feb 16, 2024

Abstract: Reconfigurable intelligent surface (RIS) has become a promising technology to realize the programmable wireless environment via steering the incident signal in fully customizable ways. However, a major challenge in RIS-aided communication systems is the simultaneous design of the precoding matrix at the base station (BS) and the phase shifting matrix of the RIS elements. This is mainly attributed to the highly non-convex optimization space of variables at both the BS and the RIS, and the diversity of communication environments. Generally, traditional optimization methods for this problem suffer from high complexity, while existing deep learning based methods lack robustness in various scenarios. To address these issues, we introduce a gradient-based manifold meta learning method (GMML), which works without pre-training and has strong robustness for RIS-aided communications. Specifically, the proposed method fuses meta learning and manifold learning to improve the overall spectral efficiency, and reduce the overhead of high-dimensional signal processing. Unlike traditional deep learning based methods which directly take channel state information as input, GMML feeds the gradients of the precoding matrix and phase shifting matrix into neural networks. Coherently, we design a differential regulator to constrain the phase shifting matrix of the RIS. Numerical results show that the proposed GMML can improve the spectral efficiency by up to 7.31%, and speed up convergence by 23 times compared to traditional approaches. Moreover, they also demonstrate remarkable robustness and adaptability in dynamic settings.
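
To make the constraint on the phase shifting matrix more concrete, the sketch below works through a deliberately reduced case: one single-antenna BS, one single-antenna user, and an RIS whose elements are assumed to have unit modulus. It is not GMML and does not use gradients or meta learning; the channel vectors `h` and `g` and all function names are hypothetical.

```python
import cmath
import math


def project_unit_modulus(values):
    """Project each complex entry onto the unit circle (keep only its phase)."""
    return [cmath.exp(1j * cmath.phase(v)) for v in values]


def effective_channel(h, g, theta):
    """Cascaded scalar channel sum_n g[n] * theta[n] * h[n].

    h: BS-to-RIS coefficients, g: RIS-to-user coefficients,
    theta: RIS reflection coefficients (assumed unit modulus).
    """
    return sum(gn * tn * hn for gn, tn, hn in zip(g, theta, h))


def spectral_efficiency(h, g, theta, snr=1.0):
    """log2(1 + snr * |effective channel|^2) in bit/s/Hz."""
    return math.log2(1.0 + snr * abs(effective_channel(h, g, theta)) ** 2)


def aligned_phases(h, g):
    """Phases that co-phase every reflected path.

    For this single-user scalar case, cancelling the phase of each
    product g[n] * h[n] maximizes the effective channel magnitude.
    """
    return project_unit_modulus([(gn * hn).conjugate() for gn, hn in zip(g, h)])


h = [1 + 1j, 2j, -1 + 0j]          # made-up channel values
g = [1j, 1 - 1j, 0.5 + 0j]
theta = aligned_phases(h, g)
se_aligned = spectral_efficiency(h, g, theta)
se_identity = spectral_efficiency(h, g, [1 + 0j] * len(h))
```

In the multi-antenna, multi-user setting described in the abstract no such closed form is available, since the precoder and the phase shifts are coupled, which is the non-convexity the abstract refers to.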

A Novel Approach to WaveNet Architecture for RF Signal Separation with Learnable Dilation and Data Augmentation

Feb 08, 2024

Abstract: In this paper, we address the intricate issue of RF signal separation by presenting a novel adaptation of the WaveNet architecture that introduces learnable dilation parameters, significantly enhancing signal separation in dense RF spectrums. Our focused architectural refinements and innovative data augmentation strategies have markedly improved the model's ability to discern complex signal sources. This paper details our comprehensive methodology, including the refined model architecture, data preparation techniques, and the training strategy that have been pivotal to our success. The efficacy of our approach is evidenced by the substantial improvements recorded: a 58.82% increase in SINR at a BER of $10^{-3}$ for OFDM-QPSK with EMI Signal 1, surpassing traditional benchmarks. Notably, our model achieved first place in the challenge \cite{datadrivenrf2024}, demonstrating its superior performance and establishing a new standard for machine learning applications within the RF communications domain.
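
For readers unfamiliar with the dilation parameter this abstract refers to, the sketch below shows a plain dilated causal convolution, the standard WaveNet building block, with a fixed integer dilation. The abstract does not say how the dilation is made learnable, so nothing here represents that mechanism; the function names are illustrative only.

```python
def dilated_causal_conv(signal, kernel, dilation):
    """Compute y[t] = sum_i kernel[i] * x[t - i * dilation].

    Samples before the start of the signal are treated as zero, so the
    output at time t never depends on future inputs (causality).
    """
    out = []
    for t in range(len(signal)):
        acc = 0.0
        for i, w in enumerate(kernel):
            idx = t - i * dilation
            if idx >= 0:
                acc += w * signal[idx]
        out.append(acc)
    return out


def receptive_field(kernel_size, dilations):
    """Number of input samples seen by a stack of dilated causal layers."""
    return 1 + (kernel_size - 1) * sum(dilations)


x = [1, 2, 3, 4, 5]
y = dilated_causal_conv(x, kernel=[1, 1], dilation=2)   # y[t] = x[t] + x[t-2]
rf = receptive_field(kernel_size=2, dilations=[1, 2, 4, 8])
```

Doubling the dilation at each layer grows the receptive field exponentially with depth, which is why the choice of dilation values matters for separating signals with long-range structure.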

Energy-efficient Beamforming for RISs-aided Communications: Gradient Based Meta Learning

Nov 12, 2023

Abstract: Reconfigurable intelligent surfaces (RISs) have become a promising technology to meet the requirements of energy efficiency and scalability in future sixth-generation (6G) communications. However, a significant challenge in RISs-aided communications is the joint optimization of active and passive beamforming at base stations (BSs) and RISs, respectively. Specifically, the main difficulty is attributed to the highly non-convex optimization space of beamforming matrices at both BSs and RISs, as well as the diversity and mobility of communication scenarios. To address this, we present a green gradient-based meta learning beamforming (GMLB) approach. Unlike traditional deep learning based methods which take channel information directly as input, GMLB feeds the gradient of the sum rate into neural networks. Coherently, we design a differential regulator to address the phase shift optimization of RISs. Moreover, we use meta learning to iteratively optimize the beamforming matrices of BSs and RISs. These techniques enable the proposed method to work well without requiring energy-consuming pre-training. Simulations show that GMLB can achieve a higher sum rate than typical alternating optimization algorithms, with energy consumption that is two orders of magnitude lower.
