Mérouane Debbah

Seeing Radio: From Zero RF Priors to Explainable Modulation Recognition with Vision Language Models

Jan 19, 2026

Abstract: The rise of vision language models (VLMs) paves a new path for radio frequency (RF) perception. Rather than designing task-specific neural receivers, we ask whether VLMs can learn to recognize modulations when RF waveforms are expressed as images, and we find that they can. Specifically, we introduce a practical pipeline for converting complex IQ streams into visually interpretable inputs, thereby enabling general-purpose VLMs to classify modulation schemes without changing their underlying design. Building on this, we construct an RF visual question answering (VQA) benchmark framework that covers 57 classes across major families of analog/digital modulations with three complementary image modes, namely, (i) short time-series IQ segments represented as real/imaginary traces, (ii) magnitude-only spectrograms, and (iii) joint representations that pair spectrograms with synchronized time-series waveforms. We design uniform zero-shot and few-shot prompts for both class-level and family-level evaluations. VLMs fine-tuned on these images achieve a competitive accuracy of 90%, compared with 10% for the base models. Furthermore, the fine-tuned VLMs show robust performance under noise and generalize well to unseen modulation types, without relying on RF-domain priors or specialized architectures. The obtained results show that combining RF-to-image conversion with promptable VLMs provides a scalable and practical foundation for RF-aware AI systems in future 6G networks.
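
The RF-to-image step described in this abstract can be illustrated with a minimal sketch. It is not the authors' pipeline: the toy QPSK generator, the FFT size, the hop length, and all function names below are assumptions chosen only to show how a complex IQ stream might become the time-series and spectrogram inputs mentioned above.

```python
import numpy as np

def qpsk_iq(num_symbols, samples_per_symbol=8, seed=0):
    """Toy rectangular-pulse QPSK baseband stream (hypothetical test signal)."""
    rng = np.random.default_rng(seed)
    idx = rng.integers(0, 4, size=num_symbols)
    symbols = np.exp(1j * (np.pi / 4 + np.pi / 2 * idx))
    return np.repeat(symbols, samples_per_symbol)

def magnitude_spectrogram(iq, nfft=64, hop=16):
    """Magnitude-only spectrogram from a Hann-windowed short-time FFT."""
    window = np.hanning(nfft)
    starts = range(0, len(iq) - nfft + 1, hop)
    frames = np.stack([iq[s:s + nfft] * window for s in starts])
    spec = np.abs(np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1))
    return spec.T  # shape: (frequency bins, time frames)

def to_uint8(x):
    """Scale an array to 0..255 so it can be stored as a grayscale image."""
    x = x - x.min()
    peak = x.max()
    if peak > 0:
        x = x / peak
    return (255 * x).astype(np.uint8)

iq = qpsk_iq(num_symbols=128)                    # 1024 complex samples
time_series = np.stack([iq.real, iq.imag])       # mode (i): real/imaginary traces
spec_img = to_uint8(magnitude_spectrogram(iq))   # mode (ii): spectrogram image
```

A joint representation (mode iii) would place `spec_img` next to a plot of `time_series` over the same time axis; the rendering details are left open here because the abstract does not specify them.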

Stacked Intelligent Metasurface Assisted Multiuser Communications: From a Rate Fairness Perspective

Jul 23, 2025

Abstract: Stacked intelligent metasurface (SIM) extends the concept of single-layer reconfigurable holographic surfaces (RHS) by incorporating a multi-layered structure, thereby providing enhanced control over electromagnetic wave propagation and improved signal processing capabilities. This study investigates the potential of SIM in enhancing the rate fairness in multiuser downlink systems by addressing two key optimization problems: maximizing the minimum rate (MR) and maximizing the geometric mean of rates (GMR). The former strives to enhance the minimum user rate, thereby ensuring fairness among users, while the latter relaxes fairness requirements to strike a better trade-off between user fairness and system sum-rate (SR). For MR maximization, we adopt a consensus alternating direction method of multipliers (ADMM)-based approach, which decomposes the approximated problem into sub-problems with closed-form solutions. For GMR maximization, we develop an alternating optimization (AO)-based algorithm that also yields closed-form solutions and can be seamlessly adapted for SR maximization. Numerical results validate the effectiveness and convergence of the proposed algorithms. Comparative evaluations show that MR maximization ensures near-perfect fairness, while GMR maximization balances fairness and system SR. Furthermore, the two proposed algorithms outperform existing related works in terms of MR and SR performance, respectively. Lastly, SIM achieves performance comparable to that of multi-antenna digital beamforming while consuming less power.
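
To make the difference between the MR, GMR, and SR objectives concrete, the following sketch evaluates all three on two hypothetical rate allocations. The rate values and the helper name `fairness_metrics` are illustrative assumptions; the abstract's ADMM and AO algorithms are not reproduced here.

```python
import numpy as np

def fairness_metrics(rates):
    """Return minimum rate, geometric mean of rates, and sum-rate."""
    rates = np.asarray(rates, dtype=float)
    return {
        "MR": float(rates.min()),
        # Geometric mean computed in the log domain for numerical stability.
        "GMR": float(np.exp(np.mean(np.log(rates)))),
        "SR": float(rates.sum()),
    }

# Hypothetical per-user rates (bit/s/Hz) for two candidate allocations.
equal = fairness_metrics([2.0, 2.0, 2.0])   # perfectly fair
skewed = fairness_metrics([1.0, 3.0, 4.0])  # higher sum-rate, less fair

print(equal)
print(skewed)
```

In this toy comparison the equal allocation has the higher minimum rate, while the skewed allocation has the higher geometric mean and sum-rate, which is the kind of fairness versus throughput trade-off the abstract attributes to the two objectives.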

Stacked Intelligent Metasurfaces for Multi-Modal Semantic Communications

Jun 14, 2025

Abstract: Semantic communication (SemCom) powered by generative artificial intelligence enables highly efficient and reliable information transmission. However, it still necessitates the transmission of substantial amounts of data when dealing with complex scene information. In contrast, the stacked intelligent metasurface (SIM), leveraging wave-domain computing, provides a cost-effective solution for directly imaging complex scenes. Building on this concept, we propose an innovative SIM-aided multi-modal SemCom system. Specifically, an SIM is positioned in front of the transmit antenna for transmitting visual semantic information of complex scenes via imaging on the uniform planar array at the receiver. Furthermore, the simple scene description that contains textual semantic information is transmitted via amplitude-phase modulation over electromagnetic waves. To simultaneously transmit multi-modal information, we optimize the amplitude and phase of meta-atoms in the SIM using a customized gradient descent algorithm. The optimization aims to gradually minimize the mean squared error between the normalized energy distribution on the receiver array and the desired pattern corresponding to the visual semantic information. By combining the textual and visual semantic information, a conditional generative adversarial network is used to recover the complex scene accurately. Extensive numerical results verify the effectiveness of the proposed multi-modal SemCom system in reducing bandwidth overhead as well as the capability of the SIM for imaging the complex scene.

WirelessMathBench: A Mathematical Modeling Benchmark for LLMs in Wireless Communications

May 20, 2025

Abstract: Large Language Models (LLMs) have achieved impressive results across a broad array of tasks, yet their capacity for complex, domain-specific mathematical reasoning, particularly in wireless communications, remains underexplored. In this work, we introduce WirelessMathBench, a novel benchmark specifically designed to evaluate LLMs on mathematical modeling challenges in wireless communications engineering. Our benchmark consists of 587 meticulously curated questions sourced from 40 state-of-the-art research papers, encompassing a diverse spectrum of tasks ranging from basic multiple-choice questions to complex equation completion tasks, including both partial and full completions, all of which rigorously adhere to physical and dimensional constraints. Through extensive experimentation with leading LLMs, we observe that while many models excel in basic recall tasks, their performance degrades significantly when reconstructing partially or fully obscured equations, exposing fundamental limitations in current LLMs. Even DeepSeek-R1, the best performer on our benchmark, achieves an average accuracy of only 38.05%, with a mere 7.83% success rate in full equation completion. By publicly releasing WirelessMathBench along with the evaluation toolkit, we aim to advance the development of more robust, domain-aware LLMs for wireless system analysis and broader engineering applications.

Robust Deep Learning-Based Physical Layer Communications: Strategies and Approaches

May 02, 2025

Abstract: Deep learning (DL) has emerged as a transformative technology with immense potential to reshape the sixth-generation (6G) wireless communication network. By utilizing advanced algorithms for feature extraction and pattern recognition, DL provides unprecedented capabilities in optimizing network efficiency and performance, particularly in physical layer communications. Although DL technologies present great potential, they also face significant robustness challenges, which are expected to intensify in the complex and demanding 6G environment. Specifically, current DL models typically exhibit substantial performance degradation in dynamic environments with time-varying channels, interference, noise, and varying scenarios, which limits their effectiveness in diverse real-world applications. This paper provides a comprehensive overview of strategies and approaches for robust DL-based methods in physical layer communications. First, we introduce the key challenges that current DL models face. Then, we examine in detail DL approaches specifically tailored to enhance robustness in 6G, which are classified into data-driven and model-driven strategies. Finally, we verify the effectiveness of these methods through case studies and outline future research directions.

Wireless Large AI Model: Shaping the AI-Native Future of 6G and Beyond

Apr 20, 2025

Abstract: The emergence of sixth-generation and beyond communication systems is expected to fundamentally transform digital experiences by introducing unparalleled levels of intelligence, efficiency, and connectivity. A promising technology poised to enable this revolutionary vision is the wireless large AI model (WLAM), characterized by its exceptional capabilities in data processing, inference, and decision-making. In light of these remarkable capabilities, this paper provides a comprehensive survey of WLAM, elucidating its fundamental principles, diverse applications, critical challenges, and future research opportunities. We begin by introducing the background of WLAM and analyzing the key synergies with wireless networks, emphasizing the mutual benefits. Subsequently, we explore the foundational characteristics of WLAM, delving into their unique relevance in wireless environments. Then, we systematically investigate the role of WLAM in optimizing wireless communication systems across various use cases, along with the reciprocal benefits. Furthermore, we discuss the integration of WLAM with emerging technologies, highlighting their potential to enable transformative capabilities and breakthroughs in wireless communication. Finally, we thoroughly examine the high-level challenges hindering the practical implementation of WLAM and discuss pivotal future research directions.

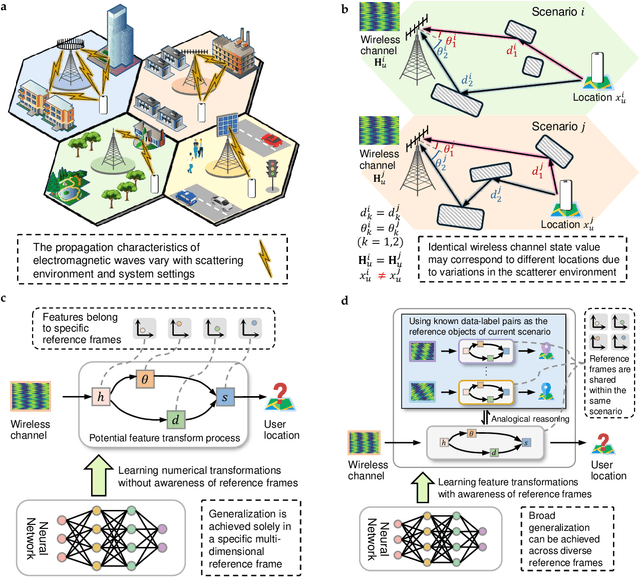

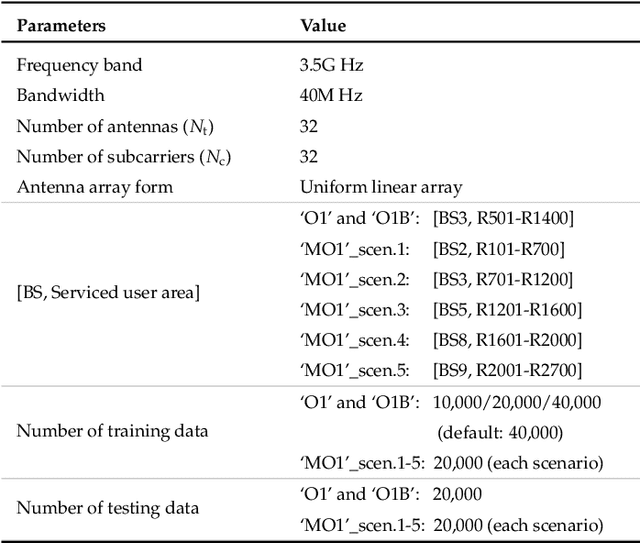

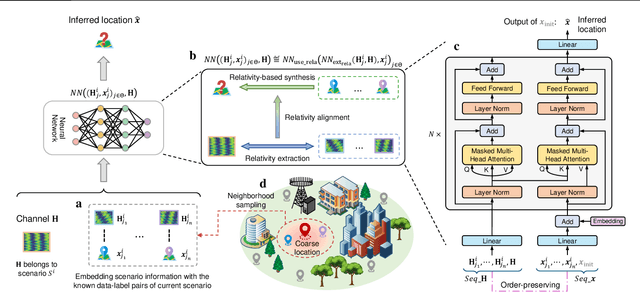

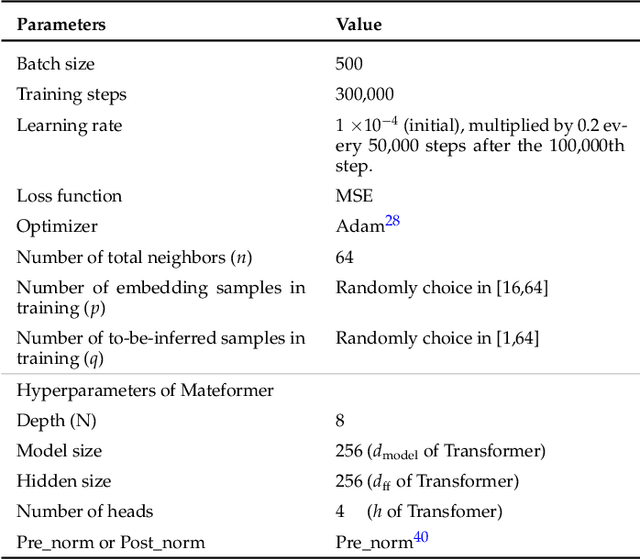

Analogical Learning for Cross-Scenario Generalization: Framework and Application to Intelligent Localization

Apr 09, 2025

Abstract: Existing learning models often exhibit poor generalization when deployed across diverse scenarios. This is mainly because the underlying reference frame of the data varies with the deployment environment and settings. However, although the data of each scenario have a distinct reference frame, their generation generally follows the same underlying physical rule. Based on these findings, this article proposes a new universal deep learning framework named analogical learning (AL), which provides a highly efficient way to implicitly retrieve the reference frame information associated with a scenario and then to make accurate predictions by relative analogy across scenarios. Specifically, a bipartite neural network architecture called Mateformer is designed, the first part of which calculates the relativity within multiple feature spaces between the input data and a small amount of embedded data from the current scenario, while the second part uses these relativities to guide the nonlinear analogy. We apply AL to the typical multi-scenario learning problem of intelligent wireless localization in cellular networks. Extensive experiments show that AL achieves state-of-the-art accuracy, stable transferability, and robust adaptation to new scenarios without any tuning, outperforming conventional methods with a precision improvement of nearly two orders of magnitude. All data and code are available at https://github.com/ziruichen-research/ALLoc.

TeleMoM: Consensus-Driven Telecom Intelligence via Mixture of Models

Apr 03, 2025

Abstract: Large language models (LLMs) face significant challenges in specialized domains like telecommunication (Telecom) due to technical complexity, specialized terminology, and rapidly evolving knowledge. Traditional methods, such as scaling model parameters or retraining on domain-specific corpora, are computationally expensive and yield diminishing returns, while existing approaches like retrieval-augmented generation, mixture of experts, and fine-tuning struggle with accuracy, efficiency, and coordination. To address this issue, we propose Telecom mixture of models (TeleMoM), a consensus-driven ensemble framework that integrates multiple LLMs for enhanced decision-making in Telecom. TeleMoM employs a two-stage process: proponent models generate justified responses, and an adjudicator finalizes decisions, supported by a quality-checking mechanism. This approach leverages strengths of diverse models to improve accuracy, reduce biases, and handle domain-specific complexities effectively. Evaluation results demonstrate that TeleMoM achieves a 9.7% increase in answer accuracy, highlighting its effectiveness in Telecom applications.

Towards Zero Touch Networks: Cross-Layer Automated Security Solutions for 6G Wireless Networks

Feb 28, 2025

Abstract: The transition from 5G to 6G mobile networks necessitates network automation to meet the escalating demands for high data rates, ultra-low latency, and integrated technology. Recently, Zero-Touch Networks (ZTNs), driven by Artificial Intelligence (AI) and Machine Learning (ML), have been designed to automate the entire lifecycle of network operations with minimal human intervention, presenting a promising solution for enhancing automation in 5G/6G networks. However, because ZTNs rely heavily on automation, their implementation calls for autonomous and robust cybersecurity solutions. AI/ML algorithms are widely used to develop cybersecurity mechanisms, but they require substantial specialized expertise and encounter model drift issues, posing significant challenges in developing autonomous cybersecurity measures. Therefore, this paper proposes an automated security framework targeting Physical Layer Authentication (PLA) and Cross-Layer Intrusion Detection Systems (CLIDS) to address security concerns at multiple Internet protocol layers. The proposed framework employs drift-adaptive online learning techniques and a novel enhanced Successive Halving (SH)-based Automated ML (AutoML) method to automatically generate optimized ML models for dynamic networking environments. Experimental results illustrate that the proposed framework achieves high performance on the public Radio Frequency (RF) fingerprinting dataset and the Canadian Institute for Cybersecurity's CICIDS2017 dataset, showcasing its effectiveness in addressing PLA and CLIDS tasks within dynamic and complex networking environments. Furthermore, the paper explores open challenges and research directions in the 5G/6G cybersecurity domain. This framework represents a significant advancement towards fully autonomous and secure 6G networks, paving the way for future innovations in network automation and cybersecurity.
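
The abstract does not describe its enhanced SH variant, so the sketch below shows only plain successive halving as a point of reference. The candidate configurations, the toy `evaluate` function, and the parameter names `min_budget` and `eta` are hypothetical and stand in for real model training.

```python
def successive_halving(configs, evaluate, min_budget=1, eta=2):
    """Plain successive halving: score all survivors at the current budget,
    keep the top 1/eta, multiply the budget by eta, and repeat."""
    survivors = list(configs)
    budget = min_budget
    while len(survivors) > 1:
        ranked = sorted(survivors, key=lambda c: evaluate(c, budget), reverse=True)
        survivors = ranked[:max(1, len(ranked) // eta)]
        budget *= eta
    return survivors[0]

def evaluate(config, budget):
    """Hypothetical validation score: best near lr = 0.1, and a larger
    budget brings the score closer to its asymptotic value."""
    quality = 1.0 - abs(config["lr"] - 0.1)
    return quality * (1.0 - 1.0 / (budget + 1))

# Eight hypothetical learning-rate candidates.
candidates = [{"lr": lr} for lr in (0.001, 0.01, 0.05, 0.1, 0.2, 0.3, 0.5, 1.0)]
best = successive_halving(candidates, evaluate)
print(best)
```

With eight candidates and `eta=2`, the loop runs three rounds (8 to 4 to 2 to 1), spending little budget on weak candidates and the most on the finalists.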

Downlink Multiuser Communications Relying on Flexible Intelligent Metasurfaces

Feb 23, 2025

Abstract: A flexible intelligent metasurface (FIM) is composed of an array of low-cost radiating elements, each of which can independently radiate electromagnetic signals and flexibly adjust its position through a 3D surface-morphing process. In our system, an FIM is deployed at a base station (BS) that transmits to multiple single-antenna users. We formulate an optimization problem for minimizing the total downlink transmit power at the BS by jointly optimizing the transmit beamforming and the FIM's surface shape, subject to an individual signal-to-interference-plus-noise ratio (SINR) constraint for each user as well as to a constraint on the maximum morphing range of the FIM. To address this problem, an efficient alternating optimization method is proposed to iteratively update the FIM's surface shape and the transmit beamformer to gradually reduce the transmit power. Finally, our simulation results show that at a given data rate the FIM reduces the transmit power by about 3 dB compared to conventional rigid 2D arrays.
