Hui Chen

Alibaba Group

Mismatch Analysis and Cooperative Calibration of Array Beam Patterns for ISAC Systems

Feb 01, 2026

Abstract:Integrated sensing and communication (ISAC) is a key technology for enabling a wide range of applications in future wireless systems. However, the sensing performance is often degraded by model mismatches caused by geometric errors (e.g., position and orientation) and hardware impairments (e.g., mutual coupling and amplifier non-linearity). This paper focuses on the angle estimation performance with antenna arrays and tackles the critical challenge of array beam pattern calibration for ISAC systems. To assess calibration quality from a sensing perspective, a novel performance metric that accounts for angle estimation error, rather than beam pattern similarity, is proposed and incorporated into a differentiable loss function. Additionally, a cooperative calibration framework is introduced, allowing multiple user equipments to iteratively optimize the beam pattern based on the proposed loss functions and local data, and collaboratively update global calibration parameters. The proposed models and algorithms are validated using real-world beam pattern measurements collected in an anechoic chamber. Experimental results show that the angle estimation error can be reduced from $\textbf{1.01}^\circ$ to $\textbf{0.11}^\circ$ in 2D calibration scenarios, and from $\textbf{5.19}^\circ$ to $\textbf{0.86}^\circ$ in 3D calibration ones.

Vehicular Wireless Positioning -- A Survey

Jan 28, 2026

Abstract:The rapid advancement of connected and autonomous vehicles has driven a growing demand for precise and reliable positioning systems capable of operating in complex environments. Meeting these demands requires an integrated approach that combines multiple positioning technologies, including wireless-based systems, perception-based technologies, and motion-based sensors. This paper presents a comprehensive survey of wireless-based positioning for vehicular applications, with a focus on satellite-based positioning (such as global navigation satellite systems (GNSS) and low-Earth-orbit (LEO) satellites), cellular-based positioning (5G and beyond), and IEEE-based technologies (including Wi-Fi, ultrawideband (UWB), Bluetooth, and vehicle-to-vehicle (V2V) communications). First, the survey reviews a wide range of vehicular positioning use cases, outlining their specific performance requirements. Next, it explores the historical development, standardization, and evolution of each wireless positioning technology, providing an in-depth categorization of existing positioning solutions and algorithms, and identifying open challenges and contemporary trends. Finally, the paper examines sensor fusion techniques that integrate these wireless systems with onboard perception and motion sensors to enhance positioning accuracy and resilience in real-world conditions. This survey thus offers a holistic perspective on the historical foundations, current advancements, and future directions of wireless-based positioning for vehicular applications, addressing a critical gap in the literature.

RSATalker: Realistic Socially-Aware Talking Head Generation for Multi-Turn Conversation

Jan 15, 2026

Abstract:Talking head generation is increasingly important in virtual reality (VR), especially for social scenarios involving multi-turn conversation. Existing approaches face notable limitations: mesh-based 3D methods can model dual-person dialogue but lack realistic textures, while large-model-based 2D methods produce natural appearances but incur prohibitive computational costs. Recently, 3D Gaussian Splatting (3DGS) based methods achieve efficient and realistic rendering but remain speaker-only and ignore social relationships. We introduce RSATalker, the first framework that leverages 3DGS for realistic and socially-aware talking head generation with support for multi-turn conversation. Our method first drives mesh-based 3D facial motion from speech, then binds 3D Gaussians to mesh facets to render high-fidelity 2D avatar videos. To capture interpersonal dynamics, we propose a socially-aware module that encodes social relationships, including blood and non-blood as well as equal and unequal, into high-level embeddings through a learnable query mechanism. We design a three-stage training paradigm and construct the RSATalker dataset with speech-mesh-image triplets annotated with social relationships. Extensive experiments demonstrate that RSATalker achieves state-of-the-art performance in both realism and social awareness. The code and dataset will be released.

PruneHal: Reducing Hallucinations in Multi-modal Large Language Models through Adaptive KV Cache Pruning

Oct 22, 2025

Abstract:While multi-modal large language models (MLLMs) have made significant progress in recent years, the issue of hallucinations remains a major challenge. To mitigate this phenomenon, existing solutions either introduce additional data for further training or incorporate external or internal information during inference. However, these approaches inevitably introduce extra computational costs. In this paper, we observe that hallucinations in MLLMs are strongly associated with insufficient attention allocated to visual tokens. In particular, the presence of redundant visual tokens disperses the model's attention, preventing it from focusing on the most informative ones. As a result, critical visual cues are often under-attended, which in turn exacerbates the occurrence of hallucinations. Building on this observation, we propose **PruneHal**, a training-free, simple yet effective method that leverages adaptive KV cache pruning to enhance the model's focus on critical visual information, thereby mitigating hallucinations. To the best of our knowledge, we are the first to apply token pruning for hallucination mitigation in MLLMs. Notably, our method does not require additional training and incurs nearly no extra inference cost. Moreover, PruneHal is model-agnostic and can be seamlessly integrated with different decoding strategies, including those specifically designed for hallucination mitigation. We evaluate PruneHal on several widely used hallucination evaluation benchmarks using four mainstream MLLMs, achieving robust and outstanding results that highlight the effectiveness and superiority of our method. Our code will be publicly available.

StaMo: Unsupervised Learning of Generalizable Robot Motion from Compact State Representation

Oct 06, 2025

Abstract:A fundamental challenge in embodied intelligence is developing expressive and compact state representations for efficient world modeling and decision making. However, existing methods often fail to achieve this balance, yielding representations that are either overly redundant or lacking in task-critical information. We propose an unsupervised approach that learns a highly compressed two-token state representation using a lightweight encoder and a pre-trained Diffusion Transformer (DiT) decoder, capitalizing on its strong generative prior. Our representation is efficient, interpretable, and integrates seamlessly into existing VLA-based models, improving performance by 14.3% on LIBERO and 30% in real-world task success with minimal inference overhead. More importantly, we find that the difference between these tokens, obtained via latent interpolation, naturally serves as a highly effective latent action, which can be further decoded into executable robot actions. This emergent capability reveals that our representation captures structured dynamics without explicit supervision. We name our method StaMo for its ability to learn generalizable robotic Motion from compact State representation, which is encoded from static images, challenging the prevalent dependence of latent action learning on complex architectures and video data. The resulting latent actions also enhance policy co-training, outperforming prior methods by 10.4% with improved interpretability. Moreover, our approach scales effectively across diverse data sources, including real-world robot data, simulation, and human egocentric video.

Active Inference Framework for Closed-Loop Sensing, Communication, and Control in UAV Systems

Sep 17, 2025

Abstract:Integrated sensing and communication (ISAC) is a core technology for 6G, and its application to closed-loop sensing, communication, and control (SCC) enables various services. Existing SCC solutions often treat sensing and control separately, leading to suboptimal performance and resource usage. In this work, we introduce the active inference framework (AIF) into SCC-enabled unmanned aerial vehicle (UAV) systems for joint state estimation, control, and sensing resource allocation. By formulating a unified generative model, the problem reduces to minimizing variational free energy for inference and expected free energy for action planning. Simulation results show that both control cost and sensing cost are reduced relative to baselines.

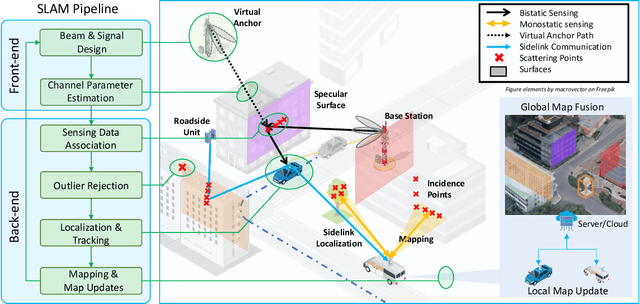

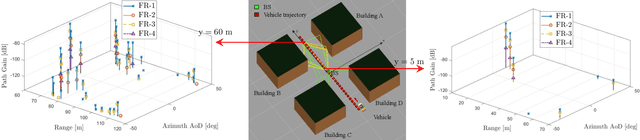

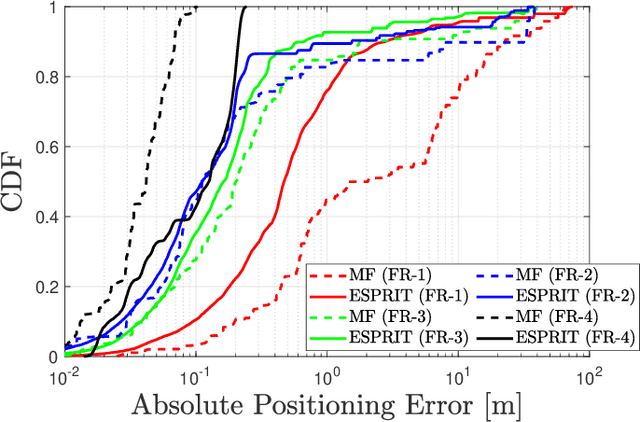

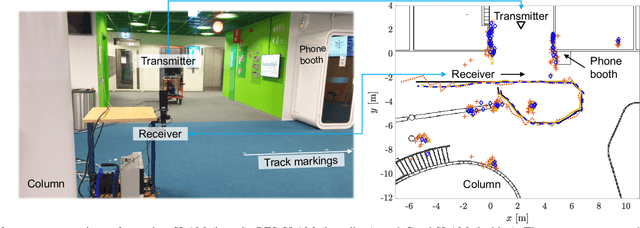

Sensing with Mobile Devices through Radio SLAM: Models, Methods, Opportunities, and Challenges

Sep 09, 2025

Abstract:The integration of sensing and communication (ISAC) is a cornerstone of 6G, enabling simultaneous environmental awareness and communication. This paper explores radio SLAM (simultaneous localization and mapping) as a key ISAC approach, using radio signals for mapping and localization. We analyze radio SLAM across different frequency bands, discussing trade-offs in coverage, resolution, and hardware requirements. We also highlight opportunities for integration with sensing, positioning, and cooperative networks. The findings pave the way for standardized solutions in 6G applications such as autonomous systems and industrial robotics.

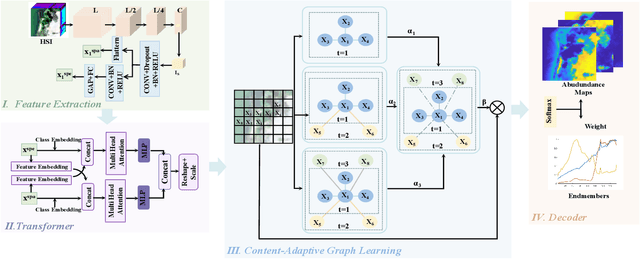

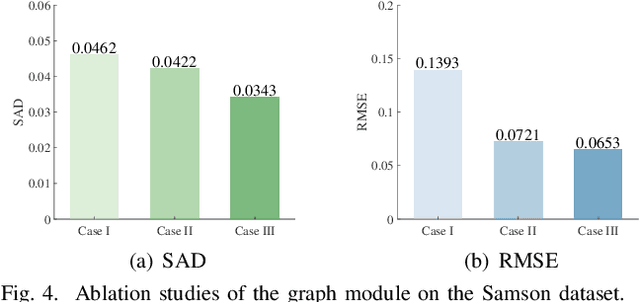

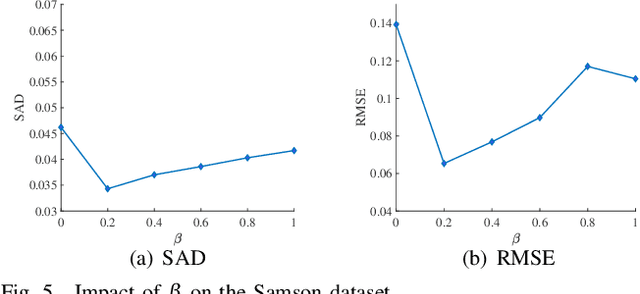

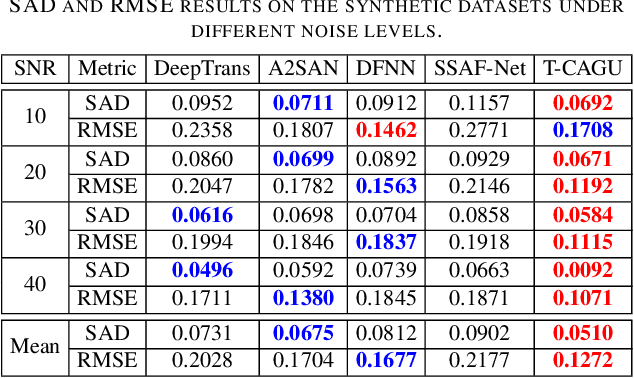

Transformer-Guided Content-Adaptive Graph Learning for Hyperspectral Unmixing

Sep 03, 2025

Abstract:Hyperspectral unmixing (HU) aims to decompose each mixed pixel in remote sensing images into a set of endmembers and their corresponding abundances. Despite significant progress in this field using deep learning, most methods fail to simultaneously characterize global dependencies and local consistency, making it difficult to preserve both long-range interactions and boundary details. This letter proposes a novel transformer-guided content-adaptive graph unmixing framework (T-CAGU), which overcomes these challenges by employing a transformer to capture global dependencies and introducing a content-adaptive graph neural network to enhance local relationships. Unlike previous work, T-CAGU integrates multiple propagation orders to dynamically learn the graph structure, ensuring robustness against noise. Furthermore, T-CAGU leverages a graph residual mechanism to preserve global information and stabilize training. Experimental results demonstrate its superiority over the state-of-the-art methods. Our code is available at https://github.com/xianchaoxiu/T-CAGU.

ClaimGen-CN: A Large-scale Chinese Dataset for Legal Claim Generation

Aug 24, 2025

Abstract:Legal claims refer to the plaintiff's demands in a case and are essential to guiding judicial reasoning and case resolution. While many works have focused on improving the efficiency of legal professionals, research on helping non-professionals (e.g., plaintiffs) remains unexplored. This paper explores the problem of legal claim generation based on the given case's facts. First, we construct ClaimGen-CN, the first dataset for the Chinese legal claim generation task, from various real-world legal disputes. Additionally, we design an evaluation metric tailored for assessing the generated claims, which encompasses two essential dimensions: factuality and clarity. Building on this, we conduct a comprehensive zero-shot evaluation of state-of-the-art general and legal-domain large language models. Our findings highlight the limitations of the current models in factual precision and expressive clarity, pointing to the need for more targeted development in this domain. To encourage further exploration of this important task, we will make the dataset publicly available.

Automated Heuristic Design for Unit Commitment Using Large Language Models

Jun 14, 2025

Abstract:The Unit Commitment (UC) problem is a classic challenge in the optimal scheduling of power systems. Years of research and practice have shown that formulating reasonable unit commitment plans can significantly improve the economic efficiency of power systems' operations. In recent years, with the introduction of technologies such as machine learning and the Lagrangian relaxation method, the solution methods for the UC problem have become increasingly diversified, but still face challenges in terms of accuracy and robustness. This paper proposes a Function Space Search (FunSearch) method based on large language models. This method combines pre-trained large language models and evaluators to creatively generate solutions through the program search and evolution process while ensuring their rationality. In simulation experiments, a unit commitment case with 10 units is mainly used. Compared to the genetic algorithm, the results show that FunSearch performs better in terms of sampling time, evaluation time, and total operating cost of the system, demonstrating its great potential as an effective tool for solving the UC problem.
