Mengting Li

Mismatch Analysis and Cooperative Calibration of Array Beam Patterns for ISAC Systems

Feb 01, 2026

Abstract:Integrated sensing and communication (ISAC) is a key technology for enabling a wide range of applications in future wireless systems. However, the sensing performance is often degraded by model mismatches caused by geometric errors (e.g., position and orientation) and hardware impairments (e.g., mutual coupling and amplifier non-linearity). This paper focuses on the angle estimation performance with antenna arrays and tackles the critical challenge of array beam pattern calibration for ISAC systems. To assess calibration quality from a sensing perspective, a novel performance metric that accounts for angle estimation error, rather than beam pattern similarity, is proposed and incorporated into a differentiable loss function. Additionally, a cooperative calibration framework is introduced, allowing multiple user equipments to iteratively optimize the beam pattern based on the proposed loss functions and local data, and collaboratively update global calibration parameters. The proposed models and algorithms are validated using real-world beam pattern measurements collected in an anechoic chamber. Experimental results show that the angle estimation error can be reduced from 1.01° to 0.11° in 2D calibration scenarios, and from 5.19° to 0.86° in 3D calibration ones.

Dynamic Generation of Multi-LLM Agents Communication Topologies with Graph Diffusion Models

Oct 09, 2025

Abstract:The efficiency of multi-agent systems driven by large language models (LLMs) largely hinges on their communication topology. However, designing an optimal topology is a non-trivial challenge, as it requires balancing competing objectives such as task performance, communication cost, and robustness. Existing frameworks often rely on static or hand-crafted topologies, which inherently fail to adapt to diverse task requirements, leading to either excessive token consumption for simple problems or performance bottlenecks for complex ones. To address this challenge, we introduce a novel generative framework called Guided Topology Diffusion (GTD). Inspired by conditional discrete graph diffusion models, GTD formulates topology synthesis as an iterative construction process. At each step, the generation is steered by a lightweight proxy model that predicts multi-objective rewards (e.g., accuracy, utility, cost), enabling real-time, gradient-free optimization towards task-adaptive topologies. This iterative, guided synthesis process distinguishes GTD from single-step generative frameworks, enabling it to better navigate complex design trade-offs. We validated GTD across multiple benchmarks, and experiments show that this framework can generate highly task-adaptive, sparse, and efficient communication topologies, significantly outperforming existing methods in LLM agent collaboration.

RIS Beam Calibration for ISAC Systems: Modeling and Performance Analysis

May 21, 2025

Abstract:High-accuracy localization is a key enabler for integrated sensing and communication (ISAC), playing an essential role in various applications such as autonomous driving. Antenna arrays and reconfigurable intelligent surfaces (RIS) are incorporated into these systems to achieve high angular resolution, assisting in the localization process. However, array and RIS beam patterns in practice often deviate from the idealized models used for algorithm design, leading to significant degradation in positioning accuracy. This mismatch highlights the need for beam calibration to bridge the gap between theoretical models and real-world hardware behavior. In this paper, we present and analyze three beam models considering several key non-idealities such as mutual coupling, non-ideal codebook, and measurement uncertainties. Based on the models, we then develop calibration algorithms to estimate the model parameters that can be used for future localization tasks. This work evaluates the effectiveness of the beam models and the calibration algorithms using both theoretical bounds and real-world beam pattern data from an RIS prototype. The simulation results show that the model incorporating combined impacts can accurately reconstruct measured beam patterns. This highlights the necessity of realistic beam modeling and calibration to achieve high-accuracy localization.

Pilot-Based End-to-End Radio Positioning and Mapping for ISAC: Beyond Point-Based Landmarks

May 12, 2025

Abstract:Integrated sensing and communication enables simultaneous communication and sensing tasks, including precise radio positioning and mapping, essential for future 6G networks. Current methods typically model environmental landmarks as isolated incidence points or small reflection areas, lacking detailed attributes essential for advanced environmental interpretation. This paper addresses these limitations by developing an end-to-end cooperative uplink framework involving multiple base stations and users. Our method uniquely estimates extended landmark objects and incorporates obstruction-based outlier removal to mitigate multi-bounce signal effects. Validation using realistic ray-tracing data demonstrates substantial improvements in the richness of the estimated environmental map.

Joint Near-Field Sensing and Visibility Region Detection with Extremely Large Aperture Arrays

Feb 28, 2025

Abstract:In this paper, we consider near-field localization and sensing with an extremely large aperture array under partial blockage of array antennas, where spherical wavefront and spatial non-stationarity are accounted for. We propose an Ising model to characterize the clustered sparsity feature of the blockage pattern, develop an algorithm based on alternating optimization for joint channel parameter estimation and visibility region detection, and further estimate the locations of the user and environmental scatterers. The simulation results confirm the effectiveness of the proposed algorithm compared to conventional methods.

RIS-Aided Positioning Under Adverse Conditions: Interference from Unauthorized RIS

Feb 27, 2025

Abstract:Positioning technology, which aims to determine the geometric information of a device in a global coordinate system, is a key component in integrated sensing and communication systems. In addition to traditional active anchor-based positioning systems, reconfigurable intelligent surfaces (RIS) have shown great potential for enhancing system performance. However, their ability to manipulate electromagnetic waves and ease of deployment pose potential risks, as unauthorized RIS may be intentionally introduced to jeopardize the positioning service. Such an unauthorized RIS can cause unexpected interference in the original localization system, distorting the transmitted signals and leading to degraded positioning accuracy. In this work, we investigate the scenario of RIS-aided positioning in the presence of interference from an unauthorized RIS. Theoretical lower bounds are employed to analyze the impact of unauthorized RIS on channel parameter estimation and positioning accuracy. Several codebook design strategies for unauthorized RIS are evaluated, and various system arrangements are discussed. The simulation results show that an unauthorized RIS path with a high channel gain or a delay similar to that of legitimate RIS paths leads to poor positioning performance. Furthermore, unauthorized RIS generates more effective interference when using directional beamforming codebooks compared to random codebooks.

Multiple-Frequency-Bands Channel Characterization for In-vehicle Wireless Networks

Oct 03, 2024

Abstract:In-vehicle wireless networks are crucial for advancing smart transportation systems and enhancing interaction among vehicles and their occupants. However, there are limited studies in the current state of the art that investigate the in-vehicle channel characteristics in multiple frequency bands. In this paper, we present measurement campaigns conducted in a van and a car across below 7 GHz, millimeter-wave (mmWave), and sub-Terahertz (Sub-THz) bands. These campaigns aim to compare the channel characteristics for in-vehicle scenarios across various frequency bands. Channel impulse responses (CIRs) were measured at various locations distributed across the engine compartment of both the van and car. The CIR results reveal a high similarity in the delay properties between frequency bands below 7 GHz and mmWave bands for the measurements in the engine bay. Sparse channels can be observed at Sub-THz bands in the engine bay scenarios. Channel spatial profiles in the passenger cabin of both the van and car are obtained by the directional scan sounding scheme for three bands. We compare the power angle delay profiles (PADPs) measured at different frequency bands in two line-of-sight (LOS) scenarios and one non-LOS (NLOS) scenario. Some major multipath components (MPCs) can be identified in all frequency bands and their trajectories are traced based on the geometry of the vehicles. The angular spread of arrival is also calculated for three scenarios. The analysis of channel characteristics in this paper can enhance our understanding of in-vehicle channels and foster the evolution of in-vehicle wireless networks.

Learning from Noisy Labels for Long-tailed Data via Optimal Transport

Aug 07, 2024

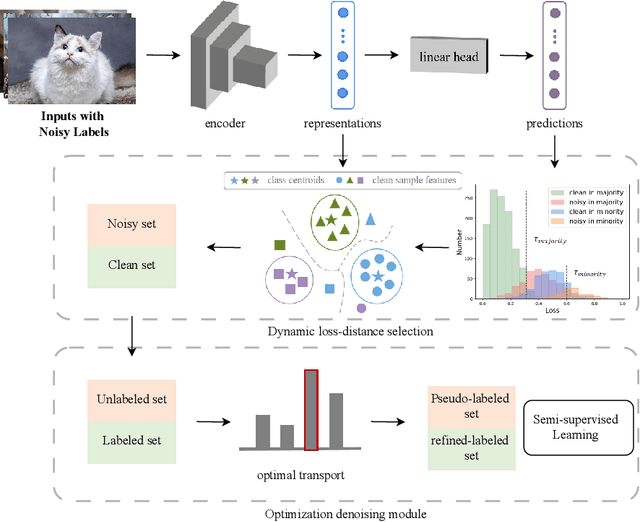

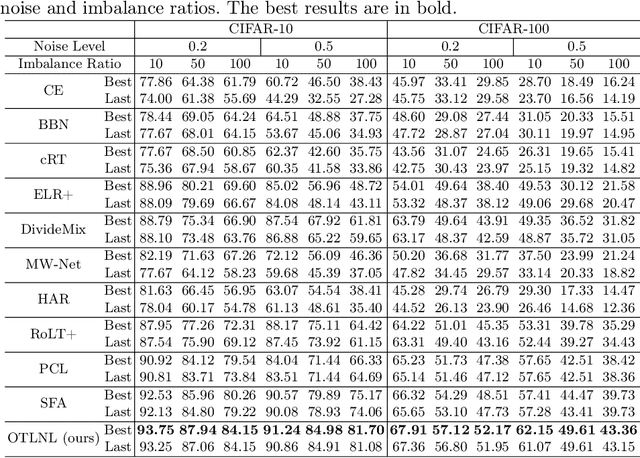

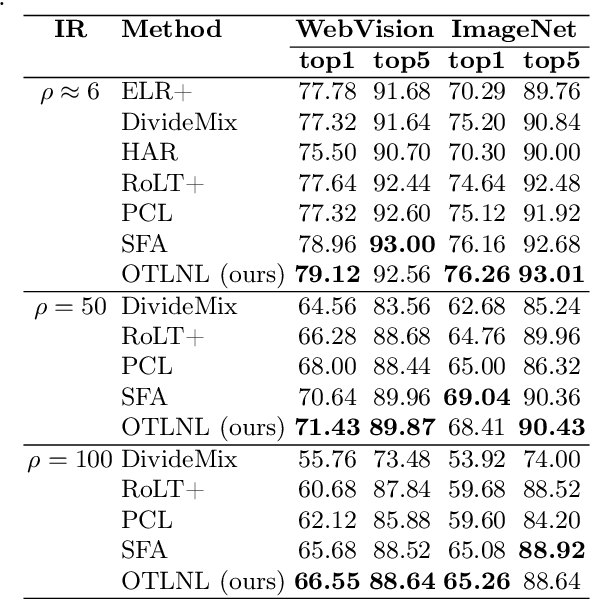

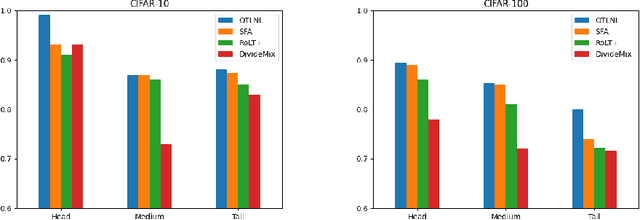

Abstract:Noisy labels, which are common in real-world datasets, can significantly impair the training of deep learning models. However, recent adversarial noise-combating methods overlook the long-tailed distribution of real data, which can significantly harm the effect of denoising strategies. Meanwhile, the mismanagement of noisy labels further compromises the model's ability to handle long-tailed data. To tackle this issue, we propose a novel approach to manage data characterized by both long-tailed distributions and noisy labels. First, we introduce a loss-distance cross-selection module, which integrates class predictions and feature distributions to filter clean samples, effectively addressing uncertainties introduced by noisy labels and long-tailed distributions. Subsequently, we employ optimal transport strategies to generate pseudo-labels for the noise set in a semi-supervised training manner, enhancing pseudo-label quality while mitigating the effects of sample scarcity caused by the long-tailed distribution. We conduct experiments on both synthetic and real-world datasets, and the comprehensive experimental results demonstrate that our method surpasses current state-of-the-art methods. Our code will be available in the future.

Noisy Label Processing for Classification: A Survey

Apr 05, 2024

Abstract:In recent years, deep neural networks (DNNs) have achieved remarkable success in computer vision tasks, and the success of DNNs often depends greatly on the richness of data. However, acquiring data and high-quality ground truth requires a lot of manpower and money. In the long, tedious process of data annotation, annotators are prone to making mistakes, resulting in incorrect image labels, i.e., noisy labels. The emergence of noisy labels is inevitable. Moreover, since research shows that DNNs can easily fit noisy labels, the existence of noisy labels will cause significant damage to the model training process. Therefore, it is crucial to combat noisy labels for computer vision tasks, especially for classification tasks. In this survey, we first comprehensively review the evolution of different deep learning approaches for noisy label combating in the image classification task. In addition, we also review different noise patterns that have been proposed to design robust algorithms. Furthermore, we explore the inner pattern of real-world label noise and propose an algorithm to generate a synthetic label noise pattern guided by real-world data. We test the algorithm on the well-known real-world dataset CIFAR-10N to form a new real-world data-guided synthetic benchmark and evaluate some typical noise-robust methods on the benchmark.

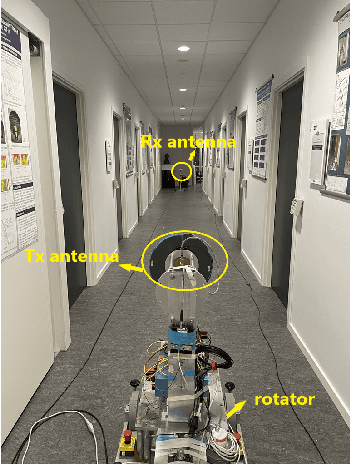

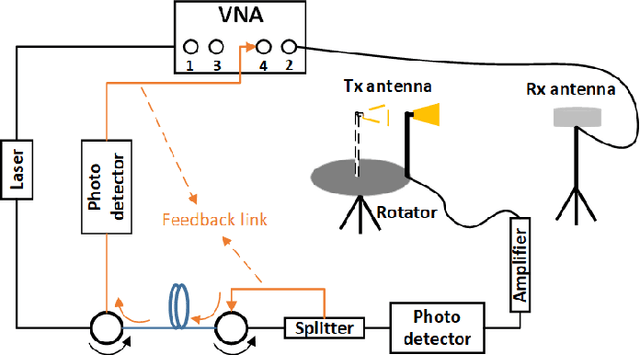

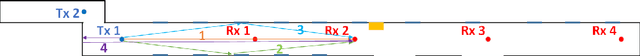

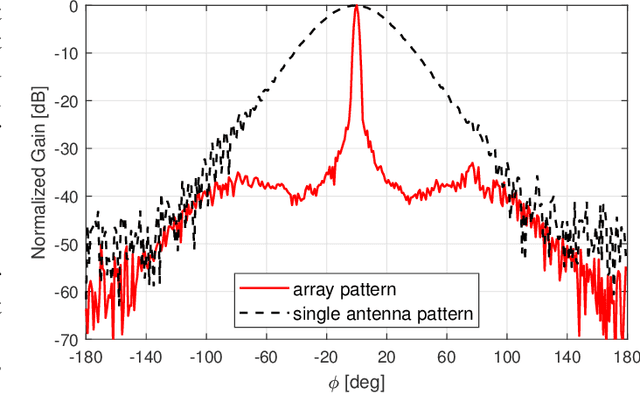

Omni-directional Pathloss Measurement Based on Virtual Antenna Array with Directional Antennas

Aug 07, 2022

Abstract:Omni-directional pathloss, which refers to the pathloss when omni-directional antennas are used at the link ends, is essential for system design and evaluation. In the millimeter-wave (mm-Wave) and beyond bands, high-gain directional antennas are widely used for channel measurements due to the significant signal attenuation. Conventional methods for omni-directional pathloss estimation are based on a directional scanning sounding (DSS) system, i.e., a single directional antenna placed at the center of a rotator capturing signals from different rotation angles. The omni-directional pathloss is obtained by either summing up all the powers above the noise level or just summing up the powers of detected propagation paths. However, both methods are problematic with the relatively wide main beams and high side-lobes of the directional antennas. In this letter, a directional-antenna-based virtual antenna array (VAA) system is implemented for omni-directional pathloss estimation. The VAA scheme uses the same measurement system as the DSS, yet it offers high angular resolution (i.e., a narrow main beam) and low side-lobes, which are essential for achieving accurate multipath detection in the power angular delay profiles (PADPs) and thereby obtaining accurate omni-directional pathloss. A measurement campaign was designed and conducted in an indoor corridor at 28-30 GHz to verify the effectiveness of the proposed method.
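The first conventional approach mentioned in the abstract, summing all received powers above the noise level, can be sketched as follows. This is only an illustration under stated assumptions, not the letter's implementation: the function name `omni_pathloss_db`, its parameters, and the single `antenna_gain_db` correction term are hypothetical, and the abstract does not say how antenna gain is de-embedded.

```python
import math


def omni_pathloss_db(rx_power_dbm, tx_power_dbm, noise_floor_dbm,
                     antenna_gain_db=0.0):
    """Estimate omni-directional pathloss from directional scan powers.

    rx_power_dbm: received power (dBm) at each rotation angle / delay bin.
    antenna_gain_db: hypothetical combined gain correction (an assumption).
    """
    # Keep only samples above the noise floor and convert dBm to milliwatts.
    linear_mw = [10 ** (p / 10) for p in rx_power_dbm if p > noise_floor_dbm]
    if not linear_mw:
        raise ValueError("no samples above the noise floor")
    # Sum in the linear domain, then convert the total back to dBm.
    total_dbm = 10 * math.log10(sum(linear_mw))
    # Pathloss is transmitted power (plus gain correction) minus received power.
    return tx_power_dbm + antenna_gain_db - total_dbm
```

The second conventional approach would apply the same summation to the powers of detected propagation paths only, rather than to every sample above the noise floor.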
