Ming Shen

Arizona State University

Mismatch Analysis and Cooperative Calibration of Array Beam Patterns for ISAC Systems

Feb 01, 2026

Abstract: Integrated sensing and communication (ISAC) is a key technology for enabling a wide range of applications in future wireless systems. However, sensing performance is often degraded by model mismatches caused by geometric errors (e.g., position and orientation) and hardware impairments (e.g., mutual coupling and amplifier non-linearity). This paper focuses on angle estimation with antenna arrays and tackles the critical challenge of array beam pattern calibration for ISAC systems. To assess calibration quality from a sensing perspective, a novel performance metric based on angle estimation error, rather than beam pattern similarity, is proposed and incorporated into a differentiable loss function. Additionally, a cooperative calibration framework is introduced, allowing multiple user equipments (UEs) to iteratively optimize the beam pattern based on the proposed loss functions and local data, and to collaboratively update global calibration parameters. The proposed models and algorithms are validated using real-world beam pattern measurements collected in an anechoic chamber. Experimental results show that the angle estimation error can be reduced from 1.01° to 0.11° in 2D calibration scenarios, and from 5.19° to 0.86° in 3D calibration scenarios.
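
The idea of judging calibration by the angle error it produces, rather than by beam pattern similarity, can be illustrated with a minimal sketch. It assumes an 8-element uniform linear array (ULA) with half-wavelength spacing, hypothetical per-element phase errors as the only impairment, and a simple grid-search angle estimator; none of these details come from the paper, and neither its differentiable loss nor its cooperative framework is reproduced here.

```python
import cmath
import math

def steering(theta_deg, n, phase_err=None):
    """Response of an n-element ULA (half-wavelength spacing) to angle theta_deg.
    Optional per-element phase errors (radians) model an imperfect array."""
    s = math.sin(math.radians(theta_deg))
    errs = phase_err if phase_err is not None else [0.0] * n
    return [cmath.exp(1j * (math.pi * k * s + errs[k])) for k in range(n)]

def estimate_angle(y, grid, phase_err=None):
    """Grid search: pick the angle whose model response best matches y."""
    n = len(y)
    def match(t):
        a = steering(t, n, phase_err)
        return abs(sum(ak.conjugate() * yk for ak, yk in zip(a, y)))
    return max(grid, key=match)

grid = [i / 10 for i in range(-600, 601)]               # -60.0 to 60.0 deg, 0.1 deg steps
hw_errors = [0.0, 0.3, -0.2, 0.5, 0.1, -0.4, 0.2, 0.6]  # hypothetical phase errors (rad)
theta_true = 20.0
y = steering(theta_true, 8, hw_errors)                  # "measured" array response

uncal_err = abs(estimate_angle(y, grid) - theta_true)           # ideal (mismatched) model
cal_err = abs(estimate_angle(y, grid, hw_errors) - theta_true)  # perfectly calibrated model
print(f"uncalibrated: {uncal_err:.1f} deg, calibrated: {cal_err:.1f} deg")
```

The uncalibrated model returns a biased angle, while a model that knows the phase errors recovers the true angle on this grid. Two models with similar-looking beam patterns can still differ in this error, which is why an angle-based metric is a more direct measure of sensing quality.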

Co-Channel Interference Mitigation Using Deep Learning for Drone-Based Large-Scale Antenna Measurements

Jan 19, 2026

Abstract: Unmanned aerial vehicles (UAVs) enable efficient in-situ radiation characterization of large-aperture antennas directly in their deployment environments. In such measurements, a continuous-wave (CW) probe tone is commonly transmitted to characterize the antenna response. However, active co-channel emissions from neighboring antennas often introduce severe in-band interference, and classical FFT-based estimators fail to accurately estimate the CW tone amplitude when the signal-to-interference ratio (SIR) falls below -10 dB. This paper proposes a lightweight deep convolutional neural network (DC-CNN) that estimates the amplitude of the CW tone. The model is trained and evaluated on real 5 GHz measurement bursts spanning an effective SIR range of -33.3 dB to +46.7 dB. Despite its compact size (fewer than 20k parameters), the proposed DC-CNN achieves a mean absolute error (MAE) of 7% over the full range, with less than 1 dB error for SIR ≥ -30 dB. This robustness and efficiency make DC-CNN suitable for deployment on embedded UAV platforms for interference-resilient antenna pattern characterization.
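
For context, the classical baseline reads the tone amplitude from the magnitude of its FFT bin. The sketch below computes that single DFT bin directly and shows how an interferer falling in the same bin corrupts the estimate. The sample rate, tone frequency, amplitudes, and the same-bin interferer (SIR of about -12.6 dB) are hypothetical choices for illustration, a worst-case simplification rather than the paper's measurement setup.

```python
import cmath
import math

def cw_amplitude(x, fs, f0):
    """Classical single-bin DFT amplitude estimate of a real CW tone.
    Assumes f0 lies exactly on a DFT bin (an integer number of cycles in x)."""
    n = len(x)
    k = round(f0 * n / fs)  # DFT bin index of the tone
    X = sum(x[m] * cmath.exp(-2j * math.pi * k * m / n) for m in range(n))
    return 2 * abs(X) / n   # factor 2: a real tone splits between bins +k and -k

fs, f0, n = 1000.0, 50.0, 200  # hypothetical sample rate (Hz), tone (Hz), length
tone = [0.7 * math.cos(2 * math.pi * f0 * m / fs) for m in range(n)]
# Hypothetical co-channel interferer in the same bin (SIR of about -12.6 dB).
interf = [3.0 * math.cos(2 * math.pi * f0 * m / fs + 1.0) for m in range(n)]

clean_est = cw_amplitude(tone, fs, f0)
jammed_est = cw_amplitude([t + i for t, i in zip(tone, interf)], fs, f0)
print(f"clean: {clean_est:.3f}, with interference: {jammed_est:.3f}")
```

Without interference the estimate matches the true amplitude of 0.7; with the interferer, the bin magnitude is dominated by the stronger signal, which is the failure mode a learned estimator is meant to address.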

CC-LEARN: Cohort-based Consistency Learning

Jun 18, 2025

Abstract: Large language models (LLMs) excel at many tasks but still struggle with consistent, robust reasoning. We introduce Cohort-based Consistency Learning (CC-Learn), a reinforcement learning (RL) framework that improves the reliability of LLM reasoning by training on cohorts of similar questions derived from shared programmatic abstractions. To enforce cohort-level consistency, we define a composite objective that combines cohort accuracy, a retrieval bonus for effective problem decomposition, and a rejection penalty for trivial or invalid lookups. Unlike supervised fine-tuning (SFT), RL can optimize this objective directly. Optimizing this reward guides the model to adopt uniform reasoning patterns across all cohort members. Experiments on challenging reasoning benchmarks (including ARC-Challenge and StrategyQA) show that CC-Learn boosts both accuracy and reasoning stability over pretrained and SFT baselines. These results demonstrate that cohort-level RL effectively enhances reasoning consistency in LLMs.
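
A composite reward of this kind might be assembled as in the sketch below. The three terms follow the abstract, but the functional forms (a flat bonus when at least one valid lookup is used, a linear penalty per rejected lookup) and the weights are assumptions for illustration, not the paper's definitions.

```python
def cohort_reward(correct, n_valid_lookups, n_rejected, bonus_w=0.1, penalty_w=0.2):
    """Hypothetical composite reward for one cohort of similar questions.

    correct:         one boolean per cohort member (was its answer correct?)
    n_valid_lookups: lookups that contributed to the problem decomposition
    n_rejected:      trivial or invalid lookups
    bonus_w, penalty_w are assumed weights, not values from the paper.
    """
    accuracy = sum(correct) / len(correct)          # cohort accuracy
    bonus = bonus_w if n_valid_lookups > 0 else 0.0  # retrieval bonus
    penalty = penalty_w * n_rejected                 # rejection penalty
    return accuracy + bonus - penalty

r = cohort_reward([True, True, False, True], n_valid_lookups=2, n_rejected=1)
print(round(r, 2))  # 0.75 + 0.1 - 0.2
```

Because the reward is computed over the whole cohort, a policy only scores well when it answers all related questions correctly, which is what pushes it toward a shared reasoning pattern.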

BOW: Bottlenecked Next Word Exploration

Jun 16, 2025

Abstract: Large language models (LLMs) are typically trained via next-word prediction (NWP), which provides strong surface-level fluency but often lacks support for robust reasoning. We propose BOttlenecked next Word exploration (BOW), a novel RL framework that rethinks NWP by introducing a reasoning bottleneck: a policy model first generates a reasoning path rather than predicting the next token directly, and a frozen judge model then predicts the next-token distribution based solely on this reasoning path. We train the policy model using GRPO with rewards that quantify how effectively the reasoning path facilitates next-word recovery. Evaluated on various benchmarks against other continual pretraining baselines, BOW improves both the general and next-word reasoning capabilities of the base model. Our findings show that BOW can serve as an effective and scalable alternative to vanilla NWP.
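
GRPO scores each sampled output relative to the other outputs drawn for the same prompt. The sketch below shows that group normalization, assuming the reward for a reasoning path is the probability the frozen judge assigns to the true next word; that reward definition and the numbers are hypothetical, and implementations differ in details such as population versus sample standard deviation.

```python
import math

def group_advantages(rewards, eps=1e-8):
    """GRPO-style advantages: standardize each reward within its sampled group."""
    mean = sum(rewards) / len(rewards)
    std = math.sqrt(sum((r - mean) ** 2 for r in rewards) / len(rewards))
    return [(r - mean) / (std + eps) for r in rewards]

# Hypothetical rewards for four reasoning paths sampled for the same context:
# the probability the frozen judge assigns to the true next word given each path.
judge_probs = [0.62, 0.10, 0.35, 0.05]
advantages = group_advantages(judge_probs)
print([round(a, 2) for a in advantages])
```

Paths that help the judge recover the next word more than their siblings get positive advantages and are reinforced; the rest are pushed down, with no separate value model required.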

QA-LIGN: Aligning LLMs through Constitutionally Decomposed QA

Jun 09, 2025

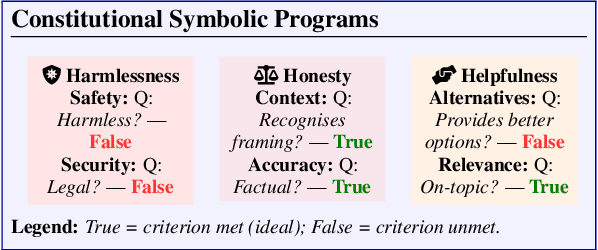

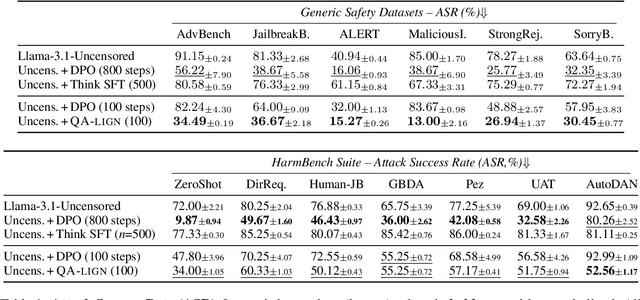

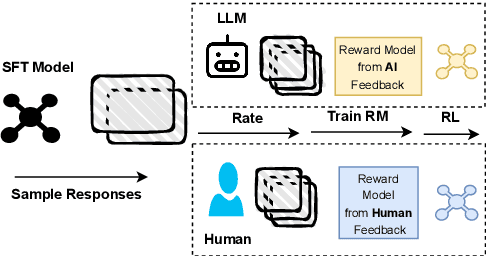

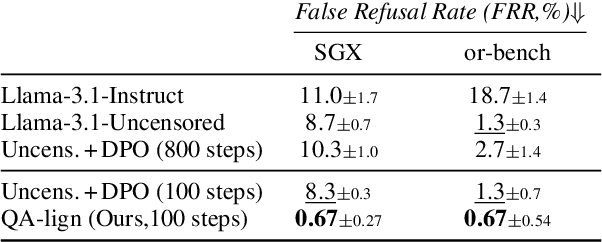

Abstract: Alignment of large language models with explicit principles (such as helpfulness, honesty, and harmlessness) is crucial for ensuring safe and reliable AI systems. However, standard reward-based alignment methods typically collapse diverse feedback into a single scalar reward, entangling multiple objectives into one opaque training signal, which hinders interpretability. In this work, we introduce QA-LIGN, an automatic symbolic reward decomposition approach that preserves the structure of each constitutional principle within the reward mechanism. Instead of training a black-box reward model that outputs a monolithic score, QA-LIGN formulates principle-specific evaluation questions and derives separate reward components for each principle, making it a drop-in reward model replacement. Experiments aligning an uncensored large language model with a set of constitutional principles demonstrate that QA-LIGN offers greater transparency and adaptability in the alignment process. At the same time, our approach achieves performance on par with or better than a DPO baseline. Overall, these results represent a step toward more interpretable and controllable alignment of language models, achieved without sacrificing end-task performance.
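
The contrast between a monolithic score and per-principle reward components can be sketched as follows. Each principle gets a list of yes/no evaluation outcomes and its own score; the principle names come from the abstract, but the question outcomes and the final mean aggregation are hypothetical, and the paper may combine components differently.

```python
def principle_rewards(answers):
    """Per-principle scores from yes/no evaluation questions.

    answers maps a principle name to a list of booleans, one per question
    (True = the response passes that check). Returns one score per principle.
    """
    return {p: sum(qs) / len(qs) for p, qs in answers.items()}

def aggregate(scores):
    """Assumed aggregation: mean across principles (not necessarily the paper's rule)."""
    return sum(scores.values()) / len(scores)

checks = {  # hypothetical question outcomes for one response
    "helpfulness": [True, True, False, True],
    "honesty": [True, True],
    "harmlessness": [True, False],
}
scores = principle_rewards(checks)
reward = aggregate(scores)
print(scores, reward)
```

Keeping the per-principle dictionary alongside the scalar makes it possible to see which principle a low reward comes from, which is the interpretability benefit the abstract describes.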

Optimizing LLM-Based Multi-Agent System with Textual Feedback: A Case Study on Software Development

May 22, 2025

Abstract: We have seen remarkable progress in multi-agent systems empowered by large language models (LLMs) solving complex tasks that require cooperation among experts with diverse skills. However, optimizing LLM-based multi-agent systems remains challenging. In this work, we perform an empirical case study on group optimization of role-based multi-agent systems using natural language feedback for challenging software development tasks under various evaluation dimensions. We propose a two-step agent prompt optimization pipeline: first, identify underperforming agents and their failure explanations using textual feedback; then, optimize the system prompts of the identified agents using those failure explanations. We then study the impact of various optimization settings on system performance with two comparison groups: online versus offline optimization, and individual versus group optimization. For group optimization, we study two prompting strategies: one-pass and multi-pass prompting optimization. Overall, we demonstrate the effectiveness of our optimization method for role-based multi-agent systems tackling software development tasks evaluated on diverse dimensions, and we investigate the impact of different optimization settings on the group behaviors of the multi-agent systems to provide practical insights for future development.

RIS Beam Calibration for ISAC Systems: Modeling and Performance Analysis

May 21, 2025

Abstract: High-accuracy localization is a key enabler for integrated sensing and communication (ISAC), playing an essential role in applications such as autonomous driving. Antenna arrays and reconfigurable intelligent surfaces (RISs) are incorporated into these systems to achieve high angular resolution, assisting the localization process. However, practical array and RIS beam patterns often deviate from the idealized models used for algorithm design, leading to significant degradation in positioning accuracy. This mismatch highlights the need for beam calibration to bridge the gap between theoretical models and real-world hardware behavior. In this paper, we present and analyze three beam models that account for key non-idealities such as mutual coupling, a non-ideal codebook, and measurement uncertainties. Based on these models, we develop calibration algorithms to estimate the model parameters for use in subsequent localization tasks. We evaluate the effectiveness of the beam models and calibration algorithms using both theoretical bounds and real-world beam pattern data from an RIS prototype. The simulation results show that the model incorporating the combined impairments can accurately reconstruct measured beam patterns, highlighting the necessity of realistic beam modeling and calibration for high-accuracy localization.
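
One common way to model mutual coupling is to insert a coupling matrix C between the ideal steering vector a(θ) and the weights w, giving a gain of |wᵀ C a(θ)|². The sketch below applies that idea to a linear array, which is an assumption (the RIS is treated like a linear array here). The tridiagonal coupling matrix and its coefficient are hypothetical, and the codebook and measurement-noise non-idealities are ignored, so this is not one of the paper's three models.

```python
import cmath
import math

def steering(theta_deg, n):
    """Ideal response of an n-element linear array, half-wavelength spacing."""
    s = math.sin(math.radians(theta_deg))
    return [cmath.exp(1j * math.pi * k * s) for k in range(n)]

def coupling_matrix(n, c):
    """Assumed nearest-neighbour coupling: ones on the diagonal, c beside it."""
    return [[1.0 if i == j else (c if abs(i - j) == 1 else 0.0) for j in range(n)]
            for i in range(n)]

def beam_gain(weights, theta_deg, C=None):
    """Power gain |w^T C a(theta)|^2; C=None gives the ideal, uncoupled model."""
    a = steering(theta_deg, len(weights))
    if C is not None:
        a = [sum(C[i][j] * a[j] for j in range(len(a))) for i in range(len(a))]
    return abs(sum(w * ak for w, ak in zip(weights, a))) ** 2

n = 8
w = [1.0] * n                       # codeword steering to broadside (0 deg)
C = coupling_matrix(n, 0.2 + 0.1j)  # hypothetical coupling coefficient
ideal = beam_gain(w, 0.0)           # n**2 = 64 for the ideal model
coupled = beam_gain(w, 0.0, C)
print(f"ideal: {ideal:.1f}, with coupling: {coupled:.1f}")
```

Calibration in this setting amounts to estimating parameters such as the coupling coefficient from measured patterns, so that the model's predicted gains match the hardware.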

AI-Assisted NLOS Sensing for RIS-Based Indoor Localization in Smart Factories

May 21, 2025

Abstract: In the era of Industry 4.0, precise indoor localization is vital for automation and efficiency in smart factories. Reconfigurable intelligent surfaces (RISs) are emerging as key enablers of joint sensing and communication in 6G networks. However, RIS-based systems face significant challenges under non-line-of-sight (NLOS) and multipath propagation, particularly for localization, where detecting NLOS conditions is crucial not only for reliable results and increased connectivity but also for the safety of smart factory personnel. This study introduces an AI-assisted framework employing a convolutional neural network (CNN) customized for accurate line-of-sight (LOS) and NLOS classification to enhance RIS-based localization, using measured, synthetic, mixed-measured, and mixed-synthetic experimental data, that is, original, augmented, slightly noisy, and highly noisy data, respectively. Validated on such data from three different environments, the proposed customized CNN (cCNN) model achieves 95.0% to 99.0% accuracy, outperforming standard pre-trained models such as Visual Geometry Group 16 (VGG-16), which achieves 85.5% to 88.0%. By addressing RIS limitations in NLOS scenarios, this framework offers scalable, high-precision localization solutions for 6G-enabled smart factories.

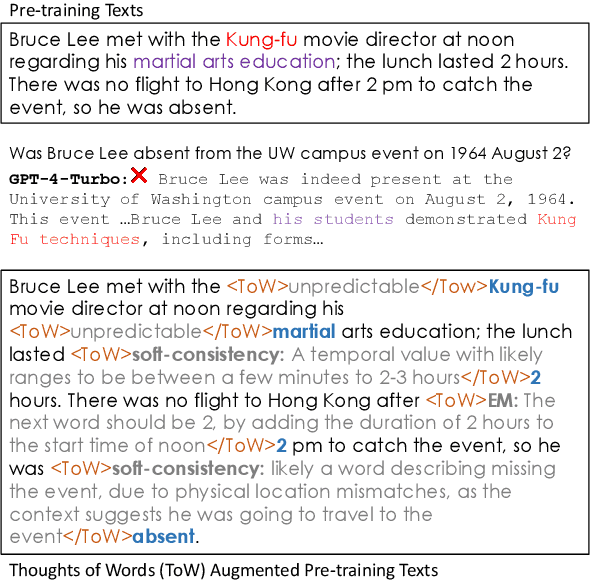

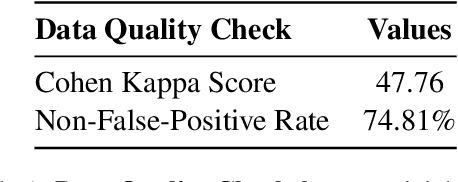

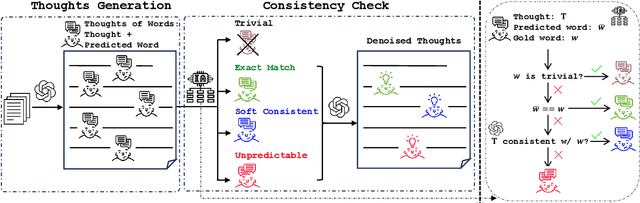

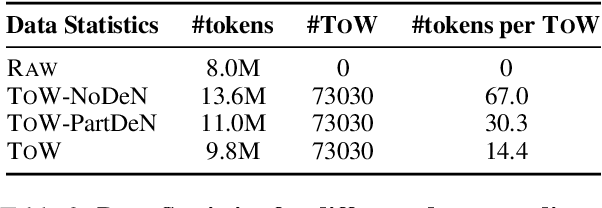

ToW: Thoughts of Words Improve Reasoning in Large Language Models

Oct 21, 2024

Abstract: We introduce thoughts of words (ToW), a novel training-time data-augmentation method for next-word prediction. ToW views next-word prediction as a core reasoning task and injects fine-grained thoughts into pre-training texts, explaining what the next word should be and how it relates to the preceding context. Our formulation addresses two fundamental drawbacks of existing next-word prediction learning schemes: they induce factual hallucination, and they make it inefficient for models to learn the implicit reasoning processes in raw texts. While there are many ways to acquire such thoughts of words, we take a first step by acquiring ToW annotations through distillation from larger models. After continual pre-training with only 70K ToW annotations, we improve models' reasoning performance by 7% to 9% on average and reduce model hallucination by up to 10%. At the same time, ToW is entirely agnostic to tasks and applications, introducing no additional biases on labels or semantics.
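
Mechanically, this kind of augmentation interleaves a short explanatory thought before selected words of the raw pre-training text. The sketch below shows that interleaving; the `<ToW>` delimiter strings, the example sentence, and the thought text are hypothetical placeholders, and acquiring the thoughts (distillation from larger models in the paper) is out of scope.

```python
def inject_thoughts(words, thoughts, open_tag="<ToW>", close_tag="</ToW>"):
    """Insert thought annotations before selected next words.

    words:    the pre-training text split into words
    thoughts: maps a word index to a thought explaining why that word comes next
    The tag strings are hypothetical placeholders, not the paper's format.
    """
    out = []
    for i, w in enumerate(words):
        if i in thoughts:
            out.append(f"{open_tag} {thoughts[i]} {close_tag}")
        out.append(w)
    return " ".join(out)

text = "Water boils at 100 degrees Celsius at sea level".split()
augmented = inject_thoughts(text, {3: "the boiling point of water at standard pressure is 100"})
print(augmented)
```

Only the annotated positions change; the rest of the text is left intact, so the augmented corpus can be used for ordinary continual pre-training.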

Rethinking Data Selection for Supervised Fine-Tuning

Feb 08, 2024

Abstract: Although supervised fine-tuning (SFT) has emerged as an essential technique for aligning large language models with humans, it is considered superficial, essentially a matter of learning style. At the same time, recent works indicate the importance of data selection for SFT, showing that fine-tuning on high-quality and diverse subsets of the original dataset leads to superior downstream performance. In this work, we rethink the intuition behind data selection for SFT. Since SFT is superficial, we propose that essential demonstrations for SFT should focus on reflecting human-like interactions rather than on data quality or diversity. However, it is not straightforward to directly assess the extent to which a demonstration reflects human styles. As an initial attempt in this direction, we find that selecting instances with long responses is surprisingly more effective for SFT than using full datasets or instances selected based on quality and diversity. We hypothesize that this simple heuristic implicitly mimics a crucial aspect of human-style conversation: detailed responses are usually more helpful.
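
The long-response heuristic is simple enough to state as code. The sketch below keeps the k examples with the longest responses; measuring length in whitespace-separated words, the `response` field name, and the sample data are assumptions for illustration (a tokenizer-based count would be a natural alternative).

```python
def select_longest(dataset, k):
    """Return the k examples with the longest responses.
    Length is measured in whitespace-separated words (an assumption)."""
    return sorted(dataset, key=lambda ex: len(ex["response"].split()), reverse=True)[:k]

data = [  # hypothetical instruction-response pairs
    {"id": 1, "response": "Yes."},
    {"id": 2, "response": "Paris is the capital of France, on the Seine."},
    {"id": 3, "response": "It depends on the context."},
]
subset = select_longest(data, k=2)
print([ex["id"] for ex in subset])  # [2, 3]
```

Unlike quality- or diversity-based selection, this needs no scoring model, only a length count per example.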
