Xin Tong

Physics-Inspired Target Shape Detection and Reconstruction in mmWave Communication Systems

Feb 05, 2026

Abstract: The integration of sensing and communication (ISAC) is an essential function of future wireless systems. Owing to their large available bandwidth, millimeter-wave (mmWave) ISAC systems can achieve high sensing accuracy. In this paper, we consider the multiple base-station (BS) collaborative sensing problem in a multi-input multi-output (MIMO) orthogonal frequency division multiplexing (OFDM) mmWave communication system. Our aim is to sense the shape of a remote target from the collected signals, which consist of both reflected and scattered components. We first characterize the mmWave scattering and reflection effects based on the Lambertian scattering model. We then apply the periodogram technique to obtain a rough detection of scattering points, and further incorporate the subspace method to detect scattering and reflection points more precisely. Building on these detections, we design a reconstruction algorithm based on the Hough transform and principal component analysis (PCA) for the scenario of a single convex polygon target. To improve the accuracy and completeness of the reconstruction, we propose a method that further fuses the scattering and reflection points. Extensive simulation results validate the effectiveness of the proposed algorithms.
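The periodogram step mentioned in this abstract can be illustrated with a minimal sketch. It assumes a single noise-free propagation path and estimates only its delay from the per-subcarrier channel response. The function name `periodogram_delay`, the zero-padding factor, and the numerical values are illustrative assumptions, not the authors' implementation; the multi-BS MIMO processing and the subspace refinement are not shown.

```python
import numpy as np

def periodogram_delay(h_freq, subcarrier_spacing, pad=8):
    """Estimate one path delay (seconds) from an OFDM frequency response.

    Assumes h_freq[k] ~ exp(-2j*pi*k*subcarrier_spacing*tau) for one path.
    The periodogram is the squared magnitude of a zero-padded IFFT; its
    peak index n satisfies n / n_fft = subcarrier_spacing * tau.
    """
    n_fft = pad * len(h_freq)
    profile = np.abs(np.fft.ifft(h_freq, n=n_fft)) ** 2
    peak = int(np.argmax(profile))
    return peak / (n_fft * subcarrier_spacing)

# Hypothetical setup: 256 subcarriers, 120 kHz spacing, 200 ns path delay.
spacing = 120e3
true_delay = 200e-9
k = np.arange(256)
response = np.exp(-2j * np.pi * k * spacing * true_delay)
estimate = periodogram_delay(response, spacing)
```

The estimate lands on the delay grid of the padded IFFT, so its error is bounded by one grid step, `1 / (pad * N * subcarrier_spacing)`. A rough estimate of this kind is what a subspace method would then refine.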

PretrainRL: Alleviating Factuality Hallucination of Large Language Models at the Beginning

Feb 02, 2026

Abstract: Large language models (LLMs), despite their powerful capabilities, suffer from factual hallucinations where they generate verifiable falsehoods. We identify a root cause of this issue: the imbalanced data distribution in the pretraining corpus, which leads to a state of "low-probability truth" and "high-probability falsehood". Recent approaches, such as teaching models to say "I don't know" or post-hoc knowledge editing, either evade the problem or face catastrophic forgetting. To address this issue at its root, we propose PretrainRL, a novel framework that integrates reinforcement learning into the pretraining phase to consolidate factual knowledge. The core principle of PretrainRL is "debiasing then learning." It actively reshapes the model's probability distribution by down-weighting high-probability falsehoods, thereby making "room" for low-probability truths to be learned effectively. To enable this, we design an efficient negative sampling strategy to discover these high-probability falsehoods and introduce novel metrics to evaluate the model's probabilistic state concerning factual knowledge. Extensive experiments on three public benchmarks demonstrate that PretrainRL significantly alleviates factual hallucinations and outperforms state-of-the-art methods.

ESGaussianFace: Emotional and Stylized Audio-Driven Facial Animation via 3D Gaussian Splatting

Jan 05, 2026

Abstract: Most current audio-driven facial animation research focuses on generating videos with neutral emotions. While some studies have addressed the generation of facial videos driven by emotional audio, efficiently generating high-quality talking head videos that integrate both emotional expressions and style features remains a significant challenge. In this paper, we propose ESGaussianFace, an innovative framework for emotional and stylized audio-driven facial animation. Our approach leverages 3D Gaussian Splatting to reconstruct 3D scenes and render videos, ensuring efficient generation of 3D-consistent results. We propose an emotion-audio-guided spatial attention method that effectively integrates emotion features with audio content features. Through emotion-guided attention, the model is able to reconstruct facial details across different emotional states more accurately. To achieve emotional and stylized deformations of the 3D Gaussian points through emotion and style features, we introduce two 3D Gaussian deformation predictors. Furthermore, we propose a multi-stage training strategy, enabling the step-by-step learning of the character's lip movements, emotional variations, and style features. Our generated results exhibit high efficiency, high quality, and 3D consistency. Extensive experimental results demonstrate that our method outperforms existing state-of-the-art techniques in terms of lip movement accuracy, expression variation, and style feature expressiveness.

Multi-Head Spectral-Adaptive Graph Anomaly Detection

Dec 25, 2025

Abstract: Graph anomaly detection has broad applications in financial fraud detection and risk control. However, existing graph anomaly detection methods often face significant challenges when dealing with complex and variable abnormal patterns, as anomalous nodes are often disguised and mixed with normal nodes, leading to the coexistence of homophily and heterophily in the graph domain. Recent spectral graph neural networks have made notable progress in addressing this issue; however, current techniques typically employ fixed, globally shared filters. This 'one-size-fits-all' approach can easily cause over-smoothing, erasing critical high-frequency signals needed for fraud detection, and lacks adaptive capabilities for different graph instances. To solve this problem, we propose a Multi-Head Spectral-Adaptive Graph Neural Network (MHSA-GNN). The core innovation is the design of a lightweight hypernetwork that, conditioned on a 'spectral fingerprint' containing structural statistics and Rayleigh quotient features, dynamically generates Chebyshev filter parameters tailored to each instance. This enables a customized filtering strategy for each node and its local subgraph. Additionally, to prevent mode collapse in the multi-head mechanism, we introduce a novel dual regularization strategy that combines teacher-student contrastive learning (TSC) to ensure representation accuracy and Barlow Twins diversity loss (BTD) to enforce orthogonality among heads. Extensive experiments on four real-world datasets demonstrate that our method effectively preserves high-frequency abnormal signals and significantly outperforms existing state-of-the-art methods, showing particularly strong robustness on highly heterogeneous datasets.
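The two spectral ingredients named in this abstract, the Rayleigh quotient and a Chebyshev polynomial filter, can be sketched in a few lines. This is a generic textbook illustration and not the MHSA-GNN implementation: the hypernetwork that would generate the coefficients is omitted, the coefficient vector `theta` is hand-picked, and all function names are hypothetical.

```python
import numpy as np

def normalized_laplacian(adj):
    """Symmetric normalized Laplacian L = I - D^{-1/2} A D^{-1/2}."""
    deg = adj.sum(axis=1)
    inv_sqrt = np.where(deg > 0, 1.0 / np.sqrt(np.maximum(deg, 1e-12)), 0.0)
    return np.eye(adj.shape[0]) - inv_sqrt[:, None] * adj * inv_sqrt[None, :]

def rayleigh_quotient(lap, x):
    """x^T L x / x^T x: larger values mean x varies faster across edges."""
    return float(x @ lap @ x) / float(x @ x)

def chebyshev_filter(lap, x, theta, lam_max=2.0):
    """Apply sum_k theta[k] * T_k(L_scaled) x with the three-term recurrence.

    L_scaled = (2 / lam_max) * L - I maps the spectrum into [-1, 1].
    In an adaptive model theta would be predicted per instance; here it
    is simply passed in (assumption for illustration).
    """
    scaled = (2.0 / lam_max) * lap - np.eye(lap.shape[0])
    t_prev = x
    out = theta[0] * t_prev
    if len(theta) == 1:
        return out
    t_curr = scaled @ x
    out = out + theta[1] * t_curr
    for k in range(2, len(theta)):
        t_next = 2.0 * (scaled @ t_curr) - t_prev
        out = out + theta[k] * t_next
        t_prev, t_curr = t_curr, t_next
    return out

# 4-node cycle graph with a smooth signal and an alternating signal.
adj = np.array([[0, 1, 0, 1],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [1, 0, 1, 0]], dtype=float)
lap = normalized_laplacian(adj)
smooth = np.ones(4)
alternating = np.array([1.0, -1.0, 1.0, -1.0])
high_pass = np.array([0.5, 0.5])  # hand-picked coefficients
```

On this toy graph the coefficients `[0.5, 0.5]` act as a high-pass filter: the alternating signal passes through unchanged while the constant signal is removed. That is the kind of high-frequency content a fixed low-pass filter would erase.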

AInsteinBench: Benchmarking Coding Agents on Scientific Repositories

Dec 24, 2025

Abstract: We introduce AInsteinBench, a large-scale benchmark for evaluating whether large language model (LLM) agents can operate as scientific computing development agents within real research software ecosystems. Unlike existing scientific reasoning benchmarks that focus on conceptual knowledge, or software engineering benchmarks that emphasize generic feature implementation and issue resolution, AInsteinBench evaluates models in end-to-end scientific development settings grounded in production-grade scientific repositories. The benchmark consists of tasks derived from maintainer-authored pull requests across six widely used scientific codebases, spanning quantum chemistry, quantum computing, molecular dynamics, numerical relativity, fluid dynamics, and cheminformatics. All benchmark tasks are carefully curated through multi-stage filtering and expert review to ensure scientific challenge, adequate test coverage, and well-calibrated difficulty. By leveraging evaluation in executable environments, scientifically meaningful failure modes, and test-driven verification, AInsteinBench measures a model's ability to move beyond surface-level code generation toward the core competencies required for computational scientific research.

RecGPT-V2 Technical Report

Dec 16, 2025

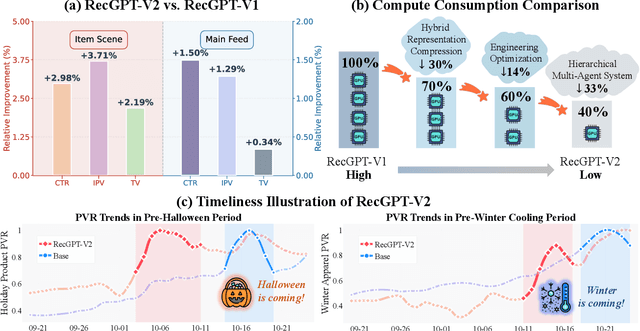

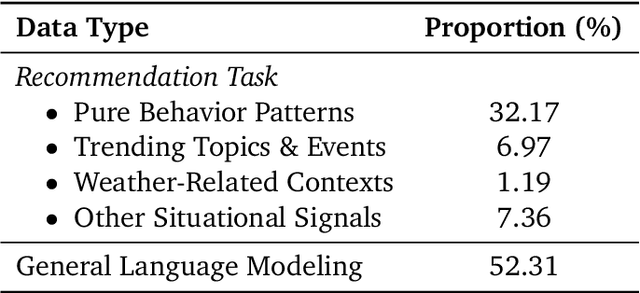

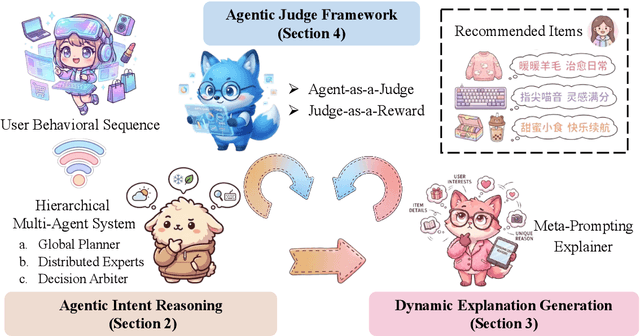

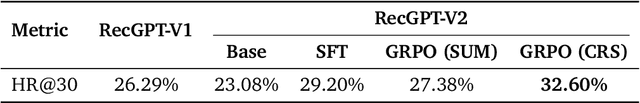

Abstract: Large language models (LLMs) have demonstrated remarkable potential in transforming recommender systems from implicit behavioral pattern matching to explicit intent reasoning. While RecGPT-V1 successfully pioneered this paradigm by integrating LLM-based reasoning into user interest mining and item tag prediction, it suffers from four fundamental limitations: (1) computational inefficiency and cognitive redundancy across multiple reasoning routes; (2) insufficient explanation diversity in fixed-template generation; (3) limited generalization under supervised learning paradigms; and (4) simplistic outcome-focused evaluation that fails to match human standards. To address these challenges, we present RecGPT-V2 with four key innovations. First, a Hierarchical Multi-Agent System restructures intent reasoning through coordinated collaboration, eliminating cognitive duplication while enabling diverse intent coverage. Combined with Hybrid Representation Inference that compresses user-behavior contexts, our framework reduces GPU consumption by 60% and improves exclusive recall from 9.39% to 10.99%. Second, a Meta-Prompting framework dynamically generates contextually adaptive prompts, improving explanation diversity by 7.3%. Third, constrained reinforcement learning mitigates multi-reward conflicts, achieving a 24.1% improvement in tag prediction and a 13.0% improvement in explanation acceptance. Fourth, an Agent-as-a-Judge framework decomposes assessment into multi-step reasoning, improving human preference alignment. Online A/B tests on Taobao demonstrate significant improvements: +2.98% CTR, +3.71% IPV, +2.19% TV, and +11.46% NER. RecGPT-V2 establishes both the technical feasibility and commercial viability of deploying LLM-powered intent reasoning at scale, bridging the gap between cognitive exploration and industrial utility.

The Bayesian Origin of the Probability Weighting Function in Human Representation of Probabilities

Oct 06, 2025

Abstract: How probability is represented in the human mind is of great interest for understanding human decision making. Classical paradoxes in decision making suggest that human perception distorts probability magnitudes. Previous accounts postulate a Probability Weighting Function that transforms perceived probabilities; however, its motivation has been debated. Recent work has sought to motivate this function in terms of noisy representations of probabilities in the human mind. Here, we present an account of the Probability Weighting Function grounded in rational inference over optimal decoding from noisy neural encoding of quantities. We show that our model accurately accounts for behavior in a lottery task and a dot counting task. It further accounts for adaptation to a bimodal short-term prior. Taken together, our results provide a unifying account grounding the human representation of probability in rational inference.
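For readers unfamiliar with the Probability Weighting Function, the sketch below evaluates one classical parametric form, the one-parameter function from Tversky and Kahneman's cumulative prospect theory. It illustrates the kind of postulated function the abstract refers to as a previous account; it is not the Bayesian model proposed in this paper. The function name `tk_weight` is a hypothetical choice for this example, and the default `gamma=0.61` is the value Tversky and Kahneman reported for gains.

```python
def tk_weight(p, gamma=0.61):
    """Tversky-Kahneman weighting: w(p) = p^g / (p^g + (1 - p)^g)^(1/g).

    With gamma < 1 the curve is inverse-S shaped: small probabilities are
    overweighted and large probabilities are underweighted. With gamma = 1
    it reduces to the identity, w(p) = p.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    numerator = p ** gamma
    denominator = (p ** gamma + (1.0 - p) ** gamma) ** (1.0 / gamma)
    return numerator / denominator

# Evaluate the distortion at a rare and at a likely event.
rare = tk_weight(0.01)
likely = tk_weight(0.9)
```

Here `rare` exceeds 0.01 and `likely` falls below 0.9, which is the distortion pattern that classical decision-making paradoxes suggest.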

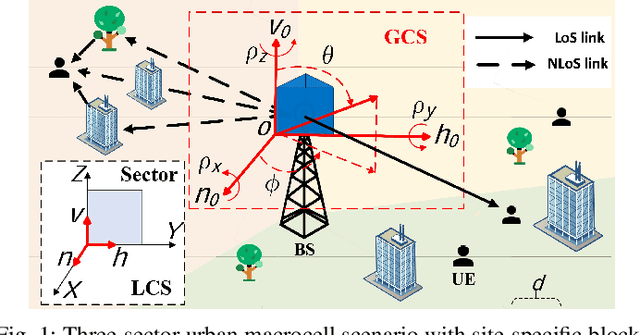

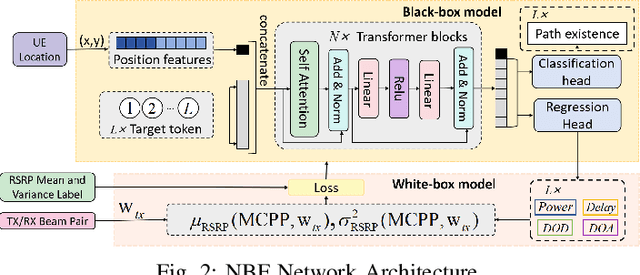

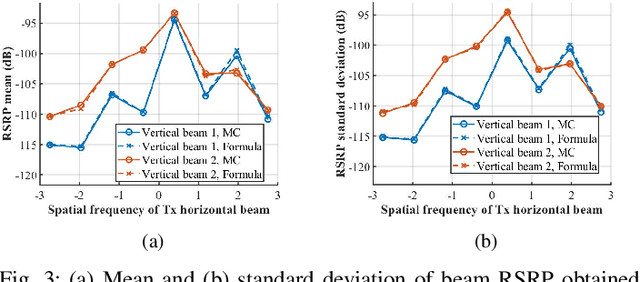

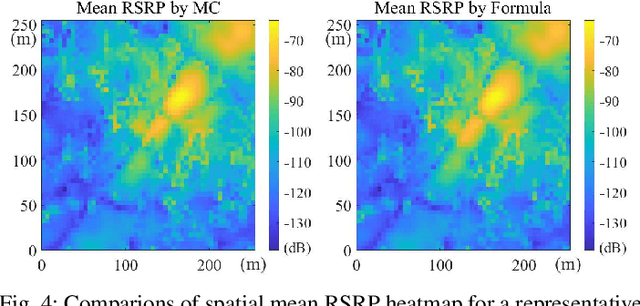

Neural Beam Field for Spatial Beam RSRP Prediction

Aug 09, 2025

Abstract: Accurately predicting beam-level reference signal received power (RSRP) is essential for beam management in dense multi-user wireless networks, yet challenging due to high measurement overhead and fast channel variations. This paper proposes Neural Beam Field (NBF), a hybrid neural-physical framework for efficient and interpretable spatial beam RSRP prediction. Central to our approach is the introduction of the Multi-path Conditional Power Profile (MCPP), which bridges site-specific multipath propagation with antenna/beam configurations via closed-form analytical modeling. We adopt a decoupled "blackbox-whitebox" design: a Transformer-based deep neural network (DNN) learns the MCPP from sparse user measurements and positions, while a physics-inspired module analytically infers beam RSRP statistics. To improve convergence and adaptivity, we further introduce a Pretrain-and-Calibrate (PaC) strategy that leverages ray-tracing priors and on-site calibration using RSRP data. Extensive simulation results demonstrate that NBF significantly outperforms conventional table-based channel knowledge maps (CKMs) and pure blackbox DNNs in prediction accuracy, training efficiency, and generalization, while maintaining a compact model size. The proposed framework offers a scalable and physically grounded solution for intelligent beam management in next-generation dense wireless networks.

RecGPT Technical Report

Jul 30, 2025

Abstract: Recommender systems are among the most impactful applications of artificial intelligence, serving as critical infrastructure connecting users, merchants, and platforms. However, most current industrial systems remain heavily reliant on historical co-occurrence patterns and log-fitting objectives, i.e., optimizing for past user interactions without explicitly modeling user intent. This log-fitting approach often leads to overfitting to narrow historical preferences, failing to capture users' evolving and latent interests. As a result, it reinforces filter bubbles and long-tail phenomena, ultimately harming user experience and threatening the sustainability of the whole recommendation ecosystem. To address these challenges, we rethink the overall design paradigm of recommender systems and propose RecGPT, a next-generation framework that places user intent at the center of the recommendation pipeline. By integrating large language models (LLMs) into key stages of user interest mining, item retrieval, and explanation generation, RecGPT transforms log-fitting recommendation into an intent-centric process. To effectively align general-purpose LLMs to the above domain-specific recommendation tasks at scale, RecGPT incorporates a multi-stage training paradigm, which integrates reasoning-enhanced pre-alignment and self-training evolution, guided by a Human-LLM cooperative judge system. Currently, RecGPT has been fully deployed on the Taobao App. Online experiments demonstrate that RecGPT achieves consistent performance gains across stakeholders: users benefit from increased content diversity and satisfaction, while merchants and the platform gain greater exposure and conversions. These improvements across all stakeholders validate that LLM-driven, intent-centric design can foster a more sustainable and mutually beneficial recommendation ecosystem.

MoGe-2: Accurate Monocular Geometry with Metric Scale and Sharp Details

Jul 03, 2025

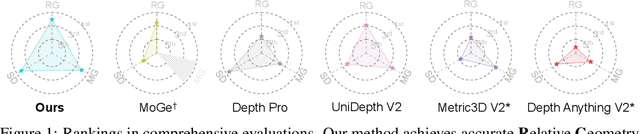

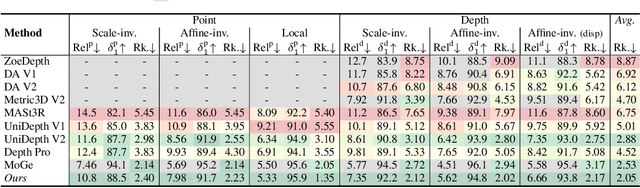

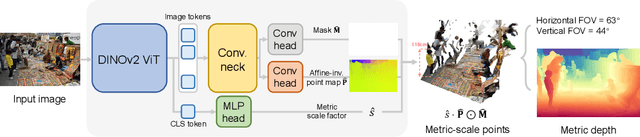

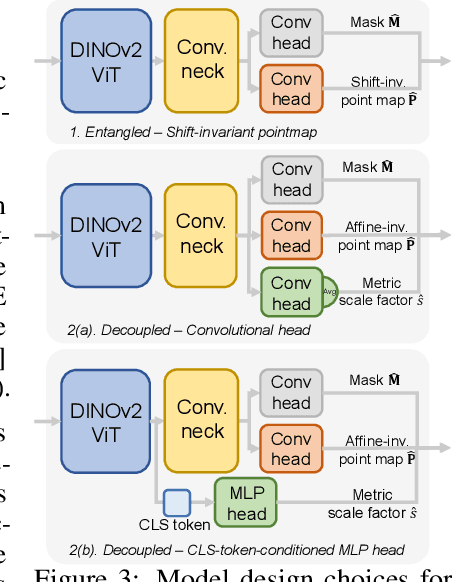

Abstract: We propose MoGe-2, an advanced open-domain geometry estimation model that recovers a metric-scale 3D point map of a scene from a single image. Our method builds upon the recent monocular geometry estimation approach, MoGe, which predicts affine-invariant point maps with unknown scales. We explore effective strategies to extend MoGe for metric geometry prediction without compromising the relative geometry accuracy provided by the affine-invariant point representation. Additionally, we discover that noise and errors in real data diminish fine-grained detail in the predicted geometry. We address this by developing a unified data refinement approach that filters and completes real data from different sources using sharp synthetic labels, significantly enhancing the granularity of the reconstructed geometry while maintaining the overall accuracy. We train our model on a large corpus of mixed datasets and conduct comprehensive evaluations, demonstrating its superior performance in achieving accurate relative geometry, precise metric scale, and fine-grained detail recovery, capabilities that no previous method has simultaneously achieved.
