Hao Luo

Being-H0.5: Scaling Human-Centric Robot Learning for Cross-Embodiment Generalization

Jan 19, 2026

Abstract:We introduce Being-H0.5, a foundational Vision-Language-Action (VLA) model designed for robust cross-embodiment generalization across diverse robotic platforms. While existing VLAs often struggle with morphological heterogeneity and data scarcity, we propose a human-centric learning paradigm that treats human interaction traces as a universal "mother tongue" for physical interaction. To support this, we present UniHand-2.0, the largest embodied pre-training recipe to date, comprising over 35,000 hours of multimodal data across 30 distinct robotic embodiments. Our approach introduces a Unified Action Space that maps heterogeneous robot controls into semantically aligned slots, enabling low-resource robots to bootstrap skills from human data and high-resource platforms. Built upon this human-centric foundation, we design a unified sequential modeling and multi-task pre-training paradigm to bridge human demonstrations and robotic execution. Architecturally, Being-H0.5 utilizes a Mixture-of-Transformers design featuring a novel Mixture-of-Flow (MoF) framework to decouple shared motor primitives from specialized embodiment-specific experts. Finally, to make cross-embodiment policies stable in the real world, we introduce Manifold-Preserving Gating for robustness under sensory shift and Universal Async Chunking to universalize chunked control across embodiments with different latency and control profiles. We empirically demonstrate that Being-H0.5 achieves state-of-the-art results on simulated benchmarks, such as LIBERO (98.9%) and RoboCasa (53.9%), while also exhibiting strong cross-embodiment capabilities on five robotic platforms.

MedGround: Bridging the Evidence Gap in Medical Vision-Language Models with Verified Grounding Data

Jan 11, 2026

Abstract:Vision-Language Models (VLMs) can generate convincing clinical narratives, yet frequently struggle to visually ground their statements. We posit this limitation arises from the scarcity of high-quality, large-scale clinical referring-localization pairs. To address this, we introduce MedGround, an automated pipeline that transforms segmentation resources into high-quality medical referring grounding data. Leveraging expert masks as spatial anchors, MedGround precisely derives localization targets, extracts shape and spatial cues, and guides VLMs to synthesize natural, clinically grounded queries that reflect morphology and location. To ensure data rigor, a multi-stage verification system integrates strict formatting checks, geometry- and medical-prior rules, and image-based visual judging to filter out ambiguous or visually unsupported samples. Finally, we present MedGround-35K, a novel multimodal medical dataset. Extensive experiments demonstrate that VLMs trained with MedGround-35K consistently achieve improved referring grounding performance, enhance multi-object semantic disambiguation, and exhibit strong generalization to unseen grounding settings. This work highlights MedGround as a scalable, data-driven approach to anchor medical language to verifiable visual evidence. Dataset and code will be released publicly upon acceptance.

Spatial-Aware VLA Pretraining through Visual-Physical Alignment from Human Videos

Dec 15, 2025

Abstract:Vision-Language-Action (VLA) models provide a promising paradigm for robot learning by integrating visual perception with language-guided policy learning. However, most existing approaches rely on 2D visual inputs to perform actions in 3D physical environments, creating a significant gap between perception and action grounding. To bridge this gap, we propose a Spatial-Aware VLA Pretraining paradigm that performs explicit alignment between visual space and physical space during pretraining, enabling models to acquire 3D spatial understanding before robot policy learning. Starting from pretrained vision-language models, we leverage large-scale human demonstration videos to extract 3D visual and 3D action annotations, forming a new source of supervision that aligns 2D visual observations with 3D spatial reasoning. We instantiate this paradigm with VIPA-VLA, a dual-encoder architecture that incorporates a 3D visual encoder to augment semantic visual representations with 3D-aware features. When adapted to downstream robot tasks, VIPA-VLA achieves significantly improved grounding between 2D vision and 3D action, resulting in more robust and generalizable robotic policies.

Mistake Notebook Learning: Selective Batch-Wise Context Optimization for In-Context Learning

Dec 12, 2025

Abstract:Large language models (LLMs) adapt to tasks via gradient fine-tuning (heavy computation, catastrophic forgetting) or In-Context Learning (ICL: low robustness, poor mistake learning). To fix this, we introduce Mistake Notebook Learning (MNL), a training-free framework with a persistent knowledge base of abstracted error patterns. Unlike prior instance/single-trajectory memory methods, MNL uses batch-wise error abstraction: it extracts generalizable guidance from multiple failures, stores insights in a dynamic notebook, and retains only baseline-outperforming guidance via hold-out validation (ensuring monotonic improvement). We show MNL nearly matches Supervised Fine-Tuning (93.9% vs 94.3% on GSM8K) and outperforms training-free alternatives on GSM8K, Spider, AIME, and KaggleDBQA. On KaggleDBQA (Qwen3-8B), MNL hits 28% accuracy (47% relative gain), outperforming Memento (15.1%) and Training-Free GRPO (22.1%), proving it's a strong training-free alternative for complex reasoning.
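The retention rule described in the abstract (keep guidance only if it outperforms the baseline on a hold-out set) can be illustrated with a minimal sketch. This is not the authors' implementation: the function `update_notebook`, the `score_on_holdout` callback, and the toy scorer are all hypothetical names introduced here for illustration.

```python
from typing import Callable, List, Sequence


def update_notebook(
    notebook: List[str],
    candidates: Sequence[str],
    score_on_holdout: Callable[[Sequence[str]], float],
) -> List[str]:
    """Add a candidate guidance entry only if it raises the hold-out score.

    Hypothetical sketch: `score_on_holdout` stands in for running the model
    on a validation split with the given notebook entries in context.
    """
    best = score_on_holdout(notebook)
    for entry in candidates:
        trial = notebook + [entry]
        trial_score = score_on_holdout(trial)
        if trial_score > best:  # strictly better than the current baseline
            notebook, best = trial, trial_score
    return notebook


def toy_score(entries: Sequence[str]) -> float:
    # Assumed toy scorer: each entry shifts accuracy by a fixed amount.
    weights = {"check units": 0.1, "guess quickly": -0.2, "verify joins": 0.05}
    return 0.5 + sum(weights.get(e, 0.0) for e in entries)


kept = update_notebook([], ["check units", "guess quickly", "verify joins"], toy_score)
print(kept)
```

Because an entry is accepted only when the hold-out score strictly increases, the score of the stored notebook never decreases in this sketch, which is the sense of "monotonic improvement" used above.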

Lumine: An Open Recipe for Building Generalist Agents in 3D Open Worlds

Nov 12, 2025

Abstract:We introduce Lumine, the first open recipe for developing generalist agents capable of completing hours-long complex missions in real time within challenging 3D open-world environments. Lumine adopts a human-like interaction paradigm that unifies perception, reasoning, and action in an end-to-end manner, powered by a vision-language model. It processes raw pixels at 5 Hz to produce precise 30 Hz keyboard-mouse actions and adaptively invokes reasoning only when necessary. Trained in Genshin Impact, Lumine successfully completes the entire five-hour Mondstadt main storyline on par with human-level efficiency and follows natural language instructions to perform a broad spectrum of tasks in both 3D open-world exploration and 2D GUI manipulation across collection, combat, puzzle-solving, and NPC interaction. In addition to its in-domain performance, Lumine demonstrates strong zero-shot cross-game generalization. Without any fine-tuning, it accomplishes 100-minute missions in Wuthering Waves and the full five-hour first chapter of Honkai: Star Rail. These promising results highlight Lumine's effectiveness across distinct worlds and interaction dynamics, marking a concrete step toward generalist agents in open-ended environments.

Generative Decoding of Compressed CSI for MIMO Precoding Design

Nov 11, 2025

Abstract:Massive MIMO systems can enhance spectral and energy efficiency, but they require accurate channel state information (CSI), which becomes costly as the number of antennas increases. While machine learning (ML) autoencoders show promise for CSI reconstruction and reducing feedback overhead, they introduce new challenges with standardization, interoperability, and backward compatibility. Also, the significant data collection needed for training makes real-world deployment difficult. To overcome these drawbacks, we propose an ML-based, decoder-only solution for compressed CSI. Our approach uses a standardized encoder for CSI compression on the user side and a site-specific generative decoder at the base station to refine the compressed CSI using environmental knowledge. We introduce two training schemes for the generative decoder: an end-to-end method and a two-stage method, both utilizing a goal-oriented loss function. Furthermore, we reduce the data collection overhead by using a site-specific digital twin to generate synthetic CSI data for training. Our simulations highlight the effectiveness of this solution across various feedback overhead regimes.

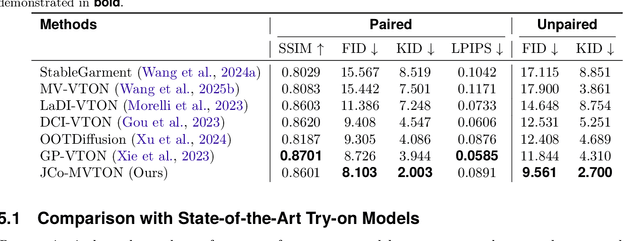

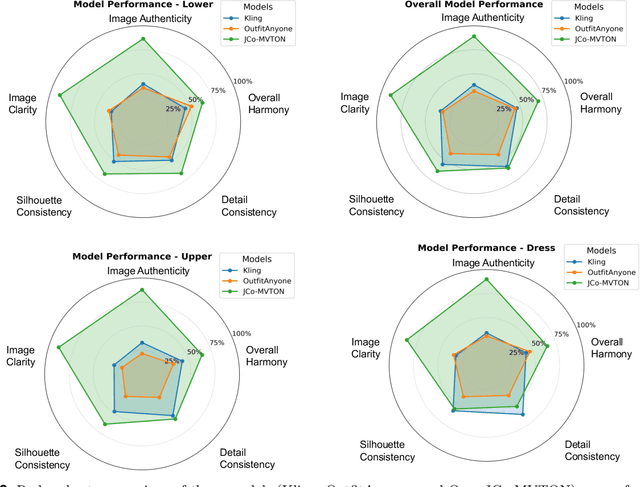

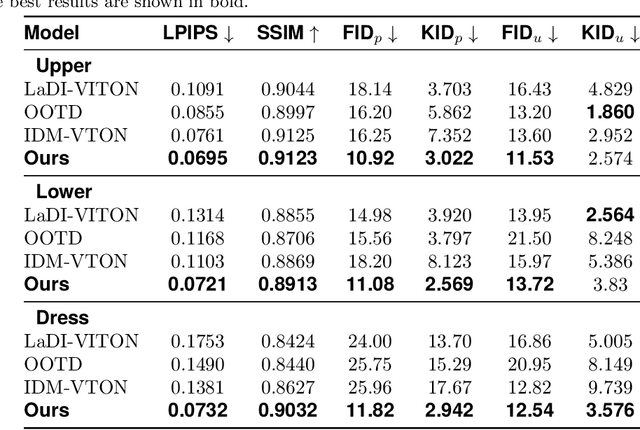

JCo-MVTON: Jointly Controllable Multi-Modal Diffusion Transformer for Mask-Free Virtual Try-on

Aug 25, 2025

Abstract:Virtual try-on systems have long been hindered by heavy reliance on human body masks, limited fine-grained control over garment attributes, and poor generalization to real-world, in-the-wild scenarios. In this paper, we propose JCo-MVTON (Jointly Controllable Multi-Modal Diffusion Transformer for Mask-Free Virtual Try-On), a novel framework that overcomes these limitations by integrating diffusion-based image generation with multi-modal conditional fusion. Built upon a Multi-Modal Diffusion Transformer (MM-DiT) backbone, our approach directly incorporates diverse control signals, such as the reference person image and the target garment image, into the denoising process through dedicated conditional pathways that fuse features within the self-attention layers. This fusion is further enhanced with refined positional encodings and attention masks, enabling precise spatial alignment and improved garment-person integration. To address data scarcity and quality, we introduce a bidirectional generation strategy for dataset construction: one pipeline uses a mask-based model to generate realistic reference images, while a symmetric "Try-Off" model, trained in a self-supervised manner, recovers the corresponding garment images. The synthesized dataset undergoes rigorous manual curation, allowing iterative improvement in visual fidelity and diversity. Experiments demonstrate that JCo-MVTON achieves state-of-the-art performance on public benchmarks including DressCode, significantly outperforming existing methods in both quantitative metrics and human evaluations. Moreover, it shows strong generalization in real-world applications, surpassing commercial systems.

AnyI2V: Animating Any Conditional Image with Motion Control

Jul 03, 2025

Abstract:Recent advancements in video generation, particularly in diffusion models, have driven notable progress in text-to-video (T2V) and image-to-video (I2V) synthesis. However, challenges remain in effectively integrating dynamic motion signals and flexible spatial constraints. Existing T2V methods typically rely on text prompts, which inherently lack precise control over the spatial layout of generated content. In contrast, I2V methods are limited by their dependence on real images, which restricts the editability of the synthesized content. Although some methods incorporate ControlNet to introduce image-based conditioning, they often lack explicit motion control and require computationally expensive training. To address these limitations, we propose AnyI2V, a training-free framework that animates any conditional image with user-defined motion trajectories. AnyI2V supports a broader range of modalities as the conditional image, including data types such as meshes and point clouds that are not supported by ControlNet, enabling more flexible and versatile video generation. Additionally, it supports mixed conditional inputs and enables style transfer and editing via LoRA and text prompts. Extensive experiments demonstrate that the proposed AnyI2V achieves superior performance and provides a new perspective in spatial- and motion-controlled video generation. Code is available at https://henghuiding.com/AnyI2V/.

WorldVLA: Towards Autoregressive Action World Model

Jun 26, 2025

Abstract:We present WorldVLA, an autoregressive action world model that unifies action and image understanding and generation. Our WorldVLA integrates a Vision-Language-Action (VLA) model and a world model in a single framework. The world model predicts future images by leveraging both action and image understanding, with the purpose of learning the underlying physics of the environment to improve action generation. Meanwhile, the action model generates the subsequent actions based on image observations, aiding visual understanding and, in turn, helping the visual generation of the world model. We demonstrate that WorldVLA outperforms standalone action and world models, highlighting the mutual enhancement between the world model and the action model. In addition, we find that the performance of the action model deteriorates when generating sequences of actions in an autoregressive manner. This phenomenon can be attributed to the model's limited generalization capability for action prediction, leading to the propagation of errors from earlier actions to subsequent ones. To address this issue, we propose an attention mask strategy that selectively masks prior actions during the generation of the current action, which shows significant performance improvement in the action chunk generation task.
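One way to picture the attention mask strategy mentioned above is a causal mask in which tokens of the action being generated cannot attend to tokens of earlier actions, while text and image tokens stay visible. The sketch below is an assumption-laden illustration, not the paper's implementation: the function `build_action_mask`, the `action_ids` encoding, and the choice to leave non-action queries fully causal are all hypothetical.

```python
from typing import List, Optional


def build_action_mask(action_ids: List[Optional[int]]) -> List[List[bool]]:
    """Return mask[q][k], True when query token q may attend to key token k.

    action_ids[i] is None for a text/image token, otherwise the index of the
    action that token i belongs to (assumed encoding, for illustration only).
    """
    n = len(action_ids)
    mask = [[False] * n for _ in range(n)]
    for q in range(n):
        for k in range(q + 1):  # causal: only current and earlier positions
            q_act, k_act = action_ids[q], action_ids[k]
            # Hide tokens of a different (earlier) action from action queries.
            hides_prior_action = (
                q_act is not None and k_act is not None and k_act != q_act
            )
            mask[q][k] = not hides_prior_action
    return mask


# Two context tokens, then two tokens each for action 0 and action 1.
mask = build_action_mask([None, None, 0, 0, 1, 1])
for row in mask:
    print("".join("1" if allowed else "0" for allowed in row))
```

In this toy layout, the first token of action 1 sees the two context tokens and itself but not the tokens of action 0, so an error in action 0 cannot be read directly when action 1 is predicted.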

DualFast: Dual-Speedup Framework for Fast Sampling of Diffusion Models

Jun 16, 2025

Abstract:Diffusion probabilistic models (DPMs) have achieved impressive success in visual generation. However, they suffer from slow inference speed due to iterative sampling. Employing fewer sampling steps is an intuitive solution, but this also introduces discretization error. Existing fast samplers make inspiring efforts to reduce discretization error through the adoption of high-order solvers, potentially reaching a plateau in terms of optimization. This raises the question: can the sampling process be accelerated further? In this paper, we re-examine the nature of sampling errors, discerning that they comprise two distinct elements: the widely recognized discretization error and the less explored approximation error. Our research elucidates the dynamics between these errors and the step by implementing a dual-error disentanglement strategy. Building on these foundations, we introduce a unified and training-free acceleration framework, DualFast, designed to enhance the speed of DPM sampling by concurrently accounting for both error types, thereby minimizing the total sampling error. DualFast is seamlessly compatible with existing samplers and significantly boosts their sampling quality and speed, particularly with extremely few sampling steps. We substantiate the effectiveness of our framework through comprehensive experiments, spanning both unconditional and conditional sampling domains, across both pixel-space and latent-space DPMs.
