Sahin Albayrak

Technische Universität Berlin, Berlin, Germany

SAGE: Tool-Augmented LLM Task Solving Strategies in Scalable Multi-Agent Environments

Jan 12, 2026

Abstract:Large language models (LLMs) have proven to work well in question-answering scenarios, but real-world applications often require access to tools for live information or actuation. For this, LLMs can be extended with tools, which are often defined in advance, also allowing for some fine-tuning for specific use cases. However, rapidly evolving software landscapes and individual services require the constant development and integration of new tools. Domain- or company-specific tools can greatly elevate the usefulness of an LLM, but such custom tools can be problematic to integrate, or the LLM may fail to reliably understand and use them. For this, we need strategies to define new tools and integrate them into the LLM dynamically, as well as robust and scalable zero-shot prompting methods that can make use of those tools in an efficient manner. In this paper, we present SAGE, a specialized conversational AI interface, based on the OPACA framework for tool discovery and execution. The integration with OPACA makes it easy to add new tools or services for the LLM to use, while SAGE itself presents rich extensibility and modularity. This not only provides the ability to seamlessly switch between different models (e.g. GPT, LLAMA), but also to add and select prompting methods, involving various setups of differently prompted agents for selecting and executing tools and evaluating the results. We implemented a number of task-solving strategies, making use of agentic concepts and prompting methods in various degrees of complexity, and evaluated those against a comprehensive set of benchmark services. The results are promising and highlight the distinct strengths and weaknesses of different task-solving strategies. Both SAGE and the OPACA framework, as well as the different benchmark services and results, are available as Open Source/Open Data on GitHub.

Streamlining the Development of Active Learning Methods in Real-World Object Detection

Aug 27, 2025

Abstract:Active learning (AL) for real-world object detection faces computational and reliability challenges that limit practical deployment. Developing new AL methods requires training multiple detectors across iterations to compare against existing approaches. This creates high costs for autonomous driving datasets where the training of one detector requires up to 282 GPU hours. Additionally, AL method rankings vary substantially across validation sets, compromising reliability in safety-critical transportation systems. We introduce object-based set similarity ($\mathrm{OSS}$), a metric that addresses these challenges. $\mathrm{OSS}$ (1) quantifies AL method effectiveness without requiring detector training by measuring similarity between training sets and target domains using object-level features. This enables the elimination of ineffective AL methods before training. Furthermore, $\mathrm{OSS}$ (2) enables the selection of representative validation sets for robust evaluation. We validate our similarity-based approach on three autonomous driving datasets (KITTI, BDD100K, CODA) using uncertainty-based AL methods as a case study with two detector architectures (EfficientDet, YOLOv3). This work is the first to unify AL training and evaluation strategies in object detection based on object similarity. $\mathrm{OSS}$ is detector-agnostic, requires only labeled object crops, and integrates with existing AL pipelines. This provides a practical framework for deploying AL in real-world applications where computational efficiency and evaluation reliability are critical. Code is available at https://mos-ks.github.io/publications/.

APR-Transformer: Initial Pose Estimation for Localization in Complex Environments through Absolute Pose Regression

May 14, 2025

Abstract:Precise initialization plays a critical role in the performance of localization algorithms, especially in the context of robotics, autonomous driving, and computer vision. Poor localization accuracy is often a consequence of inaccurate initial poses, which is particularly noticeable in GNSS-denied environments because initialization typically relies on GPS signals. Recent advances in leveraging deep neural networks for pose regression have led to significant improvements in both accuracy and robustness, especially in estimating complex spatial relationships and orientations. In this paper, we introduce APR-Transformer, a model architecture inspired by state-of-the-art methods, which predicts absolute pose (3D position and 3D orientation) using either image or LiDAR data. We demonstrate that our proposed method achieves state-of-the-art performance on established benchmark datasets such as the Oxford Radar RobotCar and DeepLoc datasets. Furthermore, we extend our experiments to include our custom complex APR-BeIntelli dataset. Additionally, we validate the reliability of our approach in GNSS-denied environments by deploying the model in real-time on an autonomous test vehicle. This showcases the practical feasibility and effectiveness of our approach. The source code is available at: https://github.com/GT-ARC/APR-Transformer.

Building Blocks for Robust and Effective Semi-Supervised Real-World Object Detection

Mar 24, 2025

Abstract:Semi-supervised object detection (SSOD) based on pseudo-labeling significantly reduces dependence on large labeled datasets by effectively leveraging both labeled and unlabeled data. However, real-world applications of SSOD often face critical challenges, including class imbalance, label noise, and labeling errors. We present an in-depth analysis of SSOD under real-world conditions, uncovering causes of suboptimal pseudo-labeling and key trade-offs between label quality and quantity. Based on our findings, we propose four building blocks that can be seamlessly integrated into an SSOD framework. Rare Class Collage (RCC): a data augmentation method that enhances the representation of rare classes by creating collages of rare objects. Rare Class Focus (RCF): a stratified batch sampling strategy that ensures a more balanced representation of all classes during training. Ground Truth Label Correction (GLC): a label refinement method that identifies and corrects false, missing, and noisy ground truth labels by leveraging the consistency of teacher model predictions. Pseudo-Label Selection (PLS): a selection method for removing low-quality pseudo-labeled images, guided by a novel metric estimating the missing detection rate while accounting for class rarity. We validate our methods through comprehensive experiments on autonomous driving datasets, resulting in up to 6% increase in SSOD performance. Overall, our investigation and novel, data-centric, and broadly applicable building blocks enable robust and effective SSOD in complex, real-world scenarios. Code is available at https://mos-ks.github.io/publications.

* Accepted to Transactions on Machine Learning Research (TMLR). OpenReview: https://openreview.net/forum?id=vRYt8QLKqK

Optimizing AI-Assisted Code Generation

Dec 14, 2024

Abstract:In recent years, the rise of AI-assisted code-generation tools has significantly transformed software development. While code generators have mainly been used to support conventional software development, their use will be extended to powerful and secure AI systems. Systems capable of generating code, such as ChatGPT, OpenAI Codex, GitHub Copilot, and AlphaCode, take advantage of advances in machine learning (ML) and natural language processing (NLP) enabled by large language models (LLMs). These models work probabilistically, however: although they can generate complex code from natural language input, there is no guarantee of the functionality or security of the generated code. To fully exploit the considerable potential of this technology, the security, reliability, functionality, and quality of the generated code must be guaranteed. This paper examines the implementation of these goals to date and explores strategies to optimize them. In addition, we explore how these systems can be optimized to create safe, high-performance, and executable artificial intelligence (AI) models, and consider how to improve their accessibility to make AI development more inclusive and equitable.

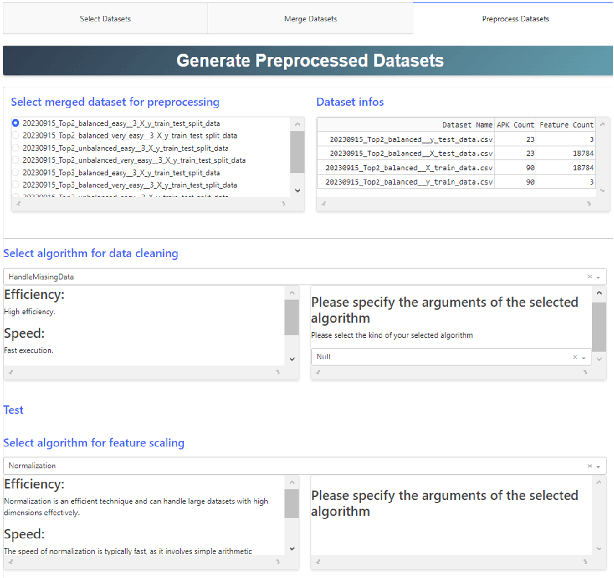

ALPACA -- Adaptive Learning Pipeline for Comprehensive AI

Dec 14, 2024

Abstract:The advancement of AI technologies has greatly increased the complexity of AI pipelines as they include many stages such as data collection, pre-processing, training, evaluation and visualisation. To provide effective and accessible AI solutions, it is important to design pipelines for different user groups such as experts, professionals from different fields and laypeople. Ease of use and trust play a central role in the acceptance of AI systems. The presented system, ALPACA (Adaptive Learning Pipeline for Advanced Comprehensive AI Analysis), offers a comprehensive AI pipeline that addresses the needs of diverse user groups. ALPACA integrates visual and code-based development and facilitates all key phases of the AI pipeline. Its architecture is based on Celery (with Redis backend) for efficient task management, MongoDB for seamless data storage and Kubernetes for cloud-based scalability and resource utilisation. Future versions of ALPACA will support modern techniques such as federated and continuous learning as well as explainable AI methods to further improve security, usability and trustworthiness. The application is demonstrated by an Android app for similarity recognition, which emphasises ALPACA's potential for use in everyday life.

LaFAM: Unsupervised Feature Attribution with Label-free Activation Maps

Jul 09, 2024

Abstract:Convolutional Neural Networks (CNNs) are known for their ability to learn hierarchical structures, naturally developing detectors for objects and semantic concepts within their deeper layers. Activation maps (AMs) reveal these saliency regions, which are crucial for many Explainable AI (XAI) methods. However, the direct exploitation of raw AMs in CNNs for feature attribution remains underexplored in the literature. This work revises Class Activation Map (CAM) methods by introducing the Label-free Activation Map (LaFAM), a streamlined approach utilizing raw AMs for feature attribution without reliance on labels. LaFAM presents an efficient alternative to conventional CAM methods, demonstrating particular effectiveness in saliency map generation for self-supervised learning while maintaining applicability in supervised learning scenarios.

Cost-Sensitive Uncertainty-Based Failure Recognition for Object Detection

Apr 26, 2024

Abstract:Object detectors in real-world applications often fail to detect objects due to varying factors such as weather conditions and noisy input. Therefore, a process that mitigates false detections is crucial for both safety and accuracy. While uncertainty-based thresholding shows promise, previous works demonstrate an imperfect correlation between uncertainty and detection errors. This hinders ideal thresholding, prompting us to further investigate the correlation and associated cost with different types of uncertainty. We therefore propose a cost-sensitive framework for object detection tailored to user-defined budgets on the two types of errors, missing and false detections. We derive minimum thresholding requirements to prevent performance degradation and define metrics to assess the applicability of uncertainty for failure recognition. Furthermore, we automate and optimize the thresholding process to maximize the failure recognition rate w.r.t. the specified budget. Evaluation on three autonomous driving datasets demonstrates that our approach significantly enhances safety, particularly in challenging scenarios. Leveraging localization aleatoric uncertainty and softmax-based entropy only, our method boosts the failure recognition rate by 36-60% compared to conventional approaches. Code is available at https://mos-ks.github.io/publications.
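
To illustrate the softmax-based entropy and uncertainty thresholding mentioned in this abstract, the following minimal Python sketch computes the entropy of each detection's class distribution and flags detections whose entropy exceeds a threshold. The function names and the threshold value are hypothetical and chosen for illustration only; the sketch does not reproduce the authors' cost-sensitive framework or their automated threshold selection.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of class logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_entropy(logits):
    """Shannon entropy (in nats) of the softmax class distribution."""
    probs = softmax(logits)
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def flag_uncertain(detection_logits, threshold):
    """Return True for each detection whose entropy exceeds the threshold.

    `threshold` is a hypothetical, user-chosen value, not one from the paper.
    """
    return [softmax_entropy(logits) > threshold for logits in detection_logits]


# One confident detection and one maximally uncertain detection.
flags = flag_uncertain([[10.0, 0.0, 0.0], [0.0, 0.0, 0.0]], threshold=0.5)
```

In this sketch a single fixed threshold is applied to every detection; choosing it with respect to a budget on missing and false detections is the part the abstract attributes to the proposed framework.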

Overcoming the Limitations of Localization Uncertainty: Efficient & Exact Non-Linear Post-Processing and Calibration

Jun 15, 2023

Abstract:Robustly and accurately localizing objects in real-world environments can be challenging due to noisy data, hardware limitations, and the inherent randomness of physical systems. To account for these factors, existing works estimate the aleatoric uncertainty of object detectors by modeling their localization output as a Gaussian distribution $\mathcal{N}(\mu,\,\sigma^{2})$, and training with loss attenuation. We identify three aspects that are unaddressed in the state of the art, but warrant further exploration: (1) the efficient and mathematically sound propagation of $\mathcal{N}(\mu,\,\sigma^{2})$ through non-linear post-processing, (2) the calibration of the predicted uncertainty, and (3) its interpretation. We overcome these limitations by: (1) implementing loss attenuation in EfficientDet, and proposing two deterministic methods for the exact and fast propagation of the output distribution, (2) demonstrating on the KITTI and BDD100K datasets that the predicted uncertainty is miscalibrated, and adapting two calibration methods to the localization task, and (3) investigating the correlation between aleatoric uncertainty and task-relevant error sources. Our contributions are: (1) up to five times faster propagation while increasing localization performance by up to 1%, (2) up to fifteen times smaller expected calibration error, and (3) the predicted uncertainty is found to correlate with occlusion, object distance, detection accuracy, and image quality.
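
One textbook case in which a Gaussian can be propagated through a non-linearity in closed form is the exponential function: if $X \sim \mathcal{N}(\mu,\,\sigma^{2})$, then $\exp(X)$ is log-normally distributed with known mean and variance. The Python sketch below illustrates only this identity. That the exponential is the relevant post-processing step, and the function name itself, are assumptions made for illustration; this is not a description of the two propagation methods proposed in the paper.

```python
import math


def exp_of_gaussian_moments(mu, sigma):
    """Exact mean and variance of exp(X) for X ~ N(mu, sigma^2).

    Uses the standard log-normal moment formulas. The choice of exp as the
    non-linearity is an illustrative assumption, not taken from the paper.
    """
    var = sigma ** 2
    mean_out = math.exp(mu + var / 2.0)
    var_out = (math.exp(var) - 1.0) * math.exp(2.0 * mu + var)
    return mean_out, var_out


# Deterministic evaluation: no sampling is needed for this non-linearity.
mean_out, var_out = exp_of_gaussian_moments(0.0, 1.0)
```

Because the moments are available in closed form, no Monte Carlo sampling is required in this special case, which is the kind of saving a deterministic propagation scheme aims for.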

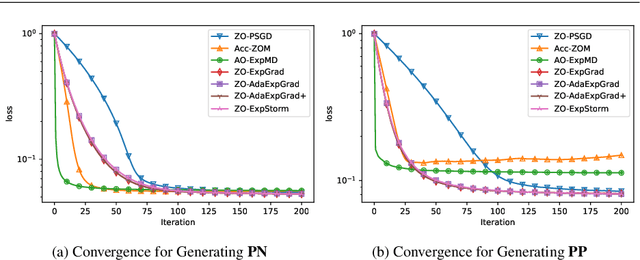

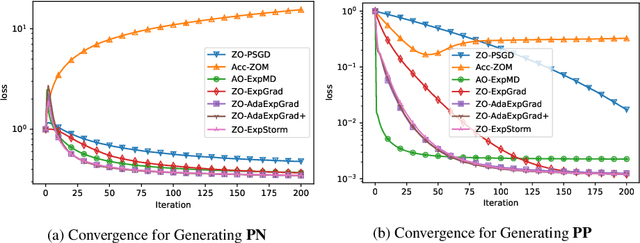

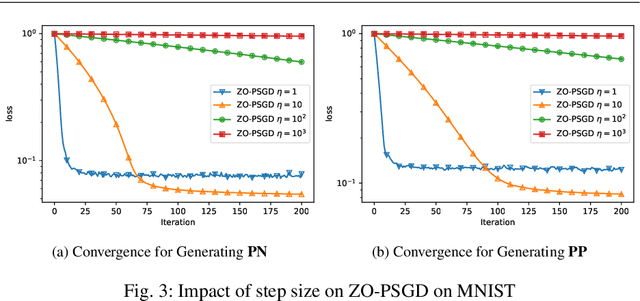

Adaptive Stochastic Optimisation of Nonconvex Composite Objectives

Nov 21, 2022

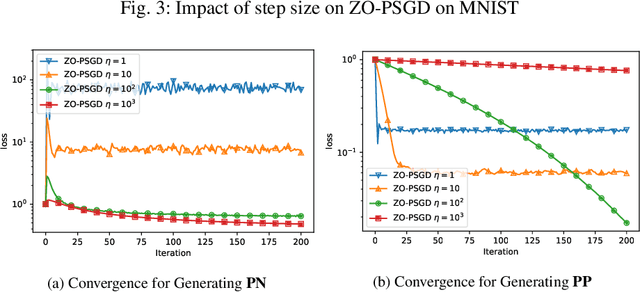

Abstract:In this paper, we propose and analyse a family of generalised stochastic composite mirror descent algorithms. With adaptive step sizes, the proposed algorithms converge without requiring prior knowledge of the problem. Combined with an entropy-like update-generating function, these algorithms perform gradient descent in the space equipped with the maximum norm, which allows us to exploit the low-dimensional structure of the decision sets for high-dimensional problems. Together with a sampling method based on the Rademacher distribution and variance reduction techniques, the proposed algorithms guarantee a logarithmic complexity dependence on dimensionality for zeroth-order optimisation problems.
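
As a rough illustration of how Rademacher sampling can be used in zeroth-order optimisation, the following Python sketch builds a two-point gradient estimate from function evaluations along a random direction with entries in {-1, +1}. The two-point form, the function names, and the smoothing parameter `delta` are assumptions chosen for illustration; the sketch does not reproduce the paper's algorithms, their adaptive step sizes, or their variance reduction techniques.

```python
import random


def rademacher_direction(dim, rng):
    """Sample a vector whose entries are independently -1 or +1."""
    return [rng.choice((-1.0, 1.0)) for _ in range(dim)]


def zeroth_order_gradient(f, x, delta, rng):
    """Two-point gradient estimate of f at x using only function values.

    Hypothetical estimator for illustration: the directional finite
    difference along a Rademacher vector u, scaled back onto u.
    """
    u = rademacher_direction(len(x), rng)
    x_plus = [xi + delta * ui for xi, ui in zip(x, u)]
    x_minus = [xi - delta * ui for xi, ui in zip(x, u)]
    scale = (f(x_plus) - f(x_minus)) / (2.0 * delta)
    return [scale * ui for ui in u]


# One-dimensional check on f(x) = x^2, whose derivative at x = 3 is 6.
rng = random.Random(0)
estimate = zeroth_order_gradient(lambda v: v[0] ** 2, [3.0], 1e-3, rng)
```

In one dimension the estimate is exact for a quadratic regardless of the sampled sign; in higher dimensions a single sample is noisy and would typically be averaged over several directions.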
