Nanfang Yu

Nano-3D: Metasurface-Based Neural Depth Imaging

Mar 20, 2025

Abstract: Depth imaging is a foundational building block for a broad range of applications, such as autonomous driving and virtual/augmented reality. Traditionally, depth cameras have relied on time-of-flight sensors or multi-lens systems to obtain physical depth measurements. However, these systems often face a trade-off between a bulky form factor and imprecise approximations, which limits their suitability for spatially constrained scenarios. Inspired by emerging advances in nano-optics, we present Nano-3D, a metasurface-based neural depth imaging solution with an ultra-compact footprint. Nano-3D integrates our custom-fabricated, 700-nm-thick TiO2 metasurface with a multi-module deep neural network to extract precise metric depth information from monocular metasurface-polarized imagery. We demonstrate the effectiveness of Nano-3D with both simulated and physical experiments. We hope this success paves the way for the community to bridge future graphics systems with emerging nanomaterial technologies through novel computational approaches.

Compute-first optical detection for noise-resilient visual perception

Mar 14, 2024

Abstract: In visual perception, detectors transfer the optical signal from a scene into the electronic domain as image data, which are then processed to extract visual information. In noisy, weak-signal environments such as thermal imaging for night vision, however, the performance of neural computing tasks faces a significant bottleneck because data quality inherently degrades upon noisy detection. Here, we propose the concept of processing optical signals before detection to address this issue. We demonstrate that spatially redistributing optical signals through a properly designed linear transformer can enhance the detection noise resilience of visual perception tasks, as benchmarked on MNIST classification. Our idea is supported by a quantitative analysis of the relationship between signal concentration and noise robustness, as well as by its practical implementation in an incoherent imaging system. This compute-first detection scheme can pave the way for advancing infrared machine vision technologies widely used in industrial and defense applications.
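The link between signal concentration and noise robustness can be illustrated with a toy model. This is a minimal sketch, not the authors' transformer design or noise analysis. It assumes that every physical detector adds independent Gaussian read noise of standard deviation `sigma`. It then compares applying the same linear map `W` optically (before detection) with applying it digitally (after detection). Under that assumption, a compute-first output carries noise variance `sigma**2`. A detect-first output instead carries `sigma**2` times the squared norm of its row of `W`. For a row that pools N pixels with unit weights, the SNR gain is therefore sqrt(N). The function name `output_snr` and all parameter values are hypothetical.

```python
import numpy as np


def output_snr(x, W, sigma, compute_first, trials=20000, seed=0):
    """Monte Carlo SNR of each output of the linear map W applied to image x.

    Toy model (assumption): every physical detector adds independent
    Gaussian read noise with standard deviation `sigma`.
    compute_first=True  -> W acts optically, then K detectors read y = W x + m.
    compute_first=False -> N detectors read x + n, then y = W (x + n) digitally.
    """
    rng = np.random.default_rng(seed)
    clean = W @ x  # noiseless outputs, shape (K,)
    if compute_first:
        # Only K detectors (one per output), each adding its own noise.
        noise = rng.normal(0.0, sigma, size=(trials, W.shape[0]))
    else:
        # N pixel detectors add noise, which W then mixes digitally.
        pixel_noise = rng.normal(0.0, sigma, size=(trials, W.shape[1]))
        noise = pixel_noise @ W.T
    y = clean + noise  # shape (trials, K)
    return y.mean(axis=0) / y.std(axis=0)


# Example: a weak, uniform 64-pixel signal pooled into a single output.
# The all-ones row is passive for incoherent light: each pixel's power is
# routed to one detector, so every column of W sums to 1.
n_pixels = 64
x = np.full(n_pixels, 0.1)
W = np.ones((1, n_pixels))
snr_compute_first = output_snr(x, W, sigma=1.0, compute_first=True)[0]
snr_detect_first = output_snr(x, W, sigma=1.0, compute_first=False)[0]
print(snr_compute_first / snr_detect_first)  # close to sqrt(64) = 8
```

In this toy setting, the compute-first SNR is about 6.4 and the detect-first SNR is about 0.8. Concentrating the same optical power onto fewer detectors means it encounters fewer independent noise sources.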

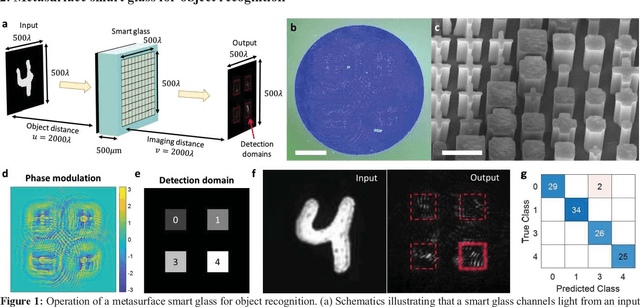

Metasurface Smart Glass for Object Recognition

Oct 15, 2022

Abstract: Recent years have seen a considerable surge of research on heuristic approaches to realizing analog computing with physical waves. Among these, neuromorphic computing using light waves is envisioned to exceed conventional digital techniques by many orders of magnitude in performance metrics such as computational speed and energy efficiency. Yet photonic neuromorphic computing remains challenging because it is difficult to train and manufacture sophisticated photonic structures that support neural networks with adequate expressive power. Here, we realize a diffractive optical neural network (ONN) based on metasurfaces that recognizes objects by directly processing the light waves scattered from them. Metasurfaces composed of a two-dimensional array of millions of meta-units can precisely control the optical wavefront with subwavelength resolution; thus, when used as the constituent layers of an ONN, they can provide exceptionally high expressive power. We experimentally demonstrate ONNs based on single-layer metasurfaces that modulate the phase and polarization of the optical wavefront to recognize optically coherent binary objects, including handwritten digits and letters of the English alphabet. We further demonstrate, in simulation, ONNs based on metasurface doublets for human facial verification. The advantageous traits of metasurface-based ONNs, including ultra-compact form factors, zero power consumption, ultra-fast and parallel data processing, and physics-guaranteed data security, make them suitable as "edge" perception devices that can transform the future of image collection and analysis.
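A diffractive ONN layer of this kind can be sketched as a forward pass. The coherent field from a binary object is multiplied by a phase mask, propagated through free space, and then detected as intensity summed over one region per class. This is a minimal scalar, phase-only sketch under stated assumptions. It ignores the polarization modulation the authors' metasurfaces provide and omits training. It also places the object directly at the mask plane and uses the standard angular spectrum method for propagation. The function names, grid size, wavelength, pixel pitch, distance, and detector layout are all hypothetical.

```python
import numpy as np


def angular_spectrum_propagate(field, wavelength, pitch, distance):
    """Propagate a square, sampled scalar field by `distance` (angular spectrum method)."""
    n = field.shape[0]
    fx = np.fft.fftfreq(n, d=pitch)  # spatial frequencies (cycles per unit length)
    FX, FY = np.meshgrid(fx, fx, indexing="ij")
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    # Keep propagating plane waves; drop evanescent components (arg <= 0).
    transfer = np.where(arg > 0, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


def onn_forward(obj, phase_mask, regions, wavelength, pitch, distance):
    """One phase-only diffractive layer, free-space propagation, intensity readout.

    obj        : transmittance of a coherently illuminated object (assumed at the mask plane)
    phase_mask : per-pixel phase in radians (hypothetical; would be learned in training)
    regions    : list of (row_slice, col_slice) detector regions, one per class
    Returns the detected power per region and the index of the brightest region.
    """
    field = obj.astype(complex) * np.exp(1j * phase_mask)
    out = angular_spectrum_propagate(field, wavelength, pitch, distance)
    intensity = np.abs(out) ** 2
    scores = np.array([intensity[r].sum() for r in regions])
    return scores, int(np.argmax(scores))
```

Training would adjust `phase_mask`, for example by gradient descent in an autodiff framework, so that each class concentrates power in its own detector region. In this simplification, each mask pixel stands in for one meta-unit.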
