Bin Guo

MeCo: Enhancing LLM-Empowered Multi-Robot Collaboration via Similar Task Memoization

Jan 28, 2026

Abstract: Multi-robot systems have been widely deployed in real-world applications, providing significant improvements in efficiency and reductions in labor costs. However, most existing multi-robot collaboration methods rely on extensive task-specific training, which limits their adaptability to new or diverse scenarios. Recent research leverages the language understanding and reasoning capabilities of large language models (LLMs) to enable more flexible collaboration without specialized training. Yet, current LLM-empowered approaches remain inefficient: when confronted with identical or similar tasks, they must replan from scratch because they ignore task-level similarities. To address this limitation, we propose MeCo, a similarity-aware multi-robot collaboration framework that applies the principle of "cache and reuse" (a.k.a. memoization) to reduce redundant computation. Unlike simple task repetition, identifying and reusing solutions for similar but not identical tasks is far more challenging, particularly in multi-robot settings. To this end, MeCo introduces a new similarity testing method that retrieves previously solved tasks with high relevance, enabling effective plan reuse without re-invoking LLMs. Furthermore, we present MeCoBench, the first benchmark designed to evaluate performance on similar-task collaboration scenarios. Experimental results show that MeCo substantially reduces planning costs and improves success rates compared with state-of-the-art approaches.
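The "cache and reuse" idea can be illustrated with a minimal sketch. This is not MeCo's similarity test, which the abstract does not specify; it assumes a plain bag-of-words cosine similarity, and the names `PlanCache`, `solve`, and the `threshold` parameter are hypothetical. The `planner` argument stands in for an expensive LLM planning call.

```python
import math
from collections import Counter


def bow(text):
    """Bag-of-words vector as a token -> count mapping (illustrative only)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


class PlanCache:
    """Hypothetical cache of solved tasks, looked up by similarity."""

    def __init__(self, threshold=0.8):
        self.threshold = threshold
        self.entries = []  # list of (task vector, plan)

    def store(self, task, plan):
        self.entries.append((bow(task), plan))

    def lookup(self, task):
        """Return the plan of the most similar stored task, or None."""
        query = bow(task)
        best_plan, best_score = None, 0.0
        for vec, plan in self.entries:
            score = cosine(query, vec)
            if score > best_score:
                best_plan, best_score = plan, score
        return best_plan if best_score >= self.threshold else None


def solve(task, cache, planner):
    """Reuse a cached plan when a similar task exists; otherwise plan and cache."""
    plan = cache.lookup(task)
    if plan is None:
        plan = planner(task)  # stand-in for the costly LLM call
        cache.store(task, plan)
    return plan
```

In this sketch a hit returns the stored plan unchanged. A practical system would likely need to adapt the retrieved plan to the differences between the two tasks, which the sketch does not attempt.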

Adaptive Multi-Stage Patent Claim Generation with Unified Quality Assessment

Jan 14, 2026

Abstract: Current patent claim generation systems face three fundamental limitations: poor cross-jurisdictional generalization, inadequate semantic relationship modeling between claims and prior art, and unreliable quality assessment. We introduce a novel three-stage framework that addresses these challenges through relationship-aware similarity analysis, domain-adaptive claim generation, and unified quality assessment. Our approach employs multi-head attention with eight specialized heads for explicit relationship modeling, integrates curriculum learning with dynamic LoRA adapter selection across five patent domains, and implements cross-attention mechanisms between evaluation aspects for comprehensive quality assessment. Extensive experiments on the USPTO HUPD dataset, EPO patent collections, and the Patent-CE benchmark demonstrate substantial improvements: a 7.6-point ROUGE-L gain over GPT-4o, an 8.3% BERTScore enhancement over Llama-3.1-8B, and a 0.847 correlation with human experts compared to 0.623 for separate evaluation models. Our method maintains 89.4% cross-jurisdictional performance retention versus 76.2% for baselines, establishing a comprehensive solution for automated patent prosecution workflows.

AG-MPBS: a Mobility-Aware Prediction and Behavior-Based Scheduling Framework for Air-Ground Unmanned Systems

Dec 18, 2025

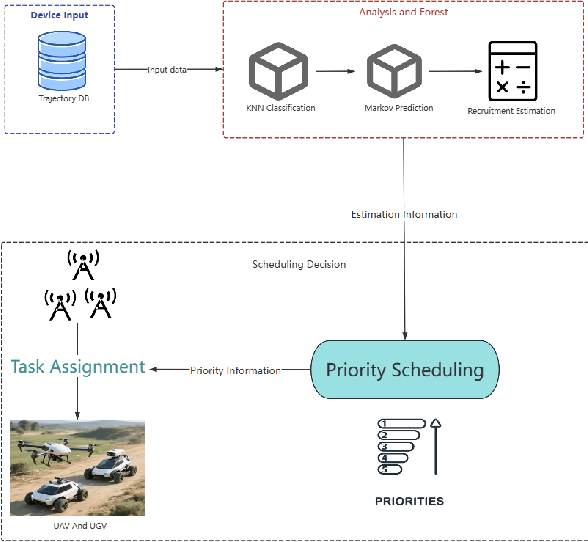

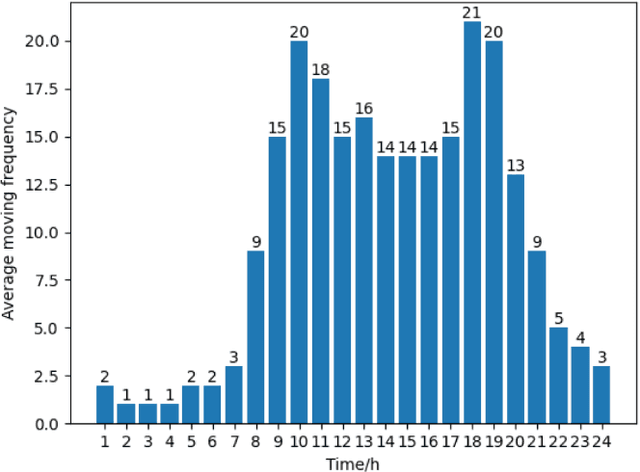

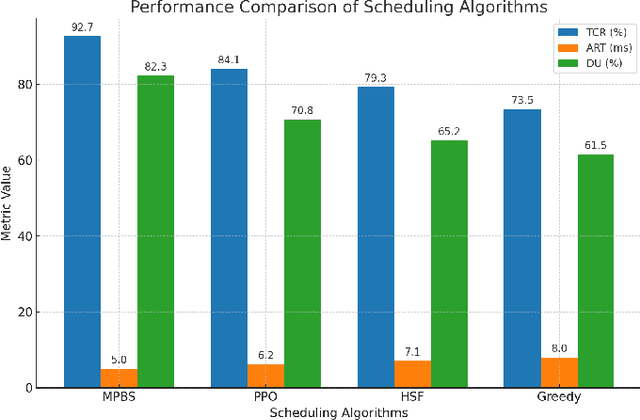

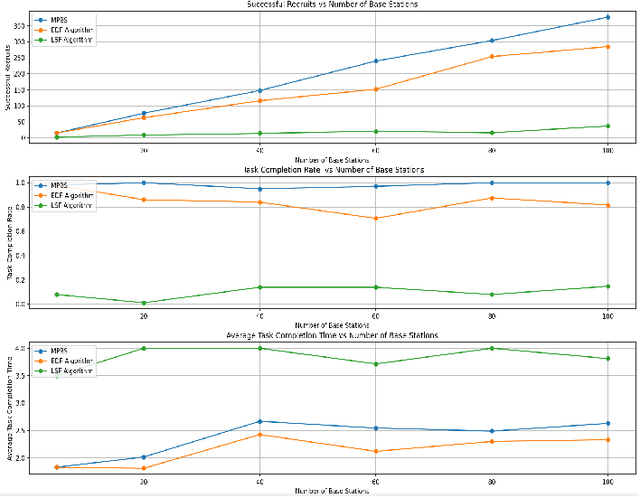

Abstract: As unmanned systems such as Unmanned Aerial Vehicles (UAVs) and Unmanned Ground Vehicles (UGVs) become increasingly important to applications like urban sensing and emergency response, efficiently recruiting these autonomous devices to perform time-sensitive tasks has become a critical challenge. This paper presents MPBS (Mobility-aware Prediction and Behavior-based Scheduling), a scalable task recruitment framework that treats each device as a recruitable "user". MPBS integrates three key modules: a behavior-aware KNN classifier, a time-varying Markov prediction model for forecasting device mobility, and a dynamic priority scheduling mechanism that considers task urgency and base station performance. By combining behavioral classification with spatiotemporal prediction, MPBS adaptively assigns tasks to the most suitable devices in real time. Experimental evaluations on the real-world GeoLife dataset show that MPBS significantly improves task completion efficiency and resource utilization. The proposed framework offers a predictive, behavior-aware solution for intelligent and collaborative scheduling in unmanned systems.
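As a rough illustration of time-varying Markov mobility prediction, the sketch below counts transitions between grid cells conditioned on a time slot and predicts the most frequent next cell. It is a generic first-order model under stated assumptions, not the MPBS implementation: the `(time_slot, cell)` trajectory encoding and the function names `fit_transitions` and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict


def fit_transitions(trajectories):
    """Count cell-to-cell transitions, keyed by (time_slot, current_cell).

    trajectories: list of sequences of (time_slot, cell) visits, in time order.
    """
    counts = defaultdict(Counter)
    for traj in trajectories:
        for (slot, cell), (_, nxt) in zip(traj, traj[1:]):
            counts[(slot, cell)][nxt] += 1
    return counts


def predict_next(counts, slot, cell):
    """Return (most likely next cell, estimated probability), or (None, 0.0)."""
    options = counts.get((slot, cell))
    if not options:
        return None, 0.0  # no history for this slot and cell
    nxt, n = options.most_common(1)[0]
    return nxt, n / sum(options.values())


# Hypothetical device histories: hour of day and visited cell.
history = [
    [(8, "A"), (9, "B"), (10, "C")],
    [(8, "A"), (9, "B")],
    [(8, "A"), (9, "C")],
    [(8, "A"), (9, "B")],
]
model = fit_transitions(history)
next_cell, prob = predict_next(model, 8, "A")
```

Conditioning the counts on the time slot is what makes the transition estimates vary over time; a scheduler could then prefer devices whose predicted next cell is close to the task location.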

Tri-Select: A Multi-Stage Visual Data Selection Framework for Mobile Visual Crowdsensing

Dec 18, 2025

Abstract: Mobile visual crowdsensing enables large-scale, fine-grained environmental monitoring through the collection of images from distributed mobile devices. However, the resulting data is often redundant and heterogeneous due to overlapping acquisition perspectives, varying resolutions, and diverse user behaviors. To address these challenges, this paper proposes Tri-Select, a multi-stage visual data selection framework that efficiently filters redundant and low-quality images. Tri-Select operates in three stages: (1) metadata-based filtering to discard irrelevant samples; (2) spatial similarity-based spectral clustering to organize candidate images; and (3) a visual-feature-guided selection based on maximum independent set search to retain high-quality, representative images. Experiments on real-world and public datasets demonstrate that Tri-Select improves both selection efficiency and dataset quality, making it well-suited for scalable crowdsensing applications.
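The third stage can be pictured as picking images from a graph whose edges connect visually similar images, so that no two selected images are neighbors. The sketch below uses a greedy heuristic that yields a maximal (not necessarily maximum) independent set and prefers higher-quality images. It is an assumption-laden illustration, not the Tri-Select search procedure; `select_representatives`, the quality scores, and the similarity edges are hypothetical inputs.

```python
def select_representatives(quality, similar_pairs):
    """Greedily pick mutually dissimilar images, best quality first.

    quality: dict mapping image id -> quality score (higher is better).
    similar_pairs: iterable of (a, b) pairs judged visually redundant.
    """
    neighbors = {img: set() for img in quality}
    for a, b in similar_pairs:
        neighbors[a].add(b)
        neighbors[b].add(a)

    selected, blocked = [], set()
    # Visit images by descending quality; ties are broken by id for determinism.
    for img in sorted(quality, key=lambda i: (-quality[i], i)):
        if img not in blocked:
            selected.append(img)
            blocked.add(img)
            blocked |= neighbors[img]  # redundant neighbors can no longer be chosen
    return selected


scores = {"a": 0.9, "b": 0.8, "c": 0.7, "d": 0.6}
edges = [("a", "b"), ("b", "c")]
kept = select_representatives(scores, edges)
```

Finding a true maximum independent set is NP-hard in general, which is why this illustration settles for a greedy pass.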

MemoCue: Empowering LLM-Based Agents for Human Memory Recall via Strategy-Guided Querying

Jul 31, 2025

Abstract: Agent-assisted memory recall is a critical research problem in the field of human-computer interaction. In conventional methods, the agent can retrieve information from its equipped memory module to help the person recall incomplete or vague memories. The limited size of the memory module hinders the acquisition of complete memories and impacts the memory recall performance in practice. Memory theories suggest that a person's relevant memory can be proactively activated through effective cues. Inspired by this, we propose a novel strategy-guided agent-assisted memory recall method, allowing the agent to transform an original query into a cue-rich one via a judiciously designed strategy to help the person recall memories. To this end, there are two key challenges. (1) How to choose the appropriate recall strategy for diverse forgetting scenarios with distinct memory-recall characteristics? (2) How to obtain high-quality responses by leveraging recall strategies, given only abstract and sparsely annotated strategy patterns? To address these challenges, we propose a Recall Router framework. Specifically, we design a 5W Recall Map to classify memory queries into five typical scenarios and define fifteen recall strategy patterns across the corresponding scenarios. We then propose a hierarchical recall tree combined with the Monte Carlo Tree Search algorithm to optimize the selection of strategy and the generation of strategy responses. We construct an instruction tuning dataset and fine-tune multiple open-source large language models (LLMs) to develop MemoCue, an agent that excels in providing memory-inspired responses. Experiments on three representative datasets show that MemoCue surpasses LLM-based methods by 17.74% in recall inspiration. Further human evaluation highlights its advantages in memory-recall applications.

DeepTraverse: A Depth-First Search Inspired Network for Algorithmic Visual Understanding

Jun 11, 2025

Abstract: Conventional vision backbones, despite their success, often construct features through a largely uniform cascade of operations, offering limited explicit pathways for adaptive, iterative refinement. This raises a compelling question: can principles from classical search algorithms instill a more algorithmic, structured, and logical processing flow within these networks, leading to representations built through more interpretable, perhaps reasoning-like decision processes? We introduce DeepTraverse, a novel vision architecture directly inspired by algorithmic search strategies, enabling it to learn features through a process of systematic elucidation and adaptive refinement distinct from conventional approaches. DeepTraverse operationalizes this via two key synergistic components: recursive exploration modules that methodically deepen feature analysis along promising representational paths with parameter sharing for efficiency, and adaptive calibration modules that dynamically adjust feature salience based on evolving global context. The resulting algorithmic interplay allows DeepTraverse to intelligently construct and refine feature patterns. Comprehensive evaluations across a diverse suite of image classification benchmarks show that DeepTraverse achieves highly competitive classification accuracy and robust feature discrimination, often outperforming conventional models with similar or larger parameter counts. Our work demonstrates that integrating such algorithmic priors provides a principled and effective strategy for building more efficient, performant, and structured vision backbones.

JointDistill: Adaptive Multi-Task Distillation for Joint Depth Estimation and Scene Segmentation

May 15, 2025

Abstract: Depth estimation and scene segmentation are two important tasks in intelligent transportation systems. Jointly modeling these two tasks reduces both storage and training requirements. This work explores how multi-task distillation can be used to improve such unified modeling. While existing solutions transfer multiple teachers' knowledge in a static way, we propose a self-adaptive distillation method that can dynamically adjust the amount of knowledge taken from each teacher according to the student's current learning ability. Furthermore, as multiple teachers exist, the student's gradient update direction during distillation is more prone to error, and knowledge forgetting may occur. To avoid this, we propose a knowledge trajectory to record the most essential information that a model has learnt in the past, based on which a trajectory-based distillation loss is designed to guide the student to follow a similar learning curve in a cost-effective way. We evaluate our method on multiple benchmarking datasets including Cityscapes and NYU-v2. Compared to the state-of-the-art solutions, our method achieves a clear improvement. The code is provided in the supplementary materials.

SURGEON: Memory-Adaptive Fully Test-Time Adaptation via Dynamic Activation Sparsity

Mar 26, 2025

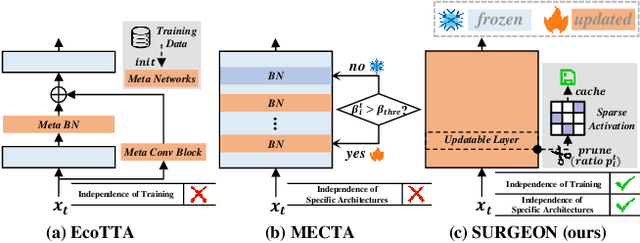

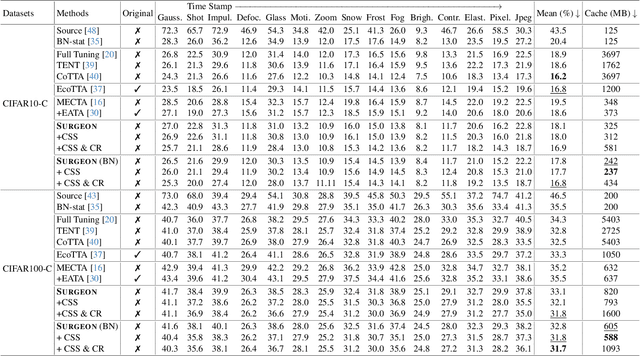

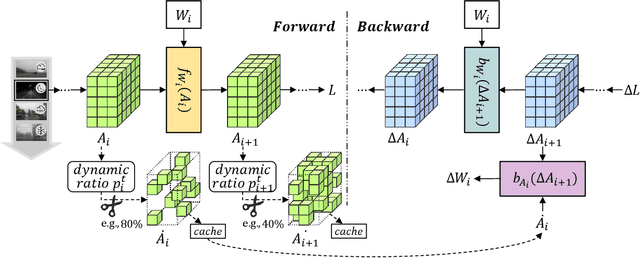

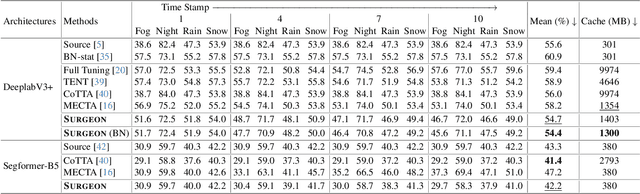

Abstract: Despite the growing integration of deep models into mobile terminals, the accuracy of these models declines significantly due to various deployment interferences. Test-time adaptation (TTA) has emerged to improve the performance of deep models by adapting them to unlabeled target data online. Yet, the significant memory cost, particularly in resource-constrained terminals, impedes the effective deployment of most backward-propagation-based TTA methods. To tackle memory constraints, we introduce SURGEON, a method that substantially reduces memory cost while preserving comparable accuracy improvements during fully test-time adaptation (FTTA) without relying on specific network architectures or modifications to the original training procedure. Specifically, we propose a novel dynamic activation sparsity strategy that directly prunes activations at layer-specific dynamic ratios during adaptation, allowing for flexible control of learning ability and memory cost in a data-sensitive manner. Two metrics, Gradient Importance and Layer Activation Memory, are used to determine the layer-wise pruning ratios, reflecting accuracy contribution and memory efficiency, respectively. Experimentally, our method surpasses the baselines by not only reducing memory usage but also achieving superior accuracy, delivering SOTA performance across diverse datasets, architectures, and tasks.
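To make "pruning activations at layer-specific ratios" concrete, the sketch below zeroes the smallest-magnitude entries of each layer's cached activations according to a per-layer ratio. It assumes magnitude-based selection and takes the ratios as given; how SURGEON derives them from Gradient Importance and Layer Activation Memory is not described in the abstract and is not modeled here. The names `prune_activations`, `prune_layers`, and the example ratios are hypothetical.

```python
def prune_activations(activations, ratio):
    """Zero out the `ratio` fraction of entries with the smallest magnitude."""
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be in [0, 1]")
    n_prune = int(len(activations) * ratio)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(activations)), key=lambda i: abs(activations[i]))
    drop = set(order[:n_prune])
    return [0.0 if i in drop else a for i, a in enumerate(activations)]


def prune_layers(layer_activations, layer_ratios):
    """Apply a separate pruning ratio to each layer's activations."""
    return {
        name: prune_activations(acts, layer_ratios[name])
        for name, acts in layer_activations.items()
    }


# Hypothetical cached activations and per-layer ratios.
cached = {"conv1": [0.1, -2.0, 0.5, 3.0], "fc": [1.0, -0.2]}
ratios = {"conv1": 0.5, "fc": 0.0}
sparse = prune_layers(cached, ratios)
```

Zeroing entries only saves memory if the pruned activations are then stored in a sparse or compressed form for the backward pass; the dense lists here are purely for readability.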

CrowdHMTware: A Cross-level Co-adaptation Middleware for Context-aware Mobile DL Deployment

Mar 06, 2025

Abstract: There are many deep learning (DL) powered mobile and wearable applications today continuously and unobtrusively sensing the ambient surroundings to enhance all aspects of human lives. To enable robust and private mobile sensing, DL models are often deployed locally on resource-constrained mobile devices using techniques such as model compression or offloading. However, existing methods, either at the front-end algorithm level (i.e., DL model compression/partitioning) or at the back-end scheduling level (i.e., operator/resource scheduling), cannot operate locally online because they require offline retraining to ensure accuracy or rely on manually pre-defined strategies, and so struggle with dynamic adaptability. The primary challenge lies in feeding back runtime performance from the back-end level to the front-end level optimization decision. Moreover, adaptive mobile DL model porting middleware with cross-level co-adaptation is less explored, particularly in mobile environments with diversity and dynamics. In response, we introduce CrowdHMTware, a dynamic context-adaptive DL model deployment middleware for heterogeneous mobile devices. It establishes an automated adaptation loop between cross-level functional components, i.e., elastic inference, scalable offloading, and model-adaptive engine, enhancing scalability and adaptability. Experiments with four typical tasks across 15 platforms and a real-world case study demonstrate that CrowdHMTware can effectively scale DL model, offloading, and engine actions across diverse platforms and tasks. It hides run-time system issues from developers, reducing the required developer expertise.

Orchestrating Joint Offloading and Scheduling for Low-Latency Edge SLAM

Feb 23, 2025

Abstract: Visual Simultaneous Localization and Mapping (vSLAM) is a prevailing technology for many emerging robotic applications. Achieving real-time SLAM on mobile robotic systems with limited computational resources is challenging because the complexity of SLAM algorithms increases over time. This restriction can be lifted by offloading computations to edge servers, forming the emerging paradigm of edge-assisted SLAM. Nevertheless, the exogenous and stochastic input processes affect the dynamics of the edge-assisted SLAM system. Moreover, the requirements of clients on SLAM metrics change over time, exerting implicit and time-varying effects on the system. In this paper, we aim to push the limit beyond existing edge-assisted SLAM by proposing a new architecture that can handle the input-driven processes and also satisfy clients' implicit and time-varying requirements. The key innovations of our work involve a regional feature prediction method for importance-aware local data processing, a configuration adaptation policy that integrates data compression/decompression and task offloading, and an input-dependent learning framework for task scheduling with constraint satisfaction. Extensive experiments show that our architecture improves pose estimation accuracy and saves up to 47% of communication costs compared with a popular edge-assisted SLAM system, while effectively satisfying the clients' requirements.
