Jingwei Li

Hallucination is a Consequence of Space-Optimality: A Rate-Distortion Theorem for Membership Testing

Feb 03, 2026

Abstract: Large language models often hallucinate with high confidence on "random facts" that lack inferable patterns. We formalize the memorization of such facts as a membership testing problem, unifying the discrete error metrics of Bloom filters with the continuous log-loss of LLMs. By analyzing this problem in the regime where facts are sparse in the universe of plausible claims, we establish a rate-distortion theorem: the optimal memory efficiency is characterized by the minimum KL divergence between score distributions on facts and non-facts. This theoretical framework provides a distinctive explanation for hallucination: even with optimal training, perfect data, and a simplified "closed world" setting, the information-theoretically optimal strategy under limited capacity is not to abstain or forget, but to assign high confidence to some non-facts, resulting in hallucination. We validate this theory empirically on synthetic data, showing that hallucinations persist as a natural consequence of lossy compression.
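The membership-testing view can be illustrated with a classic Bloom filter, the discrete baseline the abstract refers to. The sketch below is not the paper's construction: the class `BloomFilter`, the sizes (`num_bits=1000`, `num_hashes=3`), and the synthetic `facts` and `non_facts` strings are all assumptions chosen for illustration. It shows that a filter with limited memory never forgets a stored fact, yet answers "member" with full confidence for some items it never saw.

```python
import hashlib


class BloomFilter:
    """Minimal Bloom filter (illustrative; sizes are arbitrary assumptions)."""

    def __init__(self, num_bits, num_hashes):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = [False] * num_bits

    def _positions(self, item):
        # Derive num_hashes bit positions by salting SHA-256 with an index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item):
        for pos in self._positions(item):
            self.bits[pos] = True

    def __contains__(self, item):
        # True if every position is set: certain for stored items,
        # possibly a false positive for items that were never stored.
        return all(self.bits[pos] for pos in self._positions(item))


# Hypothetical data: a few "facts" inside a larger universe of plausible claims.
facts = [f"fact-{i}" for i in range(200)]
non_facts = [f"claim-{i}" for i in range(5000)]

bf = BloomFilter(num_bits=1000, num_hashes=3)
for fact in facts:
    bf.add(fact)

false_negatives = sum(fact not in bf for fact in facts)
false_positives = sum(claim in bf for claim in non_facts)
print(f"false negatives: {false_negatives}")
print(f"false positives: {false_positives} of {len(non_facts)}")
```

Increasing `num_bits` lowers the false-positive count, which is the memory-versus-error trade-off that the abstract's rate-distortion analysis addresses in a more general, continuous-score setting.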

Kimi K2.5: Visual Agentic Intelligence

Feb 02, 2026

Abstract: We introduce Kimi K2.5, an open-source multimodal agentic model designed to advance general agentic intelligence. K2.5 emphasizes the joint optimization of text and vision so that the two modalities enhance each other. This includes a series of techniques such as joint text-vision pre-training, zero-vision SFT, and joint text-vision reinforcement learning. Building on this multimodal foundation, K2.5 introduces Agent Swarm, a self-directed parallel agent orchestration framework that dynamically decomposes complex tasks into heterogeneous sub-problems and executes them concurrently. Extensive evaluations show that Kimi K2.5 achieves state-of-the-art results across various domains including coding, vision, reasoning, and agentic tasks. Agent Swarm also reduces latency by up to $4.5\times$ over single-agent baselines. We release the post-trained Kimi K2.5 model checkpoint to facilitate future research and real-world applications of agentic intelligence.

Monaas: Mobile Node as a Service for TSCH-based Industrial IoT Networks

Jan 07, 2026

Abstract: The Time-Slotted Channel Hopping (TSCH) mode of the IEEE 802.15.4 standard provides ultra-high end-to-end reliability and low power consumption for Industrial Internet of Things (IIoT) applications. As Industry 4.0 evolves, two major challenges arise for network solutions: handling dynamic, bursty tasks with varied Quality of Service (QoS) requirements, and effectively managing and utilizing a growing number of mobile devices. Existing TSCH-based networks lack a system framework designed to handle these challenges. In this paper, we propose a novel, service-oriented, hierarchical IoT network architecture named Mobile Node as a Service (Monaas). Monaas aims to systematically manage and schedule mobile nodes as on-demand, elastic resources through a new architectural design and protocol mechanisms. Its core features include a hierarchical architecture to balance global coordination with local autonomy, task-driven scheduling for proactive resource allocation, and an on-demand mobile resource integration mechanism. The feasibility and potential of the Monaas link-layer mechanisms are validated through implementation and performance evaluation on an nRF52840 hardware testbed, demonstrating its potential advantages in specific scenarios. On this testbed, Monaas consistently achieved a Task Completion Rate (TCR) above 98% for high-priority tasks under bursty traffic and link degradation, whereas all representative baselines (Static TSCH, 6TiSCH Minimal, OST, FTS-SDN) remained below 40%. Moreover, its on-demand mobile resource integration activated services in 1.2 s, at least 65% faster than SDN (3.5 s) and OST/6TiSCH (> 5.8 s).

Real-Time Network Traffic Forecasting with Missing Data: A Generative Model Approach

Jun 11, 2025

Abstract: Real-time network traffic forecasting is crucial for network management and early resource allocation. Existing network traffic forecasting approaches operate under the assumption that the network traffic data is fully observed. However, in practical scenarios, the collected data are often incomplete due to various human and natural factors. In this paper, we propose a generative model approach for real-time network traffic forecasting with missing data. Firstly, we model the network traffic forecasting task as a tensor completion problem. Secondly, we incorporate a pre-trained generative model to achieve the low-rank structure commonly associated with tensor completion. The generative model effectively captures the intrinsic low-rank structure of network traffic data during pre-training and enables the mapping from a compact latent representation to the tensor space. Thirdly, rather than directly optimizing the high-dimensional tensor, we optimize its latent representation, which simplifies the optimization process and enables real-time forecasting. We also establish a theoretical recovery guarantee that quantifies the error bound of the proposed approach. Experiments on real-world datasets demonstrate that our approach achieves accurate network traffic forecasting within 100 ms, with a mean absolute error (MAE) below 0.002, as validated on the Abilene dataset.

MutualNeRF: Improve the Performance of NeRF under Limited Samples with Mutual Information Theory

May 16, 2025

Abstract: This paper introduces MutualNeRF, a framework enhancing Neural Radiance Field (NeRF) performance under limited samples using Mutual Information Theory. While NeRF excels in 3D scene synthesis, challenges arise with limited data, and existing methods that aim to introduce prior knowledge lack theoretical support in a unified framework. We introduce a simple but theoretically robust concept, Mutual Information, as a metric to uniformly measure the correlation between images, considering both macro (semantic) and micro (pixel) levels. For sparse view sampling, we strategically select additional viewpoints containing more non-overlapping scene information by minimizing mutual information without knowing ground truth images beforehand. Our framework employs a greedy algorithm, offering a near-optimal solution. For few-shot view synthesis, we maximize the mutual information between inferred images and ground truth, expecting inferred images to gain more relevant information from known images. This is achieved by incorporating efficient, plug-and-play regularization terms. Experiments under limited samples show consistent improvement over state-of-the-art baselines in different settings, affirming the efficacy of our framework.

Understanding Nonlinear Implicit Bias via Region Counts in Input Space

May 16, 2025

Abstract: One explanation for the strong generalization ability of neural networks is implicit bias. Yet, the definition and mechanism of implicit bias in nonlinear contexts remain poorly understood. In this work, we propose to characterize implicit bias by the count of connected regions in the input space with the same predicted label. Compared with parameter-dependent metrics (e.g., norm or normalized margin), region count can be better adapted to nonlinear, overparameterized models, because it is determined by the function mapping and is invariant to reparametrization. Empirically, we find that small region counts align with geometrically simple decision boundaries and correlate well with good generalization performance. We also observe that good hyper-parameter choices such as larger learning rates and smaller batch sizes can induce small region counts. We further establish the theoretical connections and explain how a larger learning rate can induce small region counts in neural networks.
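As a rough illustration of the region-count idea, the sketch below counts maximal runs of identical predicted labels along a straight segment in input space. Restricting the count to a one-dimensional segment, the sampling resolution `num_points`, and the two toy classifiers are assumptions made here for illustration; the abstract does not specify how the count is computed in practice.

```python
import math


def count_regions_on_segment(predict, x_start, x_end, num_points=1000):
    """Count runs of equal predicted labels along the segment x_start -> x_end.

    `predict` maps an input (list of floats) to a label. Sampling on a grid
    can miss regions narrower than the grid spacing.
    """
    regions = 0
    previous_label = None
    for i in range(num_points):
        t = i / (num_points - 1)
        x = [a + t * (b - a) for a, b in zip(x_start, x_end)]
        label = predict(x)
        if label != previous_label:
            regions += 1
            previous_label = label
    return regions


# Two toy classifiers on 2-D inputs (hypothetical, for illustration only).
def simple_classifier(x):
    return int(x[0] > 0)  # single linear decision boundary


def wiggly_classifier(x):
    return int(math.sin(3 * math.pi * x[0]) > 0)  # oscillating boundary


segment = ([-1.0, 0.0], [1.0, 0.0])
simple_count = count_regions_on_segment(simple_classifier, *segment)
wiggly_count = count_regions_on_segment(wiggly_classifier, *segment)
print(simple_count, wiggly_count)
```

Because the count depends only on the function `predict`, two networks that compute the same function yield the same value regardless of how their parameters are scaled, which is the reparametrization invariance the abstract highlights.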

DaYu: Data-Driven Model for Geostationary Satellite Observed Cloud Images Forecasting

Nov 15, 2024

Abstract: In the past few years, Artificial Intelligence (AI)-based weather forecasting methods have widely demonstrated strong competitiveness among weather forecasting systems. However, these methods are insufficient for high-spatial-resolution short-term nowcasting within 6 hours, which is crucial for warning of short-duration, mesoscale, and small-scale weather events. Geostationary satellite remote sensing provides detailed, all-day observations at high spatio-temporal resolution, which can address the above limitations of existing methods. Therefore, this paper proposes an advanced data-driven thermal infrared cloud image forecasting model, "DaYu." Unlike existing data-driven weather forecasting models, DaYu is specifically designed for geostationary satellite observations, with a temporal resolution of 0.5 hours and a spatial resolution of $0.05^\circ \times 0.05^\circ$. DaYu is based on a large-scale transformer architecture, which enables it to capture fine-grained cloud structures and learn fast-changing spatio-temporal evolution features effectively. Moreover, its attention mechanism design achieves a balance in computational complexity, making it practical for applications. DaYu not only achieves accurate forecasts, with a correlation coefficient higher than 0.9 up to 3 hours, higher than 0.8 up to 6 hours, and higher than 0.7 up to 12 hours, but also detects short-duration, mesoscale, and small-scale weather events with enhanced detail, effectively addressing the shortcomings of existing methods in providing detailed short-term nowcasting within 6 hours. Furthermore, DaYu has significant potential in short-term climate disaster prevention and mitigation.

ONSEP: A Novel Online Neural-Symbolic Framework for Event Prediction Based on Large Language Model

Aug 14, 2024

Abstract: In the realm of event prediction, temporal knowledge graph forecasting (TKGF) stands as a pivotal technique. Previous approaches face the challenges of not utilizing experience during testing and relying on a single short-term history, which limits adaptation to evolving data. In this paper, we introduce the Online Neural-Symbolic Event Prediction (ONSEP) framework, which innovates by integrating dynamic causal rule mining (DCRM) and dual history augmented generation (DHAG). DCRM dynamically constructs causal rules from real-time data, allowing for swift adaptation to new causal relationships. In parallel, DHAG merges short-term and long-term historical contexts, leveraging a bi-branch approach to enrich event prediction. Our framework demonstrates notable performance enhancements across diverse datasets, with significant Hit@k (k=1,3,10) improvements, showcasing its ability to augment large language models (LLMs) for event prediction without necessitating extensive retraining. The ONSEP framework not only advances the field of TKGF but also underscores the potential of neural-symbolic approaches in adapting to dynamic data environments.
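For readers unfamiliar with the Hit@k metric mentioned above, a minimal sketch of the standard definition follows: the fraction of queries whose correct answer appears among the top k ranked candidates. The function name `hits_at_k` and the toy rankings are assumptions for illustration and are not taken from the ONSEP evaluation code.

```python
def hits_at_k(ranked_predictions, gold_answers, k):
    """Fraction of queries whose gold answer is in the top-k predictions."""
    if len(ranked_predictions) != len(gold_answers):
        raise ValueError("need exactly one gold answer per ranked list")
    hits = sum(
        gold in ranked[:k]
        for ranked, gold in zip(ranked_predictions, gold_answers)
    )
    return hits / len(gold_answers)


# Hypothetical ranked candidate entities for three queries; gold is "a" each time.
ranked = [["a", "b", "c"], ["b", "a", "c"], ["c", "b", "a"]]
gold = ["a", "a", "a"]

for k in (1, 2, 3):
    print(f"Hit@{k} = {hits_at_k(ranked, gold, k):.3f}")
```

Hit@k is non-decreasing in k, so reporting it at k=1, 3, and 10 shows both how often the top guess is right and how often the answer is at least near the top.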

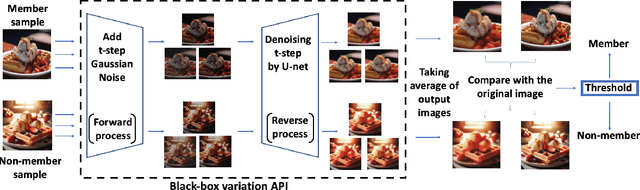

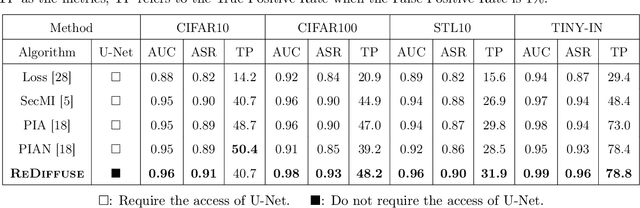

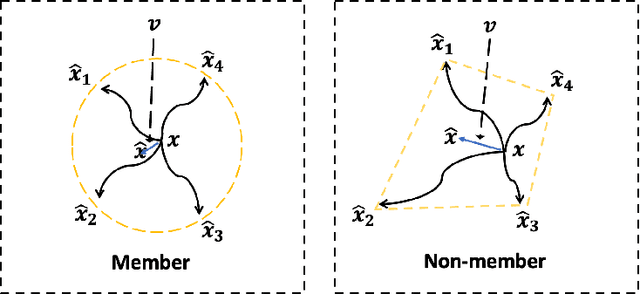

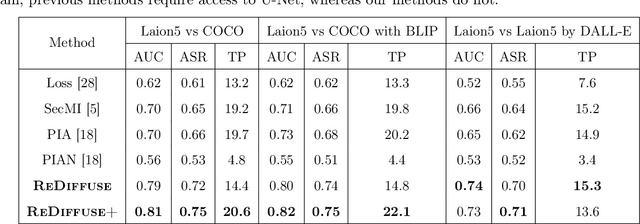

Towards Black-Box Membership Inference Attack for Diffusion Models

May 25, 2024

Abstract: Identifying whether an artwork was used to train a diffusion model is an important research topic, given the rising popularity of AI-generated art and the associated copyright concerns. This work approaches the problem from the membership inference attack (MIA) perspective. We first identify the limitations of applying existing MIA methods for copyright protection: the required access to internal U-Nets and the choice of non-member datasets for evaluation. To address these problems, we introduce a novel black-box membership inference attack method that operates without needing access to the model's internal U-Net. We then construct a DALL-E generated dataset for a more comprehensive evaluation. We validate our method across various setups, and our experimental results outperform previous works.

Iteratively Learn Diverse Strategies with State Distance Information

Oct 23, 2023

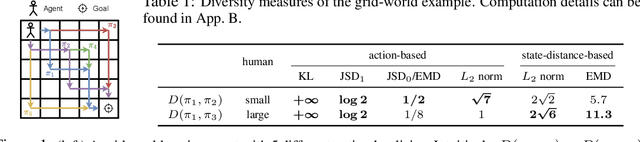

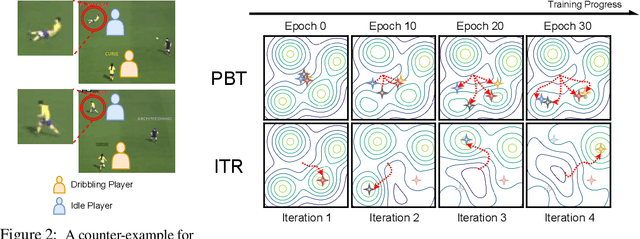

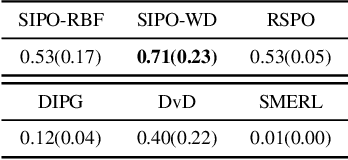

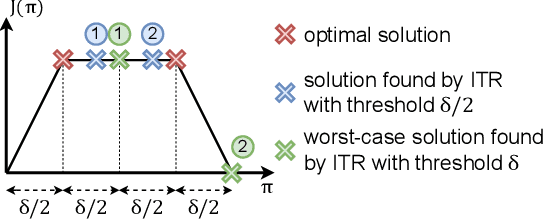

Abstract: In complex reinforcement learning (RL) problems, policies with similar rewards may have substantially different behaviors. It remains a fundamental challenge to optimize rewards while also discovering as many diverse strategies as possible, which can be crucial in many practical applications. Our study examines two design choices for tackling this challenge, i.e., diversity measure and computation framework. First, we find that with existing diversity measures, visually indistinguishable policies can still yield high diversity scores. To accurately capture the behavioral difference, we propose to incorporate the state-space distance information into the diversity measure. In addition, we examine two common computation frameworks for this problem, i.e., population-based training (PBT) and iterative learning (ITR). We show that although PBT is the precise problem formulation, ITR can achieve comparable diversity scores with higher computational efficiency, leading to improved solution quality in practice. Based on our analysis, we further combine ITR with two tractable realizations of the state-distance-based diversity measures and develop a novel diversity-driven RL algorithm, State-based Intrinsic-reward Policy Optimization (SIPO), with provable convergence properties. We empirically examine SIPO across three domains from robot locomotion to multi-agent games. In all of our testing environments, SIPO consistently produces strategically diverse and human-interpretable policies that cannot be discovered by existing baselines.
