Jing Du

SGS-3D: High-Fidelity 3D Instance Segmentation via Reliable Semantic Mask Splitting and Growing

Sep 05, 2025

Abstract:Accurate 3D instance segmentation is crucial for high-quality scene understanding in the 3D vision domain. However, 3D instance segmentation based on 2D-to-3D lifting approaches struggles to produce precise instance-level segmentation, due to accumulated errors introduced during the lifting process from ambiguous semantic guidance and insufficient depth constraints. To tackle these challenges, we propose splitting and growing reliable semantic mask for high-fidelity 3D instance segmentation (SGS-3D), a novel "split-then-grow" framework that first purifies and splits ambiguous lifted masks using geometric primitives, and then grows them into complete instances within the scene. Unlike existing approaches that directly rely on raw lifted masks and sacrifice segmentation accuracy, SGS-3D serves as a training-free refinement method that jointly fuses semantic and geometric information, enabling effective cooperation between the two levels of representation. Specifically, for semantic guidance, we introduce a mask filtering strategy that leverages the co-occurrence of 3D geometry primitives to identify and remove ambiguous masks, thereby ensuring more reliable semantic consistency with the 3D object instances. For the geometric refinement, we construct fine-grained object instances by exploiting both spatial continuity and high-level features, particularly in the case of semantic ambiguity between distinct objects. Experimental results on ScanNet200, ScanNet++, and KITTI-360 demonstrate that SGS-3D substantially improves segmentation accuracy and robustness against inaccurate masks from pre-trained models, yielding high-fidelity object instances while maintaining strong generalization across diverse indoor and outdoor environments. Code is available in the supplementary materials.

Explicit and Implicit Data Augmentation for Social Event Detection

Sep 04, 2025

Abstract:Social event detection involves identifying and categorizing important events from social media. It relies on labeled data, but annotation is costly and labor-intensive. To address this problem, we propose the Augmentation framework for Social Event Detection (SED-Aug), a plug-and-play dual augmentation framework that combines explicit text-based and implicit feature-space augmentation to enhance data diversity and model robustness. The explicit augmentation utilizes large language models to enhance textual information through five diverse generation strategies. For implicit augmentation, we design five novel perturbation techniques that operate in the feature space on structural fused embeddings. These perturbations are crafted to preserve the semantic and relational properties of the embeddings while making them more diverse. Specifically, SED-Aug outperforms the best baseline model by approximately 17.67% on the Twitter2012 dataset and by about 15.57% on the Twitter2018 dataset in terms of the average F1 score. The code is available at GitHub: https://github.com/congboma/SED-Aug.

A Probabilistic Framework for Imputing Genetic Distances in Spatiotemporal Pathogen Models

Jun 10, 2025

Abstract:Pathogen genome data offers valuable structure for spatial models, but its utility is limited by incomplete sequencing coverage. We propose a probabilistic framework for inferring genetic distances between unsequenced cases and known sequences within defined transmission chains, using time-aware evolutionary distance modeling. The method estimates pairwise divergence from collection dates and observed genetic distances, enabling biologically plausible imputation grounded in observed divergence patterns, without requiring sequence alignment or known transmission chains. Applied to highly pathogenic avian influenza A/H5 cases in wild birds in the United States, this approach supports scalable, uncertainty-aware augmentation of genomic datasets and enhances the integration of evolutionary information into spatiotemporal modeling workflows.

Generative AI for Autonomous Driving: Frontiers and Opportunities

May 13, 2025

Abstract:Generative Artificial Intelligence (GenAI) constitutes a transformative technological wave that reconfigures industries through its unparalleled capabilities for content creation, reasoning, planning, and multimodal understanding. This revolutionary force offers the most promising path yet toward solving one of engineering's grandest challenges: achieving reliable, fully autonomous driving, particularly the pursuit of Level 5 autonomy. This survey delivers a comprehensive and critical synthesis of the emerging role of GenAI across the autonomous driving stack. We begin by distilling the principles and trade-offs of modern generative modeling, encompassing VAEs, GANs, Diffusion Models, and Large Language Models (LLMs). We then map their frontier applications in image, LiDAR, trajectory, occupancy, and video generation, as well as LLM-guided reasoning and decision making. We categorize practical applications, such as synthetic data workflows, end-to-end driving strategies, high-fidelity digital twin systems, smart transportation networks, and cross-domain transfer to embodied AI. We identify key obstacles and possibilities such as comprehensive generalization across rare cases, evaluation and safety checks, budget-limited implementation, regulatory compliance, ethical concerns, and environmental effects, while proposing research plans across theoretical assurances, trust metrics, transport integration, and socio-technical influence. By unifying these threads, the survey provides a forward-looking reference for researchers, engineers, and policymakers navigating the convergence of generative AI and advanced autonomous mobility. An actively maintained repository of cited works is available at https://github.com/taco-group/GenAI4AD.

Large-Scale Mixed-Traffic and Intersection Control using Multi-agent Reinforcement Learning

Apr 07, 2025

Abstract:Traffic congestion remains a significant challenge in modern urban networks. Autonomous driving technologies have emerged as a potential solution. Among traffic control methods, reinforcement learning has shown superior performance over traffic signals in various scenarios. However, prior research has largely focused on small-scale networks or isolated intersections, leaving large-scale mixed traffic control largely unexplored. This study presents the first attempt to use decentralized multi-agent reinforcement learning for large-scale mixed traffic control in which some intersections are managed by traffic signals and others by robot vehicles (RVs). Evaluating a real-world network in Colorado Springs, CO, USA with 14 intersections, we measure traffic efficiency via the average waiting time of vehicles at intersections and the number of vehicles reaching their destinations within a time window (i.e., throughput). At an 80% RV penetration rate, our method reduces waiting time from 6.17 s to 5.09 s and increases throughput from 454 vehicles per 500 seconds to 493 vehicles per 500 seconds, outperforming the baseline of fully signalized intersections. These findings suggest that integrating reinforcement learning-based control into large-scale traffic can improve overall efficiency and may inform future urban planning strategies.
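To put the reported figures on a common scale, the short sketch below converts them into relative changes. The four numbers are taken directly from the abstract; the helper name `relative_change` is a hypothetical name chosen here for illustration and is not part of the paper.

```python
def relative_change(baseline: float, new: float) -> float:
    """Signed relative change from baseline to new, as a fraction."""
    return (new - baseline) / baseline


# Figures reported in the abstract at 80% RV penetration.
wait_change = relative_change(6.17, 5.09)       # average waiting time, seconds
throughput_change = relative_change(454, 493)   # vehicles per 500 s

print(f"waiting time: {wait_change:+.1%}")       # waiting time: -17.5%
print(f"throughput:   {throughput_change:+.1%}")  # throughput:   +8.6%
```

Under this reading, the method cuts average waiting time by roughly 17.5% and raises throughput by roughly 8.6% relative to the fully signalized baseline.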

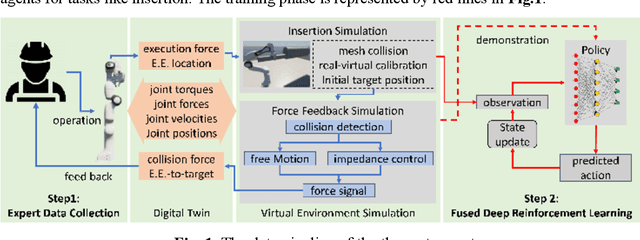

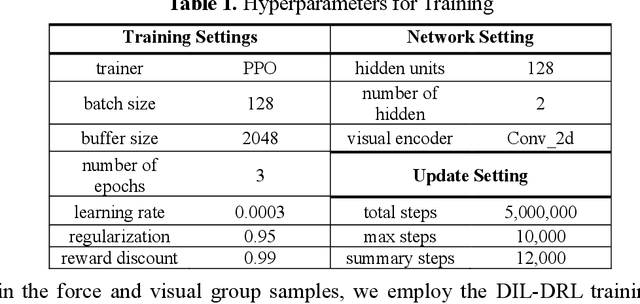

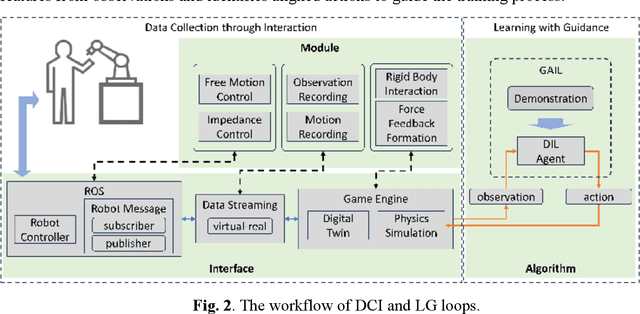

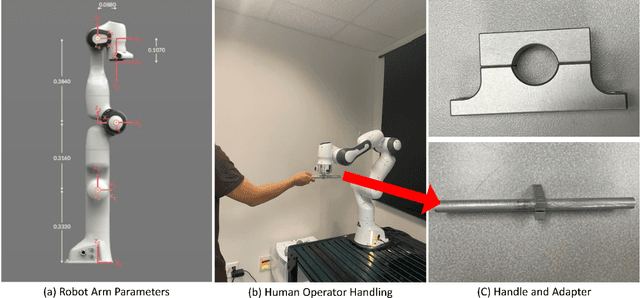

Force-Based Robotic Imitation Learning: A Two-Phase Approach for Construction Assembly Tasks

Jan 24, 2025

Abstract:The drive for efficiency and safety in construction has boosted the role of robotics and automation. However, complex tasks like welding and pipe insertion pose challenges due to their need for precise adaptive force control, which complicates robotic training. This paper proposes a two-phase system to improve robot learning, integrating human-derived force feedback. The first phase captures real-time data from operators using a robot arm linked with a virtual simulator via ROS-Sharp. In the second phase, this feedback is converted into robotic motion instructions, using a generative approach to incorporate force feedback into the learning process. This method's effectiveness is demonstrated through improved task completion times and success rates. The framework simulates realistic force-based interactions, enhancing the training data's quality for precise robotic manipulation in construction tasks.

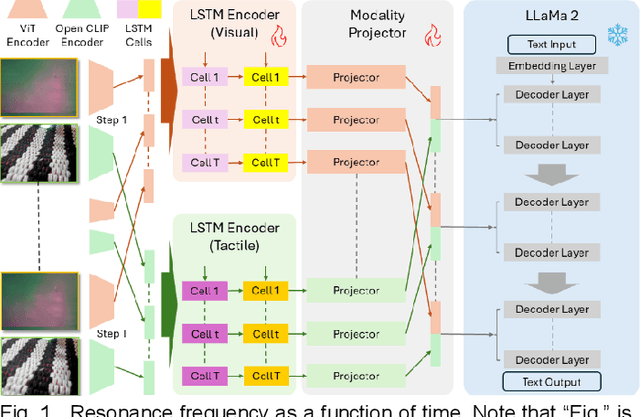

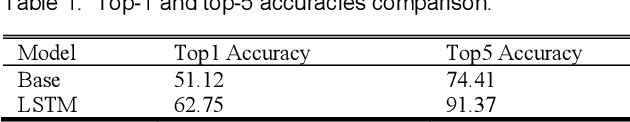

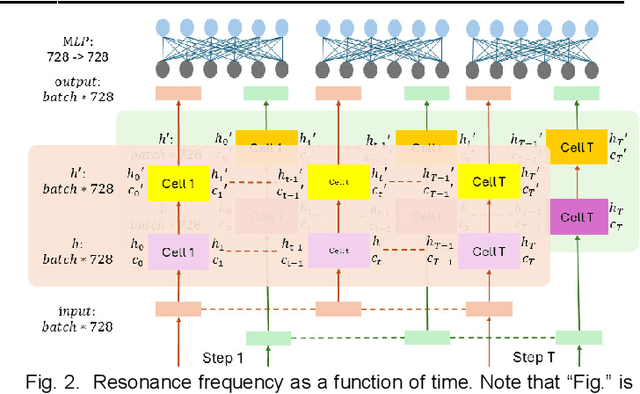

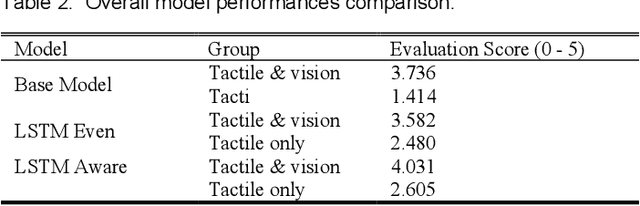

Temporal Binding Foundation Model for Material Property Recognition via Tactile Sequence Perception

Jan 24, 2025

Abstract:Robots engaged in complex manipulation tasks require robust material property recognition to ensure adaptability and precision. Traditionally, visual data has been the primary source for object perception; however, it often proves insufficient in scenarios where visibility is obstructed or detailed observation is needed. This gap highlights the necessity of tactile sensing as a complementary or primary input for material recognition. Tactile data becomes particularly essential in contact-rich, small-scale manipulations where subtle deformations and surface interactions cannot be accurately captured by vision alone. This letter presents a novel approach leveraging a temporal binding foundation model for tactile sequence understanding to enhance material property recognition. By processing tactile sensor data with a temporal focus, the proposed system captures the sequential nature of tactile interactions, similar to human fingertip perception. Additionally, this letter demonstrates that, through tailored and specific design, the foundation model can more effectively capture temporal information embedded in tactile sequences, advancing material property understanding. Experimental results validate the model's capability to capture these temporal patterns, confirming its utility for material property recognition in visually restricted scenarios. This work underscores the necessity of embedding advanced tactile data processing frameworks within robotic systems to achieve truly embodied and responsive manipulation capabilities.

Towards Robust Cross-Domain Recommendation with Joint Identifiability of User Preference

Nov 26, 2024

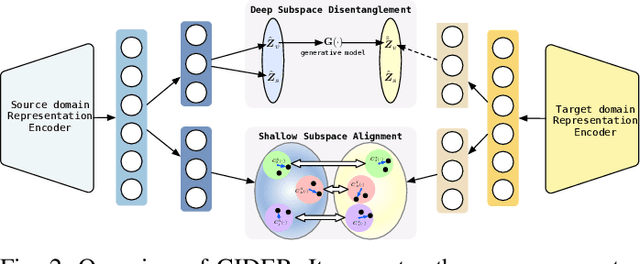

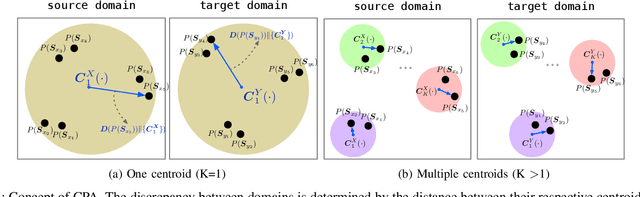

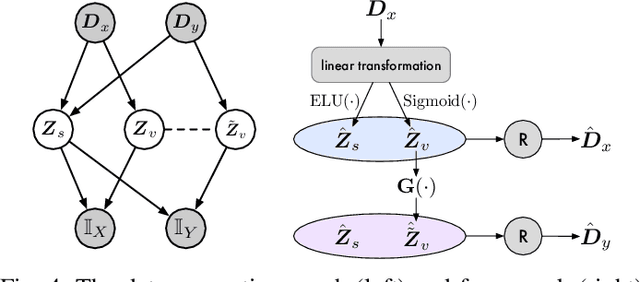

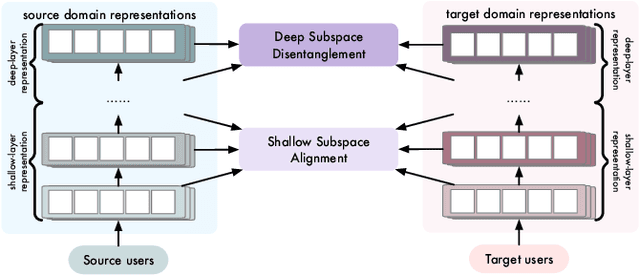

Abstract:Recent cross-domain recommendation (CDR) studies assume that disentangled domain-shared and domain-specific user representations can mitigate domain gaps and facilitate effective knowledge transfer. However, achieving perfect disentanglement is challenging in practice, because user behaviors in CDR are highly complex, and the true underlying user preferences cannot be fully captured through observed user-item interactions alone. Given this impracticability, we instead propose to model joint identifiability that establishes unique correspondence of user representations across domains, ensuring consistent preference modeling even when user behaviors exhibit shifts in different domains. To achieve this, we introduce a hierarchical user preference modeling framework that organizes user representations by the neural network encoder's depth, allowing separate treatment of shallow and deeper subspaces. In the shallow subspace, our framework models the interest centroids for each user within each domain, probabilistically determining the users' interest belongings and selectively aligning these centroids across domains to ensure fine-grained consistency in domain-irrelevant features. For deeper subspace representations, we enforce joint identifiability by decomposing them into a shared cross-domain stable component and domain-variant components, linked by a bijective transformation for unique correspondence. Empirical studies on real-world CDR tasks with varying domain correlations demonstrate that our method consistently surpasses state-of-the-art methods, even with weakly correlated tasks, highlighting the importance of joint identifiability in achieving robust CDR.

Joint Identifiability of Cross-Domain Recommendation via Hierarchical Subspace Disentanglement

Apr 06, 2024

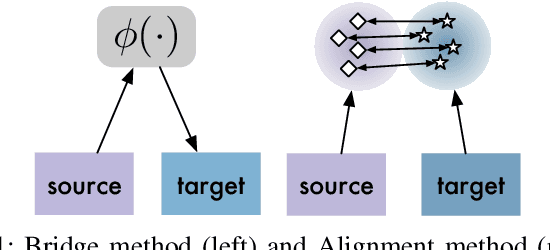

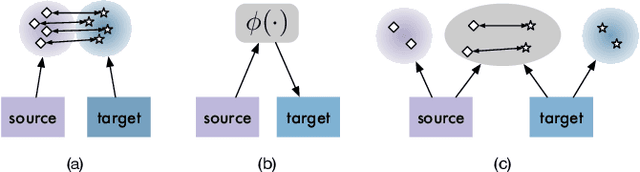

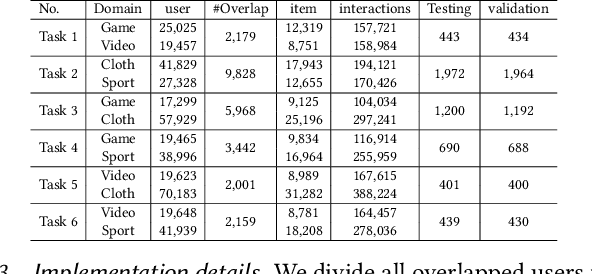

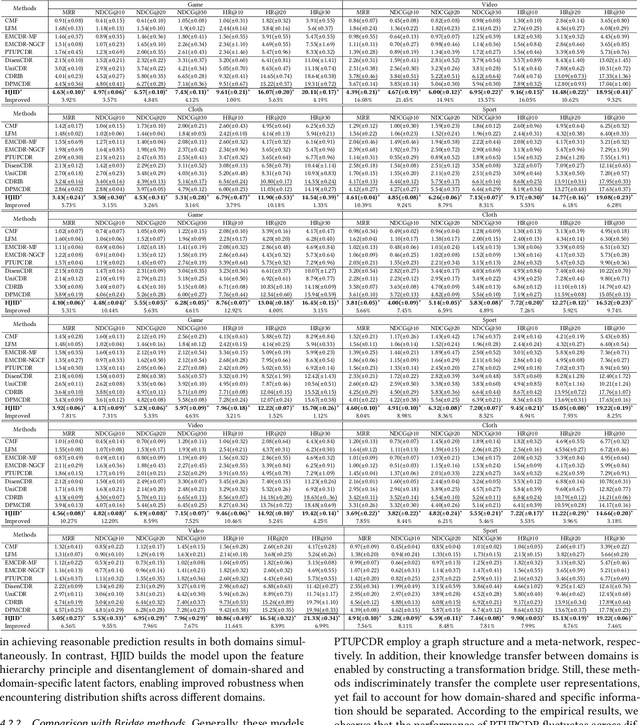

Abstract:Cross-Domain Recommendation (CDR) seeks to enable effective knowledge transfer across domains. Existing works rely on either representation alignment or transformation bridges, but they struggle to distinguish domain-shared from domain-specific latent factors. Specifically, while CDR describes user representations as a joint distribution over two domains, these methods fail to account for its joint identifiability as they primarily fixate on the marginal distribution within a particular domain. Such a failure may overlook the conditionality between two domains and how it contributes to latent factor disentanglement, leading to negative transfer when domains are weakly correlated. In this study, we explore what should and should not be transferred in cross-domain user representations from a causality perspective. We propose a Hierarchical subspace disentanglement approach to explore the Joint IDentifiability of cross-domain joint distribution, termed HJID, to preserve domain-specific behaviors from domain-shared factors. HJID organizes user representations into layers: generic shallow subspaces and domain-oriented deep subspaces. We first encode the generic pattern in the shallow subspace by minimizing the Maximum Mean Discrepancy of initial-layer activations. Then, to dissect how domain-oriented latent factors are encoded in deeper-layer activations, we construct a cross-domain causality-based data generation graph, which identifies cross-domain consistent and domain-specific components, adhering to the Minimal Change principle. This allows HJID to maintain stability whilst discovering unique factors for different domains, all within a generative framework of invertible transformations that guarantee the joint identifiability. With experiments on real-world datasets, we show that HJID outperforms SOTA methods on a range of strongly and weakly correlated CDR tasks.
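The Maximum Mean Discrepancy (MMD) mentioned in the abstract measures how far apart two sets of samples are in a kernel feature space. The following is a minimal sketch of the standard biased estimator of squared MMD, not the authors' implementation: the abstract does not state which kernel or estimator HJID uses, so the RBF kernel, the fixed `bandwidth`, the function names, and the toy "activation" vectors are all assumptions made here for illustration.

```python
import math
from itertools import product


def rbf_kernel(x, y, bandwidth=1.0):
    """Gaussian (RBF) kernel between two equal-length vectors (assumed kernel)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def mmd_squared(xs, ys, bandwidth=1.0):
    """Biased (V-statistic) estimate of squared MMD between samples xs and ys."""
    def mean_kernel(a, b):
        total = sum(rbf_kernel(u, v, bandwidth) for u, v in product(a, b))
        return total / (len(a) * len(b))

    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)


# Hypothetical 2-D "shallow-layer activations" for users in two domains.
domain_a = [(0.0, 0.1), (0.2, -0.1), (-0.1, 0.0)]
domain_b_near = [(0.1, 0.0), (0.0, -0.2), (-0.2, 0.1)]
domain_b_far = [(2.0, 2.1), (2.2, 1.9), (1.9, 2.0)]

near = mmd_squared(domain_a, domain_b_near)
far = mmd_squared(domain_a, domain_b_far)
print(near < far)  # True: closer activation distributions give a smaller MMD
```

Minimizing a quantity of this kind over encoder parameters pulls the two domains' shallow activations toward a common distribution, which is one plausible reading of "encoding the generic pattern" in the shallow subspace.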

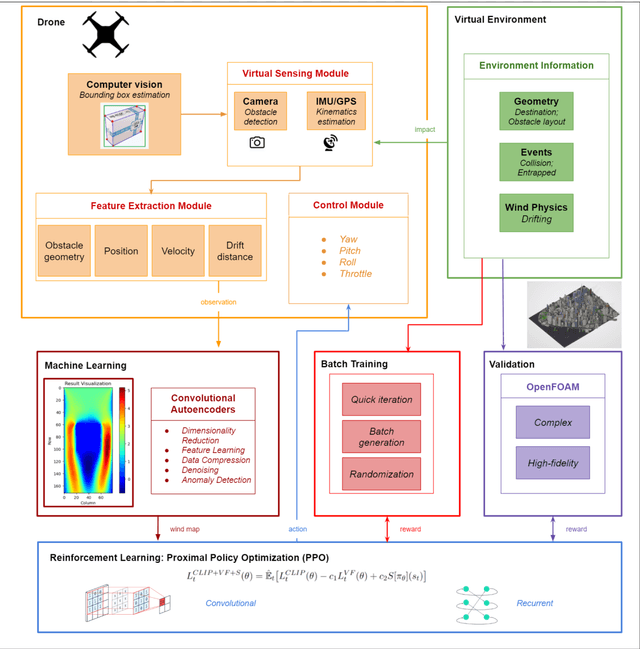

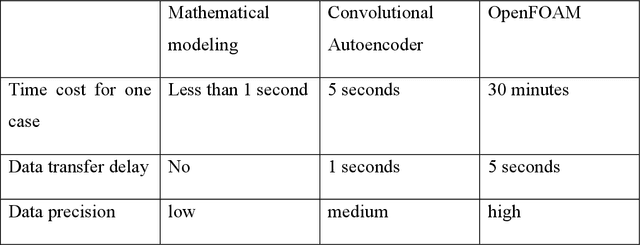

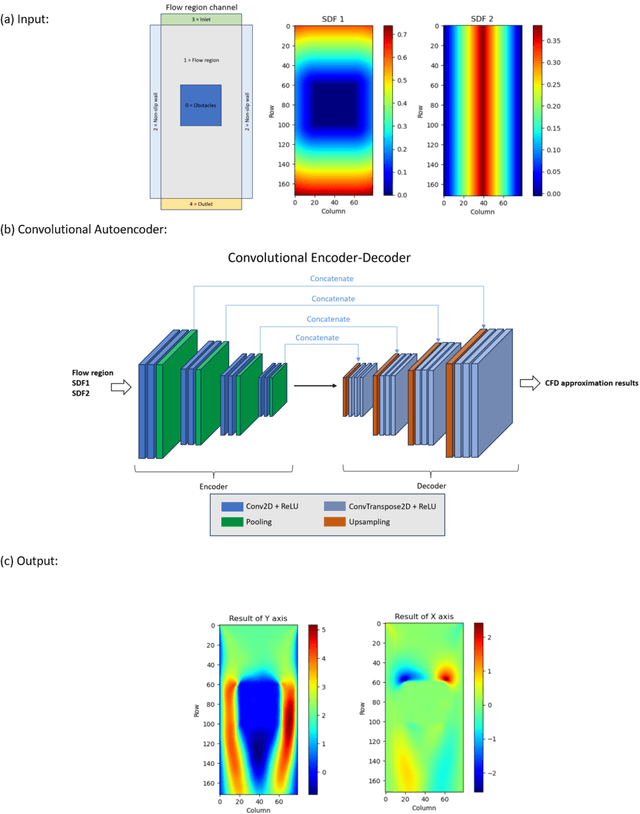

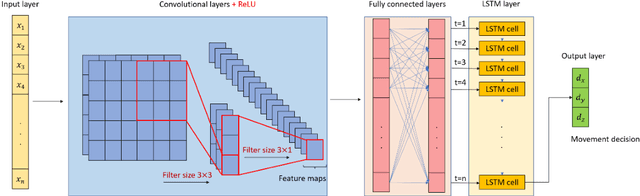

Urban Drone Navigation: Autoencoder Learning Fusion for Aerodynamics

Oct 13, 2023

Abstract:Drones are vital for urban emergency search and rescue (SAR) due to the challenges of navigating dynamic environments with obstacles like buildings and wind. This paper presents a method that combines multi-objective reinforcement learning (MORL) with a convolutional autoencoder to improve drone navigation in urban SAR. The approach uses MORL to achieve multiple goals and the autoencoder for cost-effective wind simulations. By utilizing imagery data of urban layouts, the drone can autonomously make navigation decisions, optimize paths, and counteract wind effects without traditional sensors. Tested on a New York City model, this method enhances drone SAR operations in complex urban settings.
