Temporal Binding Foundation Model for Material Property Recognition via Tactile Sequence Perception

Jan 24, 2025

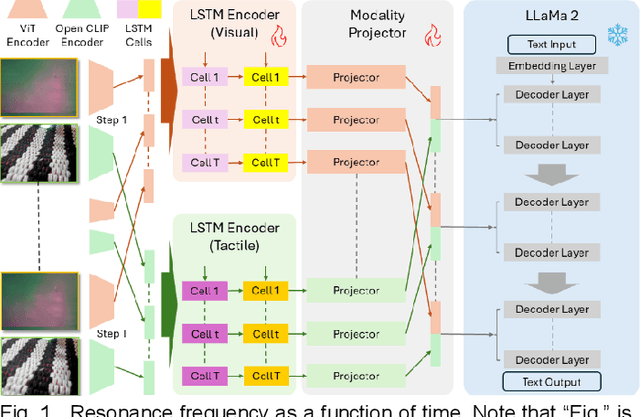

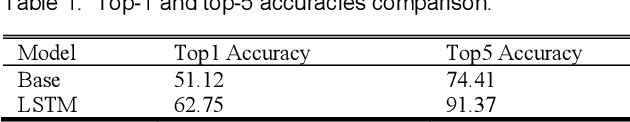

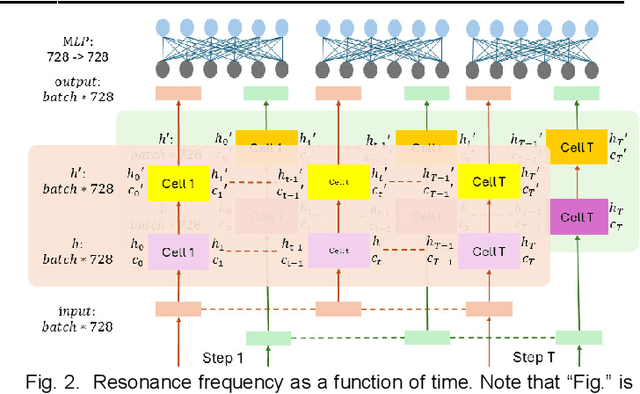

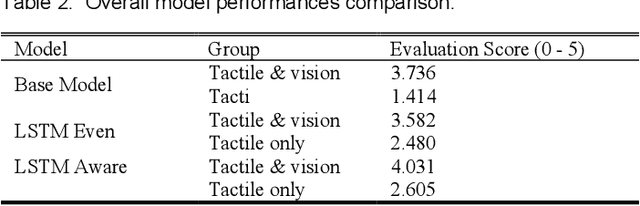

Robots performing complex manipulation tasks need robust material property recognition to remain adaptable and precise. Visual data has traditionally been the primary source for object perception, but it is often insufficient when visibility is obstructed or fine detail is needed. Tactile sensing fills this gap, either as a complement to vision or as the primary input for material recognition. It is particularly important in contact-rich, small-scale manipulation, where subtle deformations and surface interactions cannot be captured accurately by vision alone. This letter presents a novel approach that uses a temporal binding foundation model for tactile sequence understanding to improve material property recognition. By processing tactile sensor data with a temporal focus, the proposed system captures the sequential nature of tactile interactions, similar to human fingertip perception. This letter also demonstrates that, with a tailored design, the foundation model captures the temporal information embedded in tactile sequences more effectively, advancing material property understanding. Experimental results validate the model's ability to capture these temporal patterns and confirm its utility for material property recognition in visually restricted scenarios. This work underscores the need to embed advanced tactile data processing frameworks in robotic systems to achieve truly embodied and responsive manipulation.
