Hao Xue

Mechanistic Indicators of Steering Effectiveness in Large Language Models

Feb 02, 2026Abstract:Activation-based steering enables Large Language Models (LLMs) to exhibit targeted behaviors by intervening on intermediate activations without retraining. Despite its widespread use, the mechanistic factors that govern when steering succeeds or fails remain poorly understood, as prior work has relied primarily on black-box outputs or LLM-based judges. In this study, we investigate whether the reliability of steering can be diagnosed using internal model signals. We focus on two information-theoretic measures: the entropy-derived Normalized Branching Factor (NBF), and the Kullback-Leibler (KL) divergence between steered activations and targeted concepts in the vocabulary space. We hypothesize that effective steering corresponds to structured entropy preservation and coherent KL alignment across decoding steps. Building on a reliability study demonstrating high inter-judge agreement between two architecturally distinct LLMs, we use LLM-generated annotations as ground truth and show that these mechanistic signals provide meaningful predictive power for identifying successful steering and estimating failure probability. We further introduce a stronger evaluation baseline for Contrastive Activation Addition (CAA) and Sparse Autoencoder-based steering, the two most widely adopted activation-steering methods.

QuAnTS: Question Answering on Time Series

Nov 07, 2025Abstract:Text offers intuitive access to information. This can, in particular, complement the density of numerical time series, thereby allowing improved interactions with time series models to enhance accessibility and decision-making. While the creation of question-answering datasets and models has recently seen remarkable growth, most research focuses on question answering (QA) on vision and text, with time series receiving minute attention. To bridge this gap, we propose a challenging novel time series QA (TSQA) dataset, QuAnTS, for Question Answering on Time Series data. Specifically, we pose a wide variety of questions and answers about human motion in the form of tracked skeleton trajectories. We verify that the large-scale QuAnTS dataset is well-formed and comprehensive through extensive experiments. Thoroughly evaluating existing and newly proposed baselines then lays the groundwork for a deeper exploration of TSQA using QuAnTS. Additionally, we provide human performances as a key reference for gauging the practical usability of such models. We hope to encourage future research on interacting with time series models through text, enabling better decision-making and more transparent systems.

Hierarchical Sequence Iteration for Heterogeneous Question Answering

Oct 23, 2025Abstract:Retrieval-augmented generation (RAG) remains brittle on multi-step questions and heterogeneous evidence sources, trading accuracy against latency and token/tool budgets. This paper introducesHierarchical Sequence (HSEQ) Iteration for Heterogeneous Question Answering, a unified framework that (i) linearize documents, tables, and knowledge graphs into a reversible hierarchical sequence with lightweight structural tags, and (ii) perform structure-aware iteration to collect just-enough evidence before answer synthesis. A Head Agent provides guidance that leads retrieval, while an Iteration Agent selects and expands HSeq via structure-respecting actions (e.g., parent/child hops, table row/column neighbors, KG relations); Finally the head agent composes canonicalized evidence to genearte the final answer, with an optional refinement loop to resolve detected contradictions. Experiments on HotpotQA (text), HybridQA/TAT-QA (table+text), and MetaQA (KG) show consistent EM/F1 gains over strong single-pass, multi-hop, and agentic RAG baselines with high efficiency. Besides, HSEQ exhibits three key advantages: (1) a format-agnostic unification that enables a single policy to operate across text, tables, and KGs without per-dataset specialization; (2) guided, budget-aware iteration that reduces unnecessary hops, tool calls, and tokens while preserving accuracy; and (3) evidence canonicalization for reliable QA, improving answers consistency and auditability.

Resolving Ambiguity in Gaze-Facilitated Visual Assistant Interaction Paradigm

Sep 26, 2025Abstract:With the rise in popularity of smart glasses, users' attention has been integrated into Vision-Language Models (VLMs) to streamline multi-modal querying in daily scenarios. However, leveraging gaze data to model users' attention may introduce ambiguity challenges: (1) users' verbal questions become ambiguous by using pronouns or skipping context, (2) humans' gaze patterns can be noisy and exhibit complex spatiotemporal relationships with their spoken questions. Previous works only consider single image as visual modality input, failing to capture the dynamic nature of the user's attention. In this work, we introduce GLARIFY, a novel method to leverage spatiotemporal gaze information to enhance the model's effectiveness in real-world applications. Initially, we analyzed hundreds of querying samples with the gaze modality to demonstrate the noisy nature of users' gaze patterns. We then utilized GPT-4o to design an automatic data synthesis pipeline to generate the GLARIFY-Ambi dataset, which includes a dedicated chain-of-thought (CoT) process to handle noisy gaze patterns. Finally, we designed a heatmap module to incorporate gaze information into cutting-edge VLMs while preserving their pretrained knowledge. We evaluated GLARIFY using a hold-out test set. Experiments demonstrate that GLARIFY significantly outperforms baselines. By robustly aligning VLMs with human attention, GLARIFY paves the way for a usable and intuitive interaction paradigm with a visual assistant.

TradingGroup: A Multi-Agent Trading System with Self-Reflection and Data-Synthesis

Aug 25, 2025Abstract:Recent advancements in large language models (LLMs) have enabled powerful agent-based applications in finance, particularly for sentiment analysis, financial report comprehension, and stock forecasting. However, existing systems often lack inter-agent coordination, structured self-reflection, and access to high-quality, domain-specific post-training data such as data from trading activities including both market conditions and agent decisions. These data are crucial for agents to understand the market dynamics, improve the quality of decision-making and promote effective coordination. We introduce TradingGroup, a multi-agent trading system designed to address these limitations through a self-reflective architecture and an end-to-end data-synthesis pipeline. TradingGroup consists of specialized agents for news sentiment analysis, financial report interpretation, stock trend forecasting, trading style adaptation, and a trading decision making agent that merges all signals and style preferences to produce buy, sell or hold decisions. Specifically, we design self-reflection mechanisms for the stock forecasting, style, and decision-making agents to distill past successes and failures for similar reasoning in analogous future scenarios and a dynamic risk-management model to offer configurable dynamic stop-loss and take-profit mechanisms. In addition, TradingGroup embeds an automated data-synthesis and annotation pipeline that generates high-quality post-training data for further improving the agent performance through post-training. Our backtesting experiments across five real-world stock datasets demonstrate TradingGroup's superior performance over rule-based, machine learning, reinforcement learning, and existing LLM-based trading strategies.

ZARA: Zero-shot Motion Time-Series Analysis via Knowledge and Retrieval Driven LLM Agents

Aug 06, 2025Abstract:Motion sensor time-series are central to human activity recognition (HAR), with applications in health, sports, and smart devices. However, existing methods are trained for fixed activity sets and require costly retraining when new behaviours or sensor setups appear. Recent attempts to use large language models (LLMs) for HAR, typically by converting signals into text or images, suffer from limited accuracy and lack verifiable interpretability. We propose ZARA, the first agent-based framework for zero-shot, explainable HAR directly from raw motion time-series. ZARA integrates an automatically derived pair-wise feature knowledge base that captures discriminative statistics for every activity pair, a multi-sensor retrieval module that surfaces relevant evidence, and a hierarchical agent pipeline that guides the LLM to iteratively select features, draw on this evidence, and produce both activity predictions and natural-language explanations. ZARA enables flexible and interpretable HAR without any fine-tuning or task-specific classifiers. Extensive experiments on 8 HAR benchmarks show that ZARA achieves SOTA zero-shot performance, delivering clear reasoning while exceeding the strongest baselines by 2.53x in macro F1. Ablation studies further confirm the necessity of each module, marking ZARA as a promising step toward trustworthy, plug-and-play motion time-series analysis. Our codes are available at https://github.com/zechenli03/ZARA.

Generate the Forest before the Trees -- A Hierarchical Diffusion model for Climate Downscaling

Jun 24, 2025Abstract:Downscaling is essential for generating the high-resolution climate data needed for local planning, but traditional methods remain computationally demanding. Recent years have seen impressive results from AI downscaling models, particularly diffusion models, which have attracted attention due to their ability to generate ensembles and overcome the smoothing problem common in other AI methods. However, these models typically remain computationally intensive. We introduce a Hierarchical Diffusion Downscaling (HDD) model, which introduces an easily-extensible hierarchical sampling process to the diffusion framework. A coarse-to-fine hierarchy is imposed via a simple downsampling scheme. HDD achieves competitive accuracy on ERA5 reanalysis datasets and CMIP6 models, significantly reducing computational load by running on up to half as many pixels with competitive results. Additionally, a single model trained at 0.25{\deg} resolution transfers seamlessly across multiple CMIP6 models with much coarser resolution. HDD thus offers a lightweight alternative for probabilistic climate downscaling, facilitating affordable large-ensemble high-resolution climate projections. See a full code implementation at: https://github.com/HDD-Hierarchical-Diffusion-Downscaling/HDD-Hierarchical-Diffusion-Downscaling.

STOAT: Spatial-Temporal Probabilistic Causal Inference Network

Jun 12, 2025Abstract:Spatial-temporal causal time series (STC-TS) involve region-specific temporal observations driven by causally relevant covariates and interconnected across geographic or network-based spaces. Existing methods often model spatial and temporal dynamics independently and overlook causality-driven probabilistic forecasting, limiting their predictive power. To address this, we propose STOAT (Spatial-Temporal Probabilistic Causal Inference Network), a novel framework for probabilistic forecasting in STC-TS. The proposed method extends a causal inference approach by incorporating a spatial relation matrix that encodes interregional dependencies (e.g. proximity or connectivity), enabling spatially informed causal effect estimation. The resulting latent series are processed by deep probabilistic models to estimate the parameters of the distributions, enabling calibrated uncertainty modeling. We further explore multiple output distributions (e.g., Gaussian, Student's-$t$, Laplace) to capture region-specific variability. Experiments on COVID-19 data across six countries demonstrate that STOAT outperforms state-of-the-art probabilistic forecasting models (DeepAR, DeepVAR, Deep State Space Model, etc.) in key metrics, particularly in regions with strong spatial dependencies. By bridging causal inference and geospatial probabilistic forecasting, STOAT offers a generalizable framework for complex spatial-temporal tasks, such as epidemic management.

A Probabilistic Framework for Imputing Genetic Distances in Spatiotemporal Pathogen Models

Jun 10, 2025Abstract:Pathogen genome data offers valuable structure for spatial models, but its utility is limited by incomplete sequencing coverage. We propose a probabilistic framework for inferring genetic distances between unsequenced cases and known sequences within defined transmission chains, using time-aware evolutionary distance modeling. The method estimates pairwise divergence from collection dates and observed genetic distances, enabling biologically plausible imputation grounded in observed divergence patterns, without requiring sequence alignment or known transmission chains. Applied to highly pathogenic avian influenza A/H5 cases in wild birds in the United States, this approach supports scalable, uncertainty-aware augmentation of genomic datasets and enhances the integration of evolutionary information into spatiotemporal modeling workflows.

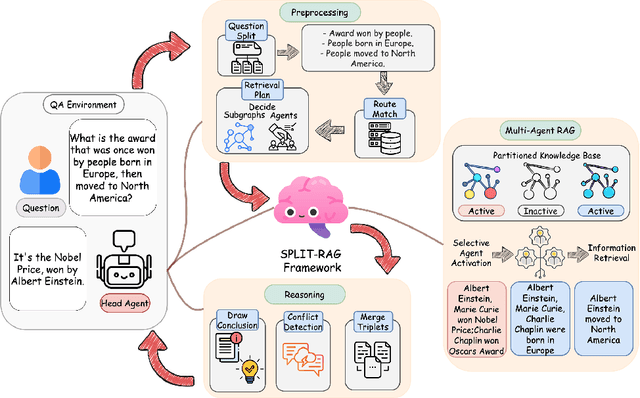

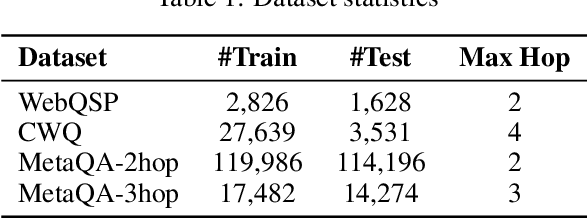

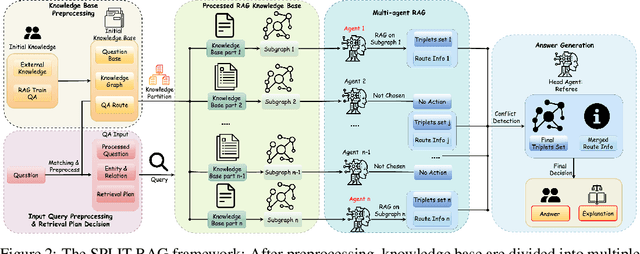

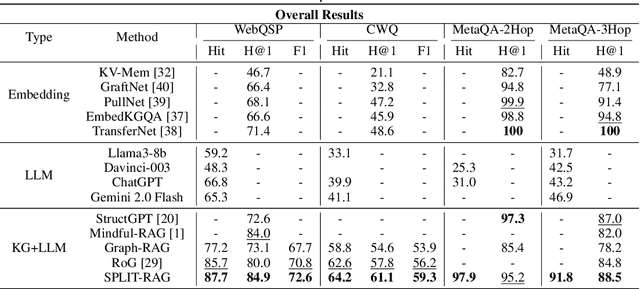

Divide by Question, Conquer by Agent: SPLIT-RAG with Question-Driven Graph Partitioning

May 20, 2025

Abstract:Retrieval-Augmented Generation (RAG) systems empower large language models (LLMs) with external knowledge, yet struggle with efficiency-accuracy trade-offs when scaling to large knowledge graphs. Existing approaches often rely on monolithic graph retrieval, incurring unnecessary latency for simple queries and fragmented reasoning for complex multi-hop questions. To address these challenges, this paper propose SPLIT-RAG, a multi-agent RAG framework that addresses these limitations with question-driven semantic graph partitioning and collaborative subgraph retrieval. The innovative framework first create Semantic Partitioning of Linked Information, then use the Type-Specialized knowledge base to achieve Multi-Agent RAG. The attribute-aware graph segmentation manages to divide knowledge graphs into semantically coherent subgraphs, ensuring subgraphs align with different query types, while lightweight LLM agents are assigned to partitioned subgraphs, and only relevant partitions are activated during retrieval, thus reduce search space while enhancing efficiency. Finally, a hierarchical merging module resolves inconsistencies across subgraph-derived answers through logical verifications. Extensive experimental validation demonstrates considerable improvements compared to existing approaches.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge