Sami Haddadin

Technical University Munich

Bridging the Sim-to-Real Gap with multipanda_ros2: A Real-Time ROS2 Framework for Multimanual Systems

Feb 02, 2026

Abstract: We present $multipanda\_ros2$, a novel open-source ROS2 architecture for multi-robot control of Franka Robotics robots. Leveraging ros2_control, this framework provides native ROS2 interfaces for controlling any number of robots from a single process. Our core contributions address key challenges in real-time torque control, including interaction control and robot-environment modeling. A central focus of this work is sustaining a 1 kHz control frequency, a necessity for real-time control and a minimum frequency required by safety standards. Moreover, we introduce a controllet-feature design pattern that enables controller-switching delays of $\le 2$ ms, facilitating reproducible benchmarking and complex multi-robot interaction scenarios. To bridge the simulation-to-reality (sim2real) gap, we integrate a high-fidelity MuJoCo simulation with quantitative metrics for both kinematic accuracy and dynamic consistency (torques, forces, and control errors). Furthermore, we demonstrate that real-world inertial parameter identification can significantly improve force and torque accuracy, providing a methodology for iterative physics refinement. Our work extends approaches from soft robotics to rigid dual-arm, contact-rich tasks, showcasing a promising method to reduce the sim2real gap and providing a robust, reproducible platform for advanced robotics research.

Just in time Informed Trees: Manipulability-Aware Asymptotically Optimized Motion Planning

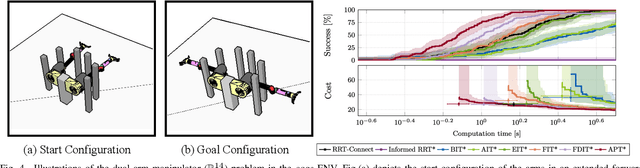

Jan 27, 2026

Abstract: In high-dimensional robotic path planning, traditional sampling-based methods often struggle to efficiently identify both feasible and optimal paths in complex, multi-obstacle environments. This challenge is intensified in robotic manipulators, where the risk of kinematic singularities and self-collisions further complicates motion efficiency and safety. To address these issues, we introduce the Just-in-Time Informed Trees (JIT*) algorithm, an enhancement over Effort Informed Trees (EIT*), designed to improve path planning through two core modules: the Just-in-Time module and the Motion Performance module. The Just-in-Time module includes "Just-in-Time Edge," which dynamically refines edge connectivity, and "Just-in-Time Sample," which adjusts sampling density in bottleneck areas to enable faster initial path discovery. The Motion Performance module balances manipulability and trajectory cost through dynamic switching, optimizing motion control while reducing the risk of singularities. Comparative analysis shows that JIT* consistently outperforms traditional sampling-based planners across $\mathbb{R}^4$ to $\mathbb{R}^{16}$ dimensions. Its effectiveness is further demonstrated in single-arm and dual-arm manipulation tasks, with experimental results available in a video at https://youtu.be/nL1BMHpMR7c.
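
The abstract does not say which manipulability measure JIT* uses. A common choice, assumed here purely for illustration, is Yoshikawa's index $w = \sqrt{\det(J J^\top)}$, which falls to zero at a kinematic singularity. The sketch below evaluates it for a hypothetical planar two-link arm (the link lengths `l1` and `l2` are made-up values, not taken from the paper), where the index reduces to `l1 * l2 * |sin(q2)|`.

```python
import math

def planar_2link_jacobian(q1, q2, l1=1.0, l2=1.0):
    """2x2 position Jacobian of a planar two-link arm (hypothetical example robot)."""
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [
        [-l1 * s1 - l2 * s12, -l2 * s12],
        [l1 * c1 + l2 * c12, l2 * c12],
    ]

def yoshikawa_manipulability(J):
    """sqrt(det(J J^T)); for a square 2x2 Jacobian this equals |det(J)|."""
    det = J[0][0] * J[1][1] - J[0][1] * J[1][0]
    return abs(det)

# Elbow bent at 90 degrees: far from singular.
w_bent = yoshikawa_manipulability(planar_2link_jacobian(0.3, math.pi / 2))
# Arm fully stretched (q2 = 0): singular configuration.
w_stretched = yoshikawa_manipulability(planar_2link_jacobian(0.3, 0.0))
```

A planner that trades manipulability against path cost could use a value like `w_stretched` near zero as a signal to penalize a configuration; how JIT* actually performs that switching is not described in the abstract.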

Learning a Shape-adaptive Assist-as-needed Rehabilitation Policy from Therapist-informed Input

Oct 06, 2025

Abstract: Therapist-in-the-loop robotic rehabilitation has shown great promise in enhancing rehabilitation outcomes by integrating the strengths of therapists and robotic systems. However, its broader adoption remains limited due to insufficient safe interaction and limited adaptation capability. This article proposes a novel telerobotics-mediated framework that enables therapists to intuitively and safely deliver assist-as-needed (AAN) therapy based on two primary contributions. First, our framework encodes the therapist-informed corrective force into via-points in a latent space, allowing the therapist to provide only minimal assistance while encouraging the patient to maintain their own motion preferences. Second, a shape-adaptive AAN rehabilitation policy is learned to partially and progressively deform the reference trajectory for movement therapy based on encoded patient motion preferences and therapist-informed via-points. The effectiveness of the proposed shape-adaptive AAN strategy was validated on a telerobotic rehabilitation system using two representative tasks. The results demonstrate its practicality for remote AAN therapy and its superiority over two state-of-the-art methods in reducing corrective force and improving movement smoothness.

Tele-rehabilitation with online skill transfer and adaptation in $\mathbb{R}^3 \times \mathit{S}^3$

Oct 01, 2025

Abstract: This paper proposes a tele-teaching framework for the domain of robot-assisted tele-rehabilitation. The system connects two robotic manipulators on the therapist and patient sides via bilateral teleoperation, enabling a therapist to remotely demonstrate rehabilitation exercises that are executed by the patient-side robot. A 6-DoF Dynamical Movement Primitives formulation is employed to jointly encode translational and rotational motions in $\mathbb{R}^3 \times \mathit{S}^3$ space, ensuring accurate trajectory reproduction. The framework supports smooth transitions between therapist-led guidance and patient passive training, while allowing adaptive adjustment of motion. Experiments with 7-DoF manipulators demonstrate the feasibility of the approach, highlighting its potential for personalized and remotely supervised rehabilitation.

Constraint-Consistent Control of Task-Based and Kinematic RCM Constraints for Surgical Robots

Sep 17, 2025

Abstract: Robotic-assisted minimally invasive surgery (RAMIS) requires precise enforcement of the remote center of motion (RCM) constraint to ensure safe tool manipulation through a trocar. Achieving this constraint under dynamic and interactive conditions remains challenging, as existing control methods either lack robustness at the torque level or do not guarantee consistent RCM constraint satisfaction. This paper proposes a constraint-consistent torque controller that treats the RCM as a rheonomic holonomic constraint and embeds it into a projection-based inverse-dynamics framework. The method unifies task-level and kinematic formulations, enabling accurate tool-tip tracking while maintaining smooth and efficient torque behavior. The controller is validated both in simulation and on a RAMIS training platform, and is benchmarked against state-of-the-art approaches. Results show improved RCM constraint satisfaction, reduced required torque, and robust performance, with the consistency formulation improving joint torque smoothness, under clinically relevant scenarios including spiral trajectories, variable insertion depths, moving trocars, and human interaction. These findings demonstrate the potential of constraint-consistent torque control to enhance safety and reliability in surgical robotics. The project page is available at: https://rcmpc-cube.github.io

Prompt2Auto: From Motion Prompt to Automated Control via Geometry-Invariant One-Shot Gaussian Process Learning

Sep 17, 2025

Abstract: Learning from demonstration allows robots to acquire complex skills from human demonstrations, but conventional approaches often require large datasets and fail to generalize across coordinate transformations. In this paper, we propose Prompt2Auto, a geometry-invariant one-shot Gaussian process (GeoGP) learning framework that enables robots to perform human-guided automated control from a single motion prompt. A dataset-construction strategy based on coordinate transformations is introduced that enforces invariance to translation, rotation, and scaling, while supporting multi-step predictions. Moreover, GeoGP is robust to variations in the user's motion prompt and supports multi-skill autonomy. We validate the proposed approach through numerical simulations with a purpose-built graphical user interface and two real-world robotic experiments, which demonstrate that the proposed method is effective, generalizes across tasks, and significantly reduces the demonstration burden. The project page is available at: https://prompt2auto.github.io

Estimated Informed Anytime Search for Sampling-Based Planning via Adaptive Sampler

Aug 29, 2025

Abstract: Path planning in robotics often involves solving continuously valued, high-dimensional problems. Popular informed approaches include graph-based searches, such as A*, and sampling-based methods, such as Informed RRT*, which use informed sets and anytime strategies to expedite path optimization incrementally. Informed sampling-based planners define informed sets as subsets of the problem domain based on the current best solution cost. However, when no solution is found, these planners re-sample and explore the entire configuration space, which is time-consuming and computationally expensive. This article introduces Multi-Informed Trees (MIT*), a novel planner that constructs estimated informed sets based on prior admissible solution costs before finding the initial solution, thereby accelerating the initial convergence rate. Moreover, MIT* employs an adaptive sampler that dynamically adjusts the sampling strategy based on the exploration process. Furthermore, MIT* utilizes length-related adaptive sparse collision checks to guide lazy reverse search. These features enhance path cost efficiency and computation times while ensuring high success rates in confined scenarios. Through a series of simulations and real-world experiments, it is confirmed that MIT* outperforms existing single-query, sampling-based planners for problems in $\mathbb{R}^4$ to $\mathbb{R}^{16}$ and has been successfully applied to real-world robot manipulation tasks. A video showcasing our experimental results is available at: https://youtu.be/30RsBIdexTU
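
For context, the standard informed set for path-length cost (as used by Informed RRT*) contains every state whose straight-line detour through it is no longer than the current best cost. The minimal sketch below shows only that membership test; it is not MIT*'s estimated informed set, which the abstract does not specify, and the function name `in_informed_set` is an illustrative assumption.

```python
import math

def in_informed_set(x, start, goal, c_best):
    """True if state x could lie on a path no longer than c_best (Euclidean path length)."""
    return math.dist(start, x) + math.dist(x, goal) <= c_best

start, goal = (0.0, 0.0), (2.0, 0.0)

# With a solution of cost 3.0, only states inside the ellipse are worth sampling.
near = in_informed_set((1.0, 0.0), start, goal, 3.0)
far = in_informed_set((1.0, 2.0), start, goal, 3.0)

# Before any solution exists the cost is infinite, so every state qualifies.
unbounded = in_informed_set((1.0, 2.0), start, goal, math.inf)
```

The last case illustrates the limitation the abstract points to: with no solution yet, the test admits the whole configuration space, so it gives no guidance until a first path is found.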

APT*: Asymptotically Optimal Motion Planning via Adaptively Prolated Elliptical R-Nearest Neighbors

Aug 27, 2025

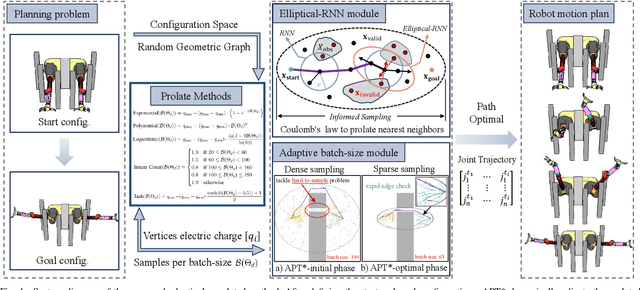

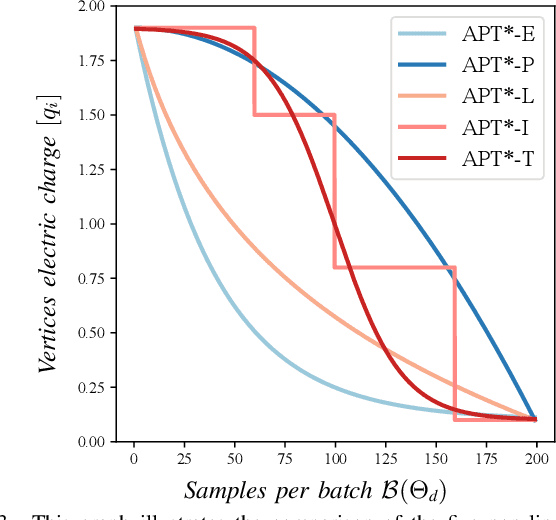

Abstract: Optimal path planning aims to determine a sequence of states from a start to a goal while accounting for planning objectives. Popular methods often use fixed batch sizes and neglect obstacle information, so their search is not problem-specific. This study introduces Adaptively Prolated Trees (APT*), a novel sampling-based motion planner that builds on Force Direction Informed Trees (FDIT*), integrating adaptive batch-sizing and elliptical $r$-nearest neighbor modules to dynamically modulate the path searching process based on environmental feedback. APT* adjusts batch sizes based on the hypervolume of the informed sets and considers vertices as electric charges that obey Coulomb's law to define virtual forces via neighbor samples, thereby refining the prolate nearest neighbor selection. These modules employ non-linear prolate methods to adaptively adjust the electric charges of vertices for force definition, thereby improving the convergence rate with lower solution costs. Comparative analyses show that APT* outperforms existing single-query sampling-based planners in dimensions from $\mathbb{R}^4$ to $\mathbb{R}^{16}$, and it was further validated through a real-world robot manipulation task. A video showcasing our experimental results is available at: https://youtu.be/gCcUr8LiEw4

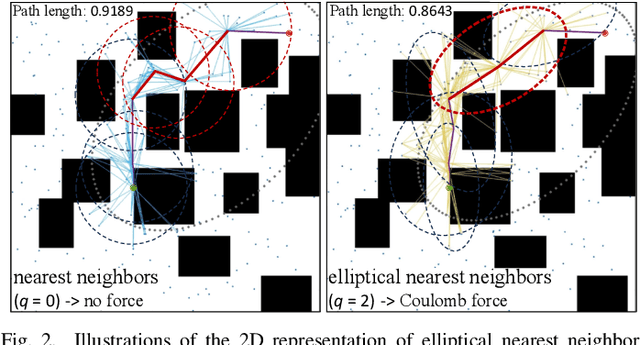

Elliptical K-Nearest Neighbors -- Path Optimization via Coulomb's Law and Invalid Vertices in C-space Obstacles

Aug 27, 2025

Abstract: Path planning has long been an important and active research area in robotics. To address challenges in high-dimensional motion planning, this study introduces the Force Direction Informed Trees (FDIT*), a sampling-based planner designed to enhance speed and cost-effectiveness in pathfinding. FDIT* builds upon the state-of-the-art informed sampling planner, the Effort Informed Trees (EIT*), by capitalizing on often-overlooked information in invalid vertices. It incorporates principles of physical force, particularly Coulomb's law. This approach proposes the elliptical $k$-nearest neighbors search method, enabling fast convergence and avoiding high-cost or infeasible paths by exploring more problem-specific, search-worthy areas. It demonstrates benefits in search efficiency and cost reduction, particularly in confined, high-dimensional environments. It can be viewed as an extension of nearest neighbors search techniques. Fusing invalid vertex data with physical dynamics facilitates force-direction-based search regions, resulting in an improved convergence rate to the optimum. FDIT* outperforms existing single-query, sampling-based planners on the tested problems in $\mathbb{R}^4$ to $\mathbb{R}^{16}$ and has been demonstrated on a real-world mobile manipulation task.

Tree-Based Grafting Approach for Bidirectional Motion Planning with Local Subsets Optimization

Aug 27, 2025

Abstract: Bidirectional motion planning often reduces planning time compared to its unidirectional counterparts. It requires connecting the forward and reverse search trees to form a continuous path. However, this process can fail and force a restart of the asymmetric bidirectional search due to the limitations of lazy-reverse search. To address this challenge, we propose Greedy GuILD Grafting Trees (G3T*), a novel path planner that grafts invalid edge connections at both ends to re-establish tree-based connectivity, enabling rapid path convergence. G3T* employs a greedy approach using the minimum Lebesgue measure of guided incremental local densification (GuILD) subsets to optimize paths efficiently. Furthermore, G3T* dynamically adjusts the sampling distribution between the informed set and GuILD subsets based on historical and current cost improvements, ensuring asymptotic optimality. These features enhance the forward search's growth towards the reverse tree, achieving faster convergence and lower solution costs. Benchmark experiments across dimensions from $\mathbb{R}^2$ to $\mathbb{R}^8$ and real-world robotic evaluations demonstrate G3T*'s superior performance compared to existing single-query sampling-based planners. A video showcasing our experimental results is available at: https://youtu.be/3mfCRL5SQIU
