Hamid Sadeghian

Learning a Shape-adaptive Assist-as-needed Rehabilitation Policy from Therapist-informed Input

Oct 06, 2025

Abstract:Therapist-in-the-loop robotic rehabilitation has shown great promise in enhancing rehabilitation outcomes by integrating the strengths of therapists and robotic systems. However, its broader adoption remains limited by insufficient interaction safety and limited adaptation capability. This article proposes a novel telerobotics-mediated framework that enables therapists to intuitively and safely deliver assist-as-needed (AAN) therapy, based on two primary contributions. First, our framework encodes the therapist-informed corrective force into via-points in a latent space, allowing the therapist to provide only minimal assistance while encouraging patients to maintain their own motion preferences. Second, a shape-adaptive AAN rehabilitation policy is learned to partially and progressively deform the reference trajectory for movement therapy based on encoded patient motion preferences and therapist-informed via-points. The effectiveness of the proposed shape-adaptive AAN strategy was validated on a telerobotic rehabilitation system using two representative tasks. The results demonstrate its practicality for remote AAN therapy and its superiority over two state-of-the-art methods in reducing corrective force and improving movement smoothness.

Tele-rehabilitation with online skill transfer and adaptation in $\mathbb{R}^3 \times \mathit{S}^3$

Oct 01, 2025

Abstract:This paper proposes a tele-teaching framework for the domain of robot-assisted tele-rehabilitation. The system connects two robotic manipulators, one on the therapist side and one on the patient side, via bilateral teleoperation, enabling a therapist to remotely demonstrate rehabilitation exercises that are executed by the patient-side robot. A 6-DoF Dynamical Movement Primitives formulation is employed to jointly encode translational and rotational motions in $\mathbb{R}^3 \times \mathit{S}^3$ space, ensuring accurate trajectory reproduction. The framework supports smooth transitions between therapist-led guidance and passive patient training, while allowing adaptive adjustment of motion. Experiments with 7-DoF manipulators demonstrate the feasibility of the approach, highlighting its potential for personalized and remotely supervised rehabilitation.
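Encoding rotations in $\mathit{S}^3$ usually requires an orientation error that lives in $\mathbb{R}^3$. The abstract does not give the paper's exact formulation, so the following is only a minimal sketch of the quaternion logarithmic map that is commonly used for this purpose. All function names are hypothetical, and quaternions are assumed to be unit-norm tuples in `(w, x, y, z)` order.

```python
import math

def quat_mul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )

def quat_conj(q):
    """Conjugate (inverse for unit quaternions)."""
    w, x, y, z = q
    return (w, -x, -y, -z)

def quat_log(q):
    """Log map of a unit quaternion to R^3 (half the rotation vector)."""
    w, x, y, z = q
    n = math.sqrt(x * x + y * y + z * z)
    if n < 1e-12:
        return (0.0, 0.0, 0.0)  # no rotation
    half_angle = math.atan2(n, w)
    s = half_angle / n
    return (s * x, s * y, s * z)

def orientation_error(q_goal, q):
    """Rotation vector (rad) that takes orientation q to q_goal."""
    d = quat_mul(q_goal, quat_conj(q))
    if d[0] < 0.0:
        # q and -q encode the same rotation; pick the shorter path.
        d = tuple(-c for c in d)
    return tuple(2.0 * c for c in quat_log(d))
```

For example, with `q` set to the identity and `q_goal` a 90 degree rotation about the z axis, `orientation_error` returns a vector along z with magnitude `pi / 2`.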

Prompt2Auto: From Motion Prompt to Automated Control via Geometry-Invariant One-Shot Gaussian Process Learning

Sep 17, 2025

Abstract:Learning from demonstration allows robots to acquire complex skills from human demonstrations, but conventional approaches often require large datasets and fail to generalize across coordinate transformations. In this paper, we propose Prompt2Auto, a geometry-invariant one-shot Gaussian process (GeoGP) learning framework that enables robots to perform human-guided automated control from a single motion prompt. A dataset-construction strategy based on coordinate transformations is introduced that enforces invariance to translation, rotation, and scaling, while supporting multi-step predictions. Moreover, GeoGP is robust to variations in the user's motion prompt and supports multi-skill autonomy. We validate the proposed approach through numerical simulations with the designed user graphical interface and two real-world robotic experiments, which demonstrate that the proposed method is effective, generalizes across tasks, and significantly reduces the demonstration burden. Project page is available at: https://prompt2auto.github.io
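To make the notion of invariance to translation, rotation, and scaling concrete, here is a small illustrative sketch. It is not the dataset-construction strategy from the paper, which the abstract does not detail. It simply maps a planar trajectory into a canonical frame, so that any two trajectories related by a similarity transform yield the same coordinates. The function name `canonicalize` and the choice of the start-to-end vector as reference are assumptions made for illustration.

```python
import math

def canonicalize(points):
    """Map a 2D trajectory to a frame where it starts at the origin
    and ends at (1, 0). Removes translation, rotation, and scale."""
    x0, y0 = points[0]
    shifted = [(x - x0, y - y0) for x, y in points]  # remove translation
    ex, ey = shifted[-1]
    length = math.hypot(ex, ey)
    if length == 0.0:
        raise ValueError("start and end points coincide")
    c, s = ex / length, ey / length  # cosine and sine of the end-point angle
    # Rotate by the negative end-point angle, then divide by the length.
    return [((x * c + y * s) / length, (-x * s + y * c) / length)
            for x, y in shifted]
```

A learner trained on canonicalized data sees the same input for a motion prompt regardless of where, at what angle, or at what size the user draws it.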

Constraint-Consistent Control of Task-Based and Kinematic RCM Constraints for Surgical Robots

Sep 17, 2025

Abstract:Robotic-assisted minimally invasive surgery (RAMIS) requires precise enforcement of the remote center of motion (RCM) constraint to ensure safe tool manipulation through a trocar. Achieving this constraint under dynamic and interactive conditions remains challenging, as existing control methods either lack robustness at the torque level or do not guarantee consistent RCM constraint satisfaction. This paper proposes a constraint-consistent torque controller that treats the RCM as a rheonomic holonomic constraint and embeds it into a projection-based inverse-dynamics framework. The method unifies task-level and kinematic formulations, enabling accurate tool-tip tracking while maintaining smooth and efficient torque behavior. The controller is validated both in simulation and on a RAMIS training platform, and is benchmarked against state-of-the-art approaches. Results show improved RCM constraint satisfaction, reduced required torque, and smoother joint torques through the consistency formulation, with robust performance under clinically relevant scenarios including spiral trajectories, variable insertion depths, moving trocars, and human interaction. These findings demonstrate the potential of constraint-consistent torque control to enhance safety and reliability in surgical robotics. The project page is available at: https://rcmpc-cube.github.io
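RCM constraint satisfaction is typically quantified as the distance between the trocar point and the tool shaft. The sketch below shows one simple way to compute that deviation. It is a geometric illustration under assumed names (`rcm_deviation`, `shaft_start`, `shaft_end`, `trocar`), not the controller or the metric used in the paper.

```python
import math

def rcm_deviation(shaft_start, shaft_end, trocar):
    """Distance from the trocar point to the tool shaft segment.

    Returns (distance, lam), where lam in [0, 1] is the normalized
    position of the closest shaft point, a rough insertion-depth ratio.
    """
    d = [e - s for s, e in zip(shaft_start, shaft_end)]  # shaft direction
    v = [t - s for s, t in zip(shaft_start, trocar)]     # start -> trocar
    dd = sum(c * c for c in d)
    if dd == 0.0:
        raise ValueError("shaft has zero length")
    # Project the trocar onto the shaft line and clamp to the segment.
    lam = sum(a * b for a, b in zip(d, v)) / dd
    lam = min(1.0, max(0.0, lam))
    closest = [s + lam * c for s, c in zip(shaft_start, d)]
    return math.dist(closest, trocar), lam
```

A deviation of zero means the shaft passes exactly through the trocar. Because the trocar may move over time (the rheonomic case), `trocar` would be re-sampled at every control step.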

SharedAssembly: A Data Collection Approach via Shared Tele-Assembly

Mar 15, 2025

Abstract:Assembly is a fundamental skill for robots in both modern manufacturing and service robotics. Existing datasets aim to address the data bottleneck in training general-purpose robot models, but fall short of capturing contact-rich assembly tasks. To bridge this gap, we introduce SharedAssembly, a novel bilateral teleoperation approach with shared autonomy for scalable assembly execution and data collection. User studies demonstrate that the proposed approach enhances both success rates and efficiency, achieving a 97.0% success rate across various sub-millimeter-level assembly tasks. Notably, novice and intermediate users achieve performance comparable to that of experts using baseline teleoperation methods, which significantly facilitates large-scale data collection.

Visuo-Tactile Exploration of Unknown Rigid 3D Curvatures by Vision-Augmented Unified Force-Impedance Control

Aug 26, 2024

Abstract:Despite recent advancements in torque-controlled tactile robots, integrating them into manufacturing settings remains challenging, particularly in complex environments. Simplifying robotic skill programming for non-experts is crucial for increasing robot deployment in manufacturing. This work proposes an innovative approach, Vision-Augmented Unified Force-Impedance Control (VA-UFIC), aimed at intuitive visuo-tactile exploration of unknown 3D curvatures. VA-UFIC stands out by seamlessly integrating vision and tactile data, enabling the exploration of diverse contact shapes in three dimensions, including point contacts, flat contacts with concave and convex curvatures, and scenarios involving contact loss. A pivotal component of our method is a robust online contact alignment monitoring system that considers tactile error, local surface curvature, and orientation, facilitating adaptive adjustments of robot stiffness and force regulation during exploration. We introduce virtual energy tanks within the control framework to ensure safety and stability, effectively addressing inherent safety concerns in visuo-tactile exploration. Evaluation using a Franka Emika research robot demonstrates the efficacy of VA-UFIC in exploring unknown 3D curvatures while adhering to arbitrarily defined force-motion policies. By seamlessly integrating vision and tactile sensing, VA-UFIC offers a promising avenue for intuitive exploration of complex environments, with potential applications spanning manufacturing, inspection, and beyond.

Tactile-Morph Skills: Energy-Based Control Meets Data-Driven Learning

Aug 23, 2024

Abstract:Robotic manipulation is essential for modernizing factories and automating industrial tasks like polishing, which require advanced tactile abilities. These robots must be easily set up, safely work with humans, learn tasks autonomously, and transfer skills to similar tasks. Addressing these needs, we introduce the tactile-morph skill framework, which integrates unified force-impedance control with data-driven learning. Our system adjusts robot movements and force application based on estimated energy levels for the desired trajectory and force profile, ensuring safety by stopping if energy allocated for the control runs out. Using a Temporal Convolutional Network, we estimate the energy distribution for a given motion and force profile, enabling skill transfer across different tasks and surfaces. Our approach maintains stability and performance even on unfamiliar geometries with similar friction characteristics, demonstrating improved accuracy, zero-shot transferable performance, and enhanced safety in real-world scenarios. This framework promises to enhance robotic capabilities in industrial settings, making intelligent robots more accessible and valuable.
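The idea of stopping when the energy allocated to the controller runs out can be illustrated with a very small energy-tank model. This is a simplified sketch under stated assumptions, not the framework's implementation: the class `EnergyTank`, the helper `run`, and the fixed power profile are all hypothetical, and the learned energy estimate is replaced by a constant initial budget.

```python
class EnergyTank:
    """Finite energy budget (joules) that a controller may spend."""

    def __init__(self, initial_energy, min_energy=0.0):
        self.energy = initial_energy
        self.min_energy = min_energy

    def step(self, power, dt):
        """Try to spend power * dt joules; return False if not affordable."""
        requested = power * dt
        if self.energy - requested < self.min_energy:
            return False  # budget exhausted: the caller should stop
        self.energy -= requested
        return True

def run(tank, power_profile, dt):
    """Count control steps executed before the tank forces a stop."""
    steps = 0
    for power in power_profile:
        if not tank.step(power, dt):
            break
        steps += 1
    return steps
```

With a budget of 1.0 J and a constant 2.0 W demand sampled every 0.125 s, each step costs 0.25 J, so `run` executes four steps and then halts.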

An Integrated Programmable CPG with Bounded Output

Apr 16, 2022

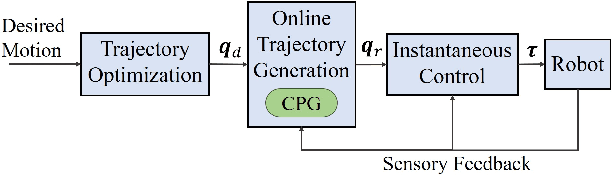

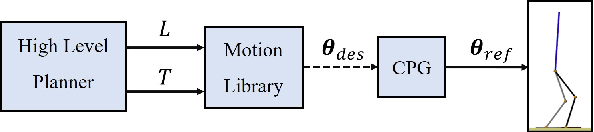

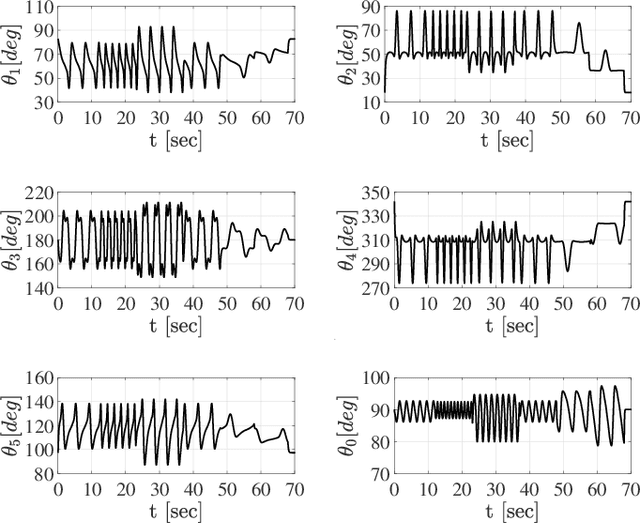

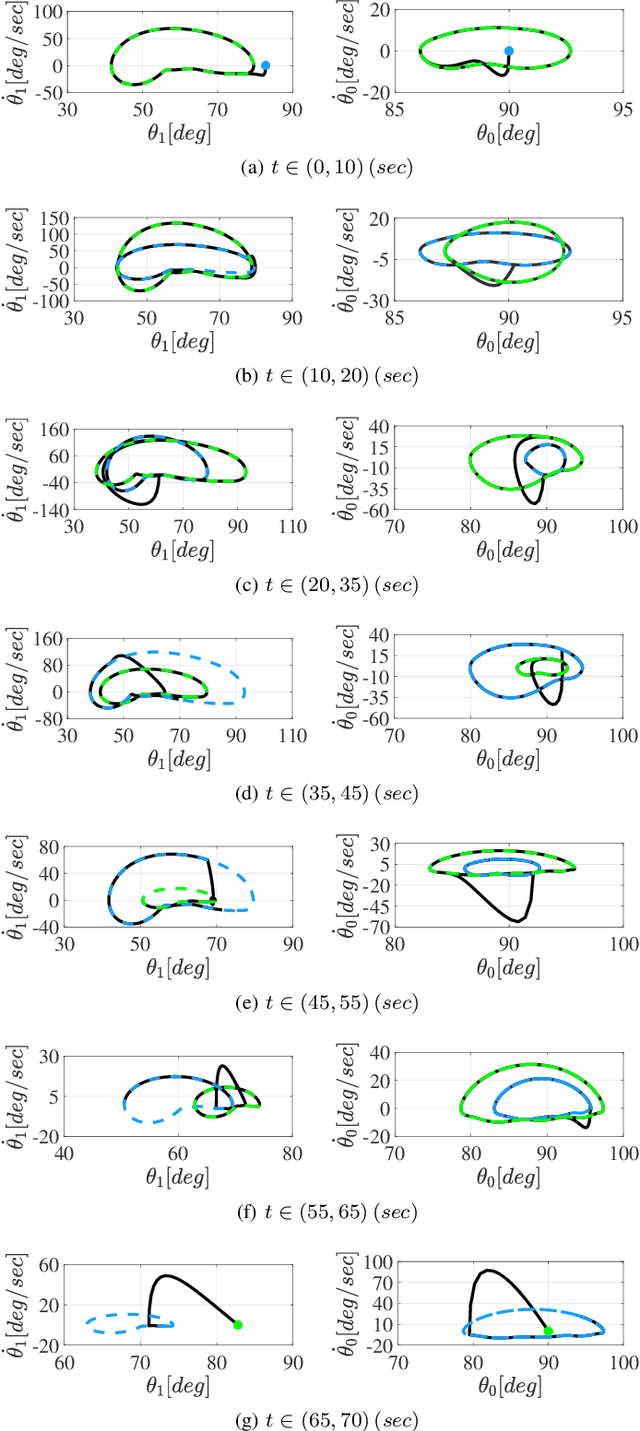

Abstract:Cyclic motions are fundamental patterns in robotic applications including industrial manipulation and legged robot locomotion. This paper proposes an approach for the online modulation of cyclic motions in robotic applications. For this purpose, we present an integrated programmable Central Pattern Generator (CPG) for the online generation of the reference joint trajectory of a robotic system out of a library of desired periodic motions. The reference trajectory is then followed by the lower-level controller of the robot. The proposed CPG generates a smooth reference joint trajectory that converges to the desired one while respecting the position and velocity joint limits of the robot. The integrated programmable CPG consists of one novel bounded-output programmable oscillator. We design the programmable oscillator to encode the desired multidimensional periodic trajectory as a stable limit cycle. We also use the state transformation method to ensure that the oscillator's output and its first time derivative respect the joint position and velocity limits of the robot. Using Lyapunov-based arguments, we prove that the proposed CPG guarantees global stability and convergence to the desired trajectory. The effectiveness of the proposed integrated CPG for trajectory generation is shown in a passive rehabilitation scenario on the Kuka iiwa robot arm, and also in a walking simulation on a seven-link bipedal robot.

* https://ieeexplore.ieee.org/document/9756235
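The bounded-output idea in the CPG abstract above can be illustrated with a toy example. The sketch below is not the oscillator proposed in the paper: it integrates a standard Hopf oscillator with forward Euler and passes its state through a `tanh` transformation, so the generated joint reference stays strictly inside the position limits. Velocity limits are not handled here, and the function name and all parameter values are assumptions.

```python
import math

def simulate_bounded_oscillator(q_min, q_max, mu=1.0, omega=2.0 * math.pi,
                                dt=1e-3, steps=5000, x0=0.1, y0=0.0):
    """Hopf oscillator whose output is squashed into (q_min, q_max).

    Returns (outputs, final_radius). The limit cycle has radius sqrt(mu).
    """
    mid = 0.5 * (q_max + q_min)
    half = 0.5 * (q_max - q_min)
    x, y = x0, y0
    outputs = []
    for _ in range(steps):
        r2 = x * x + y * y
        # Hopf dynamics: radial convergence to sqrt(mu), rotation at omega.
        dx = (mu - r2) * x - omega * y
        dy = (mu - r2) * y + omega * x
        x += dt * dx
        y += dt * dy
        # State transformation: tanh maps any x into (-1, 1).
        outputs.append(mid + half * math.tanh(x))
    return outputs, math.hypot(x, y)
```

Because `tanh` saturates, the reference cannot leave the joint range even if the internal state is perturbed, which is the property the bounded-output design is meant to provide.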

Discrimination and characterization of Parkinsonian rest tremors by analyzing long-term correlations and multifractal signatures

May 15, 2015

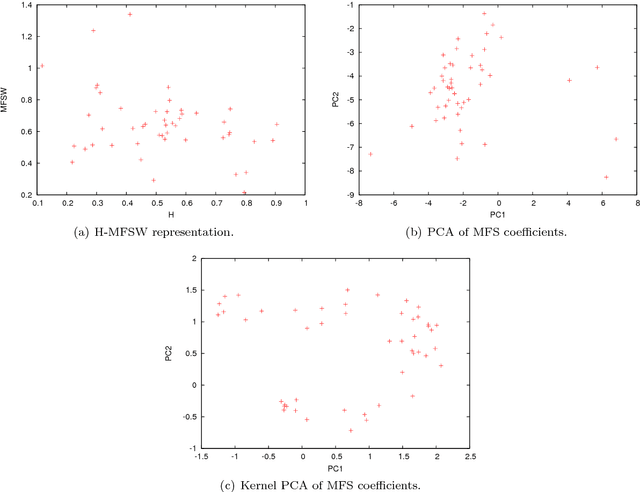

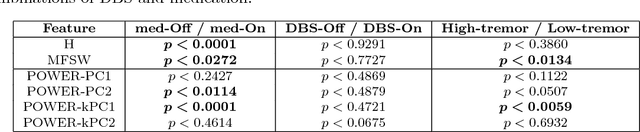

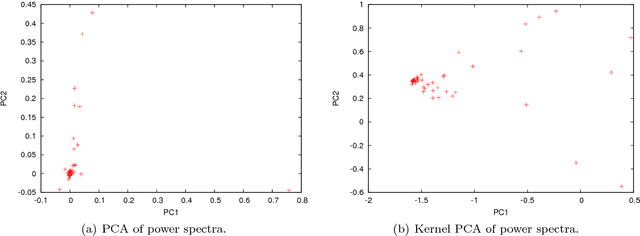

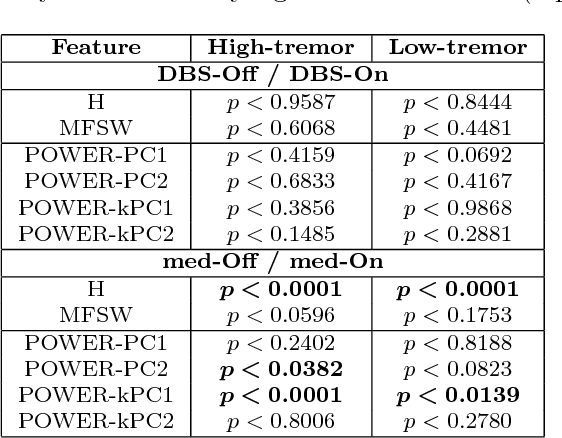

Abstract:In this paper, we analyze 48 signals of rest tremor velocity related to 12 distinct subjects affected by Parkinson's disease. The subjects belong to two different groups, formed by four and eight subjects with, respectively, high- and low-amplitude rest tremors. Each subject is tested in four settings, given by combining the use of deep brain stimulation and L-DOPA medication. We develop two main feature-based representations of such signals, which are obtained by considering (i) the long-term correlations and multifractal properties, and (ii) the power spectra. The feature-based representations are initially utilized for the purpose of characterizing the subjects under different settings. In agreement with previous studies, we show that deep brain stimulation does not significantly characterize either of the two groups, regardless of the adopted representation. On the other hand, the medication effect yields statistically significant differences in both high- and low-amplitude tremor groups. We subsequently test several different instances of the two feature-based representations of the signals in the setting of supervised classification and (nonlinear) feature transformation. We consider three different classification problems, involving the recognition of (i) the presence of medication, (ii) the use of deep brain stimulation, and (iii) the membership in the high- and low-amplitude tremor groups. Classification results show that the use of medication can be discriminated with higher accuracy, considering many of the feature-based representations. Notably, we show that the best results are obtained with a parsimonious, two-dimensional representation encoding the long-term correlations and multifractal character of the signals.
