Yansong Wu

Direction Informed Trees (DIT*): Optimal Path Planning via Direction Filter and Direction Cost Heuristic

Aug 26, 2025

Abstract:Optimal path planning requires finding a series of feasible states from the starting point to the goal to optimize objectives. Popular path planning algorithms, such as Effort Informed Trees (EIT*), employ effort heuristics to guide the search. Effective heuristics are accurate and computationally efficient, but achieving both can be challenging due to their conflicting nature. This paper proposes Direction Informed Trees (DIT*), a sampling-based planner that focuses on optimizing the search direction for each edge, resulting in goal bias during exploration. We define edges as generalized vectors and integrate similarity indexes to establish a directional filter that selects the nearest neighbors and estimates direction costs. The estimated direction cost heuristics are utilized in edge evaluation. This strategy allows the exploration to share directional information efficiently. DIT* converges faster than existing single-query, sampling-based planners on tested problems in R^4 to R^16 and has been demonstrated in real-world environments with various planning tasks. A video showcasing our experimental results is available at: https://youtu.be/2SX6QT2NOek
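The directional filter described in this abstract can be illustrated with a minimal sketch. It assumes cosine similarity as the similarity index and the straight line to the goal as the reference direction; neither choice is confirmed by the abstract, and the function names `cosine_similarity` and `direction_filter` are hypothetical, not taken from the paper.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def direction_filter(state, neighbors, goal, k):
    """Keep the k neighbors whose edge from `state` points most toward `goal`.

    Assumption: the reference direction is the vector from state to goal.
    Returns a list of (similarity, neighbor) pairs, best first.
    """
    to_goal = [g - s for s, g in zip(state, goal)]
    scored = []
    for neighbor in neighbors:
        edge = [n - s for s, n in zip(state, neighbor)]
        scored.append((cosine_similarity(edge, to_goal), neighbor))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Illustrative usage in R^4 with made-up states.
start = (0.0, 0.0, 0.0, 0.0)
goal = (1.0, 0.0, 0.0, 0.0)
candidates = [(1.0, 0.0, 0.0, 0.0), (0.0, 1.0, 0.0, 0.0), (-1.0, 0.0, 0.0, 0.0)]
selected = direction_filter(start, candidates, goal, k=2)
```

In this sketch, a similarity close to 1 marks an edge that heads toward the goal, so one plausible way to derive a direction cost would be `1 - similarity`; how DIT* actually computes that cost is not stated in the abstract.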

Pretrained Bayesian Non-parametric Knowledge Prior in Robotic Long-Horizon Reinforcement Learning

Mar 27, 2025

Abstract:Reinforcement learning (RL) methods typically learn new tasks from scratch, often disregarding prior knowledge that could accelerate the learning process. While some methods incorporate previously learned skills, they usually rely on a fixed structure, such as a single Gaussian distribution, to define skill priors. This rigid assumption can restrict the diversity and flexibility of skills, particularly in complex, long-horizon tasks. In this work, we introduce a method that models potential primitive skill motions as having non-parametric properties with an unknown number of underlying features. We utilize a Bayesian non-parametric model, specifically Dirichlet Process Mixtures, enhanced with birth and merge heuristics, to pre-train a skill prior that effectively captures the diverse nature of skills. Additionally, the learned skills are explicitly trackable within the prior space, enhancing interpretability and control. By integrating this flexible skill prior into an RL framework, our approach surpasses existing methods in long-horizon manipulation tasks, enabling more efficient skill transfer and task success in complex environments. Our findings show that a richer, non-parametric representation of skill priors significantly improves both the learning and execution of challenging robotic tasks. All data, code, and videos are available at https://ghiara.github.io/HELIOS/.

SharedAssembly: A Data Collection Approach via Shared Tele-Assembly

Mar 15, 2025

Abstract:Assembly is a fundamental skill for robots in both modern manufacturing and service robotics. Existing datasets aim to address the data bottleneck in training general-purpose robot models but fall short of capturing contact-rich assembly tasks. To bridge this gap, we introduce SharedAssembly, a novel bilateral teleoperation approach with shared autonomy for scalable assembly execution and data collection. User studies demonstrate that the proposed approach enhances both success rates and efficiency, achieving a 97.0% success rate across various sub-millimeter-level assembly tasks. Notably, novice and intermediate users achieve performance comparable to experts using baseline teleoperation methods, significantly enhancing large-scale data collection.

TacDiffusion: Force-domain Diffusion Policy for Precise Tactile Manipulation

Sep 17, 2024

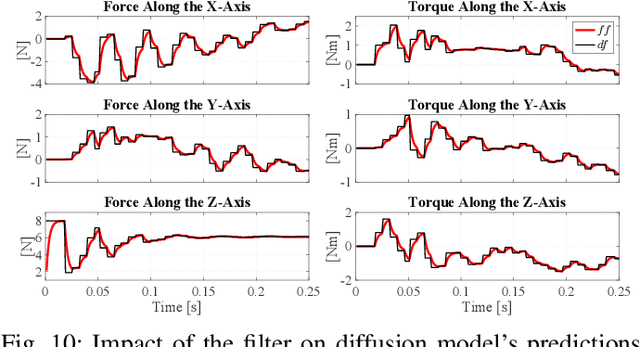

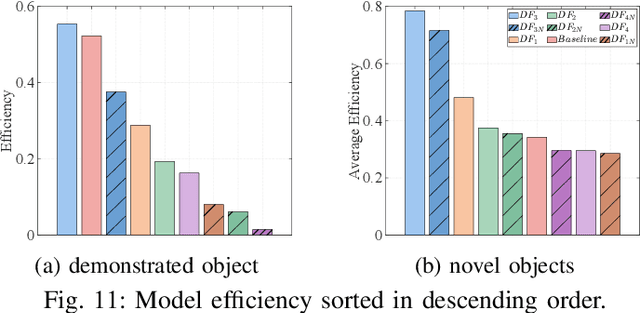

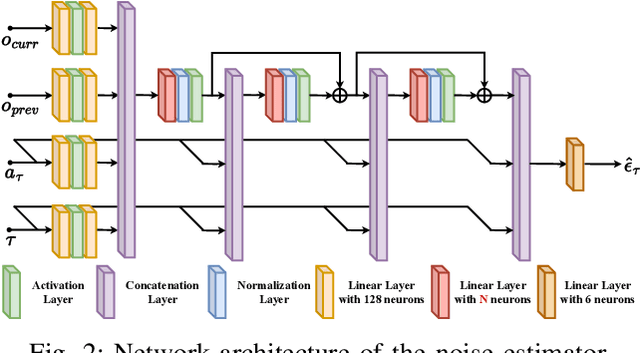

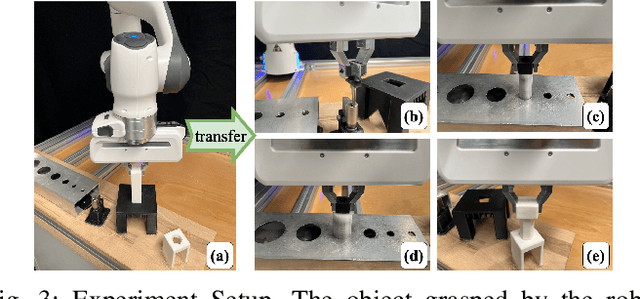

Abstract:Assembly is a crucial skill for robots in both modern manufacturing and service robotics. However, mastering transferable insertion skills that can handle a variety of high-precision assembly tasks remains a significant challenge. This paper presents a novel framework that utilizes diffusion models to generate 6D wrench for high-precision tactile robotic insertion tasks. It learns from demonstrations performed on a single task and achieves a zero-shot transfer success rate of 95.7% across various novel high-precision tasks. Our method effectively inherits the self-adaptability demonstrated by our previous work. In this framework, we address the frequency misalignment between the diffusion policy and the real-time control loop with a dynamic system-based filter, significantly improving the task success rate by 9.15%. Furthermore, we provide a practical guideline regarding the trade-off between diffusion models' inference ability and speed.

LLM as BT-Planner: Leveraging LLMs for Behavior Tree Generation in Robot Task Planning

Sep 16, 2024

Abstract:Robotic assembly tasks are open challenges due to the long task horizon and complex part relations. Behavior trees (BTs) are increasingly used in robot task planning for their modularity and flexibility, but manually designing them can be effort-intensive. Large language models (LLMs) have recently been applied in robotic task planning for generating action sequences, but their ability to generate BTs has not been fully investigated. To this end, we propose LLM as BT-planner, a novel framework to leverage LLMs for BT generation in robotic assembly task planning and execution. Four in-context learning methods are introduced to utilize the natural language processing and inference capabilities of LLMs to produce task plans in BT format, reducing manual effort and ensuring robustness and comprehensibility. We also evaluate the performance of fine-tuned, fewer-parameter LLMs on the same tasks. Experiments in simulated and real-world settings show that our framework enhances LLMs' performance in BT generation, improving success rates through in-context learning and supervised fine-tuning.

Behavior Tree Generation using Large Language Models for Sequential Manipulation Planning with Human Instructions and Feedback

Sep 14, 2024

Abstract:In this work, we propose an LLM-based BT generation framework to leverage the strengths of both for sequential manipulation planning. To enable human-robot collaborative task planning and enhance intuitive robot programming by nonexperts, the framework takes human instructions to initiate the generation of action sequences and human feedback to refine BT generation at runtime. All presented methods within the framework are tested on a real robotic assembly example, which uses a gear set model from the Siemens Robot Assembly Challenge. We use a single manipulator with a tool-changing mechanism, a common practice in flexible manufacturing, to facilitate robust grasping of a large variety of objects. Experimental results are evaluated regarding success rate, logical coherence, executability, time consumption, and token consumption. To our knowledge, this is the first human-guided LLM-based BT generation framework that unifies various plausible ways of using LLMs to fully generate BTs that are executable on the real testbed and take into account granular knowledge of tool use.

Tactile-Morph Skills: Energy-Based Control Meets Data-Driven Learning

Aug 23, 2024

Abstract:Robotic manipulation is essential for modernizing factories and automating industrial tasks like polishing, which require advanced tactile abilities. These robots must be easily set up, safely work with humans, learn tasks autonomously, and transfer skills to similar tasks. Addressing these needs, we introduce the tactile-morph skill framework, which integrates unified force-impedance control with data-driven learning. Our system adjusts robot movements and force application based on estimated energy levels for the desired trajectory and force profile, ensuring safety by stopping if energy allocated for the control runs out. Using a Temporal Convolutional Network, we estimate the energy distribution for a given motion and force profile, enabling skill transfer across different tasks and surfaces. Our approach maintains stability and performance even on unfamiliar geometries with similar friction characteristics, demonstrating improved accuracy, zero-shot transferable performance, and enhanced safety in real-world scenarios. This framework promises to enhance robotic capabilities in industrial settings, making intelligent robots more accessible and valuable.

Real-time Contact State Estimation in Shape Control of Deformable Linear Objects under Small Environmental Constraints

Jan 30, 2024

Abstract:Controlling the shape of deformable linear objects using robots and constraints provided by environmental fixtures has diverse industrial applications. In order to establish robust contacts with these fixtures, accurate estimation of the contact state is essential for preventing and rectifying potential anomalies. However, this task is challenging due to the small sizes of fixtures, the requirement for real-time performance, and the infinite degrees of freedom of the deformable linear objects. In this paper, we propose a real-time approach for estimating both contact establishment and subsequent changes by leveraging the dependency between the applied and detected contact force on the deformable linear objects. We seamlessly integrate this method into the robot control loop and achieve an adaptive shape control framework which avoids, detects and corrects anomalies automatically. Real-world experiments validate the robustness and effectiveness of our contact estimation approach across various scenarios, significantly increasing the success rate of shape control processes.

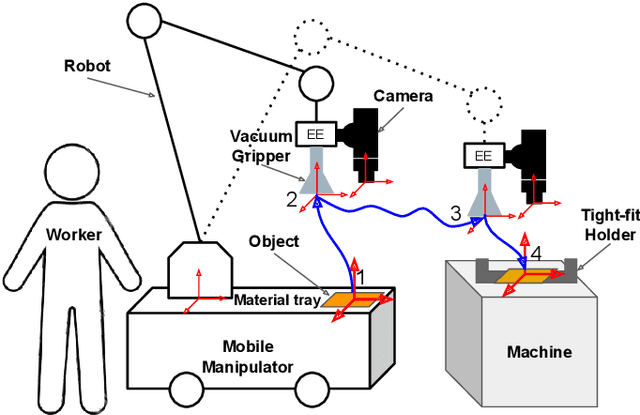

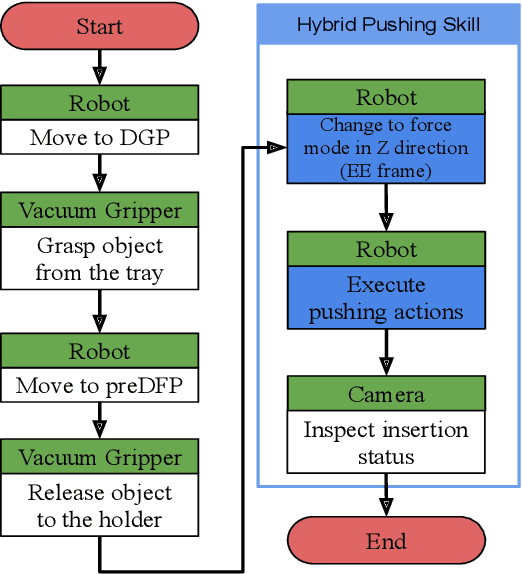

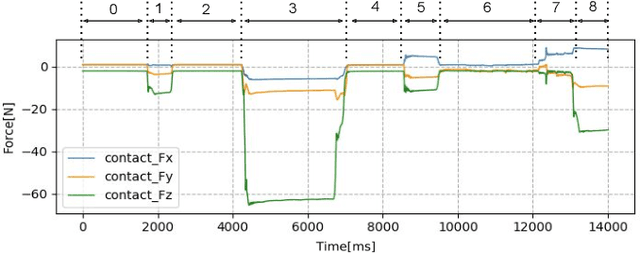

Maximizing the Use of Environmental Constraints: A Pushing-Based Hybrid Position/Force Assembly Skill for Contact-Rich Tasks

Aug 12, 2022

Abstract:The need for contact-rich tasks is rapidly growing in modern manufacturing settings. However, few traditional robotic assembly skills consider environmental constraints during task execution, and most of them use these constraints as termination conditions. In this study, we present a pushing-based hybrid position/force assembly skill that can maximize environmental constraints during task execution. To the best of our knowledge, this is the first work that considers using pushing actions during the execution of the assembly tasks. We have proved that our skill can maximize the utilization of environmental constraints in assembly task experiments on a mobile manipulator system, achieving a 100% success rate in the executions.

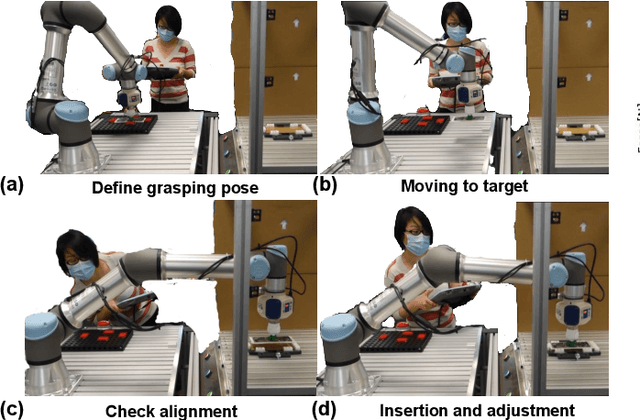

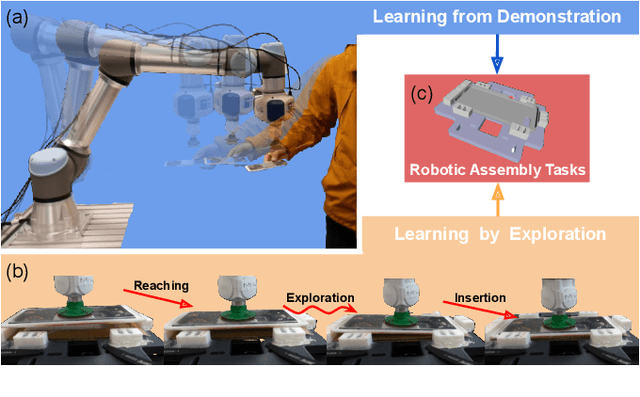

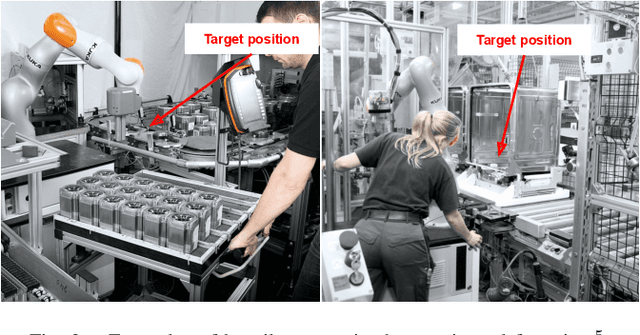

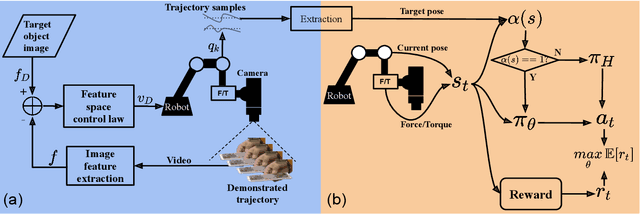

Combining Learning from Demonstration with Learning by Exploration to Facilitate Contact-Rich Tasks

Mar 10, 2021

Abstract:Collaborative robots are expected to be able to work alongside humans and in some cases directly replace existing human workers, thus effectively responding to rapid assembly line changes. Current methods for programming contact-rich tasks, especially in heavily constrained space, tend to be fairly inefficient. Therefore, faster and more intuitive approaches to robot teaching are urgently required. This work focuses on combining visual servoing based learning from demonstration (LfD) and force-based learning by exploration (LbE), to enable fast and intuitive programming of contact-rich tasks with minimal user effort required. Two learning approaches were developed and integrated into a framework: one relying on human-to-robot motion mapping (the visual servoing approach) and one on force-based reinforcement learning. The developed framework implements the non-contact demonstration teaching method based on the visual servoing approach and optimizes the demonstrated robot target positions according to the detected contact state. The framework has been compared with the two most commonly used baseline techniques, pendant-based teaching and hand-guiding teaching. The efficiency and reliability of the framework have been validated through comparison experiments involving the teaching and execution of contact-rich tasks. The framework proposed in this paper has performed the best in terms of teaching time, execution success rate, risk of damage, and ease of use.
