Lin Cong

Reinforcement Learning Based Pushing and Grasping Objects from Ungraspable Poses

Feb 26, 2023

Abstract: Grasping an object that lies in an ungraspable pose, such as a book or another large flat object placed horizontally on a table, is a challenging task. Inspired by human manipulation, we address this problem by pushing the object to the edge of the table and then grasping it from the hanging part. In this paper, we develop a model-free Deep Reinforcement Learning framework to synergize pushing and grasping actions. We first pre-train a Variational Autoencoder to extract high-dimensional features of input scenario images. A single Proximal Policy Optimization algorithm with a common reward and shared Actor-Critic layers is employed to learn both pushing and grasping actions with high data efficiency. Experiments show that our single-network policy converges 2.5 times faster than a policy using two parallel networks. Moreover, experiments on unseen objects show that our policy generalizes to the challenging cases of objects with curved surfaces and off-center, irregularly shaped objects. Lastly, our policy can be transferred to a real robot without fine-tuning by using CycleGAN for domain adaptation, and it outperforms the push-to-wall baseline.
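As a rough illustration of what training a single Proximal Policy Optimization network with shared Actor-Critic layers involves, the sketch below combines the standard clipped policy objective and a value loss into one scalar, so that one backward pass would update the shared layers. It is a minimal sketch under stated assumptions, not the authors' implementation: the function name `ppo_shared_loss`, the coefficients `clip_eps` and `value_coef`, and the plain-list inputs are all hypothetical choices made for illustration.

```python
import math


def ppo_shared_loss(logp_new, logp_old, advantages, values, returns,
                    clip_eps=0.2, value_coef=0.5):
    """Combined PPO loss for an actor and critic that share layers.

    All arguments are equal-length lists of floats (one entry per sample).
    clip_eps and value_coef are assumed defaults, not values from the paper.
    """
    n = len(advantages)
    policy_terms = []
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        # Probability ratio between the new and the old policy.
        ratio = math.exp(lp_new - lp_old)
        # Clip the ratio to [1 - eps, 1 + eps] to limit the policy step.
        clipped = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
        # Pessimistic (minimum) surrogate objective.
        policy_terms.append(min(ratio * adv, clipped * adv))
    # Negate because optimizers minimize while the surrogate is maximized.
    policy_loss = -sum(policy_terms) / n
    # Mean squared error between predicted values and empirical returns.
    value_loss = sum((v - r) ** 2 for v, r in zip(values, returns)) / n
    # One scalar loss: its gradient would flow through the shared layers.
    return policy_loss + value_coef * value_loss
```

Because both terms are summed into one loss, pushing and grasping samples collected under a common reward could be fed through the same function without separate networks.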

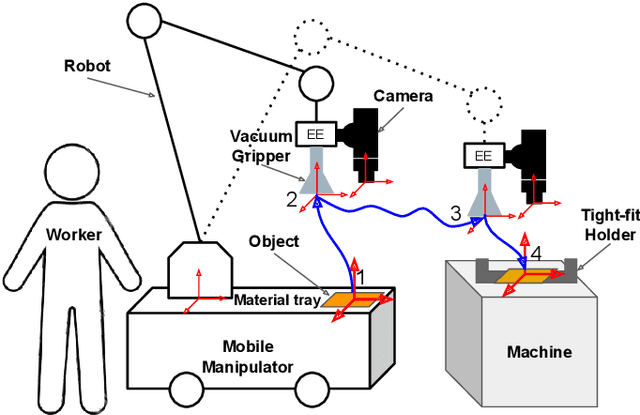

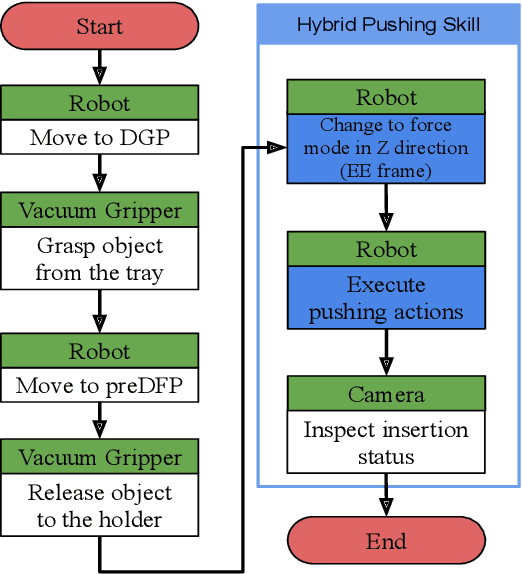

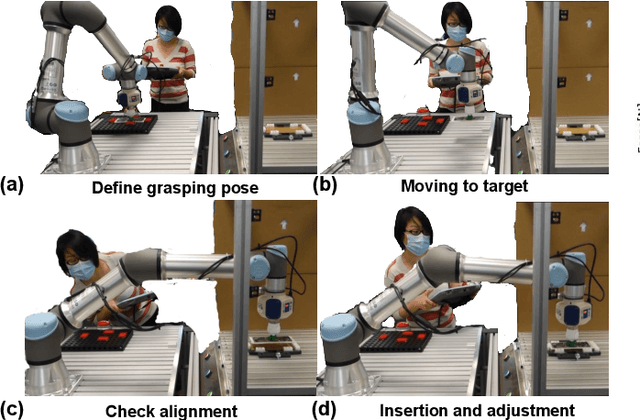

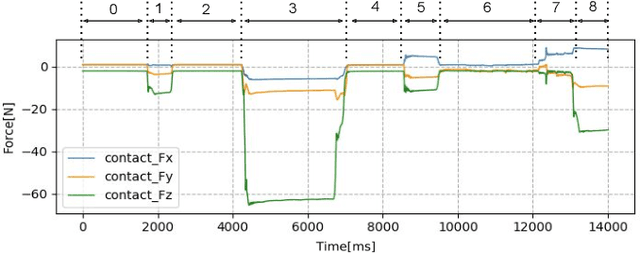

Maximizing the Use of Environmental Constraints: A Pushing-Based Hybrid Position/Force Assembly Skill for Contact-Rich Tasks

Aug 12, 2022

Abstract: The need for contact-rich tasks is rapidly growing in modern manufacturing settings. However, few traditional robotic assembly skills consider environmental constraints during task execution, and most of them use these constraints as termination conditions. In this study, we present a pushing-based hybrid position/force assembly skill that can maximize the use of environmental constraints during task execution. To the best of our knowledge, this is the first work that considers using pushing actions during the execution of assembly tasks. Assembly experiments on a mobile manipulator system show that our skill maximizes the utilization of environmental constraints and achieves a 100% success rate in the executions.

Self-Adapting Recurrent Models for Object Pushing from Learning in Simulation

Jul 27, 2020

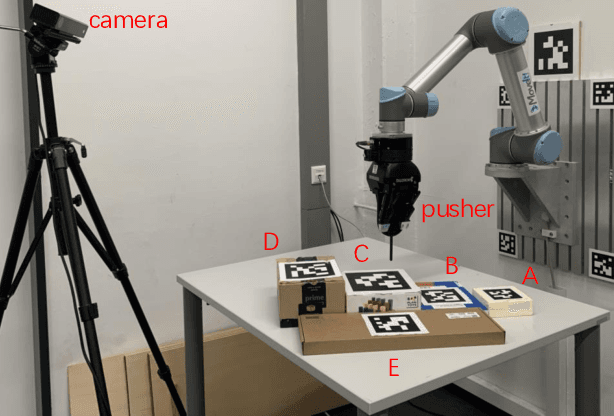

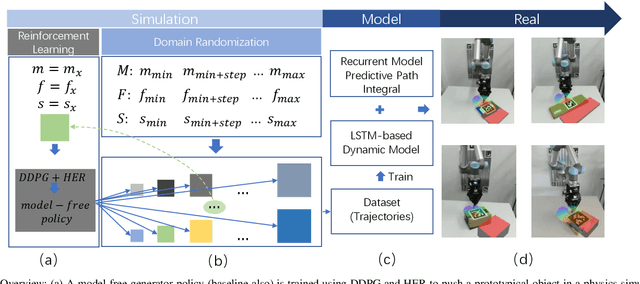

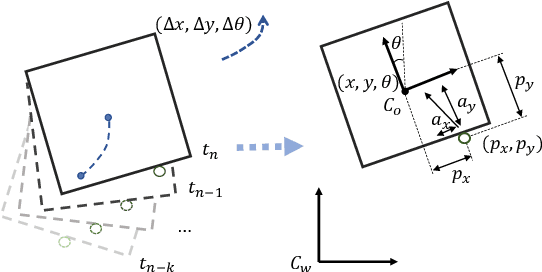

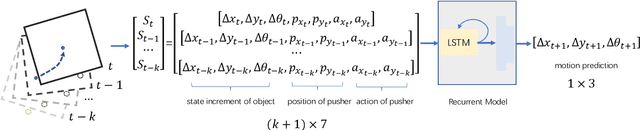

Abstract: Planar pushing remains a challenging research topic, where building the dynamic model of the interaction is the core issue. Even an accurate analytical dynamic model is inherently unstable because physics parameters such as inertia and friction can only be approximated. Data-driven models usually rely on large amounts of training data, but data collection is time-consuming when working with real robots. In this paper, we collect all training data in a physics simulator and build an LSTM-based model to fit the pushing dynamics. Domain Randomization is applied to capture the pushing trajectories of a generalized class of objects. When executed on the real robot, the trained recurrent model adapts to the tracked object's real dynamics within a few steps. We propose the Recurrent Model Predictive Path Integral (RMPPI) algorithm as a variation of the original MPPI approach, employing state-dependent recurrent models. As a comparison, we also train a Deep Deterministic Policy Gradient (DDPG) network as a model-free baseline, which is also used as the action generator in the data collection phase. During policy training, Hindsight Experience Replay is used to improve exploration efficiency. Pushing experiments on our UR5 platform demonstrate the model's adaptability and the effectiveness of the proposed framework.
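For readers unfamiliar with MPPI, the core update can be sketched as follows: sample noisy control sequences around a nominal one, score each with a rollout through a dynamics model, and average the noise with exponentially cost-weighted coefficients. This is a minimal sketch of the generic MPPI step only, not the RMPPI algorithm from the paper. The names `mppi_update` and `rollout_cost`, the scalar controls, and the defaults for `num_samples`, `sigma`, and `temperature` are assumptions for illustration; in the paper's setting, the rollout would go through the learned LSTM model rather than an arbitrary callable.

```python
import math
import random


def mppi_update(nominal, rollout_cost, num_samples=256, sigma=0.5,
                temperature=1.0, rng=None):
    """One generic MPPI update of a nominal control sequence.

    nominal: list of scalar controls over the planning horizon.
    rollout_cost: hypothetical callable mapping a control sequence to a
        scalar cost (stands in for a rollout through a dynamics model).
    """
    rng = rng or random.Random(0)
    horizon = len(nominal)
    # Sample Gaussian perturbations for every rollout and time step.
    noises = [[rng.gauss(0.0, sigma) for _ in range(horizon)]
              for _ in range(num_samples)]
    # Evaluate each perturbed control sequence.
    costs = [rollout_cost([u + e for u, e in zip(nominal, eps)])
             for eps in noises]
    # Subtract the minimum cost for numerical stability of the exponent.
    best = min(costs)
    weights = [math.exp(-(c - best) / temperature) for c in costs]
    total = sum(weights)
    # Shift each control by the cost-weighted average of its perturbations.
    return [u + sum(w * eps[t] for w, eps in zip(weights, noises)) / total
            for t, u in enumerate(nominal)]
```

A lower `temperature` concentrates the weights on the cheapest rollouts, while a higher one approaches a plain average of the sampled noise.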
