Andrew I. Cooper

MATTERIX: toward a digital twin for robotics-assisted chemistry laboratory automation

Jan 19, 2026

Abstract:Accelerated materials discovery is critical for addressing global challenges. However, developing new laboratory workflows relies heavily on real-world experimental trials, and this can hinder scalability because of the need for numerous physical make-and-test iterations. Here we present MATTERIX, a multiscale, graphics processing unit-accelerated robotic simulation framework designed to create high-fidelity digital twins of chemistry laboratories, thus accelerating workflow development. This multiscale digital twin simulates robotic physical manipulation, powder and liquid dynamics, device functionalities, heat transfer and basic chemical reaction kinetics. This is enabled by integrating realistic physics simulation and photorealistic rendering with a modular graphics processing unit-accelerated semantics engine, which models logical states and continuous behaviors to simulate chemistry workflows across different levels of abstraction. MATTERIX streamlines the creation of digital twin environments through open-source asset libraries and interfaces, while enabling flexible workflow design via hierarchical plan definition and a modular skill library that incorporates learning-based methods. Our approach demonstrates sim-to-real transfer in robotic chemistry setups, reducing reliance on costly real-world experiments and enabling the testing of hypothetical automated workflows in silico. The project website is available at https://accelerationconsortium.github.io/Matterix/.

Chemist Eye: A Visual Language Model-Powered System for Safety Monitoring and Robot Decision-Making in Self-Driving Laboratories

Aug 07, 2025

Abstract:The integration of robotics and automation into self-driving laboratories (SDLs) can introduce additional safety complexities, in addition to those that already apply to conventional research laboratories. Personal protective equipment (PPE) is an essential requirement for ensuring the safety and well-being of workers in laboratories, self-driving or otherwise. Fires are another important risk factor in chemical laboratories. In SDLs, fires that occur close to mobile robots, which use flammable lithium batteries, could have increased severity. Here, we present Chemist Eye, a distributed safety monitoring system designed to enhance situational awareness in SDLs. The system integrates multiple stations equipped with RGB, depth, and infrared cameras, designed to monitor incidents in SDLs. Chemist Eye is also designed to spot workers who have suffered a potential accident or medical emergency, PPE compliance and fire hazards. To do this, Chemist Eye uses decision-making driven by a vision-language model (VLM). Chemist Eye is designed for seamless integration, enabling real-time communication with robots. Based on the VLM recommendations, the system attempts to drive mobile robots away from potential fire locations, exits, or individuals not wearing PPE, and issues audible warnings where necessary. It also integrates with third-party messaging platforms to provide instant notifications to lab personnel. We tested Chemist Eye with real-world data from an SDL equipped with three mobile robots and found that the spotting of possible safety hazards and decision-making performances reached 97 % and 95 %, respectively.

FLIP: Flowability-Informed Powder Weighing

Jun 05, 2025

Abstract:Autonomous manipulation of powders remains a significant challenge for robotic automation in scientific laboratories. The inherent variability and complex physical interactions of powders in flow, coupled with variability in laboratory conditions, necessitate adaptive automation. This work introduces FLIP, a flowability-informed powder weighing framework designed to enhance robotic policy learning for granular material handling. Our key contribution lies in using material flowability, quantified by the angle of repose, to optimise physics-based simulations through Bayesian inference. This yields material-specific simulation environments capable of generating accurate training data, which reflects diverse powder behaviours, for training "robot chemists". Building on this, FLIP integrates quantified flowability into a curriculum learning strategy, fostering efficient acquisition of robust robotic policies by gradually introducing more challenging, less flowable powders. We validate the efficacy of our method on a robotic powder weighing task under real-world laboratory conditions. Experimental results show that FLIP with a curriculum strategy achieves a low dispensing error of 2.12 +/- 1.53 mg, outperforming methods that do not leverage flowability data, such as domain randomisation (6.11 +/- 3.92 mg). These results demonstrate FLIP's improved ability to generalise to previously unseen, more cohesive powders and to new target masses.
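
The curriculum idea in the abstract above (introduce less flowable powders gradually) can be illustrated with a minimal sketch. This is not the FLIP implementation: the `Powder` type, the `build_curriculum` helper, the powder names and the angle values are all hypothetical, and the only assumption taken from the abstract is that flowability is quantified by the angle of repose, with a larger angle meaning a less flowable, more cohesive powder.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Powder:
    """Hypothetical record for one powder in a training set."""
    name: str
    angle_of_repose_deg: float  # larger angle = less flowable


def build_curriculum(powders, n_stages):
    """Return cumulative training stages ordered from easy to hard.

    Powders are sorted by angle of repose (most flowable first). Each
    stage keeps the powders of the previous stage and adds the next,
    less flowable ones, so difficulty rises gradually.
    """
    if n_stages < 1:
        raise ValueError("n_stages must be at least 1")
    ordered = sorted(powders, key=lambda p: p.angle_of_repose_deg)
    stages = []
    for stage in range(1, n_stages + 1):
        cutoff = math.ceil(len(ordered) * stage / n_stages)
        stages.append(ordered[:cutoff])
    return stages


# Illustrative values only; these are not measurements from the paper.
example_powders = [
    Powder("powder_c", 42.0),
    Powder("powder_a", 28.0),
    Powder("powder_d", 50.0),
    Powder("powder_b", 35.0),
]
example_stages = build_curriculum(example_powders, n_stages=2)
stage_names = [[p.name for p in stage] for stage in example_stages]
```

With these illustrative values, the first stage contains the two most flowable powders and the second stage contains all four.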

An Open-source Capping Machine Suitable for Confined Spaces

Jun 04, 2025

Abstract:In the context of self-driving laboratories (SDLs), ensuring automated and error-free capping is crucial, as it is a ubiquitous step in sample preparation. Automated capping in SDLs can occur in both large and small workspaces (e.g., inside a fume hood). However, most commercial capping machines are designed primarily for large spaces and are often too bulky for confined environments. Moreover, many commercial products are closed-source, which can make their integration into fully autonomous workflows difficult. This paper introduces an open-source capping machine suitable for compact spaces, which also integrates a vision system that recognises capping failure. The capping and uncapping processes are repeated 100 times each to validate the machine's design and performance. As a result, the capping machine reached a 100 % success rate for capping and uncapping. Furthermore, the machine sealing capacities are evaluated by capping 12 vials filled with solvents of different vapour pressures: water, ethanol and acetone. The vials are then weighed every 3 hours for three days. The machine's performance is benchmarked against an industrial capping machine (a Chemspeed station) and manual capping. The vials capped with the prototype lost 0.54 % of their content weight on average per day, while the ones capped with the Chemspeed and manually lost 0.0078 % and 0.013 %, respectively. The results show that the capping machine is a reasonable alternative to industrial and manual capping, especially when space and budget are limitations in SDLs.
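
A per-day weight-loss figure like the ones quoted above can be derived from a series of periodic weighings. The sketch below shows one plausible way to do so, assuming the loss is taken between the first and last reading and expressed relative to the initial content mass; the abstract does not state the exact calculation, and the function name and the mass series are hypothetical.

```python
def mean_daily_loss_percent(content_masses_g, interval_hours=3.0):
    """Average percentage of the initial content mass lost per day.

    content_masses_g: content mass at each weighing, in grams, taken at a
    fixed interval (3 hours by default, as in the abstract).
    Assumption: loss is measured from the first to the last reading.
    """
    if len(content_masses_g) < 2:
        raise ValueError("need at least two weighings")
    initial = content_masses_g[0]
    final = content_masses_g[-1]
    if initial <= 0:
        raise ValueError("initial content mass must be positive")
    elapsed_days = (len(content_masses_g) - 1) * interval_hours / 24.0
    total_loss_percent = (initial - final) / initial * 100.0
    return total_loss_percent / elapsed_days


# Hypothetical series: 25 readings over three days, losing 1 mg per reading.
example_masses = [10.0 - 0.001 * i for i in range(25)]
example_rate = mean_daily_loss_percent(example_masses)
```

For this made-up series the vial loses 24 mg of a 10 g content over three days, which corresponds to about 0.08 % per day.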

Language-Based Bayesian Optimization Research Assistant (BORA)

Jan 27, 2025

Abstract:Many important scientific problems involve multivariate optimization coupled with slow and laborious experimental measurements. These complex, high-dimensional searches can be defined by non-convex optimization landscapes that resemble needle-in-a-haystack surfaces, leading to entrapment in local minima. Contextualizing optimizers with human domain knowledge is a powerful approach to guide searches to localized fruitful regions. However, this approach is susceptible to human confirmation bias and it is also challenging for domain experts to keep track of the rapidly expanding scientific literature. Here, we propose the use of Large Language Models (LLMs) for contextualizing Bayesian optimization (BO) via a hybrid optimization framework that intelligently and economically blends stochastic inference with domain knowledge-based insights from the LLM, which is used to suggest new, better-performing areas of the search space for exploration. Our method fosters user engagement by offering real-time commentary on the optimization progress, explaining the reasoning behind the search strategies. We validate the effectiveness of our approach on synthetic benchmarks with up to 15 independent variables and demonstrate the ability of LLMs to reason in four real-world experimental tasks where context-aware suggestions boost optimization performance substantially.

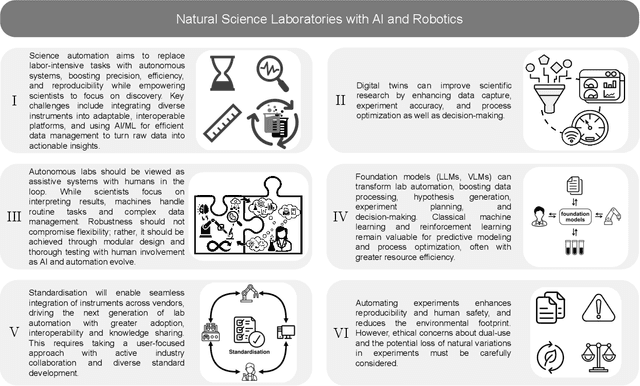

Accelerating Discovery in Natural Science Laboratories with AI and Robotics: Perspectives and Challenges from the 2024 IEEE ICRA Workshop, Yokohama, Japan

Jan 12, 2025

Abstract:Science laboratory automation enables accelerated discovery in life sciences and materials. However, it requires interdisciplinary collaboration to address challenges such as robust and flexible autonomy, reproducibility, throughput, standardization, the role of human scientists, and ethics. This article highlights these issues, reflecting perspectives from leading experts in laboratory automation across different disciplines of the natural sciences.

Powder-Bot: A Modular Autonomous Multi-Robot Workflow for Powder X-Ray Diffraction

Sep 01, 2023

Abstract:Powder X-ray diffraction (PXRD) is a key technique for the structural characterisation of solid-state materials, but compared with tasks such as liquid handling, its end-to-end automation is highly challenging. This is because coupling PXRD experiments with crystallisation comprises multiple solid handling steps that include sample recovery, sample preparation by grinding, sample mounting and, finally, collection of X-ray diffraction data. Each of these steps has individual technical challenges from an automation perspective, and hence no commercial instrument exists that can grow crystals, process them into a powder, mount them in a diffractometer, and collect PXRD data in an autonomous, closed-loop way. Here we present an automated robotic workflow to carry out autonomous PXRD experiments. The PXRD data collected for polymorphs of small organic compounds is comparable to that collected under the same conditions manually. Beyond accelerating PXRD experiments, this workflow involves 13 component steps and integrates three different types of robots, each from a separate supplier, illustrating the power of flexible, modular automation in complex, multitask laboratories.

HypBO: Expert-Guided Chemist-in-the-Loop Bayesian Search for New Materials

Aug 24, 2023

Abstract:Robotics and automation offer massive accelerations for solving intractable, multivariate scientific problems such as materials discovery, but the available search spaces can be dauntingly large. Bayesian optimization (BO) has emerged as a popular sample-efficient optimization engine, thriving in tasks where no analytic form of the target function/property is known. Here we exploit expert human knowledge in the form of hypotheses to direct Bayesian searches more quickly to promising regions of chemical space. Previous methods have used underlying distributions derived from existing experimental measurements, which is unfeasible for new, unexplored scientific tasks. Also, such distributions cannot capture intricate hypotheses. Our proposed method, which we call HypBO, uses expert human hypotheses to generate an improved seed of samples. Unpromising seeds are automatically discounted, while promising seeds are used to augment the surrogate model data, thus achieving better-informed sampling. This process continues in a global versus local search fashion, organized in a bilevel optimization framework. We validate the performance of our method on a range of synthetic functions and demonstrate its practical utility on a real chemical design task where the use of expert hypotheses accelerates the search performance significantly.

Leveraging Multi-modal Sensing for Robotic Insertion Tasks in R&D Laboratories

Jul 02, 2023

Abstract:Performing a large volume of experiments in chemistry labs creates repetitive actions that cost researchers time, so automating these routines is highly desirable. Previous experiments in robotic chemistry have performed high numbers of experiments autonomously; however, these processes rely on automated machines in all stages, from solid or liquid addition to analysis of the final product. In these systems, every transition between machines requires the robotic chemist to pick and place glass vials; however, this is currently performed using open-loop methods, which require all equipment used by the robot to be in well-defined, known locations. We seek to begin closing the loop in this vial handling process in a way that also fosters human-robot collaboration in the chemistry lab environment. To do this, the robot must be able to detect valid placement positions for the vials it is collecting, and reliably insert them into the detected locations. We create a single-modality visual method for estimating placement locations to provide a baseline before introducing two additional methods of feedback (force and tactile feedback). Our visual method uses a combination of classic computer vision methods and a CNN discriminator to detect possible insertion points; a vial is then grasped and positioned above an insertion point, and the multi-modal methods guide the final insertion movements using an efficient search pattern. Through our experiments, we show that the baseline insertion rate of 48.78% improves to 89.55% with the addition of our "force and vision" multi-modal feedback method.

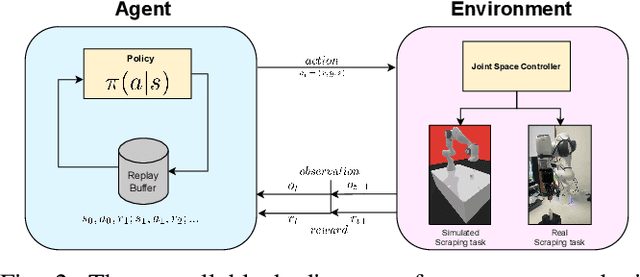

Accelerating Laboratory Automation Through Robot Skill Learning For Sample Scraping

Sep 29, 2022

Abstract:The potential use of robotics for laboratory experiments offers an attractive route to relieve scientists of tedious tasks while accelerating the process of obtaining new materials, which would greatly benefit work on topical issues such as climate change and disease risks worldwide. While some experimental workflows can already benefit from automation, sample preparation is still commonly carried out manually due to the high level of motor function required when dealing with heterogeneous systems, e.g., different tools, chemicals, and glassware. A fundamental workflow in chemical fields is crystallisation, where one application is polymorph screening, i.e., obtaining a three-dimensional molecular structure from a crystal. For this process, it is of utmost importance to recover as much of the sample as possible, since synthesising molecules is costly in both time and money. To this end, chemists have to scrape vials to retrieve sample contents prior to imaging plate transfer. Automating this process is challenging as it goes beyond robotic insertion tasks, owing to the fundamental requirement of executing fine-grained movements within a constrained environment, namely the sample vial. Motivated by how human chemists carry out this process of scraping powder from vials, our work proposes a model-free reinforcement learning method for learning a scraping policy, leading to a fully autonomous sample scraping procedure. To realise this, we first create a simulation environment with a Franka Emika Panda robot using a laboratory scraper, which is inserted into a simulated vial, to demonstrate how a scraping policy can be learned successfully. We then evaluate our method on a real robotic manipulator in laboratory settings, and show that our method can autonomously scrape powder across various setups.
