Matej Hoffmann

The body is not there to compute: Comment on "Informational embodiment: Computational role of information structure in codes and robots" by Pitti et al

Dec 28, 2025

Abstract:Applying the lens of computation and information has been instrumental in driving the technological progress of our civilization as well as in empowering our understanding of the world around us. The digital computer was and for many still is the leading metaphor for how our mind operates. Information theory (IT) has also been important in our understanding of how nervous systems encode and process information. The target article deploys information and computation to bodies: to understand why they have evolved in particular ways (animal bodies) and to design optimal bodies (robots). In this commentary, I argue that the main role of bodies is not to compute.

* Comment on Pitti, A., Austin, M., Nakajima, K., & Kuniyoshi, Y. (2025). Informational Embodiment: Computational role of information structure in codes and robots. Physics of Life Reviews 53, 262-276. https://doi.org/10.1016/j.plrev.2025.03.018. Also available as arXiv:2408.12950

BLANKET: Anonymizing Faces in Infant Video Recordings

Dec 17, 2025

Abstract:Ensuring the ethical use of video data involving human subjects, particularly infants, requires robust anonymization methods. We propose BLANKET (Baby-face Landmark-preserving ANonymization with Keypoint dEtection consisTency), a novel approach designed to anonymize infant faces in video recordings while preserving essential facial attributes. Our method comprises two stages. First, a new random face, compatible with the original identity, is generated via inpainting using a diffusion model. Second, the new identity is seamlessly incorporated into each video frame through temporally consistent face swapping with authentic expression transfer. The method is evaluated on a dataset of short video recordings of babies and is compared to the popular anonymization method, DeepPrivacy2. Key metrics assessed include the level of de-identification, preservation of facial attributes, impact on human pose estimation (as an example of a downstream task), and presence of artifacts. Both methods alter the identity, and our method outperforms DeepPrivacy2 in all other respects. The code is available as an easy-to-use anonymization demo at https://github.com/ctu-vras/blanket-infant-face-anonym.

* Project website: https://github.com/ctu-vras/blanket-infant-face-anonym

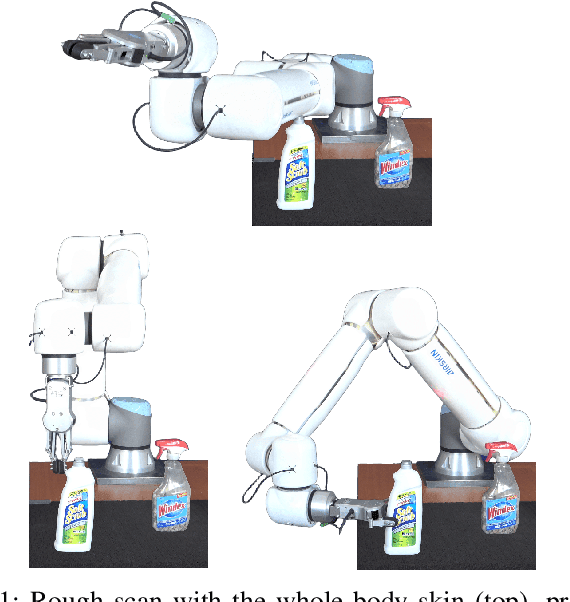

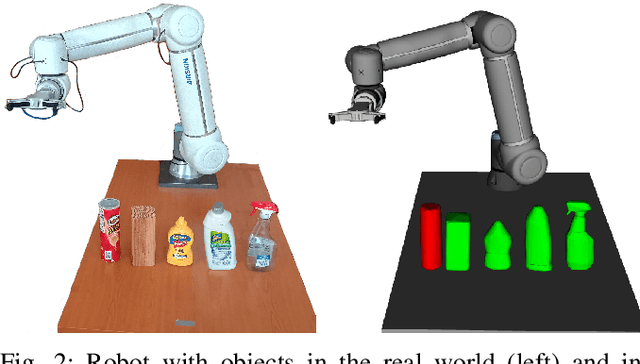

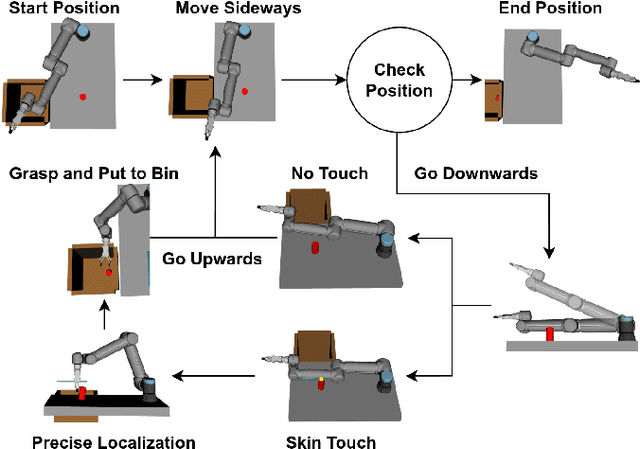

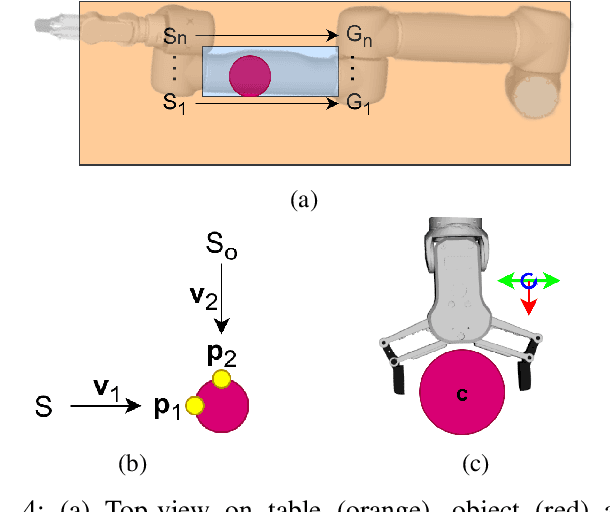

No Need to Look! Locating and Grasping Objects by a Robot Arm Covered with Sensitive Skin

Aug 25, 2025

Abstract:Locating and grasping of objects by robots is typically performed using visual sensors. Haptic feedback from contacts with the environment is only secondary if present at all. In this work, we explored an extreme case of searching for and grasping objects in the complete absence of visual input, relying on haptic feedback only. The main novelty lies in the use of contacts over the complete surface of a robot manipulator covered with sensitive skin. The search is divided into two phases: (1) coarse workspace exploration with the complete robot surface, followed by (2) precise localization using the end-effector equipped with a force/torque sensor. We systematically evaluated this method in simulation and on the real robot, demonstrating that diverse objects can be located, grasped, and put in a basket. The overall success rate on the real robot for one object was 85.7%, with failures occurring mainly while grasping specific objects. The method using whole-body contacts is six times faster compared to a baseline that uses haptic feedback only on the end-effector. We also show locating and grasping multiple objects on the table. This method is not restricted to our specific setup and can be deployed on any platform with the ability of sensing contacts over the entire body surface. This work holds promise for diverse applications in areas with challenging visual perception (due to lighting, dust, smoke, occlusion) such as in agriculture when fruits or vegetables need to be located inside foliage and picked.

Embodied AI in Machine Learning -- is it Really Embodied?

May 15, 2025

Abstract:Embodied Artificial Intelligence (Embodied AI) is gaining momentum in the machine learning communities with the goal of leveraging current progress in AI (deep learning, transformers, large language and visual-language models) to empower robots. In this chapter we put this work in the context of "Good Old-Fashioned Artificial Intelligence" (GOFAI) (Haugeland, 1989) and the behavior-based or embodied alternatives (R. A. Brooks 1991; Pfeifer and Scheier 2001). We claim that the AI-powered robots are only weakly embodied and inherit some of the problems of GOFAI. Moreover, we review and critically discuss the possibility of cross-embodiment learning (Padalkar et al. 2024). We identify fundamental roadblocks and propose directions on how to make progress.

A computational model of infant sensorimotor exploration in the mobile paradigm

Apr 24, 2025

Abstract:We present a computational model of the mechanisms that may determine infants' behavior in the "mobile paradigm". This paradigm has been used in developmental psychology to explore how infants learn the sensory effects of their actions. In this paradigm, a mobile (an articulated and movable object hanging above an infant's crib) is connected to one of the infant's limbs, prompting the infant to preferentially move that "connected" limb. This ability to detect a "sensorimotor contingency" is considered to be a foundational cognitive ability in development. To understand how infants learn sensorimotor contingencies, we built a model that attempts to replicate infant behavior. Our model incorporates a neural network, action-outcome prediction, exploration, motor noise, preferred activity level, and biologically-inspired motor control. We find that simulations with our model replicate the classic findings in the literature showing preferential movement of the connected limb. An interesting observation is that the model sometimes exhibits a burst of movement after the mobile is disconnected, casting light on a similar occasional finding in infants. In addition to these general findings, the simulations also replicate data from two recent more detailed studies using a connection with the mobile that was either gradual or all-or-none. A series of ablation studies further shows that the inclusion of mechanisms of action-outcome prediction, exploration, motor noise, and biologically-inspired motor control was essential for the model to correctly replicate infant behavior. This suggests that these components are also involved in infants' sensorimotor learning.

Robot Skin with Touch and Bend Sensing using Electrical Impedance Tomography

Mar 17, 2025

Abstract:Flexible electronic skins that simultaneously sense touch and bend are desired in several application areas, such as to cover articulated robot structures. This paper introduces a flexible tactile sensor based on Electrical Impedance Tomography (EIT), capable of simultaneously detecting and measuring contact forces and flexion of the sensor. The sensor integrates a magnetic hydrogel composite and utilizes EIT to reconstruct internal conductivity distributions. Real-time estimation is achieved through the one-step Gauss-Newton method, which dynamically updates reference voltages to accommodate sensor deformation. A convolutional neural network is employed to classify interactions, distinguishing between touch, bending, and idle states using pre-reconstructed images. Experimental results demonstrate an average touch localization error of 5.4 mm (SD 2.2 mm) and average bending angle estimation errors of 1.9° (SD 1.6°). The proposed adaptive reference method effectively distinguishes between single- and multi-touch scenarios while compensating for deformation effects. This makes the sensor a promising solution for multimodal sensing in robotics and human-robot collaboration.
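
For readers unfamiliar with the one-step Gauss-Newton method named in the abstract, its common textbook form for difference EIT is sketched below. This is the generic regularized update, not necessarily the exact variant used in the paper; the symbols $J$ (sensitivity matrix), $R$ (regularization matrix), $\lambda$ (regularization weight), $v$ (measured voltages), and $v_{\mathrm{ref}}$ (reference voltages) are assumptions introduced here for illustration.

```latex
\Delta\hat{\sigma} = \left(J^{\top} J + \lambda R\right)^{-1} J^{\top} \left(v - v_{\mathrm{ref}}\right)
```

Because the matrix $\left(J^{\top} J + \lambda R\right)^{-1} J^{\top}$ can be precomputed, each reconstruction reduces to a single matrix-vector product, which is what makes real-time estimation plausible. Under this reading, adapting to sensor deformation would amount to updating $v_{\mathrm{ref}}$ over time; the abstract does not state the update rule.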

Large-area Tomographic Tactile Skin with Air Pressure Sensing for Improved Force Estimation

Mar 17, 2025

Abstract:This paper presents a dual-channel tactile skin that integrates Electrical Impedance Tomography (EIT) with air pressure sensing to achieve accurate multi-contact force detection. The EIT layer provides spatial contact information, while the air pressure sensor delivers precise total force measurement. Our framework combines these complementary modalities through deep learning-based EIT image reconstruction, contact area segmentation, and force allocation based on relative conductivity intensities from EIT. The experiments demonstrated 15.1% average force estimation error in single-contact scenarios and 20.1% in multi-contact scenarios without extensive calibration data requirements. This approach effectively addresses the challenge of simultaneously localizing and quantifying multiple contact forces without requiring complex external calibration setups, paving the way for practical and scalable soft robotic skin applications.
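
The force allocation step described in the abstract can be illustrated with a minimal sketch. It assumes the simplest possible rule: the total force from the air pressure channel is split across segmented contact regions in proportion to each region's summed EIT conductivity intensity. The function name `allocate_force` and the zero-intensity fallback are hypothetical; the paper may use a different weighting.

```python
from typing import List, Sequence


def allocate_force(total_force: float, intensities: Sequence[float]) -> List[float]:
    """Split a total force across contacts in proportion to their intensities.

    total_force: total force from the pressure channel (assumed units: newtons).
    intensities: one non-negative EIT intensity value per segmented contact region.
    """
    if total_force < 0:
        raise ValueError("total_force must be non-negative")
    if any(value < 0 for value in intensities):
        raise ValueError("intensities must be non-negative")

    intensity_sum = sum(intensities)
    if intensity_sum == 0:
        # Assumption: with no measurable intensity, no force is attributed.
        return [0.0 for _ in intensities]

    return [total_force * value / intensity_sum for value in intensities]


# Two contacts, the first three times as intense as the second.
print(allocate_force(10.0, [3.0, 1.0]))  # [7.5, 2.5]
```

A proportional split keeps the per-contact estimates consistent with the measured total by construction, which is presumably why a separate total-force channel reduces the need for per-location calibration.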

MuBlE: MuJoCo and Blender simulation Environment and Benchmark for Task Planning in Robot Manipulation

Mar 04, 2025

Abstract:Current embodied reasoning agents struggle to plan for long-horizon tasks that require physically interacting with the world to obtain the necessary information (e.g. 'sort the objects from lightest to heaviest'). The improvement of the capabilities of such an agent is highly dependent on the availability of relevant training environments. In order to facilitate the development of such systems, we introduce a novel simulation environment (built on top of robosuite) that makes use of the MuJoCo physics engine and the high-quality renderer Blender to provide realistic visual observations that are also accurate to the physical state of the scene. It is the first simulator focusing on long-horizon robot manipulation tasks preserving accurate physics modeling. MuBlE can generate multimodal data for training and enable the design of closed-loop methods through environment interaction on two levels: visual-action loop and control-physics loop. Together with the simulator, we propose SHOP-VRB2, a new benchmark composed of 10 classes of multi-step reasoning scenarios that require simultaneous visual and physical measurements.

Wandering around: A bioinspired approach to visual attention through object motion sensitivity

Feb 10, 2025

Abstract:Active vision enables dynamic visual perception, offering an alternative to static feedforward architectures in computer vision, which rely on large datasets and high computational resources. Biological selective attention mechanisms allow agents to focus on salient Regions of Interest (ROIs), reducing computational demand while maintaining real-time responsiveness. Event-based cameras, inspired by the mammalian retina, enhance this capability by capturing asynchronous scene changes, enabling efficient low-latency processing. To distinguish moving objects while the event-based camera is in motion, the agent requires an object motion segmentation mechanism to accurately detect targets and center them in the visual field (fovea). Integrating event-based sensors with neuromorphic algorithms represents a paradigm shift, using Spiking Neural Networks to parallelize computation and adapt to dynamic environments. This work presents a Spiking Convolutional Neural Network bioinspired attention system for selective attention through object motion sensitivity. The system generates events via fixational eye movements using a Dynamic Vision Sensor integrated into the Speck neuromorphic hardware, mounted on a Pan-Tilt unit, to identify the ROI and saccade toward it. The system, characterized using ideal gratings and benchmarked against the Event Camera Motion Segmentation Dataset, reaches a mean IoU of 82.2% and a mean SSIM of 96% in multi-object motion segmentation. The detection of salient objects reaches 88.8% accuracy in office scenarios and 89.8% in low-light conditions on the Event-Assisted Low-Light Video Object Segmentation Dataset. A real-time demonstrator shows the system's 0.12 s response to dynamic scenes. Its learning-free design ensures robustness across perceptual scenes, making it a reliable foundation for real-time robotic applications and a basis for more complex architectures.
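
As a reminder of what the reported mean IoU measures, here is a minimal sketch of intersection over union for binary segmentation masks, averaged over several mask pairs. The function names `iou` and `mean_iou` and the convention of scoring two empty masks as 1.0 are assumptions made here; the abstract does not state how the benchmark averages (per frame or per object).

```python
from typing import Sequence, Tuple

Mask = Sequence[Sequence[int]]  # rows of 0/1 pixels


def iou(pred: Mask, target: Mask) -> float:
    """Intersection over union of two binary masks of equal shape."""
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Assumption: two empty masks agree perfectly.
    return intersection / union if union else 1.0


def mean_iou(pairs: Sequence[Tuple[Mask, Mask]]) -> float:
    """Average IoU over (prediction, ground truth) pairs."""
    return sum(iou(pred, target) for pred, target in pairs) / len(pairs)


predicted = [[1, 1, 0], [0, 0, 0]]
ground_truth = [[1, 0, 0], [1, 0, 0]]
print(iou(predicted, ground_truth))  # one shared pixel out of three marked
```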

Empirical Comparison of Four Stereoscopic Depth Sensing Cameras for Robotics Applications

Jan 13, 2025

Abstract:Depth sensing is an essential technology in robotics and many other fields. Many depth sensing (or RGB-D) cameras are available on the market and selecting the best one for your application can be challenging. In this work, we tested four stereoscopic RGB-D cameras that sense the distance by using two images from slightly different views. We empirically compared four cameras (Intel RealSense D435, Intel RealSense D455, StereoLabs ZED 2, and Luxonis OAK-D Pro) in three scenarios: (i) planar surface perception, (ii) plastic doll perception, (iii) household object perception (YCB dataset). We recorded and evaluated more than 3,000 RGB-D frames for each camera. For table-top robotics scenarios with distance to objects up to one meter, the best performance is provided by the D435 camera. For longer distances, the other three models perform better, making them more suitable for some mobile robotics applications. OAK-D Pro additionally offers integrated AI modules (e.g., object and human keypoint detection). ZED 2 is not a standalone device and requires a computer with a GPU for depth data acquisition. All data (more than 12,000 RGB-D frames) are publicly available at https://osf.io/f2seb.
