Lukas Rustler

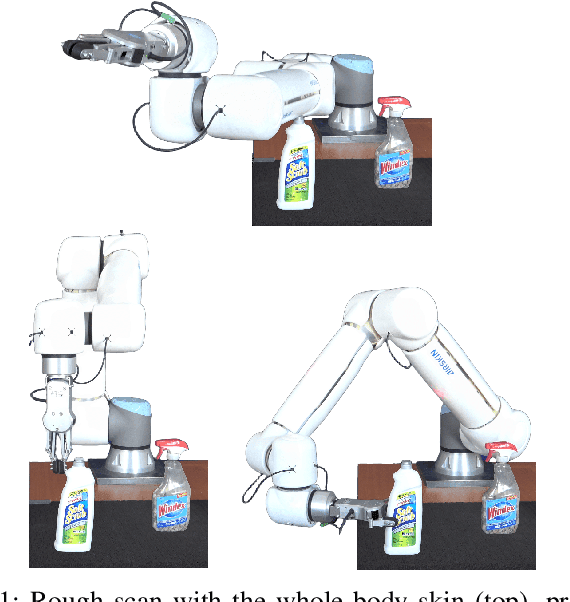

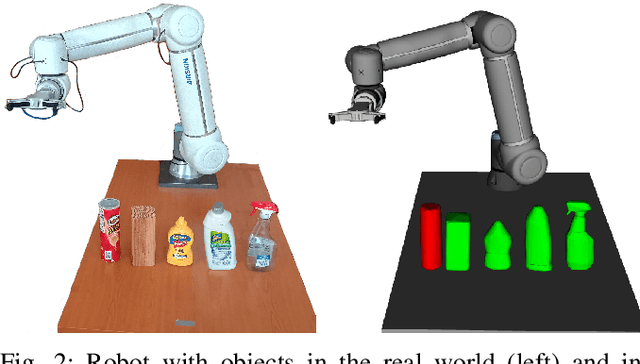

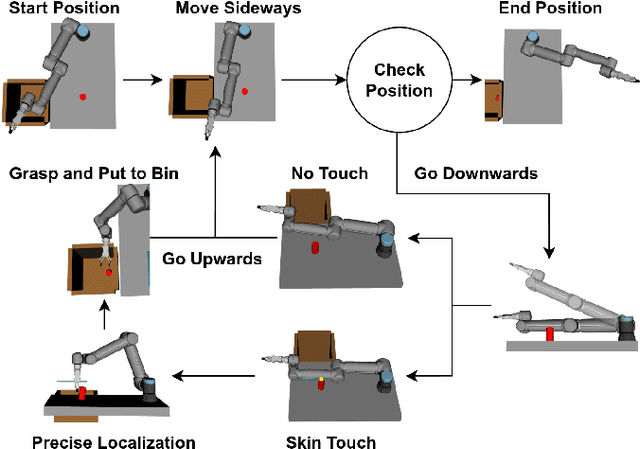

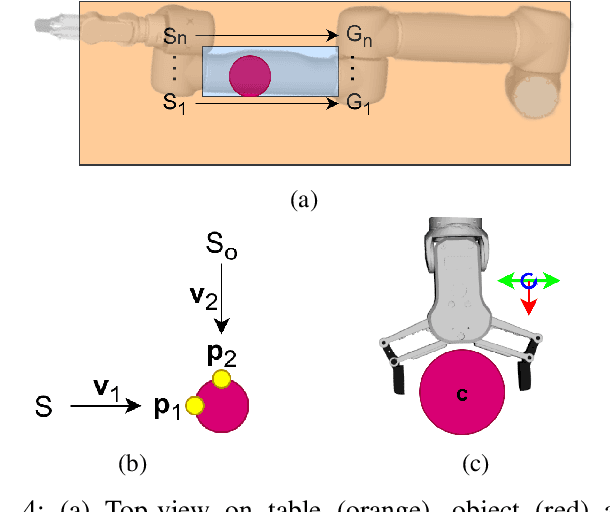

No Need to Look! Locating and Grasping Objects by a Robot Arm Covered with Sensitive Skin

Aug 25, 2025

Abstract: Locating and grasping of objects by robots is typically performed using visual sensors. Haptic feedback from contacts with the environment is only secondary if present at all. In this work, we explored an extreme case of searching for and grasping objects in complete absence of visual input, relying on haptic feedback only. The main novelty lies in the use of contacts over the complete surface of a robot manipulator covered with sensitive skin. The search is divided into two phases: (1) coarse workspace exploration with the complete robot surface, followed by (2) precise localization using the end-effector equipped with a force/torque sensor. We systematically evaluated this method in simulation and on the real robot, demonstrating that diverse objects can be located, grasped, and put in a basket. The overall success rate on the real robot for one object was 85.7%, with failures occurring mainly while grasping specific objects. The method using whole-body contacts is six times faster than a baseline that uses haptic feedback only on the end-effector. We also show locating and grasping multiple objects on the table. This method is not restricted to our specific setup and can be deployed on any platform with the ability of sensing contacts over the entire body surface. This work holds promise for diverse applications in areas with challenging visual perception (due to lighting, dust, smoke, occlusion) such as in agriculture when fruits or vegetables need to be located inside foliage and picked.

Empirical Comparison of Four Stereoscopic Depth Sensing Cameras for Robotics Applications

Jan 13, 2025

Abstract: Depth sensing is an essential technology in robotics and many other fields. Many depth sensing (or RGB-D) cameras are available on the market and selecting the best one for your application can be challenging. In this work, we tested four stereoscopic RGB-D cameras that sense the distance by using two images from slightly different views. We empirically compared four cameras (Intel RealSense D435, Intel RealSense D455, StereoLabs ZED 2, and Luxonis OAK-D Pro) in three scenarios: (i) planar surface perception, (ii) plastic doll perception, (iii) household object perception (YCB dataset). We recorded and evaluated more than 3,000 RGB-D frames for each camera. For table-top robotics scenarios with distance to objects up to one meter, the best performance is provided by the D435 camera. For longer distances, the other three models perform better, making them more suitable for some mobile robotics applications. OAK-D Pro additionally offers integrated AI modules (e.g., object and human keypoint detection). ZED 2 is not a standalone device and requires a computer with a GPU for depth data acquisition. All data (more than 12,000 RGB-D frames) are publicly available at https://osf.io/f2seb.

Boosting Safe Human-Robot Collaboration Through Adaptive Collision Sensitivity

Sep 30, 2024

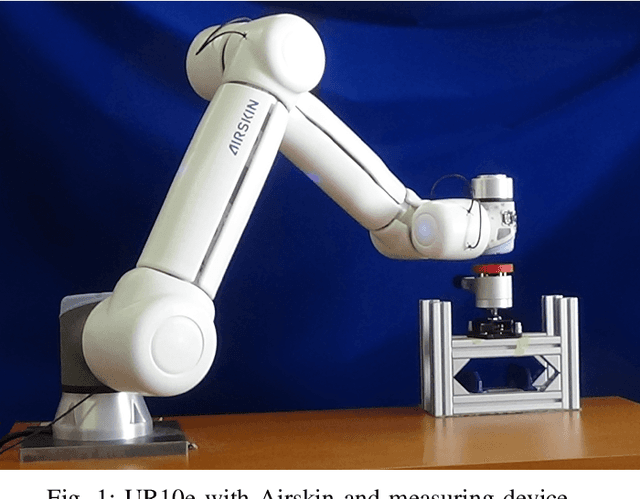

Abstract: What is considered safe for a robot operator during physical human-robot collaboration (HRC) is specified in corresponding HRC standards (e.g., the European ISO/TS 15066). The regime that allows collisions between the moving robot and the operator, called Power and Force Limiting (PFL), restricts the permissible contact forces. Using the same fixed contact thresholds on the entire robot surface results in significant and unnecessary productivity losses, as the robot needs to stop even when impact forces are within limits. Here we present a framework for setting the protective skin thresholds individually for different parts of the robot body and dynamically on the fly, based on the effective mass of each robot link and the link velocity. We perform experiments on a 6-axis collaborative robot arm (UR10e) completely covered with a sensitive skin (AIRSKIN) consisting of eleven individual pads. On a mock pick-and-place scenario with both transient and quasi-static collisions, we demonstrate how skin sensitivity influences the task performance and exerted force. We show an increase in productivity of almost 50% from the most conservative setting of collision thresholds to the most adaptive setting, while ensuring safety for human operators. The method is applicable to any robot for which the effective mass can be calculated.

Adaptive Electronic Skin Sensitivity for Safe Human-Robot Interaction

Sep 10, 2024

Abstract: Artificial electronic skins covering complete robot bodies can make physical human-robot collaboration safe and hence possible. Standards for collaborative robots (e.g., ISO/TS 15066) prescribe permissible forces and pressures during contacts with the human body. These characteristics of the collision depend on the speed of the colliding robot link but also on its effective mass. Thus, to warrant contacts complying with the Power and Force Limiting (PFL) collaborative regime but at the same time maximizing productivity, protective skin thresholds should be set individually for different parts of the robot body and dynamically on the fly. Here we present and empirically evaluate four scenarios: (a) static and uniform - fixed thresholds for the whole skin, (b) static but different settings for robot body parts, (c) dynamically set based on every link velocity, (d) dynamically set based on effective mass of every robot link. We perform experiments in simulation and on a real 6-axis collaborative robot arm (UR10e) completely covered with sensitive skin (AIRSKIN) comprising eleven individual pads. On a mock pick-and-place scenario with transient collisions with the robot body parts and two collision reactions (stop and avoid), we demonstrate the boost in productivity in going from the most conservative setting of the skin thresholds (a) to the most adaptive setting (d). The threshold settings for every skin pad are adapted with a frequency of 25 Hz. This work can be easily extended for platforms with more degrees of freedom and larger skin coverage (humanoids) and to social human-robot interaction scenarios where contacts with the robot will be used for communication.
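The dependence of collision forces on link speed and effective mass can be illustrated with the simplified two-body energy-transfer relation commonly associated with ISO/TS 15066, in which the peak force is F = v · sqrt(μ · k), with μ the reduced mass of the human body region and the robot link and k the effective stiffness of the body region. The sketch below only illustrates that relation; it is not the threshold-setting procedure of the paper. The function names and all numeric values in the usage lines are assumptions chosen for illustration and should be replaced by values from the standard and from the robot model.

```python
import math


def reduced_mass(m_human: float, m_robot: float) -> float:
    """Reduced mass (kg) of the two-body system: 1 / (1/m_human + 1/m_robot)."""
    return 1.0 / (1.0 / m_human + 1.0 / m_robot)


def impact_force(v_rel: float, k: float, m_human: float, m_robot: float) -> float:
    """Peak force (N) predicted by the energy-transfer model for relative speed v_rel (m/s).

    k is the effective stiffness of the contacted body region in N/m.
    """
    return v_rel * math.sqrt(reduced_mass(m_human, m_robot) * k)


def permissible_velocity(f_max: float, k: float, m_human: float, m_robot: float) -> float:
    """Largest relative speed (m/s) for which the modeled peak force stays at or below f_max (N)."""
    return f_max / math.sqrt(reduced_mass(m_human, m_robot) * k)


if __name__ == "__main__":
    # Illustrative placeholder values (assumptions, not taken from the paper).
    f_max = 280.0      # N, assumed transient force limit for the body region
    k = 75_000.0       # N/m, assumed effective stiffness of the body region
    m_human = 0.6      # kg, assumed effective mass of the body region
    for m_robot in (2.0, 5.0, 10.0):  # kg, hypothetical effective link masses
        v = permissible_velocity(f_max, k, m_human, m_robot)
        print(f"effective link mass {m_robot:5.1f} kg -> permissible speed {v:.3f} m/s")
```

Under this model a link with a larger effective mass has a lower permissible speed for the same force limit, which is the intuition behind setting thresholds per link and per velocity rather than uniformly.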

Interactive Learning of Physical Object Properties Through Robot Manipulation and Database of Object Measurements

Apr 10, 2024

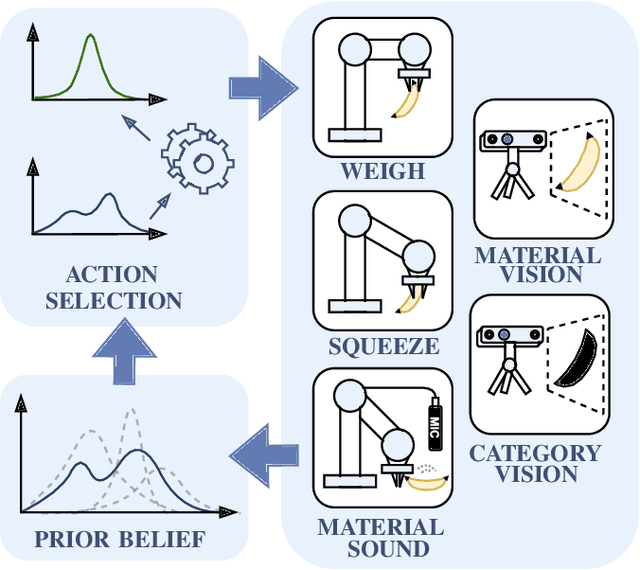

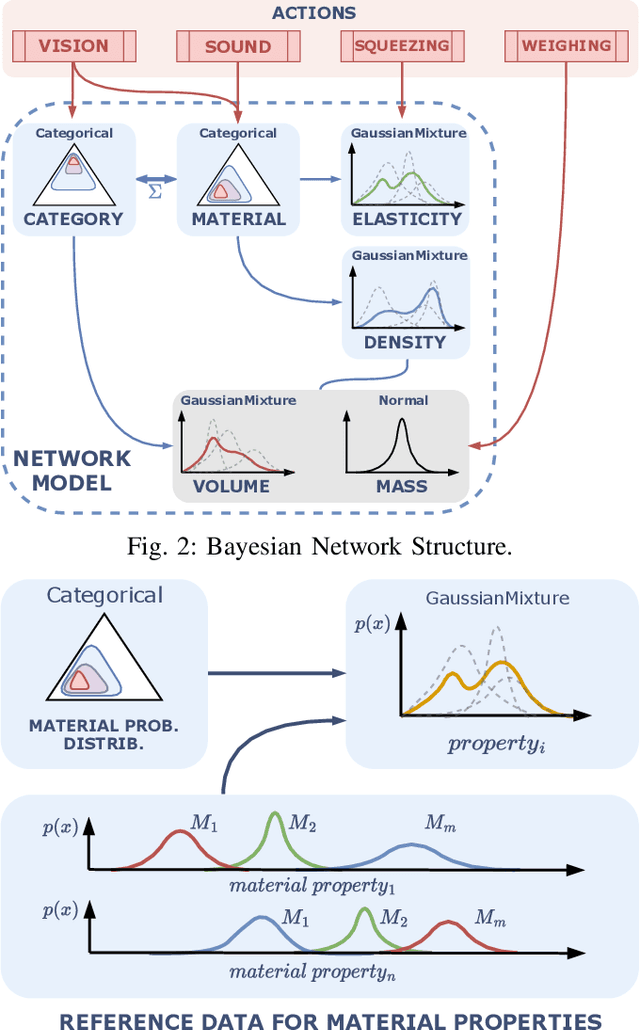

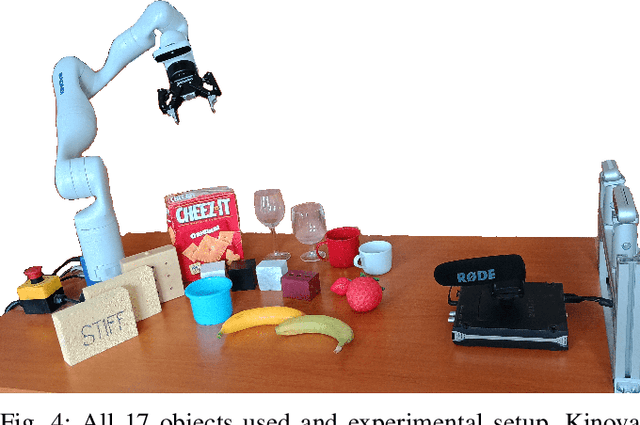

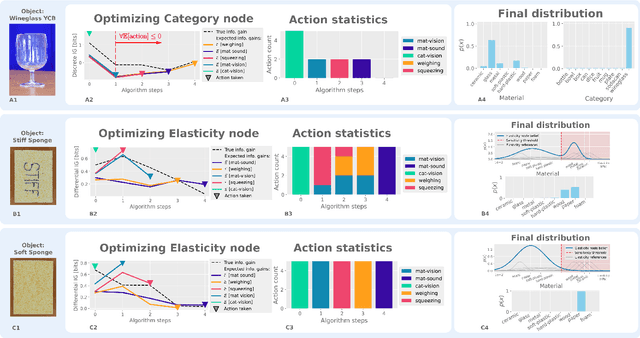

Abstract: This work presents a framework for automatically extracting physical object properties, such as material composition, mass, volume, and stiffness, through robot manipulation and a database of object measurements. The framework involves exploratory action selection to maximize learning about objects on a table. A Bayesian network models conditional dependencies between object properties, incorporating prior probability distributions and uncertainty associated with measurement actions. The algorithm selects optimal exploratory actions based on expected information gain and updates object properties through Bayesian inference. Experimental evaluation demonstrates effective action selection compared to a baseline and correct termination of the experiments if there is nothing more to be learned. The algorithm proved to behave intelligently when presented with trick objects with material properties in conflict with their appearance. The robot pipeline integrates with a logging module and an online database of objects, containing over 24,000 measurements of 63 objects with different grippers. All code and data are publicly available, facilitating automatic digitization of objects and their physical properties through exploratory manipulations.
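Selecting an exploratory action by expected information gain can be sketched for a single discrete property as follows. This is a minimal illustration under simplifying assumptions, not the framework's Bayesian network: one categorical hypothesis variable, a known table of P(outcome | hypothesis) per action, and hypothetical action names ("squeeze", "look") and probabilities invented for the example.

```python
import math


def entropy(p):
    """Shannon entropy (bits) of a discrete distribution given as a list of probabilities."""
    return -sum(x * math.log2(x) for x in p if x > 0)


def posterior(prior, likelihood, outcome):
    """Bayes update, where likelihood[h][outcome] = P(outcome | hypothesis h)."""
    unnorm = [prior[h] * likelihood[h][outcome] for h in range(len(prior))]
    z = sum(unnorm)
    return [u / z for u in unnorm]


def expected_information_gain(prior, likelihood):
    """Prior entropy minus the expected posterior entropy over all outcomes."""
    gain = entropy(prior)
    for o in range(len(likelihood[0])):
        p_o = sum(prior[h] * likelihood[h][o] for h in range(len(prior)))
        if p_o > 0:
            gain -= p_o * entropy(posterior(prior, likelihood, o))
    return gain


def select_action(prior, actions):
    """Return the name of the action with the highest expected information gain."""
    return max(actions, key=lambda name: expected_information_gain(prior, actions[name]))


if __name__ == "__main__":
    # Hypothetical example: two material hypotheses with a uniform prior.
    prior = [0.5, 0.5]
    actions = {
        "squeeze": [[0.9, 0.1], [0.1, 0.9]],  # informative measurement (assumed values)
        "look": [[0.5, 0.5], [0.5, 0.5]],     # uninformative measurement (assumed values)
    }
    for name, lik in actions.items():
        print(name, round(expected_information_gain(prior, lik), 3))
    print("selected:", select_action(prior, actions))
```

One way to read the termination behavior mentioned in the abstract is a stopping rule that ends exploration once the best available action's expected gain drops below a small threshold; that rule is an assumption here, not a detail confirmed by the source.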

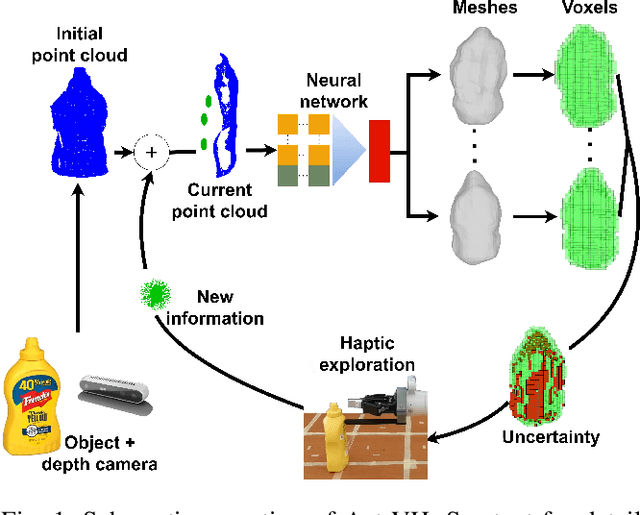

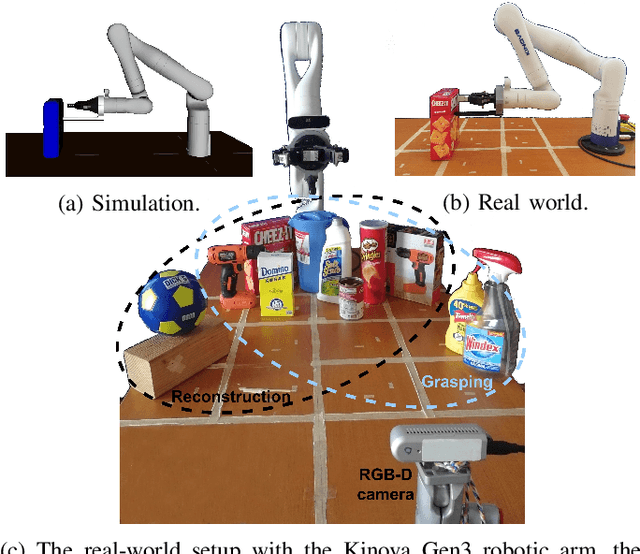

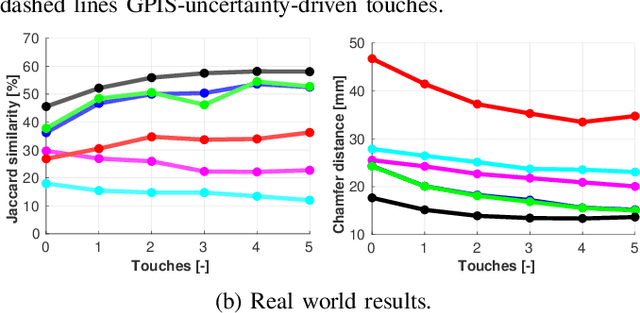

Efficient Visuo-Haptic Object Shape Completion for Robot Manipulation

Mar 08, 2023

Abstract: For robot manipulation, a complete and accurate object shape is desirable. Here, we present a method that combines visual and haptic reconstruction in a closed-loop pipeline. From an initial viewpoint, the object shape is reconstructed using an implicit surface deep neural network. The location with highest uncertainty is selected for haptic exploration, the object is touched, the new information from touch and a new point cloud from the camera are added, object position is re-estimated and the cycle is repeated. We extend Rustler et al. (2022) by using a new theoretically grounded method to determine the points with highest uncertainty, and we increase the yield of every haptic exploration by adding not only the contact points to the point cloud but also incorporating the empty space established through the robot movement to the object. Additionally, the solution is compact in that the jaws of a closed two-finger gripper are directly used for exploration. The object position is re-estimated after every robot action and multiple objects can be present simultaneously on the table. We achieve a steady improvement with every touch using three different metrics and demonstrate the utility of the better shape reconstruction in grasping experiments on the real robot. On average, grasp success rate increases from 63.3% to 70.4% after a single exploratory touch and to 82.7% after five touches. The collected data and code are publicly available (https://osf.io/j6rkd/, https://github.com/ctu-vras/vishac).

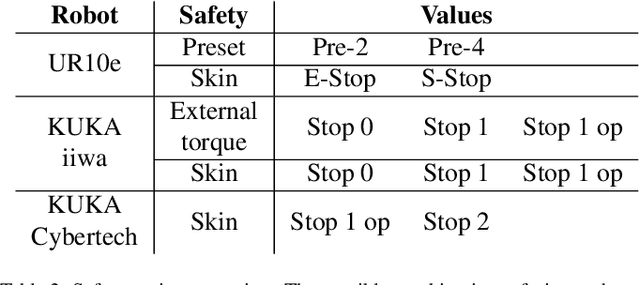

Effect of Active and Passive Protective Soft Skins on Collision Forces in Human-robot Collaboration

Mar 18, 2022

Abstract: Soft electronic skins are one of the means to turn an industrial manipulator into a collaborative robot. For manipulators that are already fit for physical human-robot collaboration, soft skins can make them safer. In this work, we study the post-impact behavior of two collaborative manipulators (UR10e and KUKA LBR iiwa) and one classical industrial manipulator (KUKA Cybertech), in the presence or absence of an industrial protective skin (AIRSKIN). In addition, we isolate the effects of the passive padding and the active contribution of the sensor to robot reaction. We present a total of 2250 collision measurements and study the impact force, contact duration, clamping force, and impulse. The dataset is publicly available. We summarize our results as follows. For transient collisions, the passive skin properties lowered the impact forces by about 40%. During quasi-static contact, the effect of skin covers -- active or passive -- cannot be isolated from the collision detection and reaction by the collaborative robots. Important effects of the stop categories triggered by the active protective skin were found. We systematically compare the different settings and the empirically established safe velocities with the prescriptions of ISO/TS 15066. In some cases, up to four times the velocity prescribed by ISO/TS 15066 can comply with the impact force limits and thus be considered safe. We propose an extension of the formulas relating impact force and permissible velocity that takes into account the stiffness and compressible thickness of the protective cover, leading to better predictions of the collision forces. At the same time, this work emphasizes the need for in situ measurements as all the factors we studied -- presence of active/passive skin, safety stop settings, robot collision reaction, impact direction, and, of course, velocity -- have effects on the force evolution after impact.

Active Visuo-Haptic Object Shape Completion

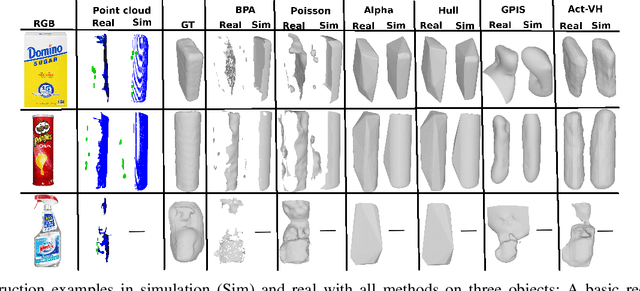

Mar 17, 2022

Abstract: Recent advancements in object shape completion have enabled impressive object reconstructions using only visual input. However, due to self-occlusion, the reconstructions have high uncertainty in the occluded object parts, which negatively impacts the performance of downstream robotic tasks such as grasping. In this work, we propose an active visuo-haptic shape completion method called Act-VH that actively computes where to touch the objects based on the reconstruction uncertainty. Act-VH reconstructs objects from point clouds and calculates the reconstruction uncertainty using IGR, a recent state-of-the-art implicit surface deep neural network. We experimentally evaluate the reconstruction accuracy of Act-VH against five baselines in simulation and in the real world. We also propose a new simulation environment for this purpose. The results show that Act-VH outperforms all baselines and that an uncertainty-driven haptic exploration policy leads to higher reconstruction accuracy than a random policy and a policy driven by Gaussian Process Implicit Surfaces. As a final experiment, we evaluate Act-VH and the best reconstruction baseline on grasping 10 novel objects. The results show that Act-VH reaches a significantly higher grasp success rate than the baseline on all objects. Together, this work opens up the door for using active visuo-haptic shape completion in more complex cluttered scenes.

* 8 pages, 7 figures

3D Collision-Force-Map for Safe Human-Robot Collaboration

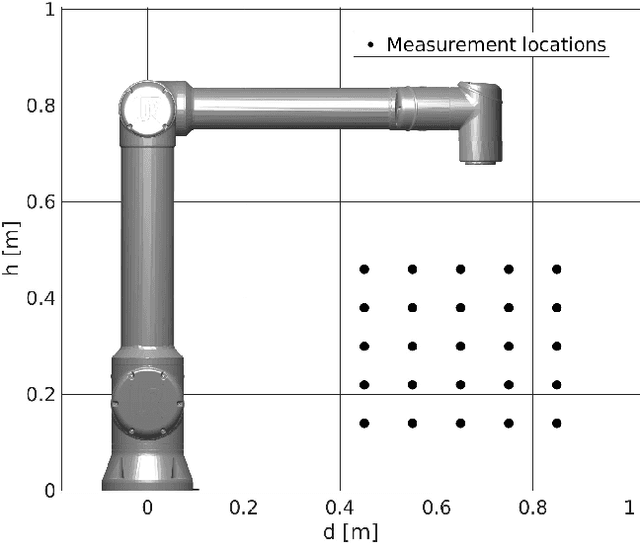

Sep 02, 2020

Abstract: Collaborative robots, i.e., robots designed for direct interaction with a human, present a promising step in robotic manufacturing. However, their performance is limited by the safety demands of standards. In this article, we measure the forces exerted by two robot arms (UR10e and KUKA LBR iiwa) on an impact measuring device in different positions in the robot workspace and with various velocities. Based on these measurements, we investigate the Power and Force Limiting regime presented in ISO/TS 15066. Impact forces are in practice hard to calculate analytically as many properties of the robots are not available (e.g., proprietary control algorithms). This motivates the use of simple, yet reasonably accurate, approximations. Our results show that the height of the impact location is also an important factor and that an accurate model of the robot can be created from a limited number of impact samples. Previous work predicted impact forces based on other factors (distance, velocity, weight), yet these predictions are less accurate. This would allow a fast estimation of the impact forces in the robot's workspace and thus make it easier to design a safe human-robot collaboration setup.
