Jens Lundell

FLoRA: Sample-Efficient Preference-based RL via Low-Rank Style Adaptation of Reward Functions

Apr 14, 2025

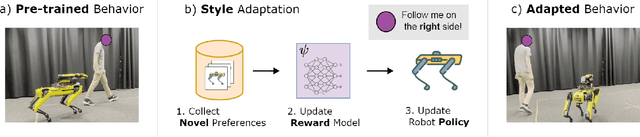

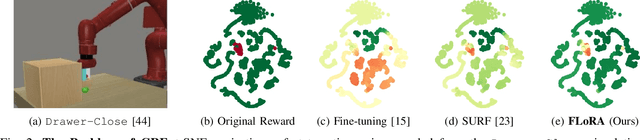

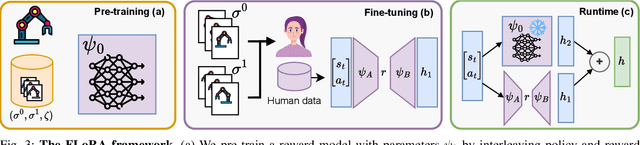

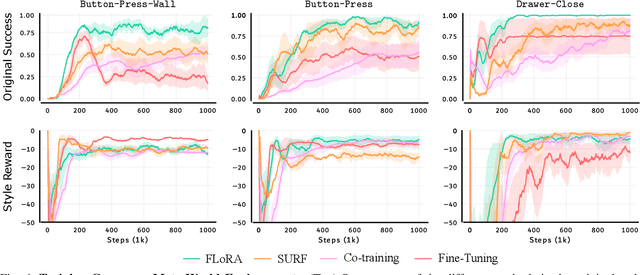

Abstract: Preference-based reinforcement learning (PbRL) is a suitable approach for style adaptation of pre-trained robotic behavior: adapting the robot's policy to follow human user preferences while still being able to perform the original task. However, collecting preferences for the adaptation process in robotics is often challenging and time-consuming. In this work we explore the adaptation of pre-trained robots in the low-preference-data regime. We show that, in this regime, recent adaptation approaches suffer from catastrophic reward forgetting (CRF), where the updated reward model overfits to the new preferences, leading the agent to become unable to perform the original task. To mitigate CRF, we propose to enhance the original reward model with a small number of parameters (low-rank matrices) responsible for modeling the preference adaptation. Our evaluation shows that our method can efficiently and effectively adjust robotic behavior to human preferences across simulation benchmark tasks and multiple real-world robotic tasks.
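The low-rank idea in this abstract can be illustrated with a minimal NumPy sketch. It assumes a single linear layer of a reward model with frozen weights `W0` and a trainable low-rank update `B @ A`. The layer sizes, the rank, and the zero initialization of `B` follow common low-rank adaptation practice and are assumptions for illustration, not details taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

d_in, d_out, rank = 64, 64, 2  # hypothetical layer sizes and rank

# Frozen pre-trained weights of one reward-model layer (stand-in values).
W0 = rng.normal(size=(d_out, d_in))

# Trainable low-rank factors. B starts at zero (an assumption), so the
# adapted layer initially reproduces the original layer exactly.
A = rng.normal(scale=0.01, size=(rank, d_in))
B = np.zeros((d_out, rank))

def adapted_layer(x, W0, A, B):
    """Apply the frozen weights plus the low-rank correction B @ A."""
    return (W0 + B @ A) @ x

x = rng.normal(size=d_in)
y_original = W0 @ x
y_adapted = adapted_layer(x, W0, A, B)

n_full = W0.size              # parameters updated by full fine-tuning
n_lowrank = A.size + B.size   # parameters updated by the low-rank adapter
```

In this sketch only `A` and `B` would be trained on new preferences, which is 256 parameters instead of 4096 for the full layer, while `W0` keeps the original reward intact.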

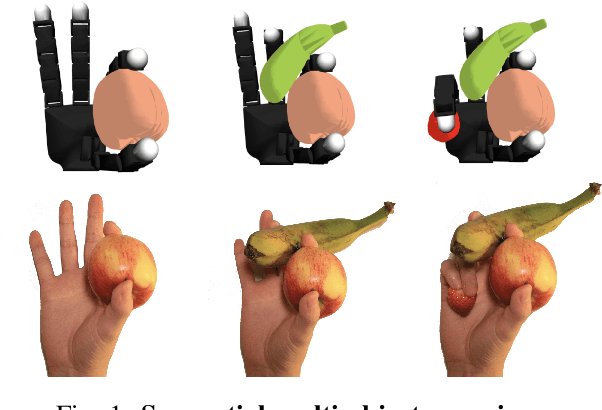

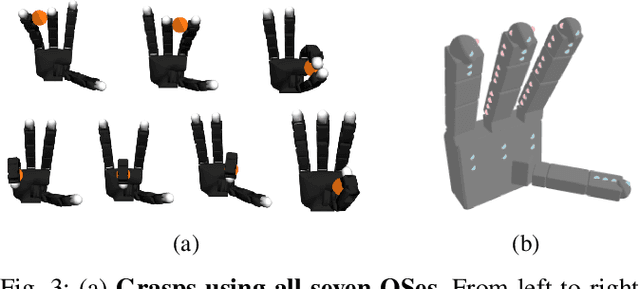

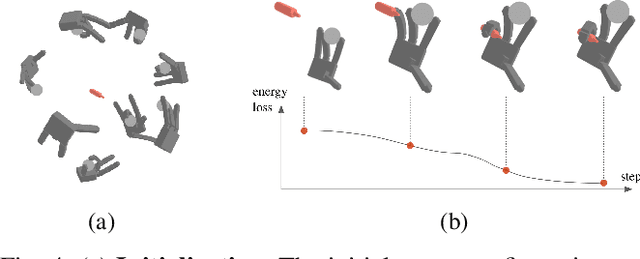

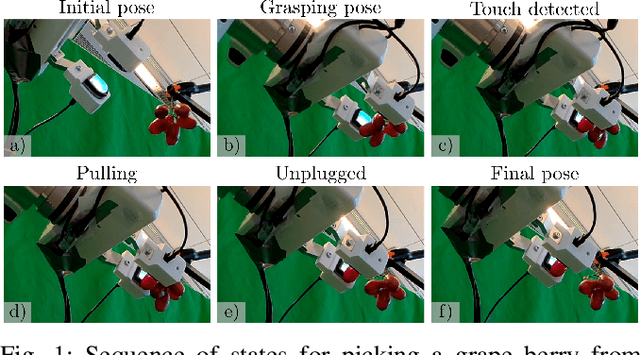

Grasping a Handful: Sequential Multi-Object Dexterous Grasp Generation

Mar 31, 2025

Abstract: We introduce the sequential multi-object robotic grasp sampling algorithm SeqGrasp that can robustly synthesize stable grasps on diverse objects using the robotic hand's partial Degrees of Freedom (DoF). We use SeqGrasp to construct the large-scale Allegro Hand sequential grasping dataset SeqDataset and use it for training the diffusion-based sequential grasp generator SeqDiffuser. We experimentally evaluate SeqGrasp and SeqDiffuser against the state-of-the-art non-sequential multi-object grasp generation method MultiGrasp in simulation and on a real robot. The experimental results demonstrate that SeqGrasp and SeqDiffuser reach an 8.71%-43.33% higher grasp success rate than MultiGrasp. Furthermore, SeqDiffuser is approximately 1000 times faster at generating grasps than SeqGrasp and MultiGrasp.

Pushing Everything Everywhere All At Once: Probabilistic Prehensile Pushing

Mar 18, 2025

Abstract: We address prehensile pushing, the problem of manipulating a grasped object by pushing against the environment. Our solution is an efficient nonlinear trajectory optimization problem relaxed from an exact mixed integer non-linear trajectory optimization formulation. The critical insight is recasting the external pushers (environment) as a discrete probability distribution instead of binary variables and minimizing the entropy of the distribution. The probabilistic reformulation allows all pushers to be used simultaneously, but at the optimum, the probability mass concentrates onto one due to the entropy minimization. We numerically compare our method against a state-of-the-art sampling-based baseline on a prehensile pushing task. The results demonstrate that our method finds trajectories 8 times faster and at a 20 times lower cost than the baseline. Finally, we demonstrate that a simulated and real Franka Panda robot can successfully manipulate different objects following the trajectories proposed by our method. Supplementary materials are available at https://probabilistic-prehensile-pushing.github.io/.
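The entropy argument in this abstract can be made concrete with a small sketch. It assumes the pusher probabilities come from a softmax over unconstrained scores, which is an illustrative parameterization and not necessarily the one used in the paper. The function names and the three-pusher example are hypothetical.

```python
import math

def softmax(logits):
    """Map unconstrained scores to a discrete probability distribution."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(p):
    """Shannon entropy in nats; zero-probability terms contribute nothing."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0.0)

# Three hypothetical pushers: equal weights versus one dominant pusher.
uniform = softmax([0.0, 0.0, 0.0])
peaked = softmax([10.0, 0.0, 0.0])

h_uniform = entropy(uniform)
h_peaked = entropy(peaked)
```

The uniform distribution attains the maximum entropy, log 3, while the peaked one is close to zero, so an entropy penalty in the objective pushes the probability mass toward a single pusher.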

Cloth-Splatting: 3D Cloth State Estimation from RGB Supervision

Jan 03, 2025

Abstract: We introduce Cloth-Splatting, a method for estimating 3D states of cloth from RGB images through a prediction-update framework. Cloth-Splatting leverages an action-conditioned dynamics model for predicting future states and uses 3D Gaussian Splatting to update the predicted states. Our key insight is that coupling a 3D mesh-based representation with Gaussian Splatting allows us to define a differentiable map between the cloth state space and the image space. This enables the use of gradient-based optimization techniques to refine inaccurate state estimates using only RGB supervision. Our experiments demonstrate that Cloth-Splatting not only improves state estimation accuracy over current baselines but also reduces convergence time.

A Riemannian Framework for Learning Reduced-order Lagrangian Dynamics

Oct 24, 2024

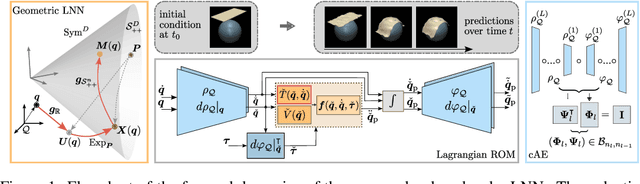

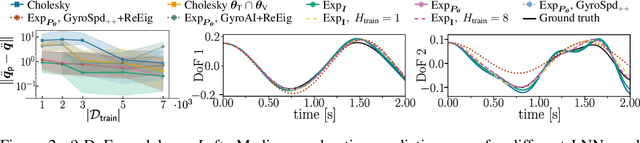

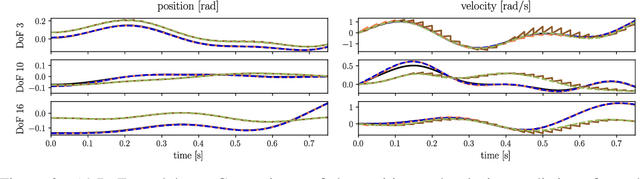

Abstract: By incorporating physical consistency as inductive bias, deep neural networks display increased generalization capabilities and data efficiency in learning nonlinear dynamic models. However, the complexity of these models generally increases with the system dimensionality, requiring larger datasets, more complex deep networks, and significant computational effort. We propose a novel geometric network architecture to learn physically-consistent reduced-order dynamic parameters that accurately describe the original high-dimensional system behavior. This is achieved by building on recent advances in model-order reduction and by adopting a Riemannian perspective to jointly learn a structure-preserving latent space and the associated low-dimensional dynamics. Our approach enables accurate long-term predictions of the high-dimensional dynamics of rigid and deformable systems with increased data efficiency by inferring interpretable and physically plausible reduced Lagrangian models.

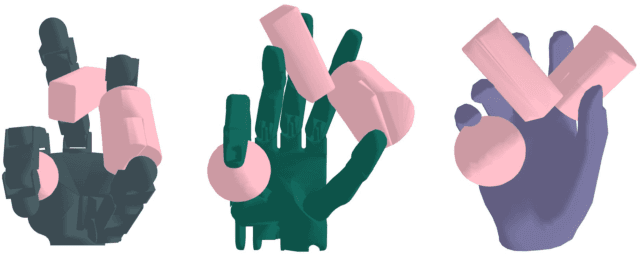

DexDiffuser: Generating Dexterous Grasps with Diffusion Models

Feb 05, 2024

Abstract: We introduce DexDiffuser, a novel dexterous grasping method that generates, evaluates, and refines grasps on partial object point clouds. DexDiffuser includes the conditional diffusion-based grasp sampler DexSampler and the dexterous grasp evaluator DexEvaluator. DexSampler generates high-quality grasps conditioned on object point clouds by iterative denoising of randomly sampled grasps. We also introduce two grasp refinement strategies: Evaluator-Guided Diffusion (EGD) and Evaluator-based Sampling Refinement (ESR). Our simulation and real-world experiments on the Allegro Hand consistently demonstrate that DexDiffuser outperforms the state-of-the-art multi-finger grasp generation method FFHNet with, on average, a 21.71-22.20% higher grasp success rate.

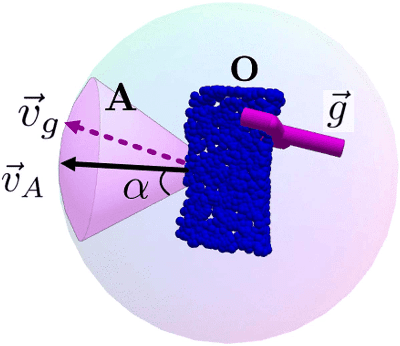

CAPGrasp: An $\mathbb{R}^3\times \text{SO(2)-equivariant}$ Continuous Approach-Constrained Generative Grasp Sampler

Oct 18, 2023

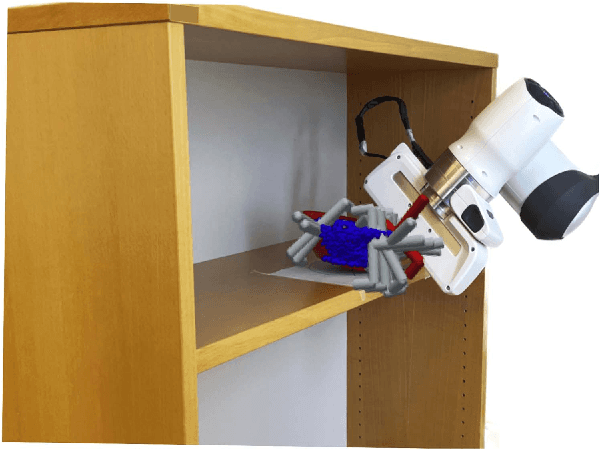

Abstract: We propose CAPGrasp, an $\mathbb{R}^3\times \text{SO(2)-equivariant}$ 6-DoF continuous approach-constrained generative grasp sampler. It includes a novel learning strategy for training CAPGrasp that eliminates the need to curate massive conditionally labeled datasets and a constrained grasp refinement technique that improves grasp poses while respecting the grasp approach directional constraints. The experimental results demonstrate that CAPGrasp is more than three times as sample efficient as unconstrained grasp samplers while achieving up to 38% grasp success rate improvement. CAPGrasp also achieves 4-10% higher grasp success rates than constrained but noncontinuous grasp samplers. Overall, CAPGrasp is a sample-efficient solution when grasps must originate from specific directions, such as grasping in confined spaces.

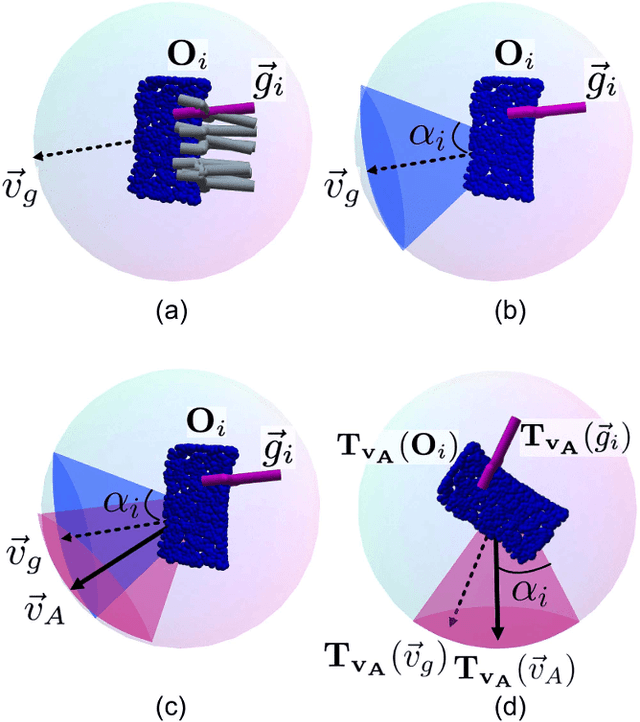

Enabling Robot Manipulation of Soft and Rigid Objects with Vision-based Tactile Sensors

Jun 09, 2023

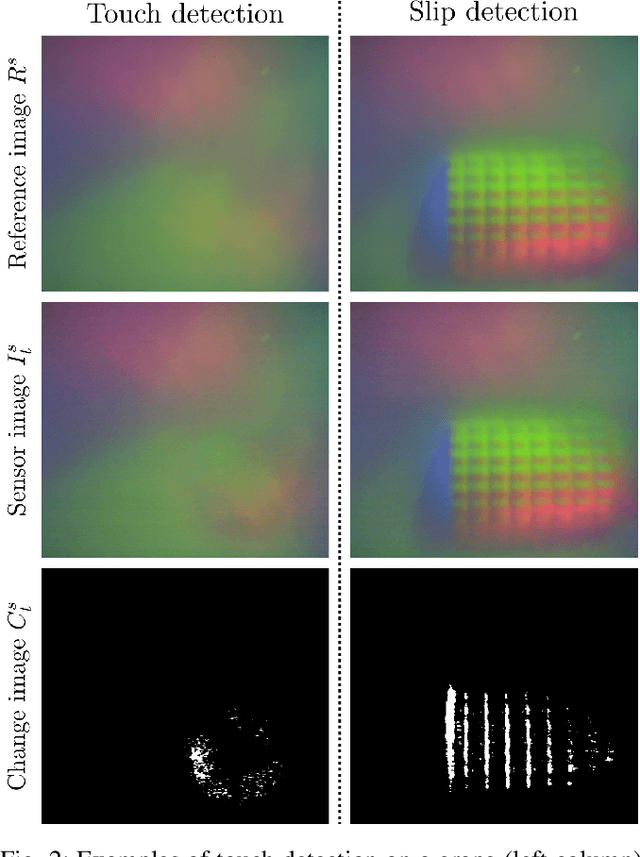

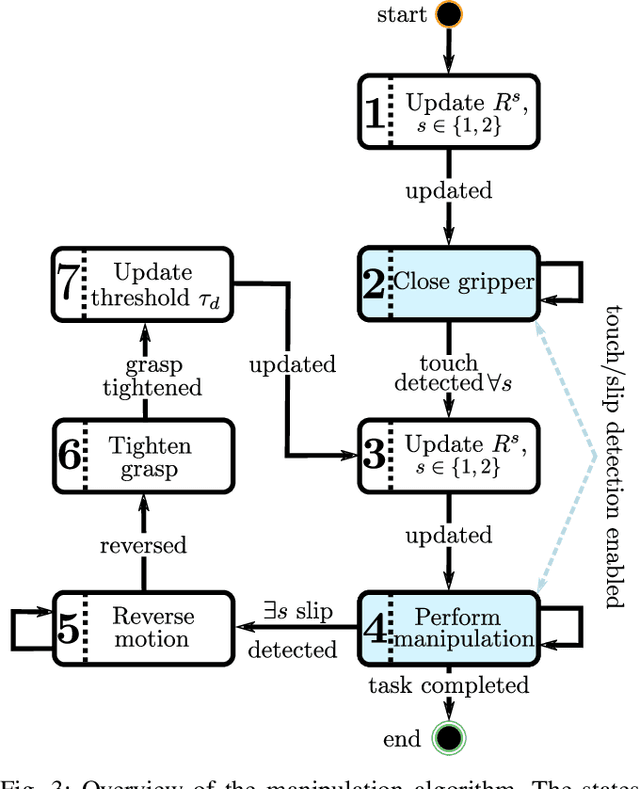

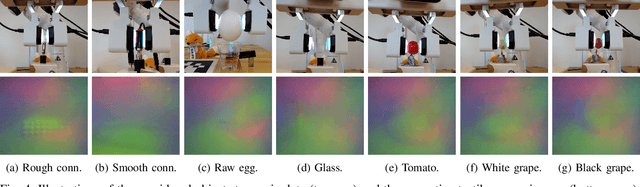

Abstract: Endowing robots with tactile capabilities opens up new possibilities for their interaction with the environment, including the ability to handle fragile and/or soft objects. In this work, we equip the robot gripper with low-cost vision-based tactile sensors and propose a manipulation algorithm that adapts to both rigid and soft objects without requiring any knowledge of their properties. The algorithm relies on a touch and slip detection method, which considers the variation in the tactile images with respect to reference ones. We validate the approach on seven different objects, with different properties in terms of rigidity and fragility, to perform unplugging and lifting tasks. Furthermore, to enhance applicability, we combine the manipulation algorithm with a grasp sampler for the task of finding and picking a grape from a bunch without damaging it.

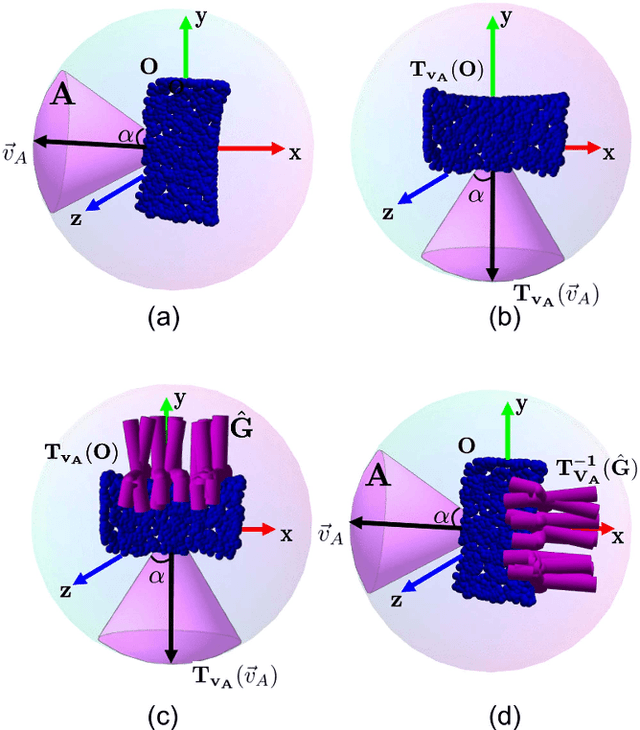

GoNet: An Approach-Constrained Generative Grasp Sampling Network

Mar 14, 2023

Abstract: Constraining the approach direction of grasps is important when picking objects in confined spaces, such as when emptying a shelf. Yet, such capabilities are not available in state-of-the-art data-driven grasp sampling methods that sample grasps all around the object. In this work, we address the specific problem of training approach-constrained data-driven grasp samplers and how to generate good grasping directions automatically. Our solution is GoNet: a generative grasp sampler that can constrain the grasp approach direction to lie close to a specified direction. This is achieved by discretizing SO(3) into bins and training GoNet to generate grasps from those bins. At run-time, the bin aligning with the second largest principal component of the observed point cloud is selected. GoNet is benchmarked against GraspNet, a state-of-the-art unconstrained grasp sampler, in an unconfined grasping experiment in simulation and on an unconfined and confined grasping experiment in the real world. The results demonstrate that GoNet achieves higher success-over-coverage in simulation and a 12%-18% higher success rate in real-world table-picking and shelf-picking tasks than the baseline.
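The run-time bin selection described in this abstract can be sketched roughly as follows. This is a simplified illustration: it assumes each bin is represented by a single unit approach vector, which is a stand-in for the paper's SO(3) discretization, and the function names, the synthetic point cloud, and the three axis-aligned bins are all hypothetical.

```python
import numpy as np

def second_principal_axis(points):
    """Return the unit direction of the second largest principal component."""
    centered = points - points.mean(axis=0)
    cov = np.cov(centered, rowvar=False)
    # eigh returns eigenvalues in ascending order, so column -2 holds the
    # eigenvector of the second largest eigenvalue.
    _, eigvecs = np.linalg.eigh(cov)
    return eigvecs[:, -2]

def closest_bin(axis, bin_directions):
    """Index of the bin direction best aligned with the axis (sign-agnostic)."""
    scores = np.abs(bin_directions @ axis)
    return int(np.argmax(scores))

rng = np.random.default_rng(0)
# Synthetic cloud: most spread along x, then y, and least along z.
points = rng.normal(size=(500, 3)) * np.array([3.0, 1.0, 0.2])

bins = np.eye(3)  # hypothetical bin centres along x, y, and z
axis = second_principal_axis(points)
chosen = closest_bin(axis, bins)
```

For this synthetic cloud the second principal component lies along y, so the sketch selects the bin at index 1.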

Constrained Generative Sampling of 6-DoF Grasps

Feb 21, 2023

Abstract: Most state-of-the-art data-driven grasp sampling methods propose stable and collision-free grasps uniformly on the target object. For bin-picking, executing any of those grasps is sufficient. However, for completing specific tasks, such as squeezing out liquid from a bottle, we want the grasp to be on a specific part on the object body while avoiding other locations, such as the cap. In this work, we present a generative grasp sampling network, VCGS, capable of constrained 6-Degrees-of-Freedom (DoF) grasp sampling. In addition, we also curate a new dataset designed to train and evaluate methods for constrained grasping. The new dataset, called CONG, consists of over 14 million training samples of synthetically rendered point clouds and grasps at random target areas on 2889 objects. VCGS is benchmarked against GraspNet, a state-of-the-art unconstrained grasp sampler, in simulation and on a real robot. The results demonstrate that VCGS achieves a 10-15% higher grasp success rate than the baseline while being 2-3 times as sample efficient.
