Olov Andersson

DeltaFlow: An Efficient Multi-frame Scene Flow Estimation Method

Aug 23, 2025

Abstract:Previous dominant methods for scene flow estimation focus mainly on input from two consecutive frames, neglecting valuable information in the temporal domain. While recent trends shift towards multi-frame reasoning, they suffer from rapidly escalating computational costs as the number of frames grows. To leverage temporal information more efficiently, we propose DeltaFlow ($\Delta$Flow), a lightweight 3D framework that captures motion cues via a $\Delta$ scheme, extracting temporal features with minimal computational cost, regardless of the number of frames. Additionally, scene flow estimation faces challenges such as imbalanced object class distributions and motion inconsistency. To tackle these issues, we introduce a Category-Balanced Loss to enhance learning across underrepresented classes and an Instance Consistency Loss to enforce coherent object motion, improving flow accuracy. Extensive evaluations on the Argoverse 2 and Waymo datasets show that $\Delta$Flow achieves state-of-the-art performance with up to 22% lower error and $2\times$ faster inference compared to the next-best multi-frame supervised method, while also demonstrating a strong cross-domain generalization ability. The code is open-sourced at https://github.com/Kin-Zhang/DeltaFlow along with trained model weights.

CompSLAM: Complementary Hierarchical Multi-Modal Localization and Mapping for Robot Autonomy in Underground Environments

May 10, 2025

Abstract:Robot autonomy in unknown, GPS-denied, and complex underground environments requires real-time, robust, and accurate onboard pose estimation and mapping for reliable operations. This becomes particularly challenging in perception-degraded subterranean conditions under harsh environmental factors, including darkness, dust, and geometrically self-similar structures. This paper details CompSLAM, a highly resilient and hierarchical multi-modal localization and mapping framework designed to address these challenges. Its flexible architecture achieves resilience through redundancy by leveraging the complementary nature of pose estimates derived from diverse sensor modalities. Developed during the DARPA Subterranean Challenge, CompSLAM was successfully deployed on all aerial, legged, and wheeled robots of Team Cerberus during their competition-winning final run. Furthermore, it has proven to be a reliable odometry and mapping solution in various subsequent projects, with extensions enabling multi-robot map sharing for marsupial robotic deployments and collaborative mapping. This paper also introduces a comprehensive dataset acquired by a manually teleoperated quadrupedal robot, covering a significant portion of the DARPA Subterranean Challenge finals course. The dataset is used to evaluate CompSLAM's robustness to sensor degradations as the robot traverses 740 meters in an environment characterized by highly variable geometries and demanding lighting conditions. The CompSLAM code and the DARPA SubT Finals dataset are made publicly available for the benefit of the robotics community.

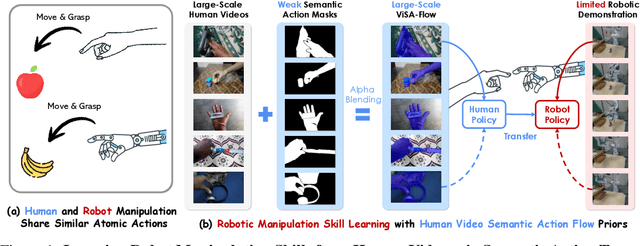

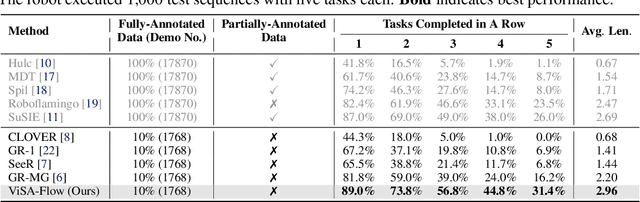

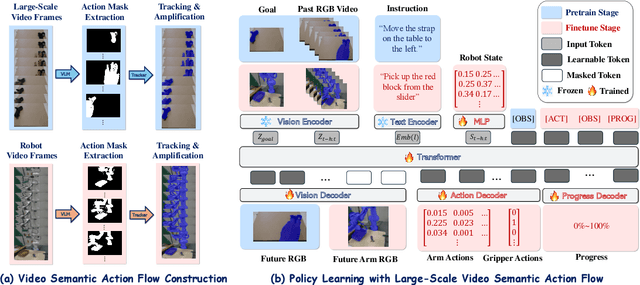

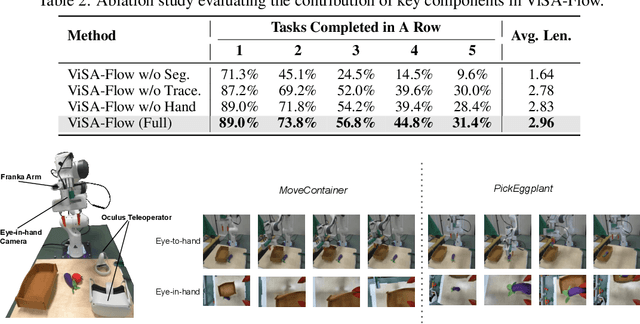

ViSA-Flow: Accelerating Robot Skill Learning via Large-Scale Video Semantic Action Flow

May 02, 2025

Abstract:One of the central challenges preventing robots from acquiring complex manipulation skills is the prohibitive cost of collecting large-scale robot demonstrations. In contrast, humans are able to learn efficiently by watching others interact with their environment. To bridge this gap, we introduce semantic action flow as a core intermediate representation capturing the essential spatio-temporal manipulator-object interactions, invariant to superficial visual differences. We present ViSA-Flow, a framework that learns this representation self-supervised from unlabeled large-scale video data. First, a generative model is pre-trained on semantic action flows automatically extracted from large-scale human-object interaction video data, learning a robust prior over manipulation structure. Second, this prior is efficiently adapted to a target robot by fine-tuning on a small set of robot demonstrations processed through the same semantic abstraction pipeline. We demonstrate through extensive experiments on the CALVIN benchmark and real-world tasks that ViSA-Flow achieves state-of-the-art performance, particularly in low-data regimes, outperforming prior methods by effectively transferring knowledge from human video observation to robotic execution. Videos are available at https://visaflow-web.github.io/ViSAFLOW.

FLoRA: Sample-Efficient Preference-based RL via Low-Rank Style Adaptation of Reward Functions

Apr 14, 2025

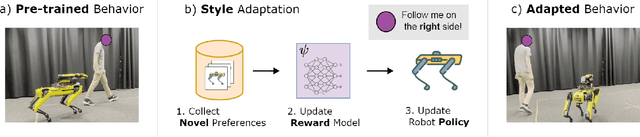

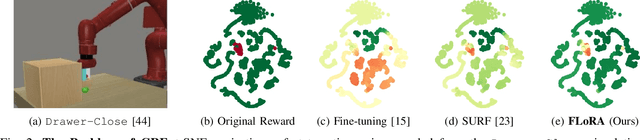

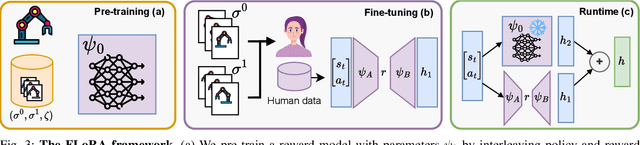

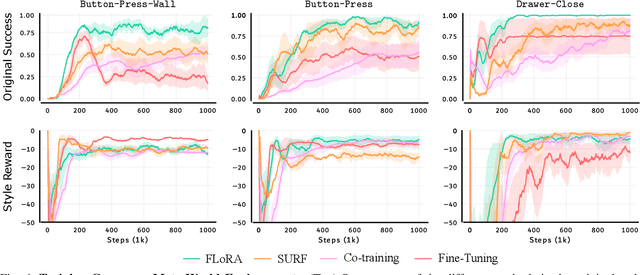

Abstract:Preference-based reinforcement learning (PbRL) is a suitable approach for style adaptation of pre-trained robotic behavior: adapting the robot's policy to follow human user preferences while still being able to perform the original task. However, collecting preferences for the adaptation process in robotics is often challenging and time-consuming. In this work we explore the adaptation of pre-trained robots in the low-preference-data regime. We show that, in this regime, recent adaptation approaches suffer from catastrophic reward forgetting (CRF), where the updated reward model overfits to the new preferences, leading the agent to become unable to perform the original task. To mitigate CRF, we propose to enhance the original reward model with a small number of parameters (low-rank matrices) responsible for modeling the preference adaptation. Our evaluation shows that our method can efficiently and effectively adjust robotic behavior to human preferences across simulation benchmark tasks and multiple real-world robotic tasks.
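The abstract above describes augmenting a pre-trained reward model with a small number of low-rank parameters. A minimal sketch of that general idea, written in the style of low-rank adaptation of a single linear layer, might look as follows. The class name, layer shapes, and zero initialization of `B` are illustrative assumptions and are not taken from the paper; this is not the authors' implementation.

```python
import numpy as np


class LowRankAdaptedLinear:
    """Frozen linear layer W plus a low-rank update B @ A (hypothetical sketch)."""

    def __init__(self, weight, rank, seed=0):
        rng = np.random.default_rng(seed)
        out_dim, in_dim = weight.shape
        self.weight = weight  # pre-trained weights, kept frozen
        # Only A and B would be updated when adapting to new preferences.
        self.A = rng.normal(0.0, 0.01, size=(rank, in_dim))
        # Assumption: B starts at zero, so the adapted layer initially
        # reproduces the pre-trained layer exactly.
        self.B = np.zeros((out_dim, rank))

    def __call__(self, x):
        # Effective weight is the frozen matrix plus the low-rank correction.
        return x @ (self.weight + self.B @ self.A).T

    def num_trainable(self):
        return self.A.size + self.B.size


rng = np.random.default_rng(1)
W = rng.normal(size=(16, 32))   # stand-in for one pre-trained reward layer
x = rng.normal(size=(3, 32))    # batch of three feature vectors
layer = LowRankAdaptedLinear(W, rank=2)
```

Because the original weights are never overwritten, the pre-trained reward remains recoverable by dropping the low-rank term, which is one plausible reading of how such a design limits forgetting. In this toy configuration the adapter has 96 trainable values against 512 in the frozen matrix.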

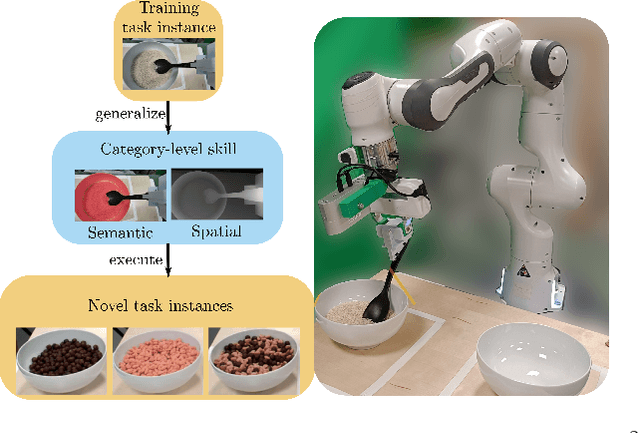

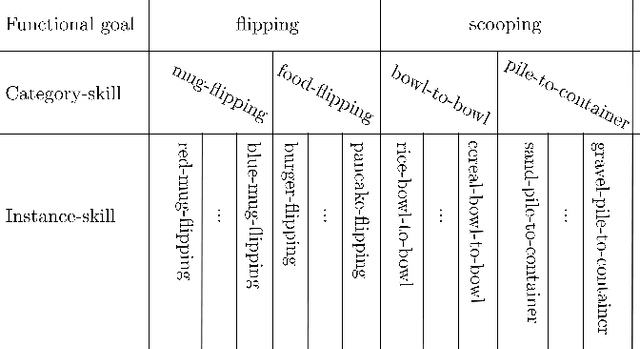

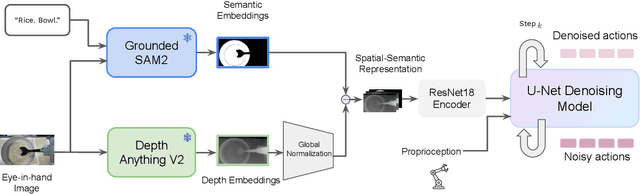

S$^2$-Diffusion: Generalizing from Instance-level to Category-level Skills in Robot Manipulation

Feb 13, 2025

Abstract:Recent advances in skill learning have propelled robot manipulation to new heights by enabling it to learn complex manipulation tasks from a practical number of demonstrations. However, these skills are often limited to the particular action, object, and environment *instances* that are shown in the training data, and have trouble transferring to other instances of the same category. In this work we present an open-vocabulary Spatial-Semantic Diffusion policy (S$^2$-Diffusion) which enables generalization from instance-level training data to category-level, making skills transferable between instances of the same category. We show that functional aspects of skills can be captured via a promptable semantic module combined with a spatial representation. We further propose leveraging depth estimation networks to allow the use of only a single RGB camera. Our approach is evaluated and compared on a diverse set of robot manipulation tasks, both in simulation and in the real world. Our results show that S$^2$-Diffusion is invariant to changes in category-irrelevant factors and achieves satisfactory performance on other instances within the same category, even when it was not trained on those specific instances. Full videos of all real-world experiments are available in the supplementary material.

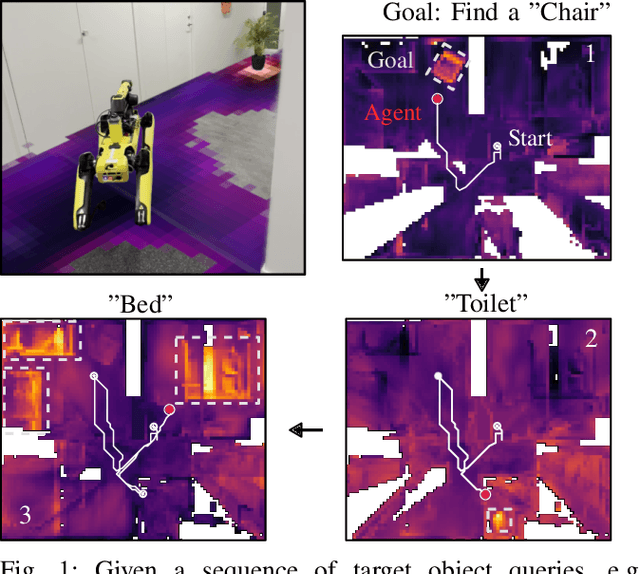

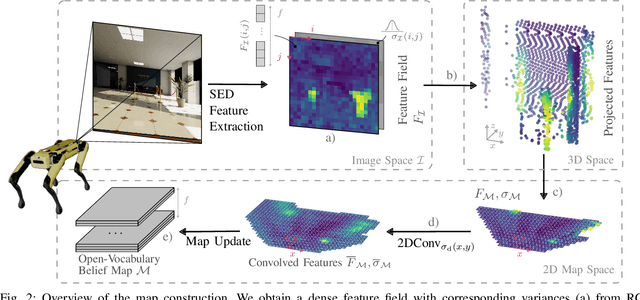

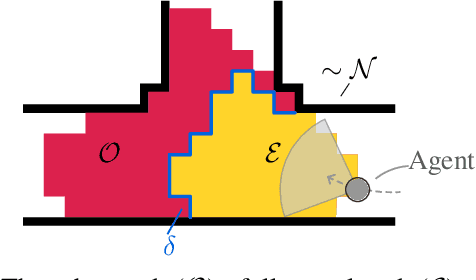

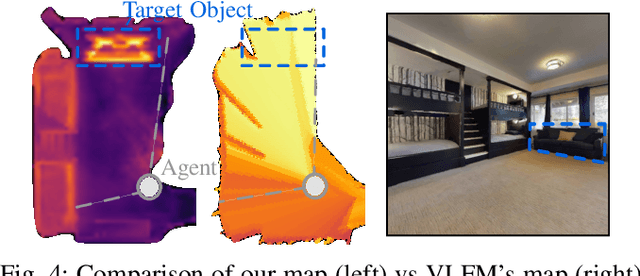

One Map to Find Them All: Real-time Open-Vocabulary Mapping for Zero-shot Multi-Object Navigation

Sep 18, 2024

Abstract:The capability to efficiently search for objects in complex environments is fundamental for many real-world robot applications. Recent advances in open-vocabulary vision models have resulted in semantically-informed object navigation methods that allow a robot to search for an arbitrary object without prior training. However, these zero-shot methods have so far treated the environment as unknown for each consecutive query. In this paper we introduce a new benchmark for zero-shot multi-object navigation, allowing the robot to leverage information gathered from previous searches to more efficiently find new objects. To address this problem we build a reusable open-vocabulary feature map tailored for real-time object search. We further propose a probabilistic-semantic map update that mitigates common sources of errors in semantic feature extraction and leverages this semantic uncertainty for informed multi-object exploration. We evaluate our method on a set of object navigation tasks both in simulation and on a real robot, running in real-time on a Jetson Orin AGX. We demonstrate that it outperforms existing state-of-the-art approaches on both single and multi-object navigation tasks. Additional videos, code and the multi-object navigation benchmark will be available at https://finnbsch.github.io/OneMap.

SeFlow: A Self-Supervised Scene Flow Method in Autonomous Driving

Jul 01, 2024

Abstract:Scene flow estimation predicts the 3D motion at each point in successive LiDAR scans. This detailed, point-level information can help autonomous vehicles accurately predict and understand dynamic changes in their surroundings. Current state-of-the-art methods require annotated data to train scene flow networks and the expense of labeling inherently limits their scalability. Self-supervised approaches can overcome the above limitations, yet face two principal challenges that hinder optimal performance: point distribution imbalance and disregard for object-level motion constraints. In this paper, we propose SeFlow, a self-supervised method that integrates efficient dynamic classification into a learning-based scene flow pipeline. We demonstrate that classifying static and dynamic points helps design targeted objective functions for different motion patterns. We also emphasize the importance of internal cluster consistency and correct object point association to refine the scene flow estimation, in particular on object details. Our real-time capable method achieves state-of-the-art performance on the self-supervised scene flow task on the Argoverse 2 and Waymo datasets. The code is open-sourced at https://github.com/KTH-RPL/SeFlow along with trained model weights.

Learning to Fly Omnidirectional Micro Aerial Vehicles with an End-To-End Control Network

Dec 08, 2023

Abstract:Overactuated tilt-rotor platforms offer many advantages over traditional fixed-arm drones, allowing the decoupling of the applied force from the attitude of the robot. This expands their application areas to aerial interaction and manipulation, and allows them to overcome disturbances such as ground or wall effects by exploiting the additional degrees of freedom available to their controllers. However, the overactuation also complicates the control problem, especially if the motors that tilt the arms have slower dynamics than those spinning the propellers. Instead of building a complex model-based controller that takes all of these subtleties into account, we attempt to learn an end-to-end pose controller using reinforcement learning, and show its superior behavior in the presence of inertial and force disturbances compared to a state-of-the-art traditional controller.

COIN-LIO: Complementary Intensity-Augmented LiDAR Inertial Odometry

Oct 02, 2023

Abstract:We present COIN-LIO, a LiDAR Inertial Odometry pipeline that tightly couples information from LiDAR intensity with geometry-based point cloud registration. The focus of our work is to improve the robustness of LiDAR-inertial odometry in geometrically degenerate scenarios, like tunnels or flat fields. We project LiDAR intensity returns into an intensity image, and propose an image processing pipeline that produces filtered images with improved brightness consistency within the image as well as across different scenes. To effectively leverage intensity as an additional modality, we present a novel feature selection scheme that detects uninformative directions in the point cloud registration and explicitly selects patches with complementary image information. Photometric error minimization in the image patches is then fused with inertial measurements and point-to-plane registration in an iterated Extended Kalman Filter. The proposed approach improves accuracy and robustness on a public dataset. We additionally publish a new dataset that captures five real-world environments in challenging, geometrically degenerate scenes. By using the additional photometric information, our approach shows drastically improved robustness against geometric degeneracy in environments where all compared baseline approaches fail.
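To illustrate what an uninformative direction means for point-to-plane registration, the sketch below considers translation only. A point-to-plane residual measures the offset between a point and its matched surface along the surface normal, so its derivative with respect to a translation is the normal itself, and directions that no normal constrains show up as near-zero eigenvalues of the summed outer products of the normals. The function names and the tolerance are hypothetical, and this simplified analysis is not the feature selection scheme of the paper.

```python
import numpy as np


def translation_information(normals):
    """Sum of n n^T over all matched surface normals (translation part only)."""
    n = np.asarray(normals, dtype=float)
    return n.T @ n


def uninformative_directions(normals, tol=1e-6):
    """Return unit vectors (as rows) along which translation is unconstrained."""
    eigvals, eigvecs = np.linalg.eigh(translation_information(normals))
    # Eigenvectors are the columns of eigvecs; keep those with ~zero eigenvalue.
    return eigvecs[:, eigvals < tol].T


# Flat field: every surface normal points straight up.
flat_field = np.tile([0.0, 0.0, 1.0], (100, 1))
degenerate = uninformative_directions(flat_field)

# Room corner: normals along all three axes constrain every direction.
room = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]] * 10)
well_constrained = uninformative_directions(room)
```

For the flat field, two directions lying in the ground plane come back as unconstrained, while the room corner yields none. In the degenerate case, geometry alone cannot fix the sliding motion, which is the kind of gap that an additional modality such as intensity is meant to fill.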

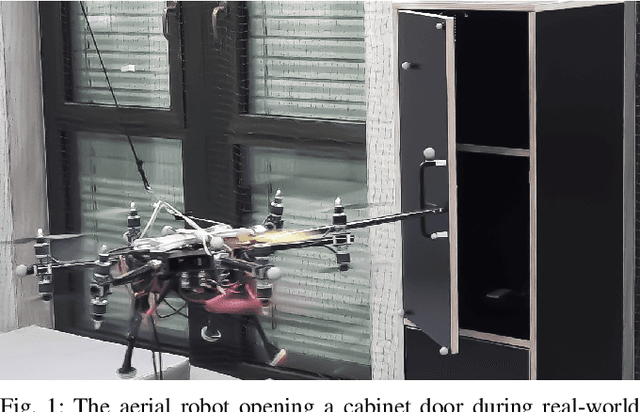

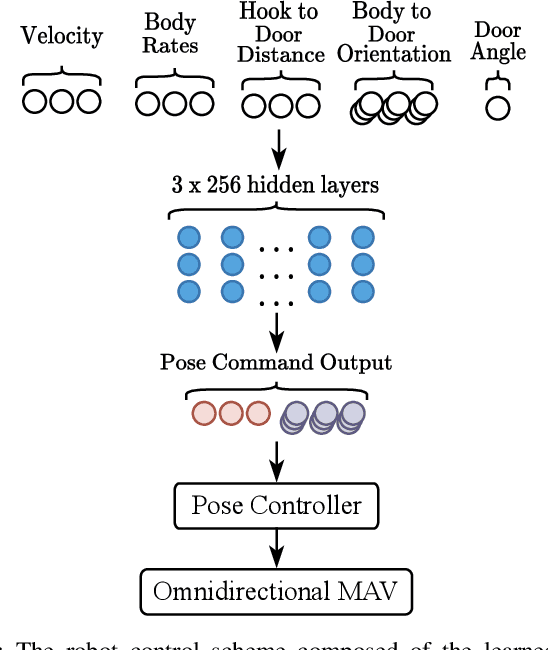

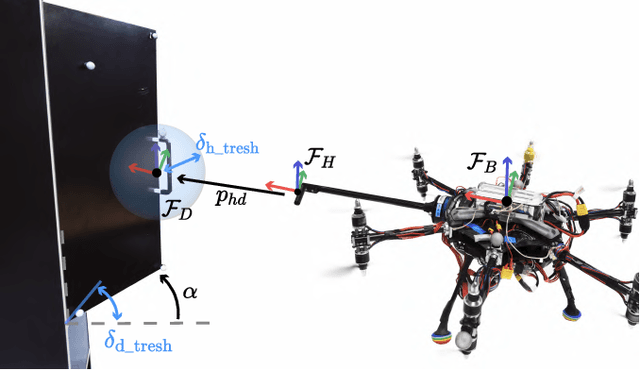

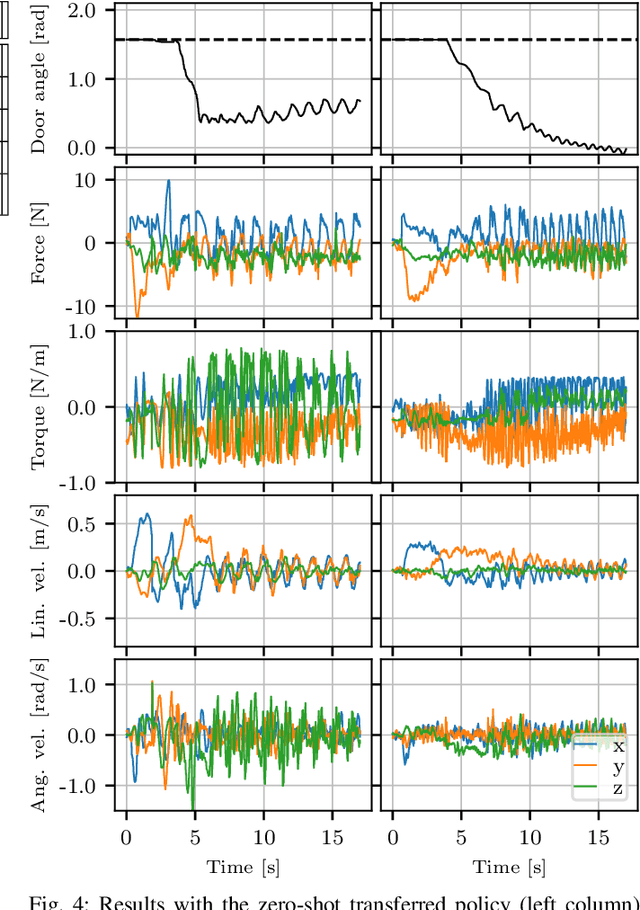

Learning to Open Doors with an Aerial Manipulator

Jul 28, 2023

Abstract:The field of aerial manipulation has seen rapid advances, transitioning from push-and-slide tasks to interaction with articulated objects. So far, when more complex actions are performed, the motion trajectory is usually handcrafted or a result of online optimization methods like Model Predictive Control (MPC) or Model Predictive Path Integral (MPPI) control. However, these methods rely on heuristics or model simplifications to efficiently run on onboard hardware, producing results in acceptable amounts of time. Moreover, they can be sensitive to disturbances and differences between the real environment and its simulated counterpart. In this work, we propose a Reinforcement Learning (RL) approach to learn motion behaviors for a manipulation task while producing policies that are robust to disturbances and modeling errors. Specifically, we train a policy to perform a door-opening task with an Omnidirectional Micro Aerial Vehicle (OMAV). The policy is trained in a physics simulator and experiments are presented both in simulation and running onboard the real platform, investigating the simulation to real world transfer. We compare our method against a state-of-the-art MPPI solution, showing a considerable increase in robustness and speed.
