Patric Jensfelt

TeFlow: Enabling Multi-frame Supervision for Self-Supervised Feed-forward Scene Flow Estimation

Feb 22, 2026

Abstract:Self-supervised feed-forward methods for scene flow estimation offer real-time efficiency, but their supervision from two-frame point correspondences is unreliable and often breaks down under occlusions. Multi-frame supervision has the potential to provide more stable guidance by incorporating motion cues from past frames, yet naive extensions of two-frame objectives are ineffective because point correspondences vary abruptly across frames, producing inconsistent signals. In this paper, we present TeFlow, which enables multi-frame supervision for feed-forward models by mining temporally consistent supervision. TeFlow introduces a temporal ensembling strategy that forms reliable supervisory signals by aggregating the most temporally consistent motion cues from a candidate pool built across multiple frames. Extensive evaluations demonstrate that TeFlow establishes a new state-of-the-art for self-supervised feed-forward methods, achieving performance gains of up to 33% on the challenging Argoverse 2 and nuScenes datasets. Our method performs on par with leading optimization-based methods while running 150 times faster. The code is open-sourced at https://github.com/KTH-RPL/OpenSceneFlow along with trained model weights.

Visible Structure Retrieval for Lightweight Image-Based Relocalisation

Nov 16, 2025

Abstract:Accurate camera pose estimation from an image observation in a previously mapped environment is commonly done through structure-based methods: by finding correspondences between 2D keypoints on the image and 3D structure points in the map. In order to make this correspondence search tractable in large scenes, existing pipelines either rely on search heuristics, or perform image retrieval to reduce the search space by comparing the current image to a database of past observations. However, these approaches result in elaborate pipelines or storage requirements that grow with the number of past observations. In this work, we propose a new paradigm for making structure-based relocalisation tractable. Instead of relying on image retrieval or search heuristics, we learn a direct mapping from image observations to the visible scene structure in a compact neural network. Given a query image, a forward pass through our novel visible structure retrieval network yields the subset of 3D structure points in the map that the image views, thus reducing the search space of 2D-3D correspondences. We show that our proposed method enables localisation with an accuracy comparable to the state of the art, while requiring a lower computational and storage footprint.

ArgoTweak: Towards Self-Updating HD Maps through Structured Priors

Sep 10, 2025

Abstract:Reliable integration of prior information is crucial for self-verifying and self-updating HD maps. However, no public dataset includes the required triplet of prior maps, current maps, and sensor data. As a result, existing methods must rely on synthetic priors, which create inconsistencies and lead to a significant sim2real gap. To address this, we introduce ArgoTweak, the first dataset to complete the triplet with realistic map priors. At its core, ArgoTweak employs a bijective mapping framework, breaking down large-scale modifications into fine-grained atomic changes at the map element level, thus ensuring interpretability. This paradigm shift enables accurate change detection and integration while preserving unchanged elements with high fidelity. Experiments show that training models on ArgoTweak significantly reduces the sim2real gap compared to synthetic priors. Extensive ablations further highlight the impact of structured priors and detailed change annotations. By establishing a benchmark for explainable, prior-aided HD mapping, ArgoTweak advances scalable, self-improving mapping solutions. The dataset, baselines, map modification toolbox, and further resources are available at https://kth-rpl.github.io/ArgoTweak/.

DeltaFlow: An Efficient Multi-frame Scene Flow Estimation Method

Aug 23, 2025

Abstract:Previous dominant methods for scene flow estimation focus mainly on input from two consecutive frames, neglecting valuable information in the temporal domain. While recent trends shift towards multi-frame reasoning, they suffer from rapidly escalating computational costs as the number of frames grows. To leverage temporal information more efficiently, we propose DeltaFlow ($\Delta$Flow), a lightweight 3D framework that captures motion cues via a $\Delta$ scheme, extracting temporal features with minimal computational cost, regardless of the number of frames. Additionally, scene flow estimation faces challenges such as imbalanced object class distributions and motion inconsistency. To tackle these issues, we introduce a Category-Balanced Loss to enhance learning across underrepresented classes and an Instance Consistency Loss to enforce coherent object motion, improving flow accuracy. Extensive evaluations on the Argoverse 2 and Waymo datasets show that $\Delta$Flow achieves state-of-the-art performance with up to 22% lower error and $2\times$ faster inference compared to the next-best multi-frame supervised method, while also demonstrating a strong cross-domain generalization ability. The code is open-sourced at https://github.com/Kin-Zhang/DeltaFlow along with trained model weights.

PRIX: Learning to Plan from Raw Pixels for End-to-End Autonomous Driving

Jul 24, 2025

Abstract:While end-to-end autonomous driving models show promising results, their practical deployment is often hindered by large model sizes, a reliance on expensive LiDAR sensors and computationally intensive BEV feature representations. This limits their scalability, especially for mass-market vehicles equipped only with cameras. To address these challenges, we propose PRIX (Plan from Raw Pixels). Our novel and efficient end-to-end driving architecture operates using only camera data, without explicit BEV representation and forgoing the need for LiDAR. PRIX leverages a visual feature extractor coupled with a generative planning head to predict safe trajectories directly from raw pixel inputs. A core component of our architecture is the Context-aware Recalibration Transformer (CaRT), a novel module designed to effectively enhance multi-level visual features for more robust planning. We demonstrate through comprehensive experiments that PRIX achieves state-of-the-art performance on the NavSim and nuScenes benchmarks, matching the capabilities of larger, multimodal diffusion planners while being significantly more efficient in terms of inference speed and model size, making it a practical solution for real-world deployment. Our work is open-source and the code will be available at https://maxiuw.github.io/prix.

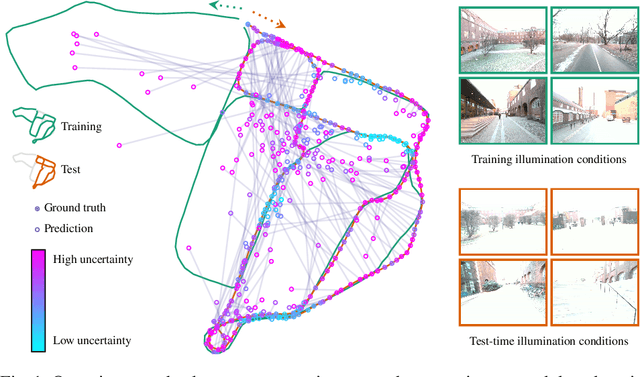

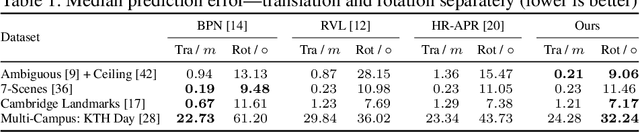

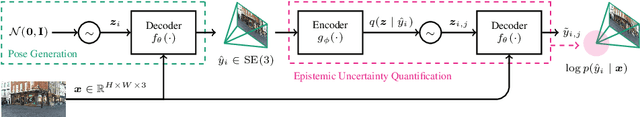

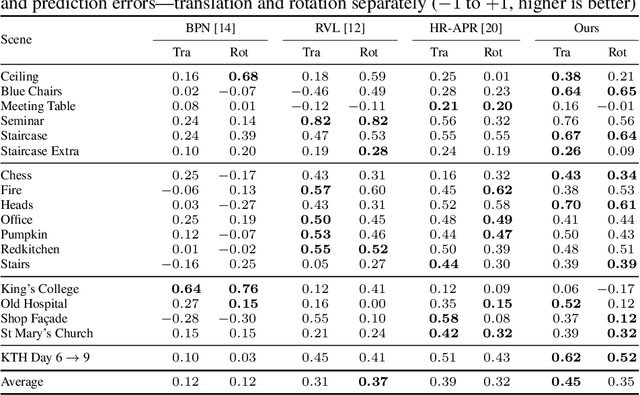

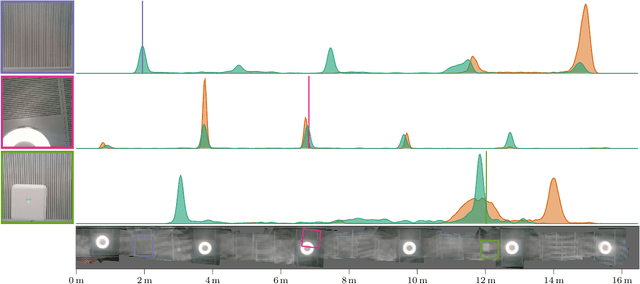

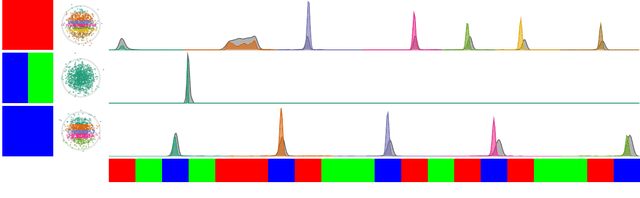

Quantifying Epistemic Uncertainty in Absolute Pose Regression

Apr 09, 2025

Abstract:Visual relocalization is the task of estimating the camera pose given an image it views. Absolute pose regression offers a solution to this task by training a neural network, directly regressing the camera pose from image features. While an attractive solution in terms of memory and compute efficiency, absolute pose regression's predictions are inaccurate and unreliable outside the training domain. In this work, we propose a novel method for quantifying the epistemic uncertainty of an absolute pose regression model by estimating the likelihood of observations within a variational framework. Beyond providing a measure of confidence in predictions, our approach offers a unified model that also handles observation ambiguities, probabilistically localizing the camera in the presence of repetitive structures. Our method outperforms existing approaches in capturing the relation between uncertainty and prediction error.

SSF: Sparse Long-Range Scene Flow for Autonomous Driving

Jan 29, 2025

Abstract:Scene flow enables an understanding of the motion characteristics of the environment in the 3D world. It gains particular significance at long range, where object-based perception methods might fail due to sparse observations far away. Although significant advancements have been made in scene flow pipelines to handle large-scale point clouds, a gap remains in scalability with respect to range. We attribute this limitation to the common design choice of using dense feature grids, which scale quadratically with range. In this paper, we propose Sparse Scene Flow (SSF), a general pipeline for long-range scene flow, adopting a sparse-convolution-based backbone for feature extraction. This approach introduces a new challenge: a mismatch in size and ordering of sparse feature maps between time-sequential point scans. To address this, we propose a sparse feature fusion scheme that augments the feature maps with virtual voxels at missing locations. Additionally, we propose a range-wise metric that implicitly gives greater importance to faraway points. Our method, SSF, achieves state-of-the-art results on the Argoverse 2 dataset, demonstrating strong performance in long-range scene flow estimation. Our code will be released at https://github.com/KTH-RPL/SSF.git.

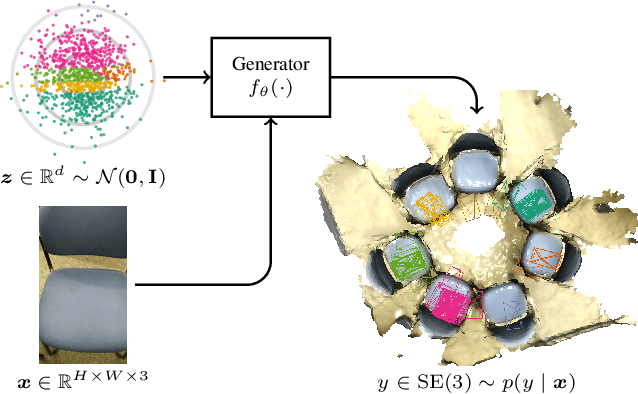

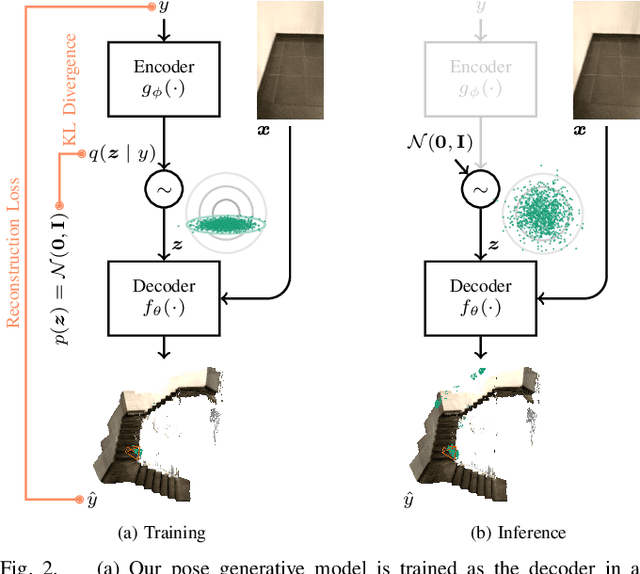

Conditional Variational Autoencoders for Probabilistic Pose Regression

Oct 07, 2024

Abstract:Robots rely on visual relocalization to estimate their pose from camera images when they lose track. One of the challenges in visual relocalization is repetitive structures in the robot's operating environment. This calls for probabilistic methods that support multiple hypotheses for the robot's pose. We propose such a probabilistic method to predict the posterior distribution of camera poses given an observed image. Our proposed training strategy results in a generative model of camera poses given an image, which can be used to draw samples from the pose posterior distribution. Our method is streamlined and well-founded in theory and outperforms existing methods on localization in the presence of ambiguities.

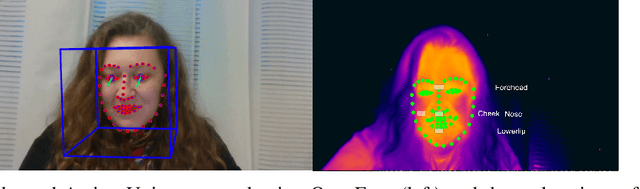

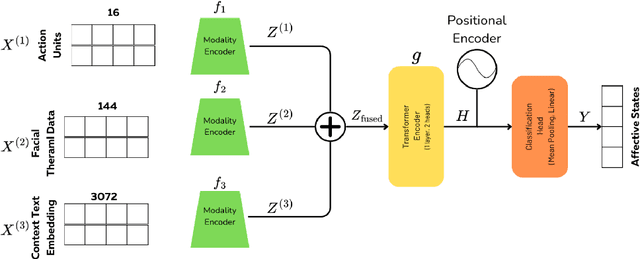

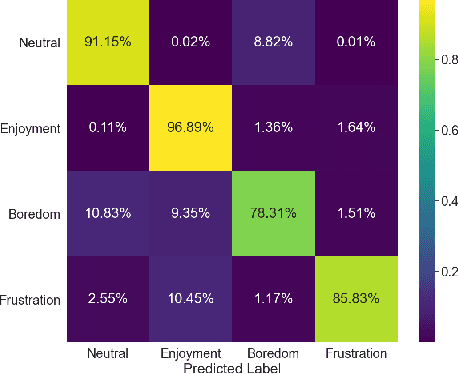

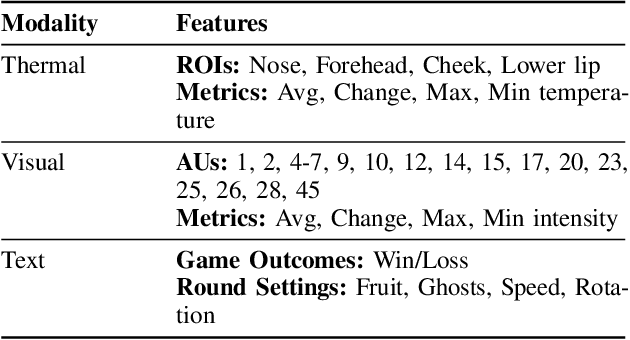

Fusion in Context: A Multimodal Approach to Affective State Recognition

Sep 18, 2024

Abstract:Accurate recognition of human emotions is a crucial challenge in affective computing and human-robot interaction (HRI). Emotional states play a vital role in shaping behaviors, decisions, and social interactions. However, emotional expressions can be influenced by contextual factors, leading to misinterpretations if context is not considered. Multimodal fusion, combining modalities like facial expressions, speech, and physiological signals, has shown promise in improving affect recognition. This paper proposes a transformer-based multimodal fusion approach that leverages facial thermal data, facial action units, and textual context information for context-aware emotion recognition. We explore modality-specific encoders to learn tailored representations, which are then fused using additive fusion and processed by a shared transformer encoder to capture temporal dependencies and interactions. The proposed method is evaluated on a dataset collected from participants engaged in a tangible tabletop Pacman game designed to induce various affective states. Our results demonstrate the effectiveness of incorporating contextual information and multimodal fusion for affective state recognition.

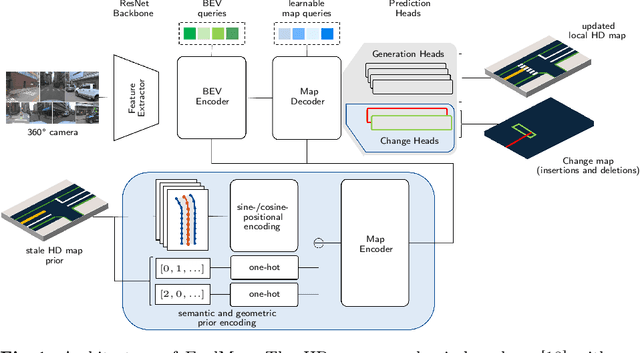

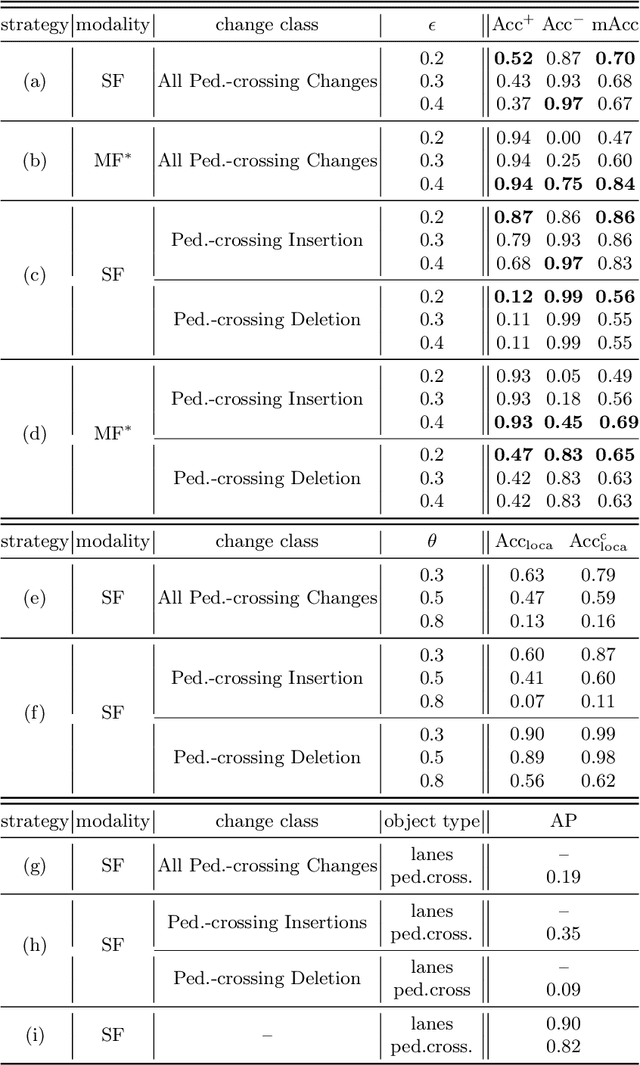

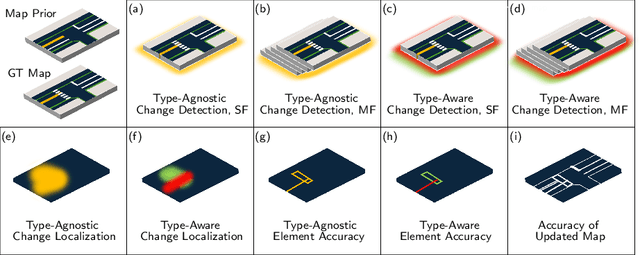

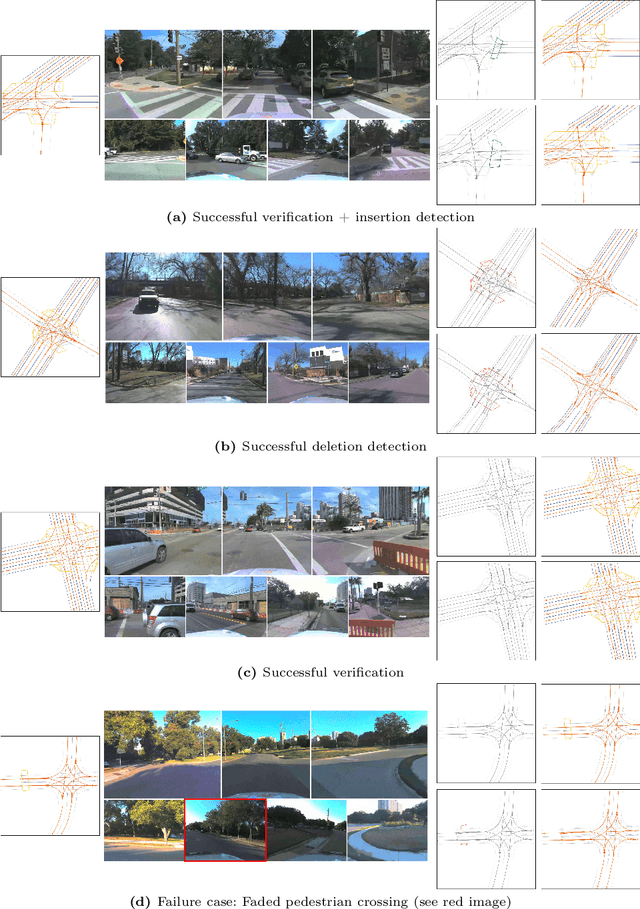

ExelMap: Explainable Element-based HD-Map Change Detection and Update

Sep 16, 2024

Abstract:Acquisition and maintenance are central problems in deploying high-definition (HD) maps for autonomous driving, with two lines of research prevalent in current literature: online HD map generation and HD map change detection. However, the generated map's quality is currently insufficient for safe deployment, and many change detection approaches fail to precisely localize and extract the changed map elements, hence lacking explainability and hindering a potential fleet-based cooperative HD map update. In this paper, we propose the novel task of explainable element-based HD map change detection and update. Extending recent approaches that use online mapping techniques informed by an outdated map prior for HD map updating, we present ExelMap, an explainable element-based map updating strategy that specifically identifies changed map elements. In this context, we discuss how currently used metrics fail to capture change detection performance, while allowing for unfair comparison between prior-less and prior-informed map generation methods. Finally, we present an experimental study on real-world changes related to pedestrian crossings of the Argoverse 2 Map Change Dataset. To the best of our knowledge, this is the first comprehensive problem investigation of real-world end-to-end element-based HD map change detection and update, and ExelMap is the first proposed solution.
