Christian Smith

Division of Robotics, Perception and Learning, KTH - Royal Institute of Technology, Stockholm, Sweden

Design and Evaluation of an Assisted Programming Interface for Behavior Trees in Robotics

Feb 10, 2026

Abstract:The possibility to create reactive robot programs faster without the need for extensively trained programmers is becoming increasingly important. So far, it has not been explored how various techniques for creating Behavior Tree (BT) program representations could be combined with complete graphical user interfaces (GUIs) to allow a human user to validate and edit trees suggested by automated methods. In this paper, we introduce BEhavior TRee GUI (BETR-GUI) for creating BTs with the help of an AI assistant that combines methods using large language models, planning, genetic programming, and Bayesian optimization with a drag-and-drop editor. A user study with 60 participants shows that by combining different assistive methods, BETR-GUI enables users to perform better at solving the robot programming tasks. The results also show that humans using the full variant of BETR-GUI perform better than the AI assistant running on its own.

YCB-Handovers Dataset: Analyzing Object Weight Impact on Human Handovers to Adapt Robotic Handover Motion

Dec 23, 2025

Abstract:This paper introduces the YCB-Handovers dataset, capturing motion data of 2771 human-human handovers with varying object weights. The dataset aims to bridge a gap in human-robot collaboration research, providing insights into the impact of object weight in human handovers and readiness cues for intuitive robotic motion planning. The underlying dataset for object recognition and tracking is the YCB (Yale-CMU-Berkeley) dataset, which is an established standard dataset used in algorithms for robotic manipulation, including grasping and carrying objects. The YCB-Handovers dataset incorporates human motion patterns in handovers, making it applicable for data-driven, human-inspired models aimed at weight-sensitive motion planning and adaptive robotic behaviors. This dataset covers an extensive range of weights, allowing for a more robust study of handover behavior and weight variation. Some objects also require careful handovers, highlighting contrasts with standard handovers. We also provide a detailed analysis of the impact of object weight on the human reaching motion in these handovers.

Adapting Robot's Explanation for Failures Based on Observed Human Behavior in Human-Robot Collaboration

Apr 13, 2025

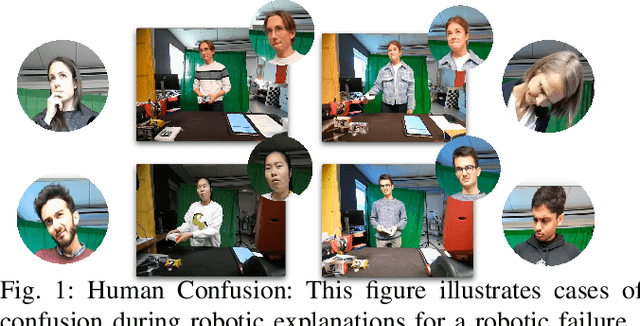

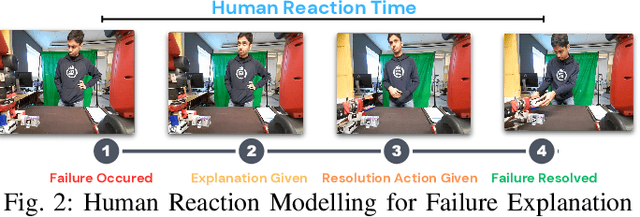

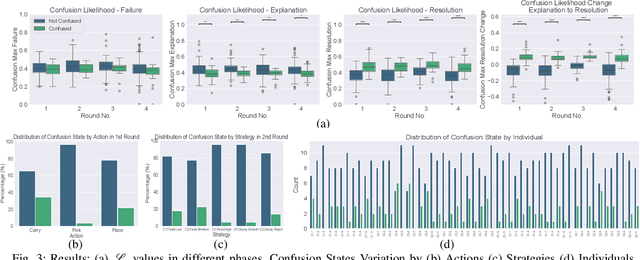

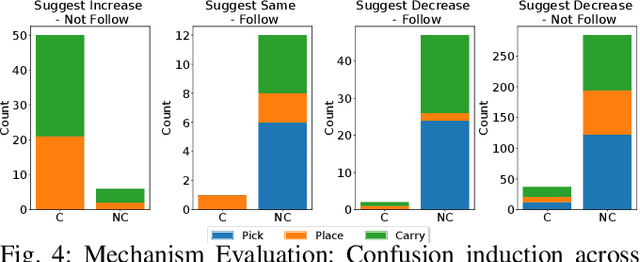

Abstract:This work aims to interpret human behavior to anticipate potential user confusion when a robot provides explanations for failure, allowing the robot to adapt its explanations for more natural and efficient collaboration. Using a dataset that included facial emotion detection, eye gaze estimation, and gestures from 55 participants in a user study, we analyzed how human behavior changed in response to different types of failures and varying explanation levels. Our goal is to assess whether human collaborators are ready to accept less detailed explanations without inducing confusion. We formulate a data-driven predictor to predict human confusion during robot failure explanations. We also propose and evaluate a mechanism, based on the predictor, to adapt the explanation level according to observed human behavior. The promising results from this evaluation indicate the potential of this research in adapting a robot's explanations for failures to enhance the collaborative experience.

Impact of Object Weight in Handovers: Inspiring Robotic Grip Release and Motion from Human Handovers

Feb 25, 2025

Abstract:This work explores the effect of object weight on human motion and grip release during handovers to enhance the naturalness, safety, and efficiency of robot-human interactions. We introduce adaptive robotic strategies based on the analysis of human handover behavior with varying object weights. The key contributions of this work include the development of an adaptive grip-release strategy for robots, a detailed analysis of how object weight influences human motion to guide robotic motion adaptations, and the creation of handover datasets incorporating various object weights, including the YCB handover dataset. By aligning robotic grip release and motion with human behavior, this work aims to improve robot-human handovers for objects of different weights. We also evaluate these human-inspired adaptive robotic strategies in robot-to-human handovers to assess their effectiveness and performance and demonstrate that they outperform the baseline approaches in terms of naturalness, efficiency, and user perception.

REFLEX Dataset: A Multimodal Dataset of Human Reactions to Robot Failures and Explanations

Feb 20, 2025

Abstract:This work presents REFLEX: Robotic Explanations to FaiLures and Human EXpressions, a comprehensive multimodal dataset capturing human reactions to robot failures and subsequent explanations in collaborative settings. It aims to facilitate research into human-robot interaction dynamics, addressing the need to study reactions to both initial failures and explanations, as well as the evolution of these reactions in long-term interactions. By providing rich, annotated data on human responses to different types of failures, explanation levels, and explanation-varying strategies, the dataset contributes to the development of more robust, adaptive, and satisfying robotic systems capable of maintaining positive relationships with human collaborators, even during challenges like repeated failures.

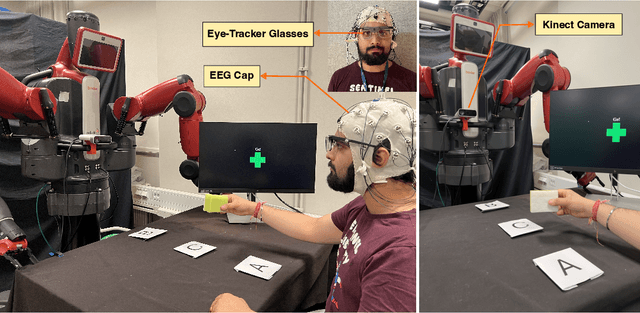

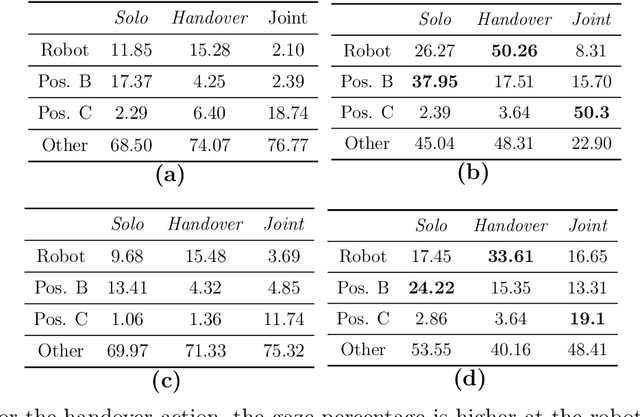

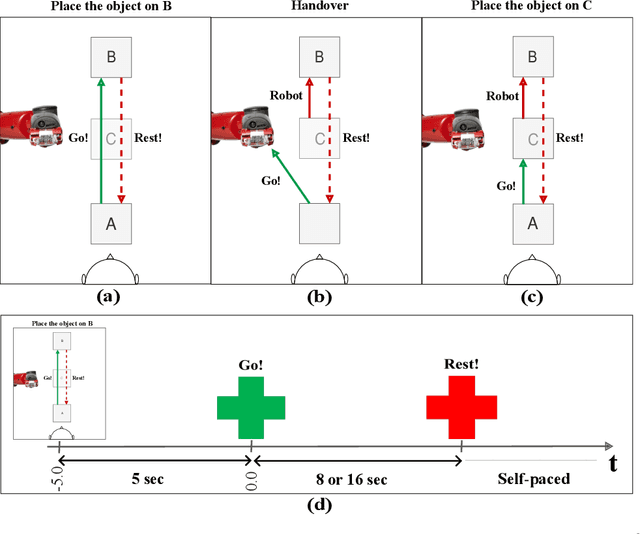

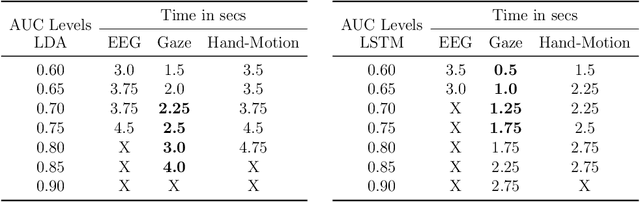

Early Detection of Human Handover Intentions in Human-Robot Collaboration: Comparing EEG, Gaze, and Hand Motion

Feb 17, 2025

Abstract:Human-robot collaboration (HRC) relies on accurate and timely recognition of human intentions to ensure seamless interactions. Among common HRC tasks, human-to-robot object handovers have been studied extensively for planning the robot's actions during object reception, assuming the human intention for object handover. However, distinguishing handover intentions from other actions has received limited attention. Most research on handovers has focused on visually detecting motion trajectories, which often results in delays or false detections when trajectories overlap. This paper investigates whether human intentions for object handovers are reflected in non-movement-based physiological signals. We conduct a multimodal analysis comparing three data modalities: electroencephalogram (EEG), gaze, and hand-motion signals. Our study aims to distinguish between handover-intended human motions and non-handover motions in an HRC setting, evaluating each modality's performance in predicting and classifying these actions before and after human movement initiation. We develop and evaluate human intention detectors based on these modalities, comparing their accuracy and timing in identifying handover intentions. To the best of our knowledge, this is the first study to systematically develop and test intention detectors across multiple modalities within the same experimental context of human-robot handovers. Our analysis reveals that handover intention can be detected from all three modalities. Nevertheless, gaze signals are both the earliest and the most accurate for classifying a motion as intended for handover or non-handover.

How do Humans take an Object from a Robot: Behavior changes observed in a User Study

Jan 03, 2025

Abstract:To facilitate human-robot interaction and gain human trust, a robot should recognize and adapt to changes in human behavior. This work documents different human behaviors observed while taking objects from an interactive robot in an experimental study, categorized across two dimensions: pull force applied and handedness. We also present the changes observed in human behavior upon repeated interaction with the robot to take various objects.

Fusion in Context: A Multimodal Approach to Affective State Recognition

Sep 18, 2024

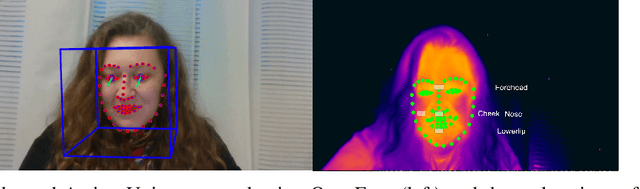

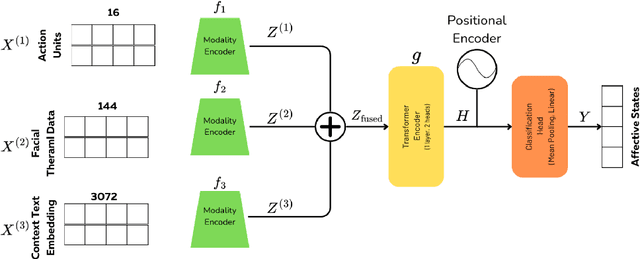

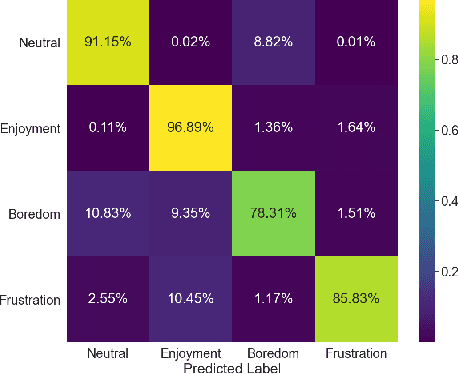

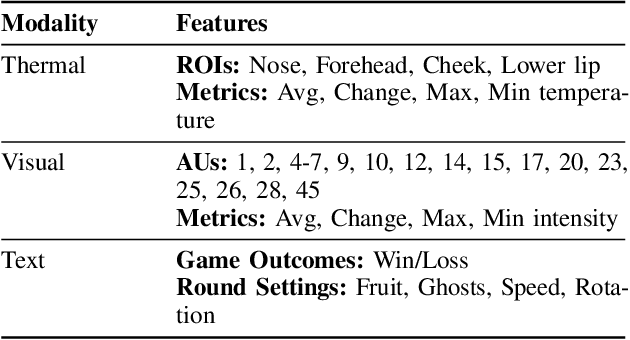

Abstract:Accurate recognition of human emotions is a crucial challenge in affective computing and human-robot interaction (HRI). Emotional states play a vital role in shaping behaviors, decisions, and social interactions. However, emotional expressions can be influenced by contextual factors, leading to misinterpretations if context is not considered. Multimodal fusion, combining modalities like facial expressions, speech, and physiological signals, has shown promise in improving affect recognition. This paper proposes a transformer-based multimodal fusion approach that leverages facial thermal data, facial action units, and textual context information for context-aware emotion recognition. We explore modality-specific encoders to learn tailored representations, which are then fused using additive fusion and processed by a shared transformer encoder to capture temporal dependencies and interactions. The proposed method is evaluated on a dataset collected from participants engaged in a tangible tabletop Pacman game designed to induce various affective states. Our results demonstrate the effectiveness of incorporating contextual information and multimodal fusion for affective state recognition.
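The additive fusion step named in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the feature dimensions, the linear projections standing in for the modality-specific encoders, and all function names are assumptions made for illustration, and the shared transformer encoder that would consume the fused sequence is omitted.

```python
import random
from typing import List, Sequence

Vector = List[float]
Matrix = List[Vector]


def make_projection(in_dim: int, out_dim: int, seed: int) -> Matrix:
    """Random linear map standing in for a learned modality-specific encoder."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]


def project(weights: Matrix, features: Vector) -> Vector:
    """Map one modality's features into the shared embedding space."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


def additive_fusion(embeddings: Sequence[Vector]) -> Vector:
    """Fuse same-sized embeddings by element-wise summation."""
    return [sum(values) for values in zip(*embeddings)]


def fuse_sequence(sequences: Sequence[Sequence[Vector]],
                  projections: Sequence[Matrix]) -> List[Vector]:
    """Fuse time-aligned modality sequences step by step.

    sequences[m][t] is the feature vector of modality m at time step t.
    The result has one fused vector per time step.
    """
    fused = []
    for step_features in zip(*sequences):
        embeddings = [project(w, x) for w, x in zip(projections, step_features)]
        fused.append(additive_fusion(embeddings))
    return fused


if __name__ == "__main__":
    # Hypothetical sizes: 4 thermal features, 3 action-unit features,
    # 5 text-context features, all projected into a shared dimension of 8.
    dims, shared_dim, steps = [4, 3, 5], 8, 6
    rng = random.Random(0)
    sequences = [[[rng.random() for _ in range(d)] for _ in range(steps)] for d in dims]
    projections = [make_projection(d, shared_dim, seed=i) for i, d in enumerate(dims)]
    fused = fuse_sequence(sequences, projections)
    print(len(fused), len(fused[0]))
```

Because summation requires every modality to share one embedding size, each modality needs its own projection first, which is one reason modality-specific encoders pair naturally with this kind of fusion.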

Comparison between Behavior Trees and Finite State Machines

May 25, 2024

Abstract:Behavior Trees (BTs) were first conceived in the computer games industry as a tool to model agent behavior, but they have also received interest in the robotics community as an alternative policy design to Finite State Machines (FSMs). The advantages of BTs over FSMs have been highlighted in many works, but there is no thorough practical comparison of the two designs. Such a comparison is particularly relevant in the robotics industry, where FSMs have been the state-of-the-art policy representation for robot control for many years. In this work we shed light on this matter by comparing how BTs and FSMs behave when controlling a robot in a mobile manipulation task. The comparison is made in terms of reactivity, modularity, readability, and design. We propose metrics for each of these properties, being aware that while some are tangible and objective, others are more subjective and implementation dependent. The practical comparison is performed in a simulation environment with validation on a real robot. We find that although the robot's behavior during task solving is independent of the policy representation, maintaining a BT rather than an FSM becomes easier as the task increases in complexity.
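To make the reactivity property concrete, here is a minimal sketch of a behavior tree with Sequence and Fallback nodes that is re-ticked from the root on every cycle. It is not taken from the paper: the toy pick-and-move task, the world dictionary, and every name below are assumptions chosen for illustration.

```python
from enum import Enum
from typing import Callable, Dict, List


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    RUNNING = "running"


World = Dict[str, bool]


class Leaf:
    """Condition or action node wrapping a function of the world state."""

    def __init__(self, name: str, fn: Callable[[World], Status]):
        self.name = name
        self.fn = fn

    def tick(self, world: World) -> Status:
        return self.fn(world)


class Sequence:
    """Ticks children left to right; stops at the first non-SUCCESS child."""

    def __init__(self, children: List):
        self.children = children

    def tick(self, world: World) -> Status:
        for child in self.children:
            status = child.tick(world)
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS


class Fallback:
    """Ticks children left to right; stops at the first non-FAILURE child."""

    def __init__(self, children: List):
        self.children = children

    def tick(self, world: World) -> Status:
        for child in self.children:
            status = child.tick(world)
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE


def condition(key: str) -> Callable[[World], Status]:
    """Build a condition that succeeds when world[key] is true."""
    return lambda world: Status.SUCCESS if world[key] else Status.FAILURE


def action(key: str) -> Callable[[World], Status]:
    """Build a toy action that sets world[key] and reports RUNNING."""
    def run(world: World) -> Status:
        world[key] = True
        return Status.RUNNING
    return run


def build_tree() -> Sequence:
    """Hypothetical task: hold the object, then be at the goal."""
    return Sequence([
        Fallback([Leaf("holding?", condition("holding")), Leaf("pick", action("holding"))]),
        Fallback([Leaf("at goal?", condition("at_goal")), Leaf("move", action("at_goal"))]),
    ])


if __name__ == "__main__":
    tree = build_tree()
    world = {"holding": False, "at_goal": False}
    for _ in range(3):
        print(tree.tick(world), world)
    world["holding"] = False  # the object is dropped by an external disturbance
    print(tree.tick(world), world)  # the tree returns to picking without extra logic
```

Since every tick re-evaluates the conditions from the root, the dropped object is handled without a dedicated recovery branch. An equivalent FSM would typically need an explicit transition back to the picking state from each state in which the drop could occur.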

Behavior Trees in Industrial Applications: A Case Study in Underground Explosive Charging

Mar 28, 2024

Abstract:In industrial applications, Finite State Machines (FSMs) are often used to implement decision-making policies for autonomous systems. In recent years, the use of Behavior Trees (BTs) as an alternative policy representation has gained considerable attention. The benefits of using BTs over FSMs are modularity and reusability, enabling a system that is easy to extend and modify. However, there exist few published studies on successful implementations of BTs for industrial applications. This paper contributes lessons learned from implementing BTs in a complex industrial use case, where a robotic system assembles explosive charges and places them in holes on the rock face. The main result of the paper is that even if it is possible to model the entire system as a BT, combining BTs with FSMs can increase the readability and maintainability of the system. The benefit of such a combination is especially pronounced in the use case studied in this paper, where the full system cannot run autonomously and human supervision and feedback are needed.
