Parag Khanna

Adapting Robot's Explanation for Failures Based on Observed Human Behavior in Human-Robot Collaboration

Apr 13, 2025

Abstract:This work aims to interpret human behavior to anticipate potential user confusion when a robot provides explanations for failure, allowing the robot to adapt its explanations for more natural and efficient collaboration. Using a dataset that included facial emotion detection, eye gaze estimation, and gestures from 55 participants in a user study, we analyzed how human behavior changed in response to different types of failures and varying explanation levels. Our goal is to assess whether human collaborators are ready to accept less detailed explanations without inducing confusion. We formulate a data-driven predictor to predict human confusion during robot failure explanations. We also propose and evaluate a mechanism, based on the predictor, to adapt the explanation level according to observed human behavior. The promising results from this evaluation indicate the potential of this research in adapting a robot's explanations for failures to enhance the collaborative experience.

Impact of Object Weight in Handovers: Inspiring Robotic Grip Release and Motion from Human Handovers

Feb 25, 2025

Abstract:This work explores the effect of object weight on human motion and grip release during handovers to enhance the naturalness, safety, and efficiency of robot-human interactions. We introduce adaptive robotic strategies based on the analysis of human handover behavior with varying object weights. The key contributions of this work include the development of an adaptive grip-release strategy for robots, a detailed analysis of how object weight influences human motion to guide robotic motion adaptations, and the creation of handover datasets incorporating various object weights, including the YCB handover dataset. By aligning robotic grip release and motion with human behavior, this work aims to improve robot-human handovers for objects of different weights. We also evaluate these human-inspired adaptive robotic strategies in robot-to-human handovers to assess their effectiveness and performance and demonstrate that they outperform the baseline approaches in terms of naturalness, efficiency, and user perception.

REFLEX Dataset: A Multimodal Dataset of Human Reactions to Robot Failures and Explanations

Feb 20, 2025

Abstract:This work presents REFLEX: Robotic Explanations to FaiLures and Human EXpressions, a comprehensive multimodal dataset capturing human reactions to robot failures and subsequent explanations in collaborative settings. It aims to facilitate research into human-robot interaction dynamics, addressing the need to study reactions to both initial failures and explanations, as well as the evolution of these reactions in long-term interactions. By providing rich, annotated data on human responses to different types of failures, explanation levels, and explanation-varying strategies, the dataset contributes to the development of more robust, adaptive, and satisfying robotic systems capable of maintaining positive relationships with human collaborators, even during challenges like repeated failures.

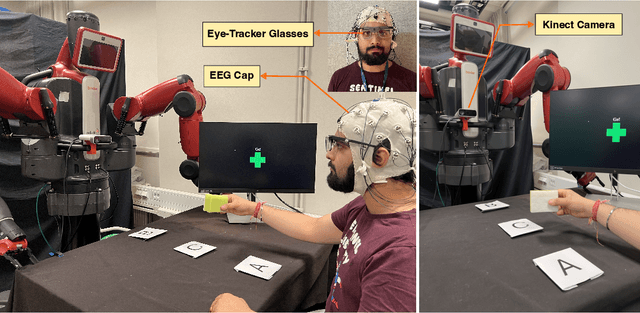

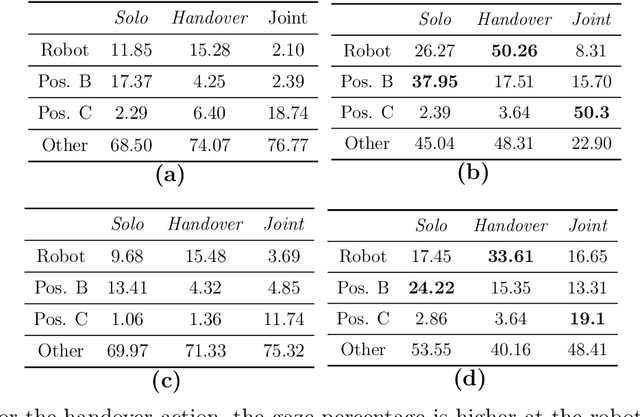

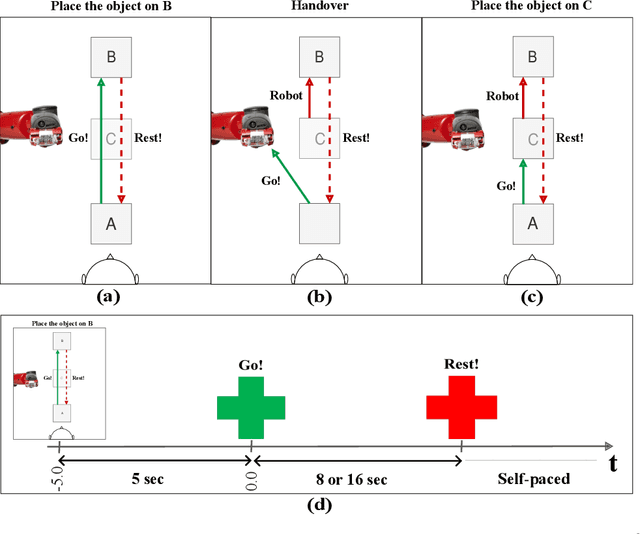

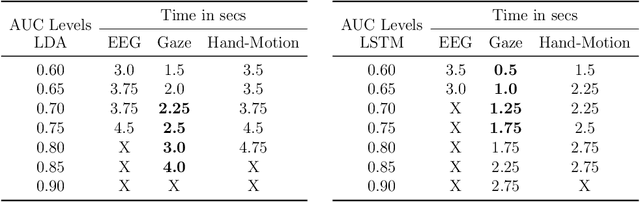

Early Detection of Human Handover Intentions in Human-Robot Collaboration: Comparing EEG, Gaze, and Hand Motion

Feb 17, 2025

Abstract:Human-robot collaboration (HRC) relies on accurate and timely recognition of human intentions to ensure seamless interactions. Among common HRC tasks, human-to-robot object handovers have been studied extensively for planning the robot's actions during object reception, assuming the human intention for object handover. However, distinguishing handover intentions from other actions has received limited attention. Most research on handovers has focused on visually detecting motion trajectories, which often results in delays or false detections when trajectories overlap. This paper investigates whether human intentions for object handovers are reflected in non-movement-based physiological signals. We conduct a multimodal analysis comparing three data modalities: electroencephalogram (EEG), gaze, and hand-motion signals. Our study aims to distinguish between handover-intended human motions and non-handover motions in an HRC setting, evaluating each modality's performance in predicting and classifying these actions before and after human movement initiation. We develop and evaluate human intention detectors based on these modalities, comparing their accuracy and timing in identifying handover intentions. To the best of our knowledge, this is the first study to systematically develop and test intention detectors across multiple modalities within the same experimental context of human-robot handovers. Our analysis reveals that handover intention can be detected from all three modalities. Nevertheless, gaze signals classify a motion as handover or non-handover both earliest and most accurately.

How do Humans take an Object from a Robot: Behavior changes observed in a User Study

Jan 03, 2025

Abstract:To facilitate human-robot interaction and gain human trust, a robot should recognize and adapt to changes in human behavior. This work documents different human behaviors observed while taking objects from an interactive robot in an experimental study, categorized across two dimensions: pull force applied and handedness. We also present the changes observed in human behavior upon repeated interaction with the robot to take various objects.

Effects of Explanation Strategies to Resolve Failures in Human-Robot Collaboration

Sep 18, 2023

Abstract:Despite significant improvements in their capabilities, robots are likely to fail in human-robot collaborative tasks due to high unpredictability in human environments and varying human expectations. In this work, we explore the role of explanation of failures by a robot in a human-robot collaborative task. We present a user study incorporating common failures in collaborative tasks with human assistance to resolve the failure. In the study, a robot and a human work together to fill a shelf with objects. Upon encountering a failure, the robot explains the failure and the resolution to overcome the failure, either through handovers or humans completing the task. The study is conducted using different levels of robotic explanation based on the failure action, failure cause, and action history, and different strategies in providing the explanation over the course of repeated interaction. Our results show that the success in resolving the failures is not only a function of the level of explanation but also the type of failures. Furthermore, while novice users rate the robot higher overall in terms of their satisfaction with the explanation, their satisfaction is not only a function of the robot's explanation level at a certain round but also the prior information they received from the robot.

A Multimodal Data Set of Human Handovers with Design Implications for Human-Robot Handovers

Apr 04, 2023

Abstract:Handovers are basic yet sophisticated motor tasks performed seamlessly by humans. They are among the most common activities in our daily lives and social environments. This makes mastering the art of handovers critical for a social and collaborative robot. In this work, we present an experimental study that involved human-human handovers by 13 pairs, i.e., 26 participants. We record and explore multiple features of handovers amongst humans aimed at inspiring handovers amongst humans and robots. With this work, we further create and publish a novel data set of 8672 handovers, bringing together human motion and the forces involved. We further analyze the effect of object weight and the role of visual sensory input in human-human handovers, as well as possible design implications for robots. As a proof of concept, the data set was used for creating a human-inspired data-driven strategy for robotic grip release in handovers, which was demonstrated to result in better robot-to-human handovers.

Data-driven Grip Force Variation in Robot-Human Handovers

Mar 28, 2023

Abstract:Handovers frequently occur in our social environments, making it imperative for a collaborative robotic system to master the skill of handover. In this work, we aim to investigate the relationship between the grip force variation for a human giver and the sensed interaction force-torque in human-human handovers, utilizing a data-driven approach. A Long Short-Term Memory (LSTM) network was trained to use the interaction force-torque in a handover to predict the human grip force variation in advance. Further, we propose to utilize the trained network to produce human-like grip force variation for a robotic giver.

User Study Exploring the Role of Explanation of Failures by Robots in Human Robot Collaboration Tasks

Mar 28, 2023

Abstract:Despite great advances in what robots can do, they still experience failures in human-robot collaborative tasks due to high randomness in unstructured human environments. Moreover, a human's unfamiliarity with a robot and its abilities can cause such failures to repeat. This makes the ability to explain failures very important for a robot. In this work, we describe a user study that incorporated different robotic failures in a human-robot collaboration (HRC) task aimed at filling a shelf. We included different types of failures and repeated occurrences of such failures in a prolonged interaction between humans and robots. The failure resolution involved human intervention in the form of human-robot bidirectional handovers. Through such studies, we aim to test different explanation types and explanation progression in the interaction and record humans.
