Severin Lemaignan

Express Yourself: Enabling large-scale public events involving multi-human-swarm interaction for social applications with MOSAIX

Nov 15, 2024

Abstract: Robot swarms have the potential to help groups of people with social tasks, given their ability to scale to large numbers of robots and users. Developing multi-human-swarm interaction is therefore crucial to support multiple people interacting with the swarm simultaneously, an area that is scarcely researched, unlike single-human, single-robot or single-human, multi-robot interaction. Moreover, most robots are still confined to laboratory settings. In this paper, we present our work with MOSAIX, a swarm of robot Tiles that facilitated ideation at a science museum. 63 robots were used as a swarm of smart sticky notes, collecting input from the public and aggregating it based on themes, providing an evolving visualization tool that engaged visitors and fostered their participation. Our contribution lies in creating a large-scale (63 robots and 294 attendees) public event, with a completely decentralized swarm system in real-life settings. We also discuss lessons learned that might help future researchers create multi-human-swarm interaction with the public.

Fusion in Context: A Multimodal Approach to Affective State Recognition

Sep 18, 2024

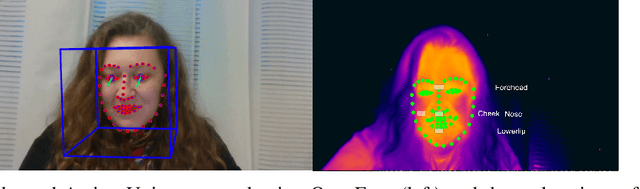

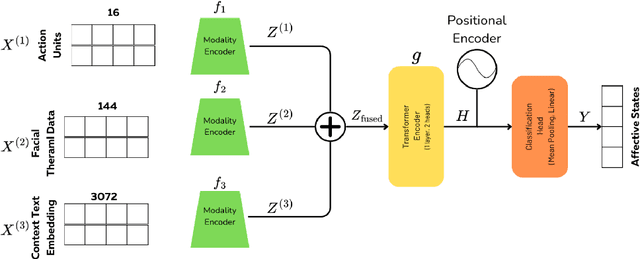

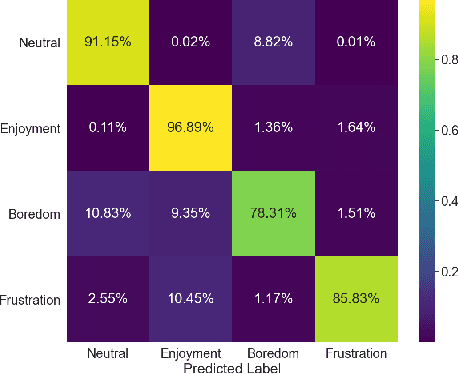

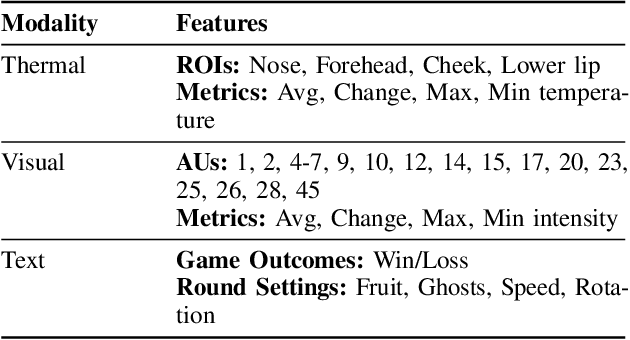

Abstract: Accurate recognition of human emotions is a crucial challenge in affective computing and human-robot interaction (HRI). Emotional states play a vital role in shaping behaviors, decisions, and social interactions. However, emotional expressions can be influenced by contextual factors, leading to misinterpretations if context is not considered. Multimodal fusion, combining modalities like facial expressions, speech, and physiological signals, has shown promise in improving affect recognition. This paper proposes a transformer-based multimodal fusion approach that leverages facial thermal data, facial action units, and textual context information for context-aware emotion recognition. We explore modality-specific encoders to learn tailored representations, which are then fused using additive fusion and processed by a shared transformer encoder to capture temporal dependencies and interactions. The proposed method is evaluated on a dataset collected from participants engaged in a tangible tabletop Pacman game designed to induce various affective states. Our results demonstrate the effectiveness of incorporating contextual information and multimodal fusion for affective state recognition.

Placing by Touching: An empirical study on the importance of tactile sensing for precise object placing

Oct 05, 2022

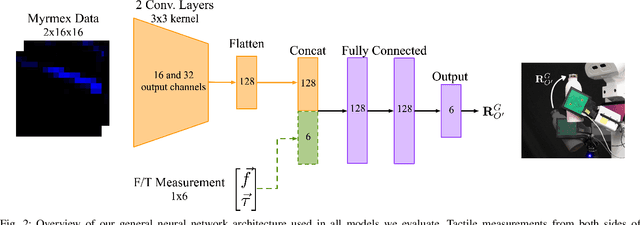

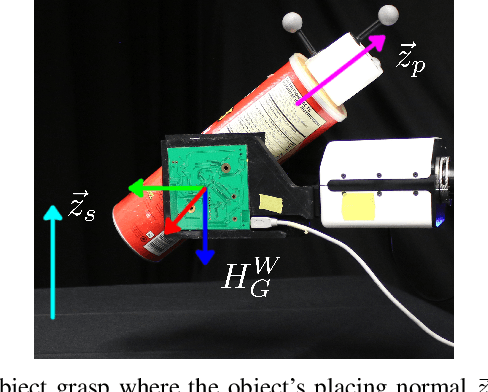

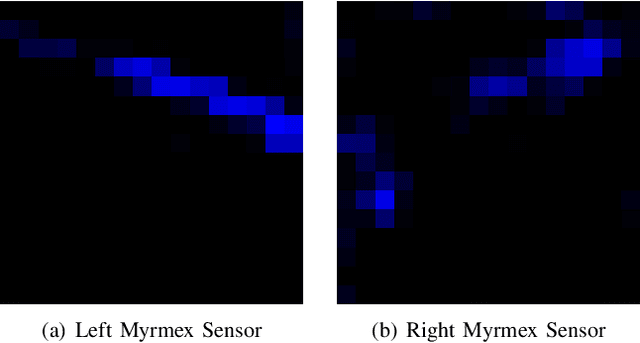

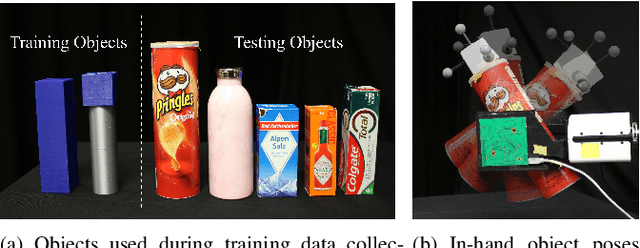

Abstract: Tactile sensors are promising tools for endowing robots with embodied intelligence and increased dexterity. These sensors can provide robotic systems with direct information about physical interactions with the world, which is difficult to obtain from extrinsic perception systems. This work deals with a practical everyday living problem: stable object placement on flat surfaces starting from unknown initial poses. Common approaches for object placing require either complete scene specifications or indirect sensor measurements, such as those from cameras, which are prone to occlusions. Instead, this work proposes a novel approach for stable object placing that combines tactile feedback and proprioceptive sensing. We devise a neural architecture that estimates a rotation matrix which results in a corrective gripper movement that aligns the object with the table and paves the way for the subsequent stable object placement. We compare models with different sensing modalities, such as force-torque and an external motion capture system, in real-world object placement tasks with different objects. Our experimental evaluation of the placing policies with a set of unknown everyday objects reveals an impressive generalization of the tactile-based pipeline and suggests that tactile sensing plays a vital role in the intrinsic understanding of dexterous object manipulation. Videos of our approach are available at https://sites.google.com/view/placing-by-touching.

On Determinism of Game Engines used for Simulation-based Autonomous Vehicle Verification

Apr 29, 2021

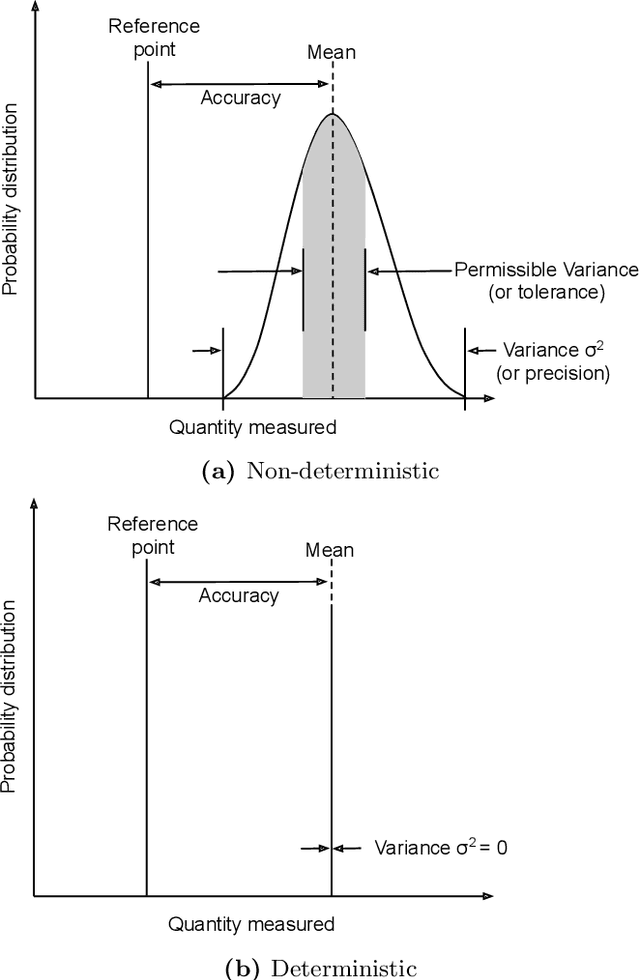

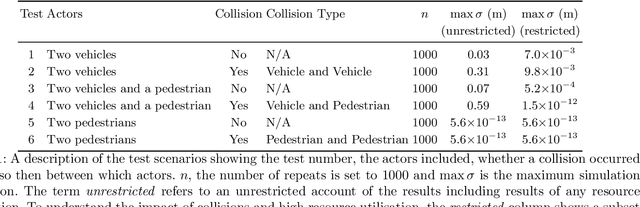

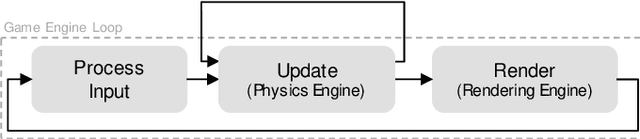

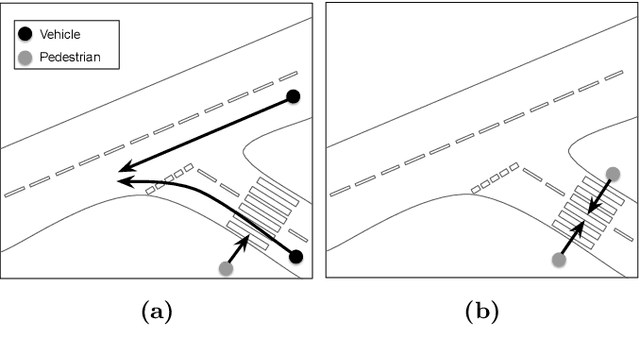

Abstract: Game engines are increasingly used as simulation platforms by the autonomous vehicle (AV) community to develop vehicle control systems and test environments. A key requirement for simulation-based development and verification is determinism, since a deterministic process will always produce the same output given the same initial conditions and event history. Thus, in a deterministic simulation environment, tests are rendered repeatable and yield simulation results that are trustworthy and straightforward to debug. However, game engines are seldom deterministic. This paper reviews and identifies the potential causes of non-deterministic behaviours in game engines. A case study using CARLA, an open-source autonomous driving simulation environment powered by Unreal Engine, is presented to highlight its inherent shortcomings in providing sufficient precision in experimental results. Different configurations and utilisations of the software and hardware are explored to determine an operational domain where the simulation precision is sufficiently low, i.e., variance between repeated executions becomes negligible for development and testing work. Finally, a general method is proposed that can be used to find the domains of permissible variance in game engine simulations for any given system configuration.
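As a rough illustration of what "variance between repeated executions" might mean in practice, the sketch below compares several logged runs of the same scenario and checks whether their worst per-step variance stays under a chosen tolerance. It is not the paper's method: the function names, the trajectory format (one float per simulation step, e.g. a vehicle position coordinate) and the tolerance value are all assumptions made for this example.

```python
from statistics import pvariance


def max_run_variance(runs):
    """Return the largest per-step variance across repeated runs.

    `runs` is a list of trajectories; each trajectory is a list of floats
    logged at the same simulation steps (hypothetical format).
    """
    if len(runs) < 2:
        raise ValueError("need at least two repeated runs to compare")
    if len({len(r) for r in runs}) != 1:
        raise ValueError("all runs must have the same number of steps")
    if len(runs[0]) == 0:
        raise ValueError("runs must contain at least one step")
    # zip(*runs) groups the values that every run logged at the same step.
    return max(pvariance(step_values) for step_values in zip(*runs))


def within_tolerance(runs, tolerance):
    """True if the repeated runs agree to within the permitted variance."""
    return max_run_variance(runs) <= tolerance


# Two identical runs: no variance at any step.
identical = [[0.0, 1.0, 2.0], [0.0, 1.0, 2.0]]
# Two runs that diverge at the second step (values 1.0 and 3.0).
diverging = [[0.0, 1.0], [0.0, 3.0]]

print(within_tolerance(identical, tolerance=1e-6))
print(within_tolerance(diverging, tolerance=1e-6))
```

Taking the maximum over steps is a deliberately conservative choice: a single divergent step is enough to flag the configuration as outside the permitted domain.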

2nd Workshop on Cognitive Architectures for Social Human-Robot Interaction 2016 (CogArch4sHRI 2016)

Feb 04, 2016

Abstract: This volume is the proceedings of the 2nd workshop on Cognitive Architectures for Social Human-Robot Interaction, held at the ACM/IEEE HRI 2016 conference, which took place on Monday 7th March 2016, in Christchurch, New Zealand. Organised by Paul Baxter (Plymouth University, U.K.), J. Gregory Trafton (Naval Research Laboratory, USA), and Severin Lemaignan (Plymouth University, U.K.).
