Abanoub Ghobrial

On Self-Supervised Dynamic Incremental Regularised Adaptation

Nov 13, 2023

Abstract: In this paper, we overview DIRA, a recent method for dynamic domain adaptation that relies on a few samples together with a regularisation approach named elastic weight consolidation to achieve state-of-the-art (SOTA) domain adaptation results. DIRA has previously been shown to perform competitively with SOTA unsupervised adaptation techniques. However, a limitation of DIRA is that it relies on labels being provided for the few samples used in adaptation, which makes it a supervised technique. In this paper, we discuss a proposed alteration to the DIRA method to make it self-supervised, i.e. to remove the need for providing labels. Experiments on our proposed alteration will be provided in future work.

Evaluation Metrics for CNNs Compression

May 18, 2023

Abstract: Considerable research effort is devoted to developing techniques for neural network compression, yet the community seems to lack standardised ways of evaluating and comparing compression techniques, which is key to identifying the most suitable compression technique for a given application. In this paper, we contribute towards the standardisation of neural network compression by providing a review of evaluation metrics. These metrics have been implemented in NetZIP, a standardised neural network compression bench. We showcase some of the metrics reviewed using three case studies focusing on object classification, object detection, and edge devices.

Towards a Measure of Trustworthiness to Evaluate CNNs During Operation

Jan 21, 2023

Abstract: Due to the black-box nature of convolutional neural networks (CNNs), the continuous validation of CNN classifiers during operation is infeasible. This makes it difficult for developers or regulators to gain confidence in the deployment of autonomous systems employing CNNs. We introduce the trustworthiness in classification score (TCS), a metric to assist with overcoming this challenge. The metric quantifies the trustworthiness of a prediction by checking for the existence of certain features in the predictions made by the CNN. A case study on person detection is used to demonstrate our method and the usage of TCS.

Operational Adaptation of DNN Classifiers using Elastic Weight Consolidation

Apr 30, 2022

Abstract: Autonomous systems (AS) often use Deep Neural Network (DNN) classifiers to allow them to operate in complex, high-dimensional, non-linear, and dynamically changing environments. Due to the complexity of these environments, DNN classifiers may misclassify inputs when they encounter new tasks in their operational environments that were not identified during development. Removing a system from operation and retraining it to include the newly identified task becomes economically infeasible as the number of such autonomous systems increases. Additionally, such misclassifications may cause financial losses and safety threats to the AS or to other operators in its environment. In this paper, we propose to reduce such threats by investigating whether DNN classifiers can adapt their knowledge to learn new information in the AS's operational environment, using only a limited number of observations encountered sequentially during operation. This allows the AS to adapt to newly encountered information and hence increases the AS's reliability in making correct classifications. However, retraining DNNs on observations that differ from those used in prior training is known to cause catastrophic forgetting or significant model drift. We investigate whether this problem can be controlled by using Elastic Weight Consolidation (EWC) whilst learning from limited new observations. We carry out experiments using original and noisy versions of the MNIST dataset to represent known and new information to DNN classifiers. Results show that using EWC makes the process of adaptation to new information considerably more controlled, thus allowing for reliable adaptation of ASs to new information in their operational environment.

On Determinism of Game Engines used for Simulation-based Autonomous Vehicle Verification

Apr 29, 2021

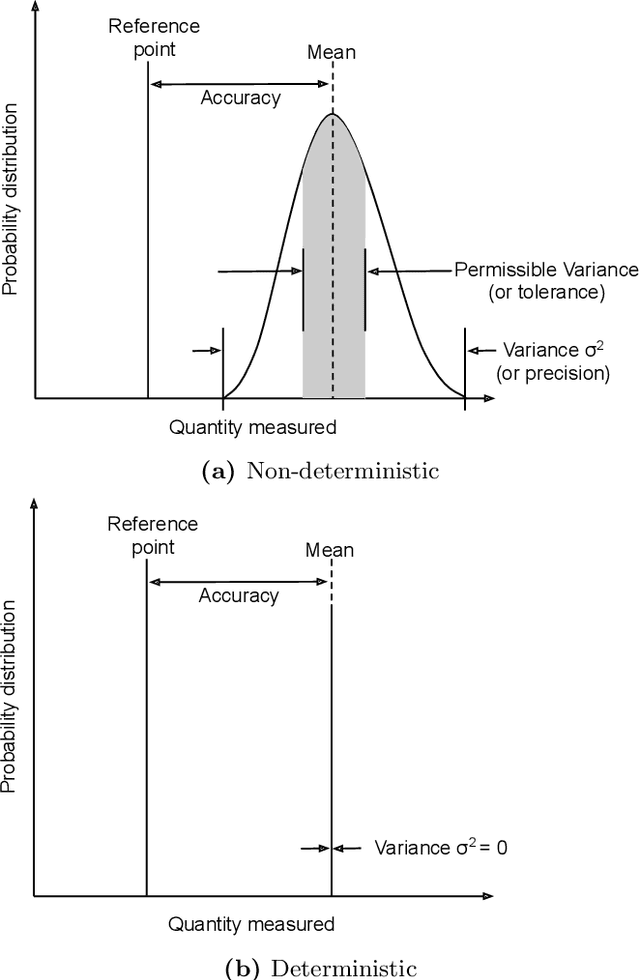

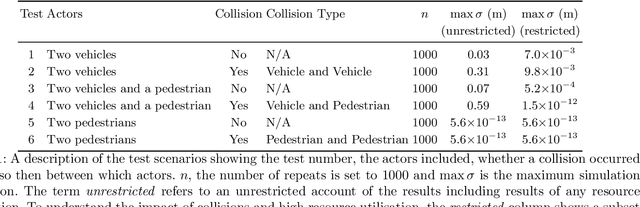

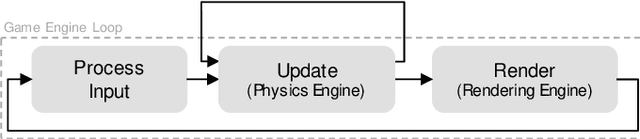

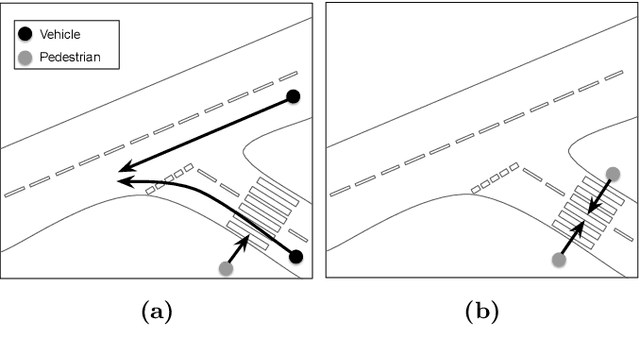

Abstract: Game engines are increasingly used as simulation platforms by the autonomous vehicle (AV) community to develop vehicle control systems and test environments. A key requirement for simulation-based development and verification is determinism, since a deterministic process will always produce the same output given the same initial conditions and event history. Thus, in a deterministic simulation environment, tests are rendered repeatable and yield simulation results that are trustworthy and straightforward to debug. However, game engines are seldom deterministic. This paper reviews and identifies the potential causes of non-deterministic behaviours in game engines. A case study using CARLA, an open-source autonomous driving simulation environment powered by Unreal Engine, is presented to highlight its inherent shortcomings in providing sufficient precision in experimental results. Different configurations and utilisations of the software and hardware are explored to determine an operational domain where the simulation is sufficiently precise, i.e. variance between repeated executions becomes negligible for development and testing work. Finally, a general method is proposed that can be used to find the domains of permissible variance in game engine simulations for any given system configuration.
