Hamid Asgari

AI-Native Multi-Access Future Networks -- The REASON Architecture

Nov 25, 2024

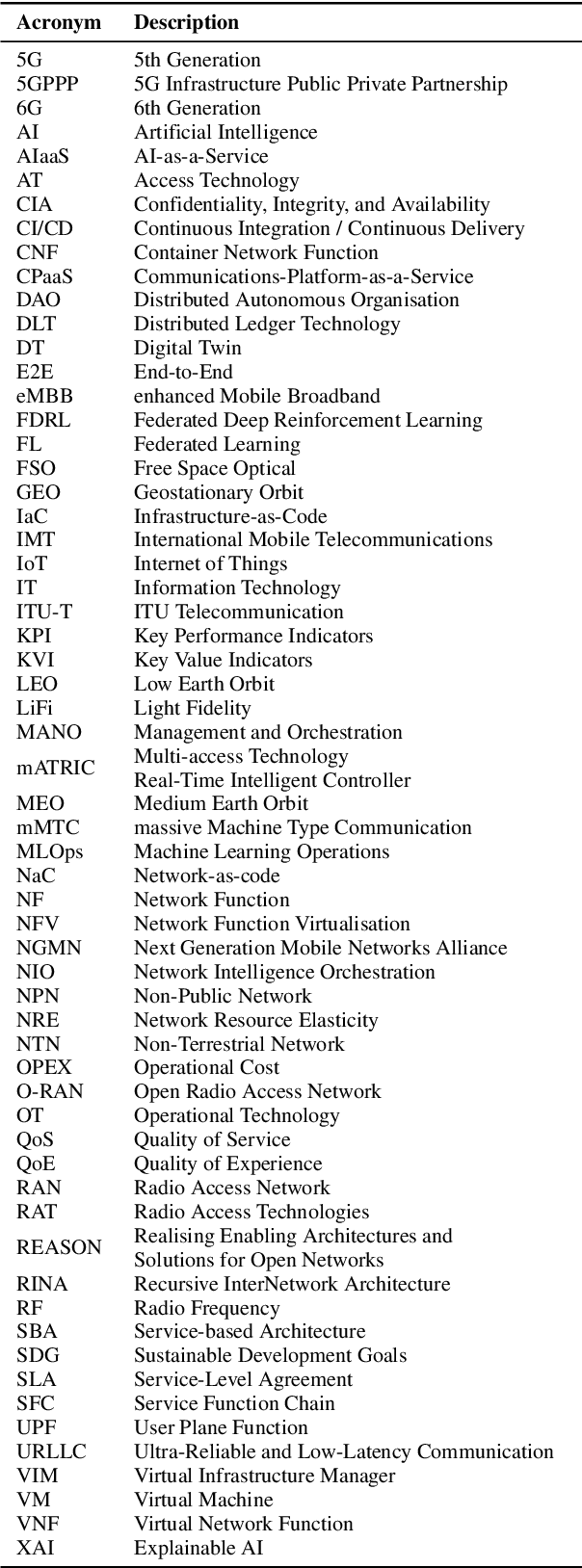

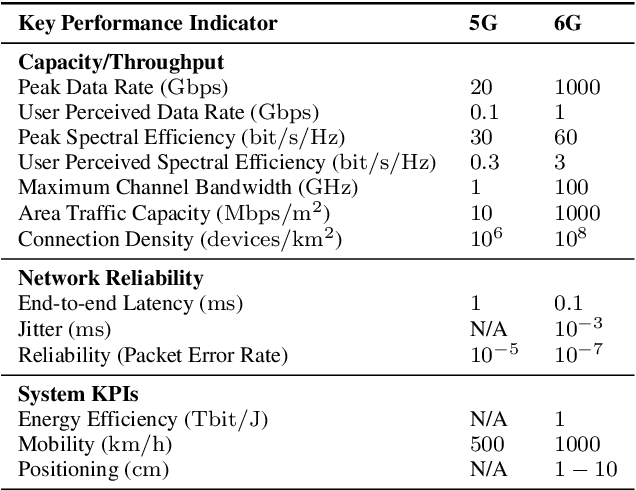

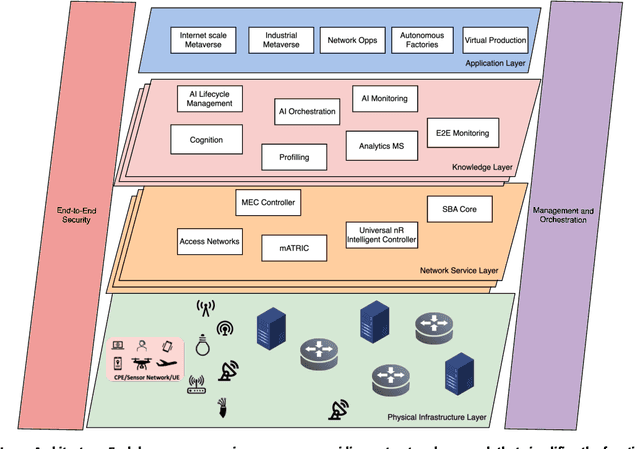

Abstract: The development of the sixth generation of communication networks (6G) has gained momentum in recent years, with introduction targeted for 2030. Several initiatives worldwide are developing innovative solutions and setting the direction for the key features of these networks. Common emerging themes are the tight integration of AI, the convergence of multiple access technologies, and sustainable operation, all aiming to meet stringent performance and societal requirements. To that end, we introduce REASON (Realising Enabling Architectures and Solutions for Open Networks). The REASON project aims to address technical challenges in future network deployments, such as E2E service orchestration, sustainability, security and trust management, and policy management, utilising AI-native principles and considering multiple access technologies and cloud-native solutions. This paper presents REASON's architecture and the identified requirements for future networks. The architecture is designed for modularity, interoperability, scalability, simplified troubleshooting, flexibility, and enhanced security, taking into consideration current and future standardisation efforts and the ease of implementation and training. It is structured into four horizontal layers: Physical Infrastructure, Network Service, Knowledge, and End-User Application, complemented by two vertical layers: Management and Orchestration, and E2E Security. This layered approach provides a robust, adaptable framework to support the diverse and evolving requirements of 6G networks, fostering innovation and facilitating seamless integration of advanced technologies.

Grand Challenges in the Verification of Autonomous Systems

Nov 21, 2024

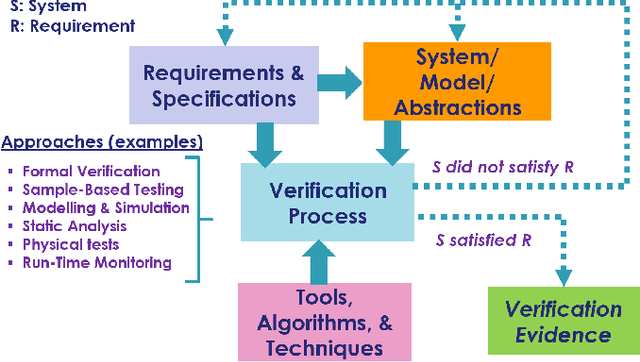

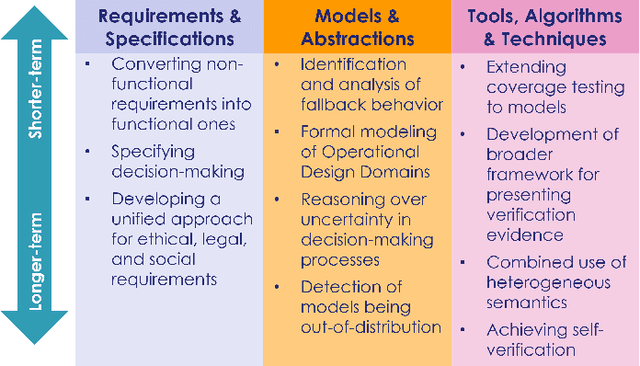

Abstract: Autonomous systems use independent decision-making with only limited human intervention to accomplish goals in complex and unpredictable environments. As the autonomy technologies that underpin them continue to advance, these systems will find their way into an increasing number of applications in an ever wider range of settings. If we are to deploy them to perform safety-critical or mission-critical roles, it is imperative that we have justified confidence in their safe and correct operation. Verification is the process by which such confidence is established. However, autonomous systems pose challenges to existing verification practices. This paper highlights viewpoints of the Roadmap Working Group of the IEEE Robotics and Automation Society Technical Committee for Verification of Autonomous Systems, identifying these grand challenges, and providing a vision for future research efforts that will be needed to address them.

Evaluation Metrics for CNNs Compression

May 18, 2023

Abstract: Considerable research effort is devoted to developing techniques for neural network compression, yet the community seems to lack standardised ways of evaluating and comparing different compression techniques, which is key to identifying the most suitable compression technique for a given application. In this paper we contribute towards the standardisation of neural network compression by providing a review of evaluation metrics. These metrics have been implemented in NetZIP, a standardised neural network compression bench. We showcase some of the metrics reviewed using three case studies focusing on object classification, object detection, and edge devices.

Towards a Measure of Trustworthiness to Evaluate CNNs During Operation

Jan 21, 2023

Abstract: Due to the black-box nature of convolutional neural networks (CNNs), the continuous validation of CNN classifiers during operation is infeasible. This makes it difficult for developers or regulators to gain confidence in the deployment of autonomous systems employing CNNs. We introduce the trustworthiness in classification score (TCS), a metric to assist with overcoming this challenge. The metric quantifies the trustworthiness of a prediction by checking for the existence of certain features in the predictions made by the CNN. A case study on person detection is used to demonstrate our method and the usage of TCS.

Operational Adaptation of DNN Classifiers using Elastic Weight Consolidation

Apr 30, 2022

Abstract: Autonomous systems (AS) often use Deep Neural Network (DNN) classifiers to allow them to operate in complex, high-dimensional, non-linear, and dynamically changing environments. Due to the complexity of these environments, DNN classifiers may misclassify inputs when they encounter new tasks in their operational environments that were not identified during development. Removing a system from operation and retraining it to include the newly identified task becomes economically infeasible as the number of such autonomous systems increases. Additionally, such misclassifications may cause financial losses and safety threats to the AS or to other operators in its environment. In this paper, we propose to reduce such threats by investigating whether DNN classifiers can adapt their knowledge to learn new information in the AS's operational environment, using only a limited number of observations encountered sequentially during operation. This allows the AS to adapt to newly encountered information and hence increases the AS's reliability in making correct classifications. However, retraining DNNs on observations different from those used in prior training is known to cause catastrophic forgetting or significant model drift. We investigate whether this problem can be controlled by using Elastic Weight Consolidation (EWC) whilst learning from limited new observations. We carry out experiments using original and noisy versions of the MNIST dataset to represent known and new information to DNN classifiers. Results show that using EWC makes the process of adaptation to new information considerably more controlled, thus allowing for reliable adaptation of ASs to new information in their operational environment.
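To illustrate the idea behind Elastic Weight Consolidation mentioned in the abstract above, here is a minimal sketch of the standard EWC quadratic penalty. It is not the authors' implementation: the function names (`ewc_penalty`, `ewc_loss`, `ewc_penalty_grad`), the use of flat Python lists for parameters, and the diagonal Fisher estimate passed in as `fisher_diag` are all assumptions made for illustration.

```python
def ewc_penalty(params, anchor_params, fisher_diag, lam):
    """Standard EWC penalty: (lam / 2) * sum_i F_i * (theta_i - theta*_i)^2.

    params        -- current parameter values (hypothetical flat list)
    anchor_params -- parameter values learned on the previously known task
    fisher_diag   -- assumed diagonal Fisher information estimate per parameter
    lam           -- strength of the pull towards the anchor parameters
    """
    return 0.5 * lam * sum(
        f * (p - a) ** 2
        for p, a, f in zip(params, anchor_params, fisher_diag)
    )


def ewc_loss(new_task_loss, params, anchor_params, fisher_diag, lam):
    """Total objective: loss on the new observations plus the EWC penalty."""
    return new_task_loss + ewc_penalty(params, anchor_params, fisher_diag, lam)


def ewc_penalty_grad(params, anchor_params, fisher_diag, lam):
    """Gradient of the penalty with respect to each parameter."""
    return [
        lam * f * (p - a)
        for p, a, f in zip(params, anchor_params, fisher_diag)
    ]
```

Parameters with a large Fisher value are held close to their previous values, while parameters with a small Fisher value remain free to move, which is how the penalty limits forgetting while still permitting adaptation to new observations.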
