Martin S. Feather

Grand Challenges in the Verification of Autonomous Systems

Nov 21, 2024

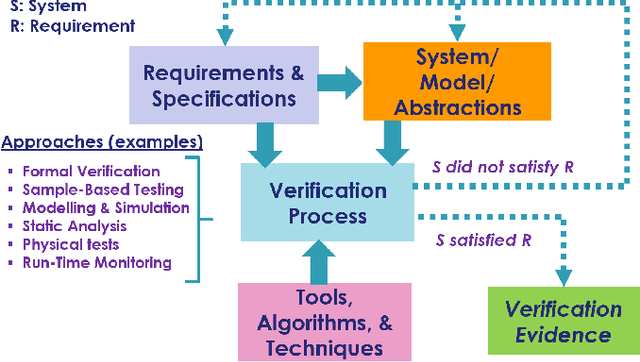

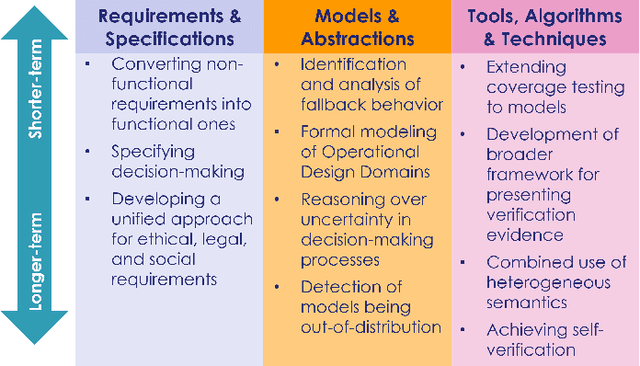

Abstract: Autonomous systems use independent decision-making with only limited human intervention to accomplish goals in complex and unpredictable environments. As the autonomy technologies that underpin them continue to advance, these systems will find their way into an increasing number of applications in an ever wider range of settings. If we are to deploy them to perform safety-critical or mission-critical roles, it is imperative that we have justified confidence in their safe and correct operation. Verification is the process by which such confidence is established. However, autonomous systems pose challenges to existing verification practices. This paper highlights viewpoints of the Roadmap Working Group of the IEEE Robotics and Automation Society Technical Committee for Verification of Autonomous Systems, identifying these grand challenges, and providing a vision for future research efforts that will be needed to address them.

Sound Heuristic Search Value Iteration for Undiscounted POMDPs with Reachability Objectives

Jun 05, 2024

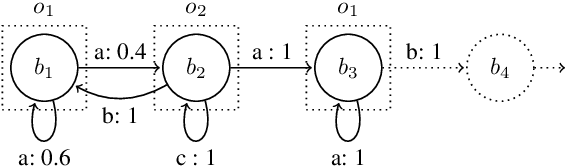

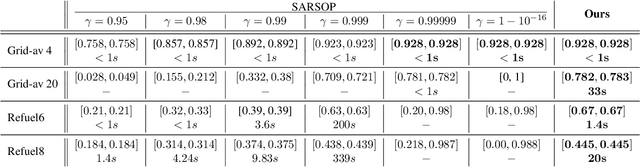

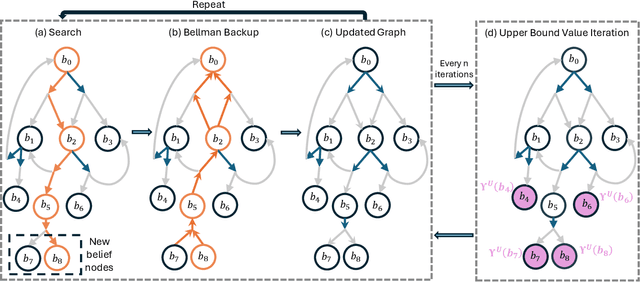

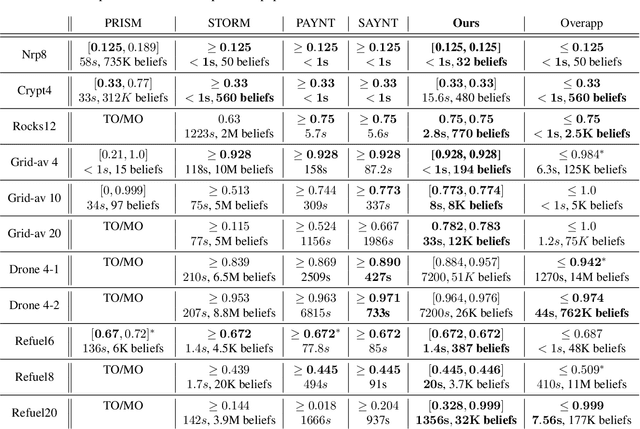

Abstract: Partially Observable Markov Decision Processes (POMDPs) are powerful models for sequential decision making under transition and observation uncertainties. This paper studies the challenging yet important problem in POMDPs known as the (indefinite-horizon) Maximal Reachability Probability Problem (MRPP), where the goal is to maximize the probability of reaching some target states. This is also a core problem in model checking with logical specifications and is naturally undiscounted (discount factor is one). Inspired by the success of point-based methods developed for discounted problems, we study their extensions to MRPP. Specifically, we focus on trial-based heuristic search value iteration techniques and present a novel algorithm that leverages the strengths of these techniques for efficient exploration of the belief space (informed search via value bounds) while addressing their drawbacks in handling loops for indefinite-horizon problems. The algorithm produces policies with two-sided bounds on optimal reachability probabilities. We prove convergence to an optimal policy from below under certain conditions. Experimental evaluations on a suite of benchmarks show that our algorithm outperforms existing methods in almost all cases in both probability guarantees and computation time.

Assurance for Autonomy -- JPL's past research, lessons learned, and future directions

May 16, 2023

Abstract: Robotic space missions have long depended on automation, defined in the 2015 NASA Technology Roadmaps as "the automatically-controlled operation of an apparatus, process, or system using a pre-planned set of instructions (e.g., a command sequence)," to react to events when a rapid response is required. Autonomy, defined there as "the capacity of a system to achieve goals while operating independently from external control," is required when a wide variation in circumstances precludes responses being pre-planned; instead, autonomy follows an on-board deliberative process to determine the situation, decide the response, and manage its execution. Autonomy is increasingly called for to support adventurous space mission concepts, as an enabling capability or as a significant enhancer of the science value that those missions can return. But if autonomy is to be allowed to control these missions' expensive assets, all parties in the lifetime of a mission, from proposers through ground control, must have high confidence that autonomy will perform as intended to keep the asset safe to (if possible) accomplish the mission objectives. Mission assurance is a key contributor to providing this confidence, yet assurance practices honed over decades of spaceflight have relatively little experience with autonomy. To remedy this situation, researchers in JPL's software assurance group have been involved in the development of techniques specific to the assurance of autonomy. This paper summarizes over two decades of this research, and offers a vision of where further work is needed to address open issues.
