Brian Logan

Pushdown Reward Machines for Reinforcement Learning

Aug 09, 2025

Abstract:Reward machines (RMs) are automata structures that encode (non-Markovian) reward functions for reinforcement learning (RL). RMs can reward any behaviour representable in regular languages and, when paired with RL algorithms that exploit RM structure, have been shown to significantly improve sample efficiency in many domains. In this work, we present pushdown reward machines (pdRMs), an extension of reward machines based on deterministic pushdown automata. pdRMs can recognize and reward temporally extended behaviours representable in deterministic context-free languages, making them more expressive than reward machines. We introduce two variants of pdRM-based policies, one which has access to the entire stack of the pdRM, and one which can only access the top $k$ symbols (for a given constant $k$) of the stack. We propose a procedure to check when the two kinds of policies (for a given environment, pdRM, and constant $k$) achieve the same optimal expected reward. We then provide theoretical results establishing the expressive power of pdRMs, and space complexity results about the proposed learning problems. Finally, we provide experimental results showing how agents can be trained to perform tasks representable in deterministic context-free languages using pdRMs.
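To make the idea concrete, here is a minimal sketch of a pushdown reward machine, written under assumptions that are not taken from the paper: the task ("pick up some items, drop every one of them, then go home"), the labels `pick`, `drop` and `home`, the state names, the class `PushdownRewardMachine`, and the helpers `make_machine` and `run` are all hypothetical. The stack counts outstanding items, which a finite-state reward machine cannot do for unbounded counts, and `top_k` illustrates the restricted view available to a policy that sees only the top $k$ stack symbols.

```python
from dataclasses import dataclass, field

BOTTOM = "Z"  # bottom-of-stack marker (illustrative choice)


@dataclass
class PushdownRewardMachine:
    # delta maps (state, label, top symbol) ->
    #   (next state, symbols that replace the top, reward).
    # The last symbol of the replacement tuple becomes the new top.
    delta: dict
    state: str = "collect"
    stack: list = field(default_factory=lambda: [BOTTOM])

    def step(self, label):
        """Consume one environment label and return the reward."""
        key = (self.state, label, self.stack[-1])
        if key not in self.delta:
            return 0.0  # no matching transition: configuration unchanged
        next_state, replacement, reward = self.delta[key]
        self.stack.pop()
        self.stack.extend(replacement)
        self.state = next_state
        return reward

    def top_k(self, k):
        """The part of the stack visible to a top-k policy."""
        return tuple(self.stack[-k:])


def make_machine():
    # Hypothetical task: any number of picks, the same number of drops,
    # then reach home. Reward 1.0 only if the stack is back to BOTTOM.
    delta = {
        ("collect", "pick", BOTTOM): ("collect", (BOTTOM, "I"), 0.0),
        ("collect", "pick", "I"): ("collect", ("I", "I"), 0.0),
        ("collect", "drop", "I"): ("deliver", (), 0.0),
        ("deliver", "drop", "I"): ("deliver", (), 0.0),
        ("deliver", "home", BOTTOM): ("done", (BOTTOM,), 1.0),
    }
    return PushdownRewardMachine(delta)


def run(labels):
    """Total reward collected along a sequence of labels."""
    machine = make_machine()
    return sum(machine.step(label) for label in labels)
```

In this sketch, `run(["pick", "pick", "drop", "drop", "home"])` yields a total reward of 1.0, whereas dropping only one of the two items before going home yields 0.0. A policy conditioned on `(machine.state, machine.top_k(k))` rather than on the full stack corresponds to the restricted variant described in the abstract.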

Temporal Causal Reasoning with (Non-Recursive) Structural Equation Models

Jan 17, 2025

Abstract:Structural Equation Models (SEMs) are the standard approach to representing causal dependencies between variables in causal models. In this paper we propose a new interpretation of SEMs when reasoning about Actual Causality, in which SEMs are viewed as mechanisms transforming the dynamics of exogenous variables into the dynamics of endogenous variables. This allows us to combine counterfactual causal reasoning with existing temporal logic formalisms, and to introduce a temporal logic, CPLTL, for causal reasoning about such structures. We show that the standard restriction to so-called recursive models (with no cycles in the dependency graph) is not necessary in our approach, allowing us to reason about mutually dependent processes and feedback loops. Finally, we introduce new notions of model equivalence for temporal causal models, and show that CPLTL has an efficient model-checking procedure.

Probabilistic Strategy Logic with Degrees of Observability

Dec 19, 2024

Abstract:There has been considerable work on reasoning about the strategic ability of agents under imperfect information. However, existing logics such as Probabilistic Strategy Logic are unable to express properties relating to information transparency. Information transparency concerns the extent to which agents' actions and behaviours are observable by other agents. Reasoning about information transparency is useful in many domains including security, privacy, and decision-making. In this paper, we present a formal framework for reasoning about information transparency properties in stochastic multi-agent systems. We extend Probabilistic Strategy Logic with new observability operators that capture the degree of observability of temporal properties by agents. We show that the model checking problem for the resulting logic is decidable.

Grand Challenges in the Verification of Autonomous Systems

Nov 21, 2024

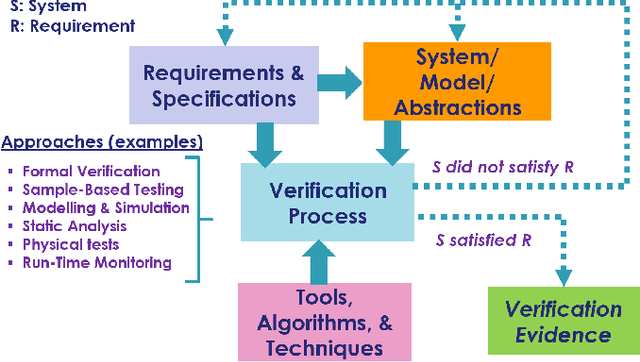

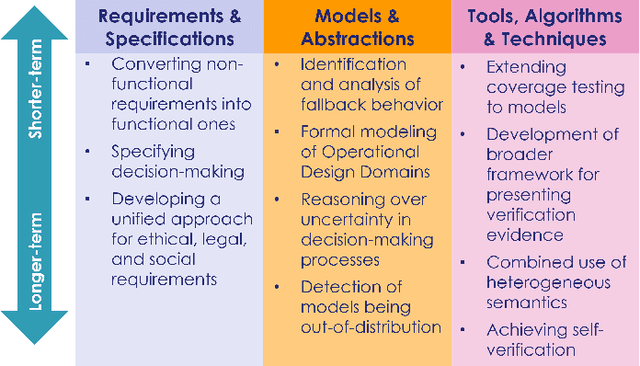

Abstract:Autonomous systems use independent decision-making with only limited human intervention to accomplish goals in complex and unpredictable environments. As the autonomy technologies that underpin them continue to advance, these systems will find their way into an increasing number of applications in an ever wider range of settings. If we are to deploy them to perform safety-critical or mission-critical roles, it is imperative that we have justified confidence in their safe and correct operation. Verification is the process by which such confidence is established. However, autonomous systems pose challenges to existing verification practices. This paper highlights viewpoints of the Roadmap Working Group of the IEEE Robotics and Automation Society Technical Committee for Verification of Autonomous Systems, identifying these grand challenges, and providing a vision for future research efforts that will be needed to address them.

Maximally Permissive Reward Machines

Aug 15, 2024

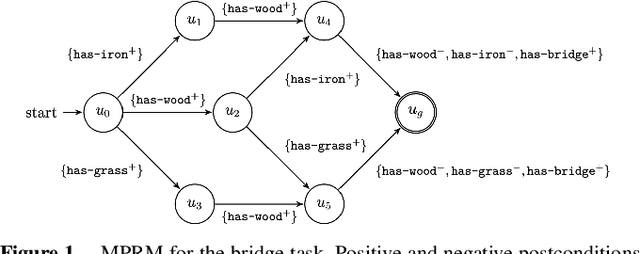

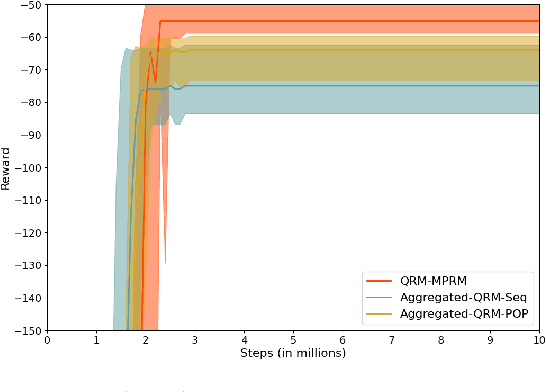

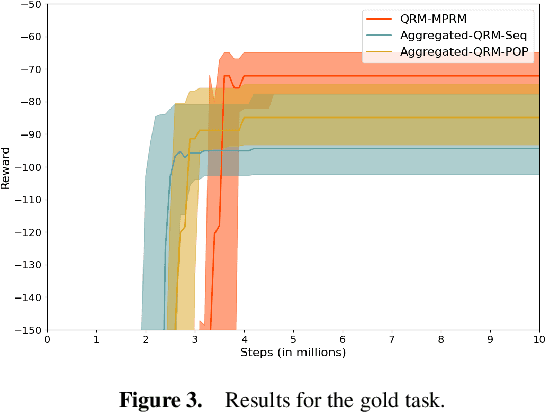

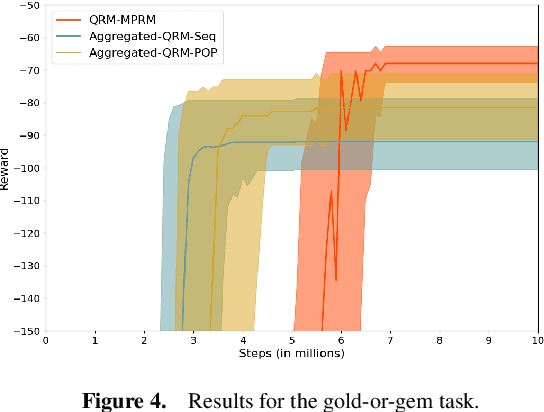

Abstract:Reward machines allow the definition of rewards for temporally extended tasks and behaviors. Specifying "informative" reward machines can be challenging. One way to address this is to generate reward machines from a high-level abstract description of the learning environment, using techniques such as AI planning. However, previous planning-based approaches generate a reward machine based on a single (sequential or partial-order) plan, and do not allow maximum flexibility to the learning agent. In this paper we propose a new approach to synthesising reward machines which is based on the set of partial order plans for a goal. We prove that learning using such "maximally permissive" reward machines results in higher rewards than learning using RMs based on a single plan. We present experimental results which support our theoretical claims by showing that our approach obtains higher rewards than the single-plan approach in practice.

The Complexity of Data-Driven Norm Synthesis and Revision

Dec 05, 2021

Abstract:Norms have been widely proposed as a way of coordinating and controlling the activities of agents in a multi-agent system (MAS). A norm specifies the behaviour an agent should follow in order to achieve the objective of the MAS. However, designing norms to achieve a particular system objective can be difficult, particularly when there is no direct link between the language in which the system objective is stated and the language in which the norms can be expressed. In this paper, we consider the problem of synthesising a norm from traces of agent behaviour, where each trace is labelled with whether the behaviour satisfies the system objective. We show that the norm synthesis problem is NP-complete.

Causality, Responsibility and Blame in Team Plans

May 20, 2020

Abstract:Many objectives can be achieved (or may be achieved more effectively) only by a group of agents executing a team plan. If a team plan fails, it is often of interest to determine what caused the failure, the degree of responsibility of each agent for the failure, and the degree of blame attached to each agent. We show how team plans can be represented in terms of structural equations, and then apply the definitions of causality introduced by Halpern [2015] and degree of responsibility and blame introduced by Chockler and Halpern [2004] to determine the agent(s) who caused the failure and what their degree of responsibility/blame is. We also prove new results on the complexity of computing causality and degree of responsibility and blame, showing that they can be determined in polynomial time for many team plans of interest.

Situation Calculus for Synthesis of Manufacturing Controllers

Jul 12, 2018

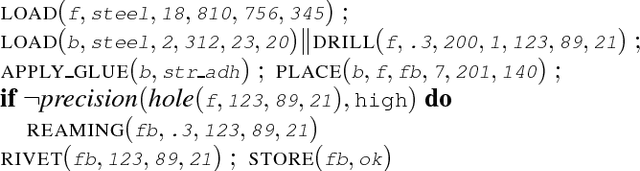

Abstract:Manufacturing is transitioning from a mass production model to a manufacturing as a service model in which manufacturing facilities 'bid' to produce products. To decide whether to bid for a complex, previously unseen product, a manufacturing facility must be able to synthesize, 'on the fly', a process plan controller that delegates abstract manufacturing tasks in the supplied process recipe to the appropriate manufacturing resources, e.g., CNC machines, robots etc. Previous work in applying AI behaviour composition to synthesize process plan controllers has considered only finite state ad-hoc representations. Here, we study the problem in the relational setting of the Situation Calculus. By taking advantage of recent work on abstraction in the Situation Calculus, process recipes and available resources are represented by ConGolog programs over, respectively, an abstract and a concrete action theory. This allows us to capture the problem in a formal, general framework, and show decidability for the case of bounded action theories. We also provide techniques for actually synthesizing the controller.

Expressibility of norms in temporal logic

Aug 24, 2016

Abstract:In this short note we address the issue of expressing norms (such as obligations and prohibitions) in temporal logic. In particular, we address the argument from [Governatori 2015] that norms cannot be expressed in Linear Time Temporal Logic (LTL).
